What Is an Automotive Machine Vision System?

An automotive machine vision system uses cameras, sensors, and software to help vehicles interpret their surroundings. It acts as the "eyes" of the car, identifying objects, lanes, and pedestrians to improve decision-making while driving. This technology plays a crucial role in modern vehicles by reducing accidents and enhancing efficiency. For example, predictive analytics systems powered by machine vision could prevent up to 25% of vehicle accidents, according to the IEEE Transportation Safety Committee. Tesla's Autopilot system has reportedly shown a 40% reduction in accident rates when engaged, which points to the real-world benefits of this innovation.

Key Takeaways

- Automotive machine vision systems make cars safer by spotting dangers. Collision avoidance systems built on them can cut accidents by 40-50%.

- These systems must work well with car parts like brakes. This helps them share information quickly for better performance.

- Smart programs use data from cameras and sensors to decide fast. This makes driving smoother in different situations.

- Machine vision also checks car parts during production. It ensures parts are high quality and reduces waste.

- Even though they cost a lot and struggle in bad weather, these systems are important for safer and better cars.

How an Automotive Machine Vision System Works

The role of cameras and sensors

Cameras and sensors form the backbone of any automotive machine vision system. These devices capture and interpret data from the vehicle's surroundings, enabling the system to "see" and understand its environment. Cameras provide high-resolution images, which are essential for detecting objects, lane markings, and traffic signs. Sensors, such as RADAR, LIDAR, and ultrasonic devices, complement cameras by measuring distances and detecting obstacles, even in challenging conditions.

Each sensor type has unique strengths and limitations. For example, cameras excel at capturing detailed visuals but struggle in low light or adverse weather. RADAR performs well in poor visibility, and LIDAR works day and night with only moderate sensitivity to weather, but both cost more than cameras. The table below compares the sensors used in machine vision systems:

| Sensor Type | Cost | Size | Speed Detection | Sensitivity to Color | Robust to Weather | Robust to Day and Night | Resolution | Range |
|---|---|---|---|---|---|---|---|---|
| Ultrasonic | Low | Small | Low | No | High | High | Low | Short |
| Camera | Low | Medium | Low | High | Low | Low | High | Medium-long |
| RADAR | Medium | Small-Medium | High | No | High | High | Medium | Short, medium, long |
| LIDAR | High | Medium-Large | Medium | No | Medium | High | High | Long |

By combining these technologies, the system achieves a comprehensive understanding of its surroundings, ensuring safer and more efficient driving.
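To make the idea of combining sensors more concrete, here is a minimal sketch that fuses several distance estimates for the same obstacle using inverse-variance weighting, a common textbook approach. The `RangeReading` structure, the sensor names, and all noise figures are illustrative assumptions, not values from any production system.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One distance estimate to the same obstacle (hypothetical structure)."""
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed 1-sigma measurement noise


def fuse_ranges(readings):
    """Combine readings using inverse-variance weighting.

    Sensors with lower noise get proportionally more weight.
    Returns (fused_distance_m, fused_std_dev_m).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, (1.0 / total) ** 0.5


# Illustrative values only: the camera is assumed noisier at range than RADAR or LIDAR.
readings = [
    RangeReading("camera", 42.0, 2.0),
    RangeReading("radar", 40.5, 0.5),
    RangeReading("lidar", 40.2, 0.2),
]
fused_distance, fused_std = fuse_ranges(readings)
print(f"fused distance: {fused_distance:.2f} m (+/- {fused_std:.2f} m)")
```

With these assumed numbers, the fused estimate sits close to the low-noise LIDAR reading, and its uncertainty is smaller than that of any single sensor. Real systems typically use more elaborate filters, but the weighting principle is similar.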

Data processing and decision-making

Once the cameras and sensors collect data, the system processes it in real time. This step involves analyzing the input to identify objects, predict movements, and make decisions. Real-time processing allows the system to respond instantly to changes, such as a pedestrian crossing the road or a sudden lane shift.

Machine vision systems rely on advanced algorithms to enhance decision-making accuracy. These algorithms interpret the data, classify objects, and determine the best course of action. This brings several benefits:

- Real-time processing ensures immediate insights and quick adjustments.

- Enhanced accuracy improves measurements and reduces errors.

- On the production line, automated analysis eliminates delays caused by manual inspections.

This seamless data processing enables the system to act swiftly and precisely, reducing the likelihood of accidents and improving overall driving efficiency.

Integration with vehicle systems

For an automotive machine vision system to function effectively, it must integrate with the vehicle's existing systems. This integration allows the system to communicate with components like braking, steering, and acceleration. For example, when the system detects an obstacle, it can send a signal to the braking system to slow down or stop the vehicle.
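The obstacle-to-brake handoff described above can be sketched with a simple time-to-collision (TTC) rule: divide the distance to the obstacle by the closing speed and compare the result with thresholds. The function names, the command strings, and the 2.5 s and 1.2 s thresholds are assumptions chosen for illustration, not values from a real braking controller.

```python
def time_to_collision(distance_m, closing_speed_mps):
    """Seconds until impact if nothing changes; infinity when the gap is not closing."""
    if closing_speed_mps <= 0:
        return float("inf")
    return distance_m / closing_speed_mps


def brake_command(distance_m, closing_speed_mps, warn_s=2.5, brake_s=1.2):
    """Map a detection to an action. Thresholds are illustrative assumptions."""
    ttc = time_to_collision(distance_m, closing_speed_mps)
    if ttc <= brake_s:
        return "BRAKE"
    if ttc <= warn_s:
        return "WARN"
    return "NONE"


print(brake_command(30.0, 10.0))  # TTC = 3.0 s
print(brake_command(20.0, 10.0))  # TTC = 2.0 s
print(brake_command(10.0, 10.0))  # TTC = 1.0 s
```

In a real vehicle, the resulting command would travel over the vehicle's internal network to the braking system rather than being printed.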

Modern vehicles use a centralized control unit to manage this integration. This unit ensures that all systems work together harmoniously. By doing so, the vehicle can execute complex maneuvers, such as adaptive cruise control or automated parking, with ease.

Tip: Integration is key to unlocking the full potential of machine vision systems. Without it, the system's capabilities would remain limited.

As technology advances, this integration will become even more seamless, paving the way for fully autonomous vehicles.

Components of an Automotive Machine Vision System

Cameras and imaging devices

Cameras are the cornerstone of automotive machine vision inspection systems. They capture high-resolution images that allow the system to detect objects, lane markings, and traffic signs. To achieve this, cameras rely on key performance indicators like sharpness, dynamic range, and sensitivity. These factors ensure the clarity and accuracy of the captured images.

| Key Performance Indicator | Description |
|---|---|
| Sharpness | Clarity of the image |
| Dynamic Range | Range of light intensities |
| Sensitivity | Response to light levels |
| Uniformity | Consistency of color and brightness |

Effective optics and lighting play a crucial role in imaging. Specialty optics, such as telecentric lenses, enhance image contrast and feature extraction. Consistent illumination ensures that features remain visible, even when parts or conditions vary. This is especially important for surface inspection tasks, where detecting blemishes or imperfections is critical.

Sensors and their applications

Sensors complement cameras by providing additional data about the environment. For example, RADAR measures distances and detects objects in poor visibility, while LIDAR creates detailed 3D maps of the surroundings. Ultrasonic sensors are ideal for short-range detection, such as during parking. These sensors enable dimensional inspection by measuring the size and shape of objects with high precision.

In the manufacturing industry, sensors are vital for quality control. Real-time defect detection ensures that products meet strict standards, reducing waste and improving efficiency. This capability is particularly useful for surface inspection, where even minor flaws can impact product quality.

Software and algorithms

Software and algorithms are the brains behind machine vision inspection systems. They process the data collected by cameras and sensors, transforming it into actionable insights. Image processing algorithms identify objects, classify them, and predict their movements. For example, edge detection algorithms highlight lane markings, while object recognition software identifies pedestrians or vehicles.
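As a rough illustration of how edge detection can highlight a lane marking, the sketch below applies a horizontal Sobel filter to a tiny synthetic grayscale image in plain Python. The image values, the threshold of 200, and the function names are assumptions made for the example. Production systems would normally rely on an optimized image-processing library.

```python
def sobel_x(image):
    """Horizontal Sobel gradient of a 2D grayscale image (list of rows).

    Border pixels are left at 0 for simplicity.
    """
    kernel = [[-1, 0, 1],
              [-2, 0, 2],
              [-1, 0, 1]]
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            out[y][x] = sum(
                kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
                for ky in range(3)
                for kx in range(3)
            )
    return out


def edge_mask(image, threshold=200):
    """Mark pixels whose absolute horizontal gradient exceeds the threshold."""
    grad = sobel_x(image)
    return [[1 if abs(g) > threshold else 0 for g in row] for row in grad]


# Synthetic 5x7 road patch: dark asphalt (20) with a bright lane stripe (220) in columns 3-4.
road = [[220 if x in (3, 4) else 20 for x in range(7)] for _ in range(5)]
for row in edge_mask(road):
    print(row)
```

The mask lights up on both sides of the bright stripe, where intensity changes sharply, and stays at zero over the uniform asphalt. A lane detector would then fit lines to these edge pixels.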

Scalable and efficient processing ensures real-time analysis, which is crucial for safety. Reliable communication between components allows the system to respond quickly to changes in the environment. Power-efficient algorithms also reduce energy consumption, making the system more sustainable.

In automotive applications, software plays a key role in quality control. It enables dimensional inspection and surface inspection by analyzing images for defects or inconsistencies. This ensures that inspection systems maintain high standards in both production and operation.

Applications of Automotive Machine Vision Systems

Advanced Driver Assistance Systems (ADAS)

Advanced Driver Assistance Systems (ADAS) rely heavily on automotive machine vision systems to enhance driving safety and convenience. These systems use cameras and sensors to monitor the environment, providing features like collision warnings, lane departure alerts, and adaptive cruise control. For example, when a vehicle approaches another car too quickly, ADAS can automatically apply the brakes to prevent an accident.

ADAS systems also excel in real-time inspection of the road, ensuring that drivers receive immediate feedback about potential hazards. By combining real-world data with synthetic data, these systems achieve higher accuracy and reliability. The table below highlights performance metrics comparing two ADAS systems:

| Metric | System-1 (Real Data) | System-2 (Real + Synthetic Data) |
|---|---|---|
| Accuracy | 0.57 | 0.60 |
| Precision | 77.46% | 82.56% |
| Recall | 58.06% | 61.71% |
| Mean Average Precision | 64.50% | 70.37% |
| F1 Score | 0.662 | 0.705 |

These metrics demonstrate how integrating synthetic data improves the system's ability to detect and respond to road conditions effectively.
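For readers who want to see how these metrics relate, the F1 score is the harmonic mean of precision and recall. The short sketch below recomputes it from the precision and recall values in the table above. The function name is our own, and the source does not say how its F1 figures were averaged.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall taken from the table above, converted to fractions.
systems = {
    "System-1 (real data)": (0.7746, 0.5806),
    "System-2 (real + synthetic data)": (0.8256, 0.6171),
}
for name, (p, r) in systems.items():
    print(f"{name}: F1 = {f1_score(p, r):.3f}")
```

The recomputed values land within a few thousandths of the reported F1 scores. The small gap may come from rounding or from per-class averaging in the original evaluation.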

Autonomous driving technologies

Autonomous driving technologies represent the pinnacle of automotive machine vision systems. These systems enable vehicles to navigate without human intervention by interpreting their surroundings and making decisions in real time. Cameras, sensors, and algorithms work together to detect obstacles, predict movements, and adjust the vehicle's path accordingly.

Reliability is a critical factor in autonomous driving. Various methodologies ensure that these systems perform consistently under diverse conditions:

- Stochastic Analysis evaluates reliability in unpredictable situations, accounting for sensor noise and environmental changes.

- Monte Carlo Simulations quantify the impact of random fluctuations on system performance.

- Deep Learning Integration enhances real-time decision-making and dynamic environment adjustments.
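To show what a Monte Carlo reliability check might look like in practice, the sketch below simulates a noisy range sensor and estimates how often a simple braking rule fails to trigger. The Gaussian noise model, the 10 m braking threshold, and the function name are assumptions made for illustration only.

```python
import random


def missed_brake_rate(true_distance_m, noise_std_m, brake_below_m, trials=10_000, seed=0):
    """Estimate how often noisy range readings fail to trigger braking.

    Each trial draws one Gaussian-noised reading of the true distance and
    checks it against the braking threshold.
    """
    rng = random.Random(seed)
    misses = 0
    for _ in range(trials):
        measured = rng.gauss(true_distance_m, noise_std_m)
        if measured >= brake_below_m:
            misses += 1
    return misses / trials


# Obstacle truly at 9.0 m, braking threshold 10.0 m; compare two assumed noise levels.
for noise in (0.5, 2.0):
    rate = missed_brake_rate(9.0, noise, 10.0)
    print(f"noise std {noise} m -> missed-brake rate {rate:.3f}")
```

Running many randomized trials like this turns a vague concern ("sensor noise might matter") into a number that can be compared across sensor choices or threshold settings.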

Despite these advancements, autonomous driving systems still face challenges in matching human driving capabilities. Automated software testing frameworks play a vital role in improving reliability, ensuring that these systems meet quality standards and perform consistently.

Note: Optimized variants of the YOLOv5s algorithm have been reported to improve metrics like precision and recall. In real-world testing, such variants have reliably detected target positions and depth information, which supports autonomous driving capabilities.

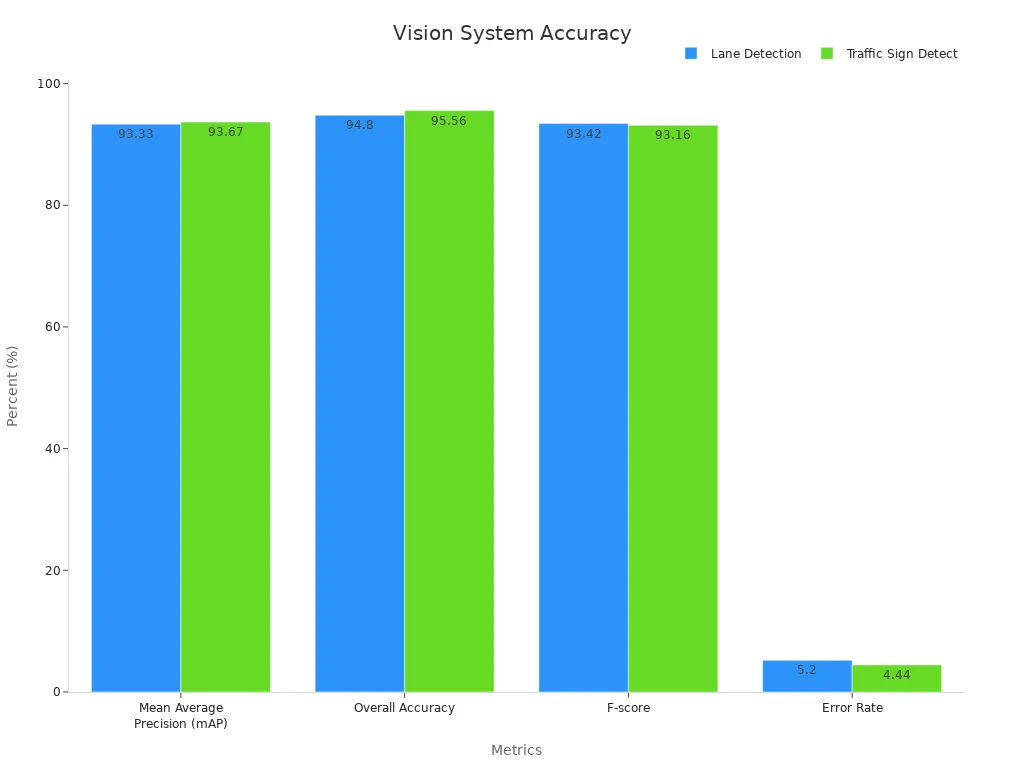

Traffic sign and lane detection

Traffic sign and lane detection are essential applications of automotive machine vision systems. These features help vehicles identify road signs and lane markings, ensuring safe and efficient navigation. Cameras capture high-resolution images, while algorithms process the data to recognize signs and lanes with remarkable accuracy.

The table below showcases the performance metrics for traffic sign recognition and lane detection:

| Metric | Lane Detection | Traffic Sign Detection |
|---|---|---|
| Mean Average Precision (mAP) | 93.33% | 93.67% |
| Overall Accuracy | 94.80% | 95.56% |
| F-score | 93.42% | 93.16% |
| Error Rate | 5.20% | 4.44% |

These high accuracy rates highlight the effectiveness of machine vision systems in recognizing critical road elements. By reducing error rates, these systems contribute to safer driving experiences and pave the way for fully autonomous vehicles.

Pedestrian and obstacle recognition

Pedestrian and obstacle recognition is one of the most critical applications of automotive machine vision systems. These systems help vehicles detect and respond to objects, people, and other potential hazards in their path. By using cameras and sensors, the system continuously scans the environment to identify obstacles like parked cars, cyclists, or pedestrians crossing the road.

You might wonder how this works so effectively. The system uses advanced image processing algorithms to classify objects based on their size, shape, and movement. For example, when a pedestrian steps onto a crosswalk, the system recognizes the person and predicts their movement. It then alerts the driver or automatically applies the brakes to prevent a collision.
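The movement prediction step can be sketched with a constant-velocity model: assume the pedestrian keeps walking at the current speed and check whether the predicted position enters the vehicle's lane. The coordinate convention, the 1.5 m lane half-width, and the 3-second horizon are assumptions for illustration. For simplicity, the sketch also ignores the vehicle's own motion.

```python
def predict_position(position, velocity, dt):
    """Constant-velocity prediction: position after dt seconds."""
    return (position[0] + velocity[0] * dt, position[1] + velocity[1] * dt)


def enters_path(position, velocity, lane_half_width_m=1.5, horizon_s=3.0, step_s=0.1):
    """Return the first time (s) the predicted position is inside the lane ahead, or None.

    Coordinates are vehicle-centred: x is lateral offset, y is distance ahead.
    """
    steps = int(round(horizon_s / step_s))
    for i in range(steps + 1):
        t = i * step_s
        x, y = predict_position(position, velocity, t)
        if abs(x) <= lane_half_width_m and y >= 0:
            return round(t, 1)
    return None


# Pedestrian 4 m to the right and 20 m ahead, walking toward the lane at 1.4 m/s.
print(enters_path((4.0, 20.0), (-1.4, 0.0)))
# Same pedestrian walking away from the lane.
print(enters_path((4.0, 20.0), (1.4, 0.0)))
```

A system using a rule like this could warn the driver before the pedestrian actually reaches the lane, which is the point of predicting movement rather than only detecting position.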

Machine vision systems can also handle low-visibility conditions. Infrared cameras and RADAR sensors detect obstacles in fog, rain, or at night, when standard cameras struggle. This capability helps keep you safe across a wider range of weather and lighting, although performance still degrades in extreme conditions.

Tip: Always keep your vehicle's sensors clean and well-maintained. Dirt or debris can reduce the accuracy of obstacle detection systems.

By improving obstacle recognition, these systems significantly reduce the risk of accidents. They also enhance your confidence as a driver, knowing that the vehicle is actively monitoring its surroundings.

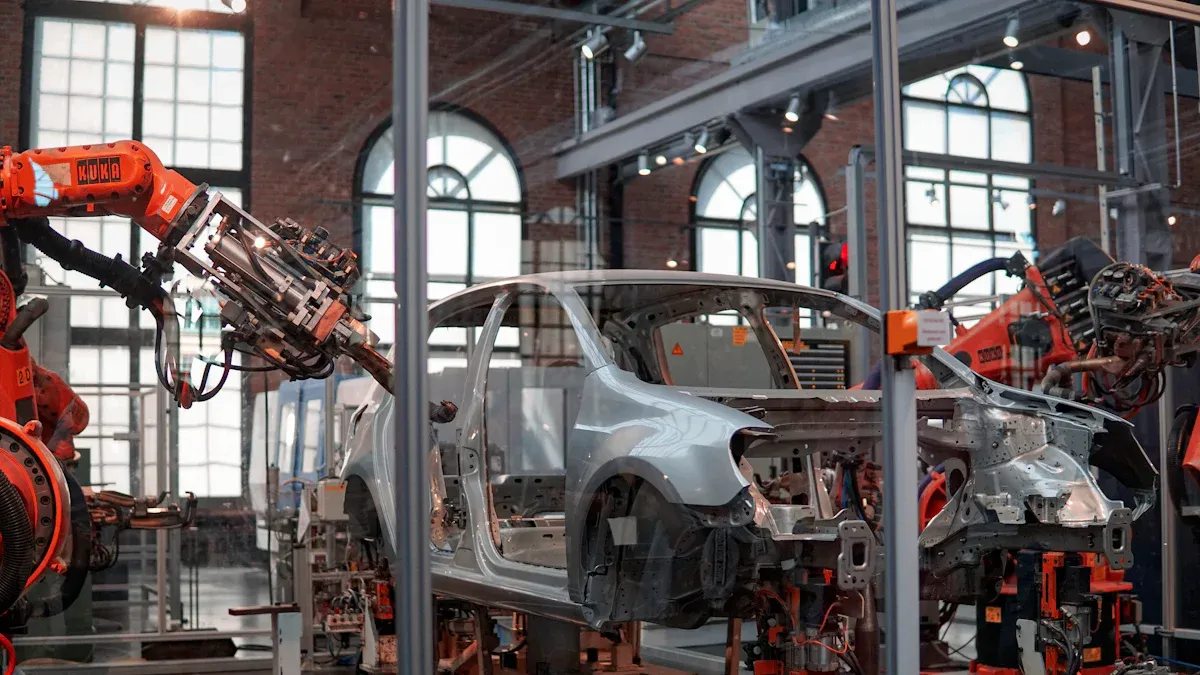

Automotive parts inspection in manufacturing

Automotive parts inspection is another vital application of machine vision technology. In manufacturing, ensuring the quality of every component is essential. Even a minor defect in a part can lead to significant safety risks or costly recalls. Machine vision systems address this challenge by automating the inspection process with remarkable precision.

These systems use high-resolution cameras and sensors to examine parts for defects such as cracks, scratches, or misalignments. Advanced algorithms analyze the captured images to detect flaws that might be invisible to the human eye. For instance, the system can measure the dimensions of a part to ensure it meets exact specifications.
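A dimensional check of this kind boils down to comparing each measured feature against its nominal value and tolerance. The sketch below shows the idea. The feature names, nominal dimensions, and tolerances are hypothetical values invented for the example.

```python
def within_tolerance(measured_mm, nominal_mm, tol_mm):
    """True when the measurement is within +/- tol_mm of the nominal value."""
    return abs(measured_mm - nominal_mm) <= tol_mm


def inspect_part(measurements, spec):
    """Return the names of features that are missing or out of tolerance.

    spec maps feature name -> (nominal_mm, tol_mm).
    """
    failures = []
    for feature, (nominal, tol) in spec.items():
        measured = measurements.get(feature)
        if measured is None or not within_tolerance(measured, nominal, tol):
            failures.append(feature)
    return failures


# Hypothetical spec and vision-derived measurements for a single part.
spec = {"thickness_mm": (12.0, 0.05), "width_mm": (50.0, 0.10)}
measurements = {"thickness_mm": 12.03, "width_mm": 50.20}

failed = inspect_part(measurements, spec)
print("REJECT" if failed else "PASS", failed)
```

Here the thickness passes but the width is 0.20 mm off against a 0.10 mm tolerance, so the part would be rejected before moving down the line.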

You might be surprised to learn how accurate these systems are. Reported defect detection rates in the automotive industry exceed 99%. This high level of accuracy minimizes missed defects and helps ensure that only parts meeting specification move forward in the production line.

Key benefits of machine vision in automotive parts inspection include:

- Faster inspection times compared to manual methods.

- Consistent quality control across all production batches.

- Reduced waste by identifying defects early in the process.

Note: Manufacturers often use machine vision systems for surface inspection, where detecting even the smallest imperfections is crucial.

By automating quality control, machine vision systems not only improve safety but also enhance efficiency in manufacturing. This technology ensures that every part meets the highest standards before it reaches your vehicle.

Benefits of Automotive Machine Vision Systems

Enhanced safety and accident prevention

Automotive machine vision systems significantly improve safety by reducing accidents and enhancing response times. These systems detect potential hazards, such as pedestrians or vehicles, and take immediate action to prevent collisions. For example, machine learning-based collision avoidance systems can reduce accidents by 40-50%, while driver monitoring systems achieve reductions of up to 70%. Lane-keeping assistance systems also contribute by lowering accident rates by 30%.

| Safety System Type | Accident Reduction Percentage |
|---|---|
| ML-based collision avoidance systems | 40-50% |
| Driver monitoring systems | Up to 70% |
| Lane-keeping assistance systems | 30% |

Machine vision systems also enhance alert accuracy. ML-powered systems reduce false alerts by 60% and increase true positives by 35%, ensuring you receive only relevant notifications. This precision minimizes distractions and allows you to focus on driving safely.

| Safety System Type | Impact on Alerts |
|---|---|
| ML-powered systems | 60% reduction in false alerts, 35% increase in true positives |
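To get a feel for what these alert figures mean, the short calculation below applies them to a made-up baseline. The starting counts (100 true alerts and 100 false alerts) are purely hypothetical, and the sketch assumes the percentages apply directly to alert counts.

```python
def alert_precision(true_positives, false_alerts):
    """Share of alerts that correspond to a real hazard."""
    total = true_positives + false_alerts
    return true_positives / total if total else 0.0


# Hypothetical baseline: 100 real hazards flagged and 100 false alerts per period.
base_tp, base_fp = 100, 100

# Apply the reported changes: 35% more true positives, 60% fewer false alerts.
new_tp = base_tp * 1.35
new_fp = base_fp * (1 - 0.60)

print(f"baseline alert precision: {alert_precision(base_tp, base_fp):.2f}")
print(f"improved alert precision: {alert_precision(new_tp, new_fp):.2f}")
```

Under these assumed counts, the share of alerts that point to a real hazard rises from 0.50 to about 0.77, which is why fewer false alerts translate into fewer distractions.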

Tip: Regularly calibrate your vehicle's sensors to maintain their accuracy and reliability.

Improved driving efficiency and convenience

Machine vision systems optimize driving efficiency by analyzing traffic patterns and providing real-time data on vehicle speeds and volumes. This information helps reduce congestion and travel times. Automated traffic-incident-detection systems play a key role by improving response times and enabling better traffic management.

For instance, these systems can identify incidents quickly, allowing authorities to address them faster. This reduces delays and ensures smoother traffic flow. By improving operational efficiency, machine vision systems make your daily commute more convenient and less stressful.

Additionally, automated inspection capabilities in manufacturing ensure that vehicles are built with precision. This enhanced quality control results in reliable vehicles that perform efficiently on the road.

Support for autonomous vehicle development

Machine vision technology is essential for the development of autonomous vehicles. It enables these vehicles to navigate safely by detecting obstacles, recognizing lanes, and predicting movements. Recent advancements have improved the reliability of these systems, even in challenging conditions like low light or adverse weather.

A study published in Applied Sciences highlighted significant improvements in pedestrian detection and lane-keeping algorithms. These advancements ensure that autonomous vehicles operate safely and efficiently. As a result, you can expect future vehicles to offer greater autonomy and reliability.

Machine vision also supports automated inspection during vehicle production. This ensures that every component meets strict standards, contributing to the overall safety and performance of autonomous vehicles.

Increased precision in automotive manufacturing

Automotive machine vision systems have revolutionized manufacturing by delivering unmatched precision. These systems ensure that every component meets exact specifications, reducing errors and improving overall quality. You benefit from vehicles that are safer, more reliable, and built to last.

Machine vision eliminates the inconsistencies of manual inspections. High-resolution cameras and advanced algorithms detect even the smallest defects, such as surface scratches or dimensional inaccuracies. This level of precision ensures that only flawless parts make it into the final product. For example, a machine vision system can measure the thickness of a brake pad with micrometer-level accuracy, something that would be challenging for the human eye.

Automating inspections also increases efficiency. Unlike manual methods, machine vision systems operate continuously without fatigue. This allows manufacturers to inspect more parts in less time, boosting productivity. You might be surprised to learn how much this improves the manufacturing process. The table below highlights key benefits:

| Benefit | Description |
|---|---|
| Improved Inspection Accuracy | Machine vision eliminates human error, detecting defects with greater precision than manual inspection. |
| Increased Efficiency | Automating inspections reduces time and increases productivity, allowing for continuous operation. |

Tip: Consistent maintenance of machine vision systems ensures they perform at peak efficiency, further enhancing precision.

By integrating machine vision, manufacturers achieve higher standards of quality control. This technology not only reduces waste but also ensures that every vehicle part meets strict safety and performance requirements. As a result, you can trust that your car is built with the highest level of care and precision.

Challenges and Limitations

Technical challenges (e.g., weather conditions, lighting)

Weather and lighting conditions pose significant challenges for automotive machine vision systems. Fog, rain, and low light can obscure objects, making it harder for the system to detect them accurately. For example, fog reduces visibility and blurs images, which affects the system's ability to identify pedestrians or vehicles. Algorithms like YOLO struggle to perform well in such conditions, often misidentifying key targets.

| Evidence Description | Impact on Machine Vision Operations |
|---|---|
| Fog obscures or blurs target features, compromising YOLO’s ability to accurately detect objects. | Performance remains suboptimal in foggy conditions, necessitating further advancements. |
| Fog significantly reduces visibility and degrades image quality, leading to misidentification of key targets. | Conventional object detection algorithms struggle to capture target features accurately. |
| Traditional image defogging techniques require substantial computational resources and often underperform in dynamic environments. | These methods are not always effective in improving detection accuracy under adverse weather conditions. |

Lighting also plays a critical role. Poor lighting at night or during storms can degrade image quality, making it difficult for cameras to capture clear visuals. While infrared sensors and advanced algorithms help mitigate these issues, they still require further development to ensure consistent performance in all conditions.

High costs of implementation

The cost of implementing automotive machine vision systems remains a major barrier. Integrating cameras, sensors, and software into vehicles requires significant investment. For example, 3D machine vision technology is becoming more common across industries, but its adoption in automotive applications involves high expenses. These costs include hardware, software development, and system integration.

Global supply chain disruptions have further increased costs. U.S. tariff policies have forced companies to rethink sourcing strategies and redesign products, which complicates the integration process. While these challenges drive innovation, they also make it harder for manufacturers to adopt machine vision systems at scale. Despite these hurdles, the growing market for machine vision technology offers opportunities for efficiency improvements and long-term cost savings.

Ethical and regulatory concerns

Ethical and regulatory issues also limit the widespread adoption of machine vision systems. One key concern is the need for human oversight in AI systems. Designers must integrate ethical principles from the start to ensure accountability and transparency. Without these safeguards, the technology could lead to unintended consequences.

Several case studies highlight these concerns:

- The Tesla Autopilot case revealed the risks of biased training data. In one incident, the system failed to recognize a truck, leading to a fatal accident.

- Another study emphasized the importance of human oversight, showing that AI systems must remain accountable to avoid misuse or errors.

Regulations also vary across regions, creating challenges for manufacturers. Meeting these diverse requirements adds complexity to the development process. By addressing these ethical and regulatory concerns early, manufacturers can help ensure that machine vision systems are both safe and reliable.

Integration with existing vehicle systems

Automotive machine vision systems work best when they integrate seamlessly with your vehicle's existing systems. This integration allows the vision system to communicate with critical components like braking, steering, and acceleration. When the system detects a hazard, it sends signals to these components to take immediate action. For example, if a pedestrian steps into your path, the system can activate the brakes to prevent a collision.

Modern vehicles use centralized control units to manage this integration. These units act as the brain of your car, ensuring all systems work together. They coordinate the vision system with features like adaptive cruise control, lane-keeping assistance, and automated parking. This coordination enables your vehicle to perform complex tasks with precision.

Tip: Regular software updates keep your vehicle's control unit compatible with the latest machine vision technologies.

Integration also improves the efficiency of your vehicle. By sharing data between systems, the vision system can optimize fuel consumption and reduce wear on components. For instance, it can adjust acceleration based on traffic patterns, helping you save fuel during your daily commute.

| Integration Benefits | Description |
|---|---|
| Enhanced Safety | Systems work together to prevent accidents and respond to hazards quickly. |
| Improved Efficiency | Data sharing reduces fuel consumption and wear on vehicle components. |
| Advanced Features | Enables complex tasks like automated parking and adaptive cruise control. |

You might wonder how this integration affects older vehicles. Retrofitting machine vision systems into older models is possible, but it requires specialized hardware and software. Manufacturers often design these systems to be compatible with a wide range of vehicles, ensuring you can enjoy their benefits regardless of your car's age.

By integrating machine vision systems with existing vehicle components, you gain a safer, smarter, and more efficient driving experience.

An automotive machine vision system serves as the foundation for safer and smarter vehicles. It helps cars "see" and interpret their surroundings, ensuring better decision-making on the road. You benefit from improved safety, greater driving efficiency, and the promise of fully autonomous vehicles in the future. This technology continues to evolve, offering exciting possibilities like enhanced obstacle detection and smarter navigation systems. As advancements progress, you can expect even more innovative features that redefine how vehicles operate.

FAQ

What is the main purpose of an automotive machine vision system?

The system helps vehicles "see" and interpret their surroundings. It identifies objects, lanes, and pedestrians to improve safety and efficiency. You benefit from features like collision avoidance, lane-keeping assistance, and adaptive cruise control.

Can machine vision systems work in bad weather?

Yes, but performance may vary. Sensors like RADAR and LIDAR handle fog and rain better than cameras. Infrared cameras also improve visibility at night. However, extreme conditions can still challenge these systems.

Are machine vision systems only for autonomous vehicles?

No, they are also used in Advanced Driver Assistance Systems (ADAS). These systems enhance safety and convenience in regular vehicles by providing features like lane departure warnings, traffic sign recognition, and pedestrian detection.

How do machine vision systems improve manufacturing?

They automate quality control by inspecting parts for defects. High-resolution cameras and algorithms detect flaws with precision. This ensures that every component meets strict standards, reducing waste and improving production efficiency.

Are these systems expensive to implement?

Yes, the initial costs can be high due to advanced hardware and software. However, they offer long-term savings by improving safety, reducing accidents, and enhancing manufacturing efficiency. Over time, the benefits outweigh the costs.
