What is Contrastive Language-Image Pretraining in Machine Vision Systems

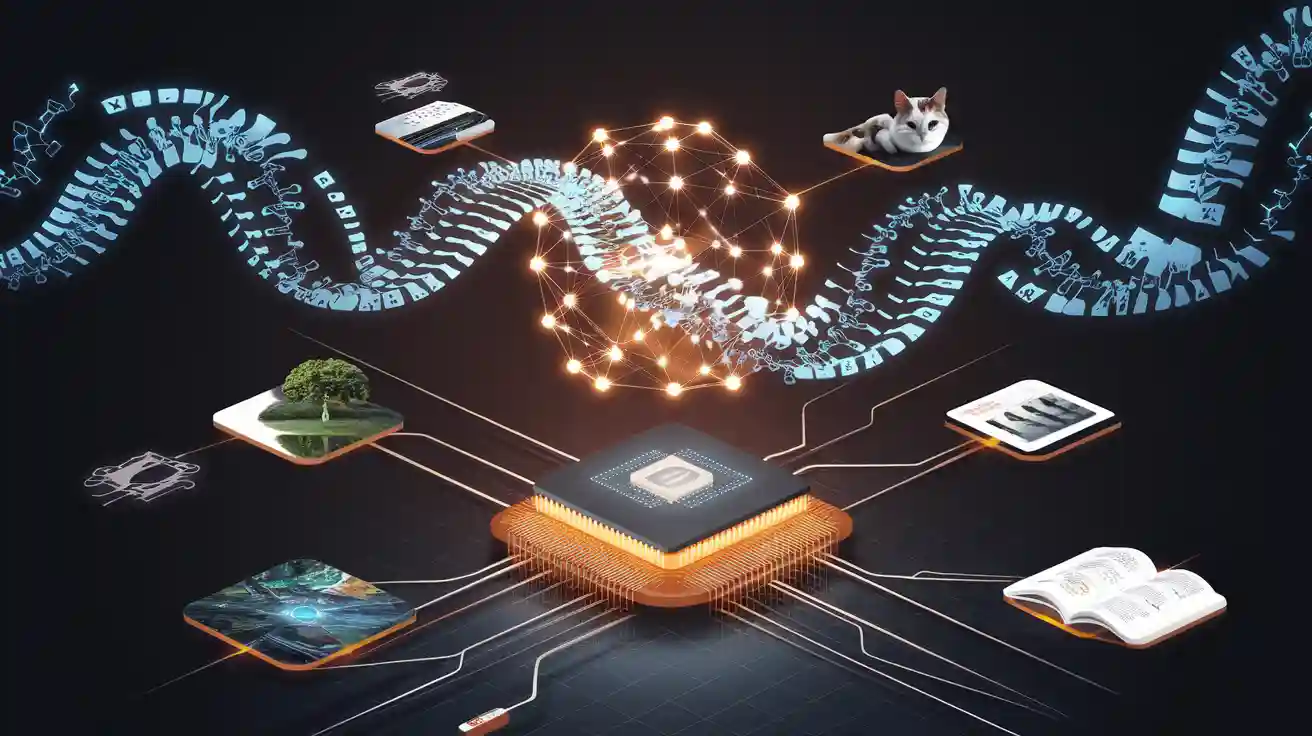

Contrastive Language-Image Pretraining (CLIP) is an approach to machine vision that trains a model to relate language and images by aligning visual and textual data. By processing a picture and its corresponding text together, the model learns a shared representation that connects the two modalities.

For example, the original CLIP model was trained on roughly 400 million image-text pairs, and later models in the same family scale to billions. The training dataset for MVC includes 10,909,109,091 examples, while VLFeedback data comprises 9,222,922,229 examples. These extensive datasets enable machines to build robust associations between language and images, significantly improving their capabilities in tasks such as object recognition and semantic retrieval.

| Modification type | CounterCurate | FineCops-Ref | MVC |
|---|---|---|---|
| Object Replacement | 26,164 | 4,171 | 7,189 |
| Attribute Replacement | 27,964 | 1,844 | 919 |
| Count Modification | 10,010 | 0 | 919 |
| Position Change | 56,711 | 1,555 | 3,041 |
| Total | 120,849 | 7,570 | 11,149 |

The Contrastive Language-Image Pretraining machine vision system is changing how machines interpret multimodal data, enabling them to recognize objects, relate images to text, and even guide image generation from descriptions. This paves the way for smarter, more adaptable machine vision systems that bridge the gap between human communication and artificial intelligence.

Key Takeaways

- CLIP helps computers link pictures and words. This improves tasks like finding objects and matching meanings.

- It uses two separate encoders to handle text and images. This keeps processing efficient and lets the model work on new tasks without special training for each one.

- Training with many picture-word pairs helps the model learn better. It can handle different tasks, like finding pictures by their meaning.

- CLIP's contrastive method learns to match picture-word pairs. This improves accuracy and reduces the need for task-specific training.

- But CLIP has problems, like biased training data and needing lots of computer power. Careful data curation and efficient training can help address these issues.

How Contrastive Language-Image Pretraining Works

Dual Encoder Architecture in CLIP

The dual encoder architecture is the backbone of CLIP. It uses two separate encoders: one for processing text and another for images. These encoders transform the input data into a shared feature space, enabling the model to compare and align the two modalities effectively. For example, when you input an image of a dog and the text "a cute dog," the encoders generate vector representations for both. These vectors are then compared to determine their similarity.
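As a rough illustration of the comparison step described above, the sketch below stands in for the two encoders with tiny linear projections into a shared two-dimensional space. Everything here is an assumption made for the example: the projection weights (`IMAGE_PROJECTION`, `TEXT_PROJECTION`), the input feature vectors, and the function names are hypothetical, and real CLIP encoders are deep networks with learned weights.

```python
import math

# Hypothetical projection weights. A real model learns these during training.
IMAGE_PROJECTION = [[1.0, 0.0, 0.5, 0.0],
                    [0.0, 1.0, 0.0, 0.5]]
TEXT_PROJECTION = [[1.0, 0.0, 0.5],
                   [0.0, 1.0, 0.5]]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def l2_normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def encode_image(features):
    """Toy image encoder: project 4-d features into the shared 2-d space."""
    return l2_normalize(matvec(IMAGE_PROJECTION, features))

def encode_text(features):
    """Toy text encoder: project 3-d features into the same 2-d space."""
    return l2_normalize(matvec(TEXT_PROJECTION, features))

def similarity(a, b):
    """Dot product of unit vectors, which equals their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))

# Made-up input features standing in for a dog photo and two captions.
dog_image = [0.9, 0.1, 0.8, 0.0]
dog_text = [1.0, 0.0, 0.2]   # stands in for "a cute dog"
car_text = [0.0, 1.0, 0.1]   # stands in for an unrelated caption

sim_dog = similarity(encode_image(dog_image), encode_text(dog_text))
sim_car = similarity(encode_image(dog_image), encode_text(car_text))
print(sim_dog > sim_car)
```

The point of the design is that the two inputs never meet until the final dot product, so image and text embeddings can be computed separately and cached.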

This architecture offers several advantages. It allows the model to process text and image data independently, making it highly efficient. Additionally, the dual encoder design supports zero-shot learning, where the model can classify images without specific training for the task. Recent advancements, such as the ECLIPSE model, have demonstrated improvements in zero-shot accuracy and inference speed, showcasing the robustness of this approach.

Training with Image-Text Pairs

Training CLIP involves using large datasets of image-text pairs. Each pair consists of an image and its corresponding textual description. The model learns to associate the two by maximizing the similarity between matching pairs and minimizing it for mismatched ones. For instance, if you provide an image of a cat and the text "a playful kitten," the model strengthens the connection between them while weakening links to unrelated descriptions.

This method relies on contrastive learning, which focuses on distinguishing between positive and negative pairs. The process begins by encoding a batch of images and their texts separately. The model then computes the similarity between every image and every text in the batch: the matching pairs are the positives, and all other combinations serve as negatives. This approach enables the model to generalize across diverse tasks, from semantic image retrieval to zero-shot classification.
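To make the batch structure concrete, here is a minimal sketch that builds the image-by-text similarity matrix for a batch of three pairs. The embeddings are made-up unit vectors, not outputs of a real encoder, and the helper names are hypothetical. Entry `[i][j]` compares image `i` with text `j`, so the diagonal holds the positive pairs and every off-diagonal entry is a negative.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def similarity_matrix(image_embeddings, text_embeddings):
    """Compare every image with every text in the batch."""
    return [[dot(image, text) for text in text_embeddings]
            for image in image_embeddings]

# Made-up, already unit-normalized embeddings; pair i is (image i, text i).
image_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
text_embeddings = [[0.96, 0.28], [0.28, 0.96], [0.6, 0.8]]

matrix = similarity_matrix(image_embeddings, text_embeddings)
size = len(matrix)

# Diagonal entries are the matching (positive) pairs.
positives = [matrix[i][i] for i in range(size)]
# Every other entry pairs an image with the wrong text (negatives).
negatives = [matrix[i][j] for i in range(size) for j in range(size) if i != j]

# For each image, the index of the most similar text in the batch.
best_text_per_image = [max(range(size), key=lambda j: row[j]) for row in matrix]
print(best_text_per_image)
```

A batch of N pairs yields N positives and N² − N negatives, which is one reason large batch sizes are attractive for this kind of training.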

Empirical studies highlight the effectiveness of this training method. For example, a study using frequency-based subsampling methods like WFPP showed significant performance improvements in both pre-training and fine-tuning stages. These results confirm the robustness of training with image-text pairs in contrastive pretraining.

Contrastive Loss Function and Its Role

The contrastive loss function plays a crucial role in aligning text and image data. It trains the model to maximize the similarity between correct pairs while minimizing it for incorrect ones. To do so, it computes the dot product between the normalized encoded vectors of each image and each text in the batch, which is their cosine similarity. The higher the score for a matching pair relative to the mismatched pairs, the stronger the alignment.
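One common way to write such a loss is a symmetric cross-entropy over the batch similarity matrix: each image should pick out its own text, and each text its own image. The sketch below is a plain-Python illustration under stated assumptions, not the reference implementation. The function names are hypothetical, the embeddings are made-up unit vectors, and the fixed `temperature=0.07` is an assumed value (in practice the temperature is usually a learned parameter).

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def log_softmax(row):
    """Numerically stable log-softmax of a list of scores."""
    peak = max(row)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in row))
    return [x - log_sum for x in row]

def contrastive_loss(image_embeddings, text_embeddings, temperature=0.07):
    """Symmetric cross-entropy; pair i is (image i, text i)."""
    size = len(image_embeddings)
    logits = [[dot(image, text) / temperature for text in text_embeddings]
              for image in image_embeddings]
    # Image-to-text direction: each row should peak on its diagonal entry.
    image_to_text = -sum(log_softmax(logits[i])[i] for i in range(size)) / size
    # Text-to-image direction: the same check applied to each column.
    columns = [list(column) for column in zip(*logits)]
    text_to_image = -sum(log_softmax(columns[i])[i] for i in range(size)) / size
    return (image_to_text + text_to_image) / 2

# Made-up unit-length embeddings for a batch of two pairs.
images = [[1.0, 0.0], [0.0, 1.0]]
texts = [[0.96, 0.28], [0.28, 0.96]]

aligned_loss = contrastive_loss(images, texts)
# Reversing the texts pairs every image with the wrong caption.
mismatched_loss = contrastive_loss(images, list(reversed(texts)))
print(aligned_loss < mismatched_loss)
```

Dividing by a small temperature sharpens the softmax, so a modest gap in cosine similarity becomes a large gap in the loss.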

This loss function enhances the model's ability to generalize, even with limited labeled data. For instance, in a study using the CIFAR-10 dataset, contrastive pretraining improved validation accuracy and reduced validation loss. These metrics indicate better feature acquisition and generalization capabilities, making the model more reliable for real-world applications.

By leveraging the contrastive loss function, CLIP achieves remarkable accuracy in tasks like zero-shot classification and semantic retrieval. This approach not only improves performance but also reduces the need for extensive task-specific training, making it a versatile tool in machine vision systems.

Applications of CLIP in Multimodal Machine Vision Systems

Zero-Shot Image Classification

One of the most groundbreaking applications of CLIP is zero-shot image classification. This approach allows you to classify images without requiring task-specific training. Instead of relying on predefined categories, CLIP uses its ability to align text and images to infer the correct label from a set of textual descriptions. For example, if you provide an image of a manatee and a list of possible labels like "manatee," "elephant," or "cat," CLIP can identify the correct label based on its training with diverse image-text pairs.
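The label-selection step can be sketched as a softmax over the similarities between one image embedding and one text embedding per candidate label. The embeddings below are invented for illustration. In a real system they would come from the image encoder and from the text encoder applied to a prompt for each label. The function names and the `temperature` value are assumptions as well.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_embedding, label_embeddings, temperature=0.07):
    """Return the best label and a probability for every candidate label."""
    labels = list(label_embeddings)
    logits = [dot(image_embedding, label_embeddings[label]) / temperature
              for label in labels]
    probabilities = dict(zip(labels, softmax(logits)))
    best_label = max(probabilities, key=probabilities.get)
    return best_label, probabilities

# Made-up unit-length text embeddings, one per candidate label.
label_embeddings = {
    "manatee": [0.8, 0.6, 0.0],
    "elephant": [0.6, 0.0, 0.8],
    "cat": [0.0, 0.6, 0.8],
}
# Made-up unit-length embedding standing in for the manatee photo.
image_embedding = [0.96, 0.28, 0.0]

predicted, probabilities = zero_shot_classify(image_embedding, label_embeddings)
print(predicted)
```

Changing the task only means changing the keys of `label_embeddings`. No weights are updated, which is what makes the approach zero-shot.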

The effectiveness of zero-shot learning has been demonstrated through extensive case studies. For instance, recognition accuracy for species like Trichechus manatus (manatee) improved from 74.41% to 93.90%, while Tapirus terrestris (tapir) saw an increase from 39.21% to 75.44%. These results highlight the significant improvements achieved by incorporating geographic distribution data into the model. The table below summarizes these findings:

| Species | Recognition Accuracy (Before) | Recognition Accuracy (After) | Improvement (percentage points) |
|---|---|---|---|
| Trichechus manatus | 74.41% | 93.90% | 19.49 |
| Tapirus terrestris | 39.21% | 75.44% | 36.23 |
| Felis catus | 62.35% | 75.44% | 13.09 |

These advancements make zero-shot image classification a powerful tool for tasks where labeled data is scarce or unavailable. By leveraging CLIP's multimodal capabilities, you can achieve high accuracy across a wide range of categories without extensive retraining.

Semantic Image Retrieval

Semantic image retrieval is another area where CLIP excels. This application involves finding images that match a specific textual query. For example, you might search for "a red sports car on a sunny day," and CLIP retrieves images that closely align with this description. Its ability to embed both text and images into a shared feature space enables precise and efficient retrieval.
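A retrieval step of this kind can be sketched as ranking a precomputed index of image embeddings by similarity to the query embedding. The file names, the embeddings, and the `retrieve` helper below are all hypothetical. In practice the index would hold image-encoder outputs and the query would come from the text encoder.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def retrieve(query_embedding, image_index, top_k=2):
    """Return the names of the top_k images most similar to the query."""
    ranked = sorted(
        image_index,
        key=lambda name: dot(query_embedding, image_index[name]),
        reverse=True,
    )
    return ranked[:top_k]

# Made-up unit-length image embeddings, computed once and stored.
image_index = {
    "red_sports_car.jpg": [0.96, 0.28],
    "blue_sedan.jpg": [0.6, 0.8],
    "beach_sunset.jpg": [0.0, 1.0],
}
# Made-up embedding standing in for the encoded text query.
query_embedding = [1.0, 0.0]

results = retrieve(query_embedding, image_index)
print(results)
```

Because images are embedded ahead of time, each new query costs one text encoding plus a similarity lookup. Large collections typically replace the full sort with an approximate nearest-neighbor index.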

Contrastive language-image models like CLIP have set new benchmarks in this domain. Unlike traditional methods that require fine-tuning on specific datasets, CLIP achieves state-of-the-art performance without domain-specific adjustments. For instance, models like SigLIP demonstrate robust visual representations for image retrieval tasks, even when applied across different datasets. The table below highlights some key performance metrics:

| Evidence Description | Performance Impact |
|---|---|
| Fine-tuning on one dataset and applying the model for retrieval on a different dataset typically leads to a significant decline in performance. | Up to -0.5 mMP@5 |
| Contrastive text-image embeddings, such as SigLIP, achieve state-of-the-art performance on several retrieval tasks without requiring domain-specific fine-tuning. | Robust visual representations for image retrieval |
| Contrastive text-image models demonstrate strong performance in pure image-to-image retrieval, challenging assumptions about their domain specificity. | Effective in fine-grained retrieval tasks |
| Text-image models and SSL embeddings can achieve state-of-the-art performance in e-Commerce with minimal fine-tuning. | Reduces computational costs while maintaining effectiveness |

By using CLIP for semantic image retrieval, you can streamline tasks like e-commerce product searches, digital asset management, and even personalized content recommendations. Its ability to generalize across domains makes it a versatile solution for various industries.

Text-to-Image Generation

CLIP also plays a pivotal role in text-to-image generation. This application involves creating images based on textual descriptions, enabling you to bring your ideas to life visually. Models like Stable Diffusion use CLIP's text encoder to convert text prompts into text embeddings, which condition the generation process. For example, if you input "a futuristic cityscape at sunset," the model generates an image that matches this description.

One of the key techniques in this process is "CLIP guidance." Here, the gradient of CLIP's image-text similarity score serves as a signal that steers the image generation toward the desired outcome. This approach has been widely adopted in generative art, where artists use CLIP to create visually rich pieces. Additionally, its integration into diffusion models has expanded its applications from creative design to scientific visualization.
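The idea of steering with a gradient can be shown on a deliberately tiny toy problem. In the sketch below, the "image" is just a vector living directly in the embedding space, and it is nudged by gradient ascent on its cosine similarity to a text embedding. This is a simplification made for the example: real CLIP guidance backpropagates through the CLIP image encoder and the generator, and the names `cosine_gradient` and `guide`, the step size, and the vectors are all hypothetical.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(dot(v, v))

def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    return dot(a, b) / (norm(a) * norm(b))

def cosine_gradient(x, target):
    """Analytic gradient of cosine(x, target) with respect to x."""
    x_norm, target_norm = norm(x), norm(target)
    similarity = cosine(x, target)
    return [t / (x_norm * target_norm) - similarity * xi / (x_norm * x_norm)
            for xi, t in zip(x, target)]

def guide(candidate, text_embedding, steps=50, step_size=0.1):
    """Gradient ascent: move the candidate toward higher similarity."""
    x = list(candidate)
    for _ in range(steps):
        gradient = cosine_gradient(x, text_embedding)
        x = [xi + step_size * gi for xi, gi in zip(x, gradient)]
    return x

text_embedding = [0.0, 1.0, 0.0]   # made-up embedding of the prompt
initial = [1.0, 0.2, 0.0]          # made-up starting point for the "image"

guided = guide(initial, text_embedding)
before = cosine(initial, text_embedding)
after = cosine(guided, text_embedding)
print(after > before)
```

In a real pipeline the quantity being updated is an image or latent rather than the embedding itself, but the loop has the same shape: score against the prompt, take the gradient, step.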

The versatility of CLIP in text-to-image generation showcases its potential to revolutionize creative industries. Whether you're an artist, a designer, or a researcher, this technology empowers you to translate textual ideas into compelling visual representations.

Limitations and Challenges of Multimodal Models Like CLIP

Biases in Training Data

Bias in training data poses a significant challenge for multimodal models like CLIP. These biases arise from the datasets used during pretraining, which often reflect societal stereotypes and imbalances. For example, studies have shown that CLIP exhibits racial bias by associating white individuals more strongly with in-group words. Gender bias also emerges in text-to-image generation tasks, where slight changes in prompts can lead to stereotypical depictions. Additionally, sexual objectification bias has been observed in CLIP's embeddings, further highlighting the need for ethical considerations in dataset curation.

| Study | Findings | Bias Type |
|---|---|---|
| Wolfe et al. (2023) | Evidence of sexual objectification bias in CLIP | Sexual Objectification |
| Wolfe and Caliskan (2022) | White individuals are more associated with in-group words | Racial Bias |
| Teo et al. (2024) | Stable Diffusion shows gender bias with slight prompt changes | Gender Bias |

To mitigate these biases, you must prioritize diverse and balanced datasets during training. Incorporating fairness-aware algorithms can also help reduce bias in multimodal machine learning models.

Computational Resource Demands

Training multimodal models like CLIP requires substantial computational resources. The process involves handling large-scale datasets of image-text pairs and optimizing complex architectures, such as dual encoders. This demand for high-performance hardware limits accessibility for smaller organizations and researchers. For instance, pretraining CLIP on billions of image-text pairs requires extensive GPU clusters and significant energy consumption.

The computational intensity also impacts reproducibility. Researchers often struggle to replicate results due to differences in hardware configurations and resource availability. Addressing this challenge involves exploring lightweight architectures and efficient training techniques. Models like TinyGPT-V and FastVLM demonstrate promising advancements in reducing resource demands while maintaining performance.

Balancing Generalization and Task-Specific Performance

Multimodal models like CLIP excel in generalization across diverse tasks but often face trade-offs when adapting to specific domains. For example, vision-language models must balance their ability to perform well on general tasks, such as zero-shot classification, with specialized tasks requiring domain-specific knowledge. Research into modular architectures and task-conditioned pretraining offers potential solutions to bridge this gap.

| Aspect | Description |
|---|---|
| Generalization | Ensuring sVLMs generalize effectively across diverse domains and tasks. |
| Evaluation Paradigms | Developing nuanced metrics to capture multimodal alignment and robustness. |
| Performance Trade-offs | Efficiency challenges in models like MiniGPT-4 and FastVLM. |

Improving evaluation paradigms is essential for assessing real-world performance. You can focus on benchmarks that measure multimodal alignment and robustness to noisy data. By refining these metrics, you can better understand the trade-offs between generalization and task-specific accuracy.
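As one concrete example of an alignment metric, Recall@K asks how often the correct text appears among the K most similar texts for each image. The article does not name this metric, so treat it as an illustrative choice. The similarity scores below are invented, and `recall_at_k` is a hypothetical helper.

```python
def recall_at_k(similarity, k):
    """Fraction of images whose correct text (same index) ranks in the top k."""
    hits = 0
    for index, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if index in ranked[:k]:
            hits += 1
    return hits / len(similarity)

# Made-up image-by-text similarity scores; the correct text for image i is text i.
similarity = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],   # the correct text (index 1) only ranks second here
    [0.1, 0.2, 0.7],
]

recall_1 = recall_at_k(similarity, 1)
recall_2 = recall_at_k(similarity, 2)
print(recall_1, recall_2)
```

Comparing the same metric on clean and deliberately noisy captions is one simple way to probe the robustness mentioned above.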

Contrastive Language-Image Pretraining (CLIP) has redefined how machines interpret multimodal data. By leveraging dual encoders, image-text pairs, and contrastive loss, it bridges the gap between visual and textual understanding. Its applications, such as zero-shot classification, semantic retrieval, and text-to-image generation, demonstrate its versatility across industries. However, challenges like biases, computational demands, and balancing generalization remain critical.

The significance of CLIP lies in its ability to generalize across diverse tasks. Models like CLIP adapt well to distribution shifts, as shown in studies of 83 CLIP models and 127 ImageNet classifiers. Architectures built on the Vision Transformer (ViT) promise further advancements. Researchers are also focusing on pre-training with local features to enhance task-specific performance.

| Benchmark | TULIP Performance | Improvement over SigLIP |
|---|---|---|
| ImageNet-1K | SOTA zero-shot | N/A |
| RxRx1 (few-shot) | 2× enhancement | Yes |
| MMVP | 3× higher scores | Yes |

Looking ahead, the evolution of CLIP and similar models will likely focus on improving robustness, predictive uncertainty, and safety measures. These advancements will shape the future of machine vision systems, enabling smarter, safer, and more adaptable technologies.

FAQ

What is the main purpose of CLIP in machine vision systems?

CLIP helps machines understand and connect images with text. It allows you to perform tasks like image classification, retrieval, and generation without needing task-specific training. This makes it versatile and efficient for multimodal applications.

How does CLIP handle zero-shot learning?

CLIP uses its training on diverse image-text pairs to classify images without additional training. You provide a set of candidate labels as text, and it matches the image to the most relevant one. This capability works even for categories it was never explicitly trained to classify.

Why is the contrastive loss function important?

The contrastive loss function teaches CLIP to align matching image-text pairs while separating mismatched ones. This process improves the model’s ability to generalize and perform well across various tasks, even with limited labeled data.

What are some challenges of using CLIP?

CLIP faces challenges like biases in training data, high computational demands, and balancing generalization with task-specific performance. Addressing these issues requires careful dataset curation and exploring efficient training methods.

Can CLIP be used for creative tasks?

Yes! CLIP powers creative applications like text-to-image generation. You can input a description, and models using CLIP, like Stable Diffusion, create images that match your idea. This makes it a valuable tool for artists and designers. 🎨
