How Few-Shot Learning Revolutionizes Machine Vision

Few-Shot Learning has transformed how machines interpret visual data. It allows systems to learn new tasks from just a few labeled examples, which makes them highly data-efficient. Traditional models often struggle when data is scarce, and Few-Shot Learning is designed to overcome this limitation. In one reported healthcare application, for instance, a Few-Shot Learning model reached 85% accuracy in diagnosing rare diseases, outperforming traditional methods that require vast datasets, and its use was associated with a 30% rise in early diagnoses. Because Few-Shot Learning machine vision systems adapt rapidly to new scenarios, they are paving the way for breakthroughs in industries like e-commerce and medicine.

Key Takeaways

- Few-Shot Learning helps machines learn tasks from only a few examples, which makes it practical when data is limited.

- It cuts down the need for big labeled datasets, saving both time and money on collecting and labeling data.

- Few-Shot Learning makes models flexible. They can quickly handle new tasks or changing conditions, which is important in areas like robotics and healthcare.

- Methods such as meta-learning and prototype-based classification help models perform well on new tasks with very little data.

- Few-Shot Learning is used in many fields. It helps doctors find rare diseases, improves shopping suggestions, and helps self-driving cars spot objects fast.

What is Few-Shot Learning?

Definition and Concept

Few-shot learning is a machine learning paradigm designed to address the challenge of training models with minimal labeled data. Unlike traditional deep learning methods that require thousands of examples, few-shot learning enables systems to learn new tasks with just a handful of samples. This approach is particularly useful in scenarios where collecting large datasets is impractical or costly.

Few-shot learning relies on advanced techniques like transfer learning and meta-learning. Transfer learning allows models to leverage knowledge from previously learned tasks, while meta-learning focuses on teaching models how to learn efficiently across diverse tasks. By combining these strategies, few-shot learning systems can generalize well to unseen data and adapt quickly to new environments.

Few-shot learning is the problem of making predictions based on a limited number of samples. The goal is to learn to recognize similarities and differences between objects rather than just memorizing training examples.

Variations: N-shot, One-shot, and Zero-shot Learning

Few-shot learning encompasses several variations, each tailored to specific scenarios:

- N-shot learning: This umbrella term covers few-shot, one-shot, and zero-shot learning. Tasks are usually framed as N-way-K-shot classification, in which the model must distinguish N classes given K labeled samples per class. For example, in a 5-way-3-shot task, the model separates five classes using three labeled examples from each.

- One-shot learning: A specialized case of few-shot learning where only one example per class is available for training. This method is ideal for applications like facial recognition, where obtaining multiple samples may be challenging.

- Zero-shot learning: This variation enables models to classify unseen classes without any training examples. It relies on semantic relationships between known and unknown classes, making it highly adaptable to novel tasks.

Few-shot learning also incorporates innovative approaches to improve performance:

- Meta-learning: Models learn how to learn by optimizing their ability to adapt across tasks.

- Data-level approaches: These methods create class prototypes by averaging embeddings of images, simplifying classification tasks.

- Parameter-level approaches: Models focus on learning parameters that generalize well across tasks, enhancing adaptability.

| Term | Description |
|---|---|
| Few-Shot Learning (FSL) | A learning paradigm where models are trained to recognize new classes with very few examples. |
| N-Shot Learning (NSL) | A broader concept encompassing Few-Shot, One-Shot, and Zero-Shot Learning. |
| One-Shot Learning (OSL) | A specific case of FSL where only one example per class is available for training. |
| Zero-Shot Learning (ZSL) | The ability to classify unseen classes without any training examples. |
| N-way-K-Shot Classification | A specific task in FSL where N classes are identified with K samples from each class. |

Few-shot learning’s ability to generalize from limited data makes it a powerful tool for applications like few-shot classification, object detection, and medical imaging.

| Advantage | Description |
|---|---|
| Reduced Dataset Requirement | Few-shot learning significantly lowers the need for large labeled datasets, enabling effective learning with only 5-10 samples. |
| Improved Adaptability | Models can quickly adapt to new classes with minimal data, such as incorporating new shoe designs using just 3-5 images. |
| Enhanced Generalization | Models learn to identify discriminative patterns from limited data, improving performance on unseen tasks. |

How Few-Shot Learning Works in Machine Vision

Support Sets and Query Sets

Few-shot learning relies on a unique training approach that uses support sets and query sets. These sets play a crucial role in helping the model learn and generalize effectively.

A support set contains a small number of labeled examples from specific classes. These examples act as the foundation for the model to understand the task. For instance, if the task involves identifying different types of flowers, the support set might contain a few labeled images each of roses, tulips, and daisies.

The query set contains further examples from the same classes, but their labels are hidden from the model; during training they are used only to score its predictions. The query set therefore tests the model's ability to generalize. For example, after learning from the support set, the model must assign a new, unseen image of a rose to the "rose" class.

Few-shot training is organized into episodes, each of which simulates a real-world task with its own support set and query set. This episodic method lets the model repeatedly practice learning from limited data and then test its understanding.
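An episode sampler can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `sample_episode` is a hypothetical helper, and the dataset is a plain list of `(example, label)` pairs whose string "images" stand in for real ones. Each call draws N classes, then K support and a few query examples per class, and relabels the chosen classes 0 to N-1 so that every episode is a fresh task.

```python
import random
from collections import defaultdict


def sample_episode(dataset, n_way, k_shot, n_query, rng=random):
    """Draw one N-way-K-shot episode from a list of (example, label) pairs.

    Returns (support, query): lists of (example, episode_label) pairs, where the
    sampled classes are relabeled 0..n_way-1 for this episode only.
    """
    by_class = defaultdict(list)
    for example, label in dataset:
        by_class[label].append(example)

    # A class is usable only if it has enough examples for support AND query.
    eligible = [c for c, xs in by_class.items() if len(xs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")

    support, query = [], []
    for episode_label, cls in enumerate(rng.sample(eligible, n_way)):
        picked = rng.sample(by_class[cls], k_shot + n_query)  # no repeats
        support += [(x, episode_label) for x in picked[:k_shot]]
        query += [(x, episode_label) for x in picked[k_shot:]]
    return support, query


# Hypothetical toy dataset: 6 flower classes with 10 "images" (strings) each.
flowers = ["rose", "tulip", "daisy", "lily", "iris", "orchid"]
data = [(f"{name}_{i}", name) for name in flowers for i in range(10)]

rng = random.Random(0)
support, query = sample_episode(data, n_way=5, k_shot=3, n_query=2, rng=rng)
print(len(support), len(query))  # 15 10
```

In a meta-training loop, a new episode would be drawn at every step: the model conditions on `support`, and the loss is computed on `query`.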

🧠 Did you know? This episodic structure mimics how humans learn. You don’t need hundreds of examples to recognize a new object. A few examples and some practice are often enough!

Episodic training of this kind was popularized by Matching Networks and is also used by methods such as Prototypical Networks and MAML. Across many episodes, the support sets teach the model to learn from limited data, while the query sets check whether that learning generalizes to new examples. This combination helps few-shot learning systems perform well even with scarce labeled data.

Meta-Learning Principles

Meta-learning, often called "learning to learn," is a core principle behind few-shot learning. It focuses on teaching models how to adapt quickly to new tasks with minimal data. Instead of training a model for a single task, meta-learning prepares it to handle a variety of tasks efficiently.

This principle works by optimizing the model's ability to generalize across tasks. For example, a meta-learning algorithm might train a model to recognize patterns in animal images. Once trained, the model can quickly identify a new animal species using just a few examples.

Several algorithms demonstrate the power of meta-learning in machine vision. Model-Agnostic Meta-Learning (MAML) is one such algorithm. It learns an initial set of parameters from which the model can adapt to a new task with just a few gradient steps on a small amount of data. Matching Networks take a different approach: they classify new examples by their similarity to the labeled examples in the support set.

Meta-learning has shown remarkable results in few-shot classification tasks. It enhances the model's ability to generalize and make accurate predictions with limited examples. This makes it a valuable tool for applications like medical imaging, where labeled data is often scarce.

🔍 Quick Fact: Meta-learning doesn’t just improve accuracy. Although meta-training itself can be computationally demanding, adapting the trained model to each new task needs only a few examples and little computation, making it a cost-effective solution for many industries.

By combining support sets, query sets, and meta-learning principles, few-shot learning systems achieve impressive performance. These techniques ensure that the models can learn efficiently, adapt quickly, and generalize effectively, even in data-scarce environments.

Approaches to Few-Shot Learning in Computer Vision

Data-Level Approaches

Data-level approaches focus on improving the quality and quantity of training data. These methods often involve techniques like data augmentation, feature extraction, and prototype creation. By enhancing the dataset, you can help the model learn better patterns even with limited examples. For instance, data augmentation generates variations of existing images, such as flipping or rotating them. This process increases the diversity of the dataset without requiring new samples.
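Flips and rotations are simple enough to sketch without an image library. The helper names below are hypothetical, and images are represented as nested lists of pixel values for illustration; a real pipeline would typically use an image library's built-in transforms. Augmentations like these only help when they preserve the label: a rotated flower is still a flower, but a rotated "6" may read as a "9".

```python
def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image, its mirror, and its three rotations."""
    variants = [image, hflip(image)]
    rotated = image
    for _ in range(3):  # 90, 180 and 270 degrees
        rotated = rotate90(rotated)
        variants.append(rotated)
    return variants


def augment_support_set(support):
    """Expand a support set of (image, label) pairs; labels are unchanged."""
    return [(variant, label) for image, label in support for variant in augment(image)]


# A toy 2x3 grayscale "image" and a one-example support set.
image = [[1, 2, 3],
         [4, 5, 6]]
print(rotate90(image))  # [[4, 1], [5, 2], [6, 3]]
print(len(augment_support_set([(image, "rose")])))  # 5
```

Here one labeled example becomes five, which is the kind of cheap diversity boost data-level approaches rely on.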

Another effective strategy is feature extraction. It identifies key characteristics of images, making it easier for the model to differentiate between classes. Prototype creation is also common in few-shot learning approaches. It involves averaging the features of images within a class to create a "prototype." The model then compares new examples to these prototypes for classification.

| Method Type | Description |
|---|---|
| Data-level | Enhances few-shot learning by mining data features and expanding the amount of training data. |

Parameter-Level Approaches

Parameter-level approaches aim to optimize the model's parameters for better generalization. These methods focus on understanding the overall distribution of the data rather than memorizing specific examples. By doing so, you can ensure that the model adapts well to new tasks.

One popular technique is fine-tuning. It adjusts the model's parameters based on the limited data available for a new task. Another method involves using pre-trained models. These models already understand general patterns from large datasets, making them more effective when applied to few-shot learning tasks.
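A common lightweight form of fine-tuning keeps the pre-trained backbone frozen and trains only a new linear classification head on the few labeled examples. The sketch below assumes the backbone's output features are already available: the 2-D vectors are hypothetical stand-ins for real embeddings, and `fine_tune_head` and `predict` are illustrative names. It trains a softmax (multinomial logistic regression) head with plain gradient descent on the cross-entropy loss.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def logits(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)]


def fine_tune_head(features, labels, n_classes, lr=0.5, epochs=200):
    """Train a linear softmax head on frozen feature vectors by gradient descent."""
    dim = len(features[0])
    W = [[0.0] * dim for _ in range(n_classes)]
    b = [0.0] * n_classes
    n = len(features)
    for _ in range(epochs):
        grad_W = [[0.0] * dim for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        for x, y in zip(features, labels):
            probs = softmax(logits(W, b, x))
            for c in range(n_classes):
                g = probs[c] - (1.0 if c == y else 0.0)  # d(cross-entropy)/d(logit c)
                grad_b[c] += g
                for j in range(dim):
                    grad_W[c][j] += g * x[j]
        for c in range(n_classes):  # step along the averaged gradient
            b[c] -= lr * grad_b[c] / n
            for j in range(dim):
                W[c][j] -= lr * grad_W[c][j] / n
    return W, b


def predict(W, b, x):
    scores = logits(W, b, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical frozen-backbone features: 3 new classes, 2 examples each.
features = [[1.0, 0.1], [0.9, 0.0],       # class 0
            [0.0, 1.0], [0.1, 0.9],       # class 1
            [-1.0, -1.0], [-0.9, -1.1]]   # class 2
labels = [0, 0, 1, 1, 2, 2]
W, b = fine_tune_head(features, labels, n_classes=3)
print([predict(W, b, x) for x in ([0.95, 0.05], [0.05, 0.95], [-1.0, -0.9])])  # [0, 1, 2]
```

Training only the head keeps the number of adjustable parameters small, which reduces the risk of overfitting a handful of examples; fully fine-tuning the backbone is also possible but needs more care.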

| Method Type | Description |
|---|---|
| Parameter-level | Improves learning ability of small samples by understanding the overall distribution of the sample. |

Meta-Learning Approaches

Meta-learning, or "learning to learn," is a cornerstone of few-shot learning approaches. It trains the model to adapt quickly to new tasks by leveraging knowledge from previous tasks. This method is particularly useful in computer vision, where the variety of tasks can be vast.

For example, MAML (Model-Agnostic Meta-Learning) learns an initialization of the model's parameters that can be adapted to a new task in a few gradient steps. Matching Networks, another meta-learning technique, use similarity measures to classify new examples based on their resemblance to the support set. These methods enable the model to generalize effectively, even with minimal data.

| Method Type | Description |
|---|---|
| Meta-learning | Learns from the source domain to improve few-shot learning in the target domain. |

Few-shot learning approaches like these have proven effective in various scenarios. Whether you're working with limited data or aiming for rapid adaptation, these methods provide robust solutions for computer vision tasks.

Generative and Cross-Modal Methods

Generative and cross-modal methods play a vital role in enhancing few-shot learning for machine vision. These approaches help you create synthetic data or leverage information from multiple modalities, making it easier to train models with limited examples.

Generative Methods

Generative methods focus on creating new data samples that resemble the original dataset. These techniques allow you to expand your dataset without collecting additional real-world samples. For example, Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are popular tools for generating realistic images. A GAN trains two competing networks: a generator that produces synthetic samples and a discriminator that tries to tell them apart from real data. VAEs, on the other hand, learn to encode data into a latent space and decode it back, creating new samples by decoding points drawn from that learned space.

Imagine you are training a model to recognize rare bird species. Collecting hundreds of images for each species might be impossible. Generative methods can create synthetic images of these birds, giving your model more data to learn from, which can improve accuracy and reduce overfitting.

Cross-Modal Methods

Cross-modal methods use information from different data types or modalities, such as text, images, or audio. These methods help your model learn relationships between modalities, enabling it to generalize better. For instance, CLIP (Contrastive Language–Image Pretraining) learns a shared embedding space for images and text. If you provide text descriptions of candidate objects, the model can pick the one that best matches an image, even for categories it was never explicitly trained to classify.

💡 Tip: Cross-modal methods are especially useful in zero-shot learning, where your model must classify unseen classes using semantic relationships.
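The core of CLIP-style zero-shot classification is a similarity lookup in a shared embedding space. In the sketch below the embeddings are made-up 3-D vectors; in practice they would come from CLIP's image and text encoders, which are not shown. `zero_shot_classify` is a hypothetical helper that returns the text prompt whose embedding has the highest cosine similarity with the image embedding.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_embedding, text_embeddings):
    """Return the prompt whose text embedding best matches the image embedding."""
    return max(text_embeddings,
               key=lambda prompt: cosine(image_embedding, text_embeddings[prompt]))


# Hypothetical embeddings; no zebra images were needed to define the "zebra" class.
text_embeddings = {
    "a photo of a zebra": [0.9, 0.1, 0.3],
    "a photo of a horse": [0.8, 0.5, 0.0],
    "a photo of a bird": [0.0, 0.2, 0.9],
}
image_embedding = [0.85, 0.15, 0.35]
print(zero_shot_classify(image_embedding, text_embeddings))  # a photo of a zebra
```

Adding a new class in this setup means adding a new text prompt rather than collecting labeled images.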

By combining generative and cross-modal methods, you can overcome data limitations and build robust machine vision systems. These techniques empower your models to learn efficiently, even in challenging scenarios.

Key Algorithms for Few-Shot Learning Machine Vision System

MAML (Model-Agnostic Meta-Learning)

MAML stands out as a powerful algorithm for few-shot learning machine vision systems. Instead of training a model for a single task, MAML trains across many tasks to find initial parameters from which a few gradient steps on a new task's small support set are enough to adapt. For example, if you meta-train a model on animal recognition tasks, MAML enables it to identify a new species with minimal data.

This algorithm excels at fast adaptation: starting from the meta-learned parameters, a few gradient updates on just a few examples can yield high accuracy. MAML also reduces dependence on large datasets for each new task, making it ideal for applications where data is scarce. Its authors reported strong results on few-shot image classification benchmarks such as Omniglot and MiniImagenet, and because it is model-agnostic, it can be applied to many different architectures.
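MAML's two nested loops are easiest to see on a toy problem. The sketch below is deliberately not a vision model: each hypothetical task is fitting y = a * x for a task-specific slope `a`, and the "model" is a single parameter `theta`. Because the model is this small, the exact MAML meta-gradient, including the second-order term from differentiating through the inner update, can be written by hand; real implementations rely on automatic differentiation over full networks. The inner loop takes one gradient step on the support points, and the outer loop updates `theta` using the query loss measured after that step.

```python
import random


def mean_sq(xs):
    return sum(x * x for x in xs) / len(xs)


def sample_task(rng):
    """Hypothetical task: fit y = a * x, where the slope a differs per task."""
    a = rng.uniform(1.0, 3.0)
    support_xs = [rng.uniform(-1.0, 1.0) for _ in range(5)]
    query_xs = [rng.uniform(-1.0, 1.0) for _ in range(5)]
    return a, support_xs, query_xs


def adapt(theta, a, xs, alpha):
    """Inner loop: one gradient step on the support loss mean((theta*x - a*x)^2)."""
    return theta - alpha * 2.0 * mean_sq(xs) * (theta - a)


def maml(steps=2000, tasks_per_step=10, alpha=0.5, beta=0.1, seed=0):
    """Outer loop: learn an initialization theta that adapts well in one step."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            a, support_xs, query_xs = sample_task(rng)
            adapted = adapt(theta, a, support_xs, alpha)
            # Chain rule through the inner step:
            # d(query loss)/d(theta) = 2*mean(xq^2)*(adapted - a) * d(adapted)/d(theta),
            # where d(adapted)/d(theta) = 1 - 2*alpha*mean(xs^2) is the second-order term.
            meta_grad += (2.0 * mean_sq(query_xs) * (adapted - a)
                          * (1.0 - 2.0 * alpha * mean_sq(support_xs)))
        theta -= beta * meta_grad / tasks_per_step
    return theta


theta = maml()
print(round(theta, 2))  # expected to land near 2.0, the average task slope

# Adapting to a new task (slope 2.8) from three support points:
new_xs = [0.5, -0.8, 0.9]
adapted = adapt(theta, 2.8, new_xs, alpha=0.5)
print(abs(adapted - 2.8) < abs(theta - 2.8))  # True
```

The meta-learned `theta` is not tuned to any single task; it is the starting point from which one gradient step gets closest to every task on average.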

Matching Networks

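Matching Networks classify a new example by comparing its embedding with the embedding of each example in the support set, typically using cosine similarity. A softmax turns these similarities into attention weights, and the prediction is the attention-weighted combination of the support labels. Because the weights are computed afresh for each query, Matching Networks can handle complex relationships between data points, even when the dataset is small.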

Matching Networks enhance generalization capabilities. They allow your model to learn from limited examples and apply that knowledge to unseen tasks. For instance, if you train a model to recognize flowers, Matching Networks enable it to identify new flower types with high accuracy. This algorithm’s ability to handle non-linear relationships makes it a valuable tool for few-shot learning machine vision systems.
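The Matching Networks classification rule can be sketched as an attention-weighted vote. This is a simplified illustration under stated assumptions: the embeddings are hypothetical 2-D vectors, whereas real Matching Networks compute them with learned (optionally context-aware) embedding networks trained on episodes. `matching_predict` is an illustrative name; it turns cosine similarities into softmax attention weights over the support set and sums the weights per class.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def matching_predict(query, support, n_classes):
    """Return class probabilities for `query` given (embedding, label) support pairs."""
    sims = [cosine(query, emb) for emb, _ in support]
    m = max(sims)
    weights = [math.exp(s - m) for s in sims]  # softmax numerators (stable)
    total = sum(weights)
    probs = [0.0] * n_classes
    for w, (_, label) in zip(weights, support):
        probs[label] += w / total  # attention-weighted vote for that support label
    return probs


# Hypothetical embeddings: class 0 = "tulip", class 1 = "daisy", 2 shots each.
support = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.0, 1.0], 1), ([0.1, 0.9], 1)]
probs = matching_predict([0.8, 0.3], support, n_classes=2)
print(probs.index(max(probs)))  # 0
```

Because the output is a distribution over support labels, no class-specific weights need to be trained: a new class only needs labeled examples in the support set.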

Prototypical Networks

Prototypical Networks simplify classification by creating a prototype for each class: the mean of the embedded support examples of that class. When you provide a query image, the algorithm embeds it and assigns it to the class whose prototype is nearest in embedding space.

This approach is computationally efficient: once the support examples are embedded, classifying a query requires only one distance computation per class, which makes the method fast and easy to implement. Prototypical Networks also deliver robust performance in few-shot learning scenarios. For example, if you train a model to recognize bird species, this algorithm enables it to classify new species accurately with minimal data.
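The prototype computation and the nearest-prototype rule fit in a few lines. In this sketch the embeddings are hypothetical 2-D vectors (a trained Prototypical Network would produce them with a learned encoder), and the distance is squared Euclidean distance, as in the original Prototypical Networks paper. The function names are illustrative.

```python
def prototypes(support):
    """Mean embedding per class from (embedding, label) pairs."""
    sums, counts = {}, {}
    for emb, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(emb)
            counts[label] = 0
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def sq_euclidean(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, protos):
    """Assign the query to the class with the nearest prototype."""
    return min(protos, key=lambda label: sq_euclidean(query, protos[label]))


# Hypothetical embeddings for two bird species, 2 shots each.
support = [([0.9, 0.1], "sparrow"), ([1.1, -0.1], "sparrow"),
           ([0.0, 2.0], "finch"), ([0.2, 1.8], "finch")]
protos = prototypes(support)
print(classify([0.8, 0.3], protos))  # sparrow
```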

| Algorithm | Key Advantages |
|---|---|
| Prototypical Networks | Learns compact, meaningful representations; robust performance in few-shot learning scenarios; computational efficiency compared to complex methods |
| Matching Networks | Adaptive similarity computation; enhanced generalization capabilities; handles complex, non-linear relationships |
| Model-Agnostic Meta-Learning (MAML) | Fast adaptation to new tasks; improved performance across diverse scenarios; reduced dependency on large datasets |

These algorithms form the backbone of few-shot learning machine vision systems. They enable models to learn efficiently, adapt quickly, and generalize effectively, even with limited data.

Relation Networks

Relation Networks (RNs) offer a unique approach to few-shot learning by focusing on relationships between data points. Instead of relying solely on feature extraction, RNs evaluate how query images relate to support set images. This method helps the model understand the "context" of the data, making it highly effective for classification tasks.

Here’s how it works: You provide a support set with labeled examples and a query image. An embedding network encodes each image, and a small learned network called the relation module takes the query embedding paired with each support embedding and outputs a relation score between 0 and 1. The query is assigned to the class with the highest score. For instance, if you train a model to recognize animals, the RN compares a new image of a cat to the support set and labels it "cat" because that pairing receives the highest relation score.
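A relation module can be sketched as a tiny neural network applied to a concatenated pair of embeddings. This sketch shows only the forward pass under stated assumptions: the embeddings are hypothetical, and the module's weights are random placeholders, so its scores are meaningless until it is trained. In the original method the relation module is trained jointly with the embedding network, regressing the score toward 1 for matching pairs and 0 otherwise; for K-shot tasks, each class's support embeddings are summed into one class embedding before comparison. `RelationModule` and `relation_classify` are illustrative names.

```python
import math
import random


class RelationModule:
    """Tiny MLP g(concat(query_emb, class_emb)) -> relation score in (0, 1).

    Weights are random placeholders; a real Relation Network learns them.
    """

    def __init__(self, emb_dim, hidden=8, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.gauss(0.0, 0.5) for _ in range(2 * emb_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def score(self, query_emb, class_emb):
        pair = query_emb + class_emb  # list concatenation, length 2 * emb_dim
        hidden = [max(0.0, sum(w * p for w, p in zip(row, pair)) + b)  # ReLU layer
                  for row, b in zip(self.W1, self.b1)]
        z = sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
        return 1.0 / (1.0 + math.exp(-z))  # sigmoid -> relation score


def relation_classify(module, query_emb, class_embs):
    """Score the query against every class and return (best_label, scores)."""
    scores = {label: module.score(query_emb, emb) for label, emb in class_embs.items()}
    return max(scores, key=scores.get), scores


module = RelationModule(emb_dim=3)
class_embs = {"cat": [0.9, 0.1, 0.0], "dog": [0.1, 0.8, 0.3]}  # hypothetical
label, scores = relation_classify(module, [0.85, 0.2, 0.05], class_embs)
print(label, scores)  # untrained weights, so the chosen label is arbitrary
```

The key difference from Prototypical or Matching Networks is that the comparison function itself is a network with trainable weights rather than a fixed distance.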

🧠 Fun Fact: Relation Networks mimic how humans compare objects. You often identify something new by comparing it to what you already know!

RNs excel in tasks requiring fine-grained comparisons, such as facial recognition or medical imaging, because the comparison itself is learned rather than fixed in advance. Each prediction needs only one pass of the relation module per support class, which keeps inference practical for real-world applications.

| Advantages of Relation Networks | Description |
|---|---|
| Contextual Understanding | Focuses on relationships between data points. |
| Efficiency | Reduces computational overhead. |
| Versatility | Works well for fine-grained classification tasks. |

YOLOMAML

YOLOMAML combines the strengths of YOLO (You Only Look Once) and MAML (Model-Agnostic Meta-Learning). This hybrid algorithm is designed for few-shot object detection, enabling you to identify objects in images with minimal data.

YOLOMAML applies MAML's meta-training procedure to a YOLO detector. During meta-training, the detector's weights are optimized so that a few gradient steps on a handful of annotated images adapt it to new object classes; at inference time, detection still runs in YOLO's single, real-time pass. This combination allows you to detect and classify objects quickly, even in unfamiliar scenarios. For example, a YOLOMAML model meta-trained on tasks such as detecting cars could be adapted to identify trucks with just a few examples.

🚀 Pro Tip: YOLOMAML is ideal for applications like autonomous vehicles, where speed and adaptability are critical.

This algorithm is built for speed and adaptability. Because it keeps YOLO's single-pass detector, it can process images in real time, while its accuracy on new classes depends on the task and the quality of meta-training. Its target settings include dynamic environments such as robotics and surveillance.

| Feature | Benefit |
|---|---|
| Real-Time Detection | Processes images quickly for immediate results. |
| Few-Shot Adaptability | Learns new tasks with minimal data. |
| Versatile Applications | Suitable for robotics, vehicles, and security. |

By leveraging Relation Networks and YOLOMAML, you can build powerful machine vision systems that excel in both classification and object detection tasks.

Applications of Few-Shot Learning in Computer Vision

Few-Shot Image Classification

Few-shot image classification has transformed how you can train models to recognize objects or patterns with minimal data. Traditional methods often require thousands of labeled images, but few-shot learning enables systems to classify new categories with just a handful of examples. This makes it ideal for scenarios where collecting large datasets is impractical.

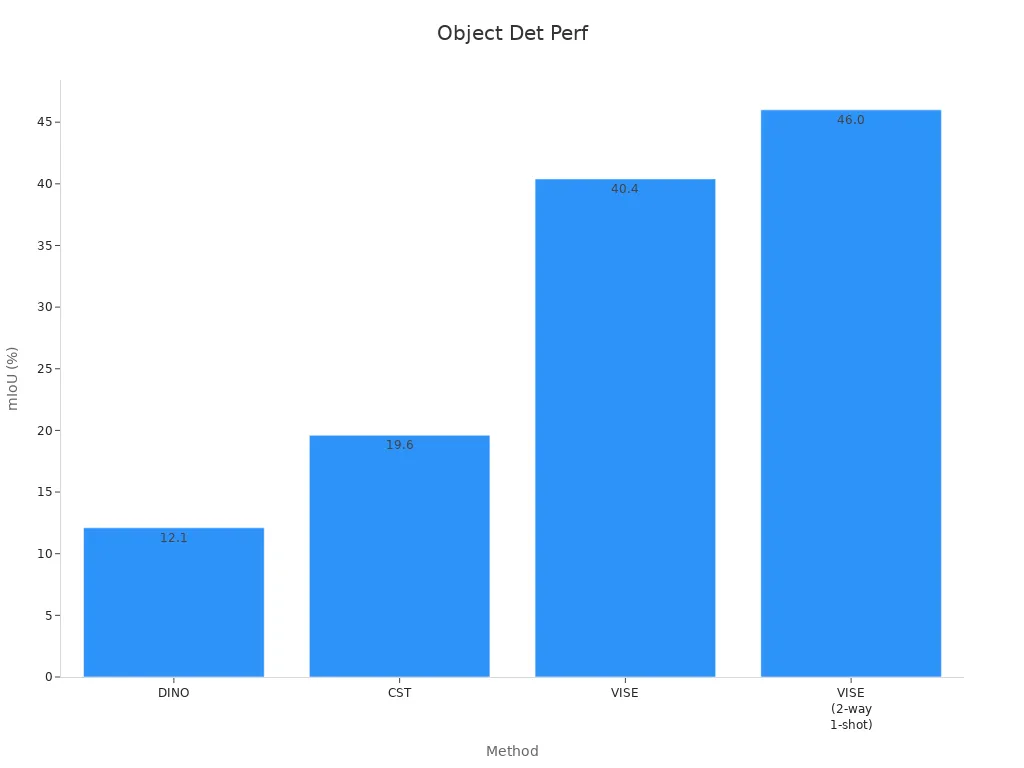

For instance, a case study on the COCO-20i dataset evaluated few-shot classification together with segmentation, reporting a classification metric (ER) and the segmentation mean Intersection over Union (mIoU). In a 2-way 1-shot setting, the VISE method achieved an mIoU of 46.0%, showing that it can handle complex tasks with limited data.

| Method | ER (%) | mIoU (%) |
|---|---|---|
| DINO | - | 12.1 |
| CST | 78.2 | 19.6 |
| VISE | 84.5 | 40.4 |
| VISE (2-way 1-shot) | 87.0 | 46.0 |
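For readers unfamiliar with the metric, the IoU for a class is the number of pixels that both the prediction and the ground truth assign to that class, divided by the number of pixels that either assigns to it; mIoU averages this over classes. The sketch below scores one pair of flattened masks with a hypothetical helper `mean_iou`. Published benchmark protocols typically accumulate intersections and unions over many test episodes before averaging, so treat this as an illustration of the formula rather than a benchmark evaluator.

```python
def mean_iou(pred, target, n_classes):
    """Mean Intersection over Union between two flattened label masks."""
    ious = []
    for c in range(n_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:  # skip classes that appear in neither mask
            ious.append(inter / union)
    return sum(ious) / len(ious)


# 0 = background, 1 = object; six pixels of a tiny mask.
target = [0, 0, 1, 1, 1, 0]
pred = [0, 1, 1, 1, 0, 0]
print(mean_iou(pred, target, n_classes=2))  # 0.5
```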

Few-shot image classification is particularly useful in agriculture. Studies have shown its success in identifying crop pests and classifying plant diseases from limited labeled images. For example, researchers applied few-shot learning to classify cotton leaf spot diseases and reported high accuracy. This approach not only saves time but also reduces the cost of data collection.

Few-Shot Object Detection

Few-shot object detection allows you to identify and locate objects in images with minimal training data. This is especially valuable in dynamic environments where new objects frequently appear. Unlike traditional object detection methods, which require extensive datasets, few-shot learning adapts quickly to new tasks.

A hybrid algorithm like YOLOMAML pairs YOLO's real-time, single-pass detection with MAML's fast adaptation, so a detector can learn new categories from just a few examples. For instance, a YOLOMAML model meta-trained on cars could be adapted to detect trucks with minimal additional data.

Few-shot object detection has proven effective in various fields. In agriculture, it has been used for automatic plant counting and localization. This technique helps farmers monitor crops efficiently, saving both time and resources.

Medical Imaging

Few-shot learning has revolutionized medical imaging by addressing the challenge of data scarcity. In this field, collecting large datasets is often difficult due to privacy concerns and the rarity of certain conditions. Few-shot learning enables you to train models effectively with limited examples, making it a game-changer for healthcare.

For example, few-shot learning has been applied to detect rare diseases and classify medical images with high accuracy. This approach reduces the time required for diagnosis and improves patient outcomes. In one study, researchers used few-shot learning to identify abnormalities in X-rays and achieved results comparable to traditional methods that rely on extensive datasets.

💡 Tip: Few-shot learning is not just about accuracy. It also reduces the cost and time associated with data collection and labeling, making it a practical solution for medical applications.

By leveraging few-shot learning, you can build robust systems for medical imaging, crop monitoring, and object detection. These applications highlight the versatility and efficiency of this innovative approach in computer vision.

Facial Recognition

Facial recognition has become a cornerstone of modern technology, and few-shot learning is taking it to the next level. Traditional facial recognition systems require thousands of labeled images to identify individuals accurately. Few-shot learning eliminates this dependency by enabling systems to recognize faces with just a few examples. This makes it ideal for applications where collecting large datasets is impractical or impossible.

Few-shot (often one-shot) learning plays a critical role in facial recognition. After a face detector locates faces in an image or video frame, the system compares each face's embedding with a small gallery of enrolled examples, sometimes just one per person. For example, a security system can identify a new employee after being shown only a few images of their face, without retraining. This capability enhances adaptability and reduces the time needed for system updates.
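The identification step can be sketched as nearest-neighbour matching against a small gallery of enrolled face embeddings, with a rejection threshold so that unknown faces are not forced onto an enrolled identity. The embeddings, names, and the 0.6 threshold below are hypothetical; a real system would obtain embeddings from a trained face-recognition network and tune the threshold on validation data.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def identify(face_emb, gallery, threshold):
    """Return the closest enrolled name, or None if no match is within threshold."""
    query = l2_normalize(face_emb)
    best_name, best_dist = None, float("inf")
    for name, enrolled in gallery.items():
        dist = math.dist(query, l2_normalize(enrolled))  # Euclidean distance
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


# One enrolled embedding per person (one-shot enrollment).
gallery = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
print(identify([0.88, 0.12, 0.25], gallery, threshold=0.6))  # alice
print(identify([0.1, 0.1, -0.9], gallery, threshold=0.6))    # None
```

Enrolling a new person only adds one entry to `gallery`; nothing is retrained.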

In addition to security, few-shot recognition improves personalization in consumer technology. Smartphones and laptops use facial recognition for unlocking devices and customizing user experiences. Few-shot learning helps these systems adapt quickly to new users or changes in appearance, such as wearing glasses or growing a beard.

The efficiency of few-shot recognition also benefits industries like healthcare. It enables systems to verify patients' identities or monitor individuals in real time, supporting accurate and timely care. By leveraging this technology, you can build facial recognition systems that are both powerful and versatile.

Robotics and Autonomous Vehicles

Few-shot learning is revolutionizing robotics and autonomous vehicles by enhancing their ability to perceive and interact with the world. Object detection is a fundamental skill for these systems, and few-shot learning makes it more efficient. Robots and self-driving cars can identify and respond to new objects or obstacles with minimal training data.

Few-shot object detection allows robots to adapt to dynamic environments. For instance, a warehouse robot can recognize and handle new types of packages after being shown only a few examples. This adaptability reduces downtime and improves operational efficiency.

In autonomous vehicles, few-shot object detection supports safety and reliability. Self-driving cars must identify pedestrians, traffic signs, and other vehicles in real time. Few-shot learning helps these systems adapt to new scenarios, such as recognizing unfamiliar road signs in different regions, which improves the vehicle's ability to navigate safely in diverse environments.

The integration of few-shot object detection in robotics and autonomous vehicles also accelerates innovation. Developers can create systems that learn and improve continuously, reducing the need for extensive retraining. By adopting this technology, you can build smarter, more adaptable machines that excel in real-world applications.

Benefits of Few-Shot Learning in Machine Vision

Reduced Data Dependency

Few-shot learning reduces the need for large labeled datasets, making it a practical solution for scenarios where data collection is challenging. Traditional machine learning models often require thousands of examples to perform well. Few-shot learning, however, enables systems to learn new concepts from just a handful of samples. This approach mimics how humans grasp new ideas quickly without extensive repetition.

For instance, in computer vision, few-shot learning allows models to classify objects or detect patterns with minimal data. This capability is especially useful in fields like medical imaging, where labeled data is scarce. By leveraging advanced techniques like meta-learning, few-shot learning systems can generalize effectively, even with limited input.

| Benefit | Description |
|---|---|
| Learning from Few Examples | Few-shot learning enables AI models to learn new concepts from only a few examples, unlike traditional methods. |
| Reduced Data Dependency | Few-shot learning allows models to learn from limited labeled data, reducing the need for extensive data annotation. |

🧠 Quick Insight: Reducing data dependency not only saves time but also lowers costs associated with data collection and labeling.

Enhanced Adaptability

Few-shot learning enhances adaptability by enabling models to scale and adjust to new tasks or environments. This flexibility is crucial in dynamic contexts, such as event detection systems or robotics. For example, a robot equipped with few-shot learning can recognize new objects in a warehouse after being shown only a few examples.

This adaptability stems from the ability of few-shot learning systems to leverage prior knowledge. Instead of starting from scratch, these models use what they’ve learned from previous tasks to handle new challenges. This makes them ideal for applications in computer vision, where the variety of tasks can be vast.

| Benefit | Description |
|---|---|
| Mimics Human Learning | Few-shot learning mimics human ability to quickly grasp new ideas without extensive repetition. |
| Enhanced Adaptability | It enhances the scalability and adaptability of event detection systems in dynamic contexts and for unseen event categories. |

🚀 Pro Tip: Few-shot learning’s adaptability makes it a game-changer for industries like autonomous vehicles and robotics.

Improved Generalization

Few-shot learning excels at generalizing from limited data. This means that models trained on a small number of examples can perform well on unseen tasks. For instance, a few-shot learning system trained to identify animals can recognize a new species with just a few images.

This capability is achieved through techniques like prototype creation and meta-learning. By focusing on the relationships between data points, few-shot learning systems can identify patterns that apply across different scenarios. This flexibility ensures that models remain effective even when faced with unfamiliar challenges.

| Benefit | Description |
|---|---|
| Generalization Capabilities | Few-shot learning techniques allow models to generalize effectively using just a small number of samples. |
| Flexibility | It enables AI models to adapt rapidly to new scenarios by leveraging prior knowledge. |

🔍 Did You Know? Few-shot learning’s generalization capabilities are transforming fields like healthcare and e-commerce by enabling systems to handle diverse tasks efficiently.

Few-Shot Learning is reshaping how you approach machine vision. It empowers systems to learn new tasks with minimal data, making them adaptable to diverse challenges. You can see its impact in fields like healthcare, robotics, and autonomous vehicles, where efficiency and scalability are critical.

🌟 Key Insight: Few-Shot Learning reduces data dependency and improves adaptability, unlocking possibilities for innovation across industries.

As this technology evolves, it promises to drive breakthroughs in computer vision. You can expect smarter systems that handle complex tasks with ease, paving the way for advancements in artificial intelligence.

FAQ

What is the main challenge in the few-shot learning problem?

The main challenge lies in training models to perform well with very few labeled examples. Unlike traditional methods, few-shot learning requires systems to generalize effectively from limited data, making it suitable for tasks where collecting large datasets is impractical.

How does few-shot learning differ from traditional machine learning?

Few-shot learning focuses on learning from minimal data, while traditional machine learning relies on large datasets. This approach enables you to train models for new tasks quickly, making it ideal for applications like medical imaging or rare object detection.

Can few-shot learning be applied to real-world problems?

Yes, few-shot learning is already solving real-world problems. It’s used in medical imaging to detect rare diseases, in facial recognition for security, and in robotics for adapting to new environments. Its ability to work with limited data makes it highly practical.

What industries benefit the most from few-shot learning?

Industries like healthcare, e-commerce, and autonomous vehicles benefit significantly. Few-shot learning helps in diagnosing rare conditions, personalizing shopping experiences, and enabling vehicles to adapt to new road scenarios with minimal retraining.

Is few-shot learning suitable for small businesses?

Absolutely. Few-shot learning reduces the need for extensive datasets, lowering costs for data collection and labeling. Small businesses can use it to build efficient AI systems for tasks like product categorization or customer behavior analysis without requiring large-scale resources.
