Can Filtering Machine Vision Systems Boost Accuracy?

Filtering machine vision systems improve accuracy by improving the quality of the visual data they analyze. They suppress noise, emphasize critical details, and control which light reaches the sensor, so the images passed on for analysis are sharper and cleaner. For example, an optical filter matched to the illumination can reveal fine patterns and textures that might otherwise be overlooked. By concentrating on essential features, filtering makes automated processes more reliable and supports faster, real-time decision-making, whether in manufacturing or in medical imaging.

Key Takeaways

- Filtering machine vision systems make images clearer by removing noise. They highlight important parts, helping analyze data more accurately.

- Optical filters help machines recognize images better by emphasizing key features. This is especially important in fields like factories and healthcare.

- Filtering improves how well machines work in bad lighting by reducing extra noise and boosting useful signals.

- Using filters makes decisions faster by giving cleaner data. This helps many jobs run more smoothly and efficiently.

- It's important to adjust filtering carefully to avoid overdoing it. Too much filtering can hide key details and make systems less accurate.

Understanding Filtering in Machine Vision Systems

What Is Filtering in Machine Vision?

Filtering in machine vision systems is the process of enhancing visual data by removing unwanted elements and emphasizing critical features. You can think of it as a way to clean up the images captured by vision sensors so that they are ready for accurate analysis. Filtering happens at two stages: optical filters placed in front of the lens or light source shape the light before it reaches the sensor, and digital filters process the image after capture. Optical filters, for example, can block specific wavelengths or reduce glare, making it easier to detect fine details in complex environments.

Many modern machine vision systems use convolutional neural networks (CNNs) to analyze the filtered data, and they can process hundreds of images in seconds. Because the quality of the input limits the quality of the output, filtering is crucial for tasks like defect detection in manufacturing or medical imaging.

The Role of Filters in Visual Data Processing

Filters are essential for optimizing the performance of machine vision systems. They help vision sensors focus on relevant information while ignoring distractions. Optical filters such as polarizers and bandpass filters control which light reaches the sensor and so improve image clarity. For instance, polarizers suppress specular reflections, making it easier to inspect shiny surfaces like steel or glass.
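To see why a polarizer suppresses glare, the short Python sketch below applies Malus's law, I = I0 · cos²(θ), to a cross-polarized setup. It assumes ideal polarizers, a light source that is itself behind a polarizer (so specular glare keeps that polarization), and diffuse reflection that is fully depolarized; the intensities are hypothetical.

```python
import math

def malus(intensity, angle_deg):
    """Malus's law: intensity of polarized light after an ideal analyzer
    rotated angle_deg away from the light's polarization axis."""
    return intensity * math.cos(math.radians(angle_deg)) ** 2

GLARE = 100.0    # hypothetical specular reflection: keeps the source's polarization
DIFFUSE = 40.0   # hypothetical diffuse reflection: assumed fully depolarized

for angle in (0, 45, 90):
    glare_out = malus(GLARE, angle)
    diffuse_out = DIFFUSE / 2  # an ideal polarizer passes half of unpolarized light
    print(f"analyzer at {angle:2d} deg: glare {glare_out:5.1f}, diffuse {diffuse_out:4.1f}")
```

With the analyzer crossed at 90 degrees the glare term drops to zero while half of the diffuse light, which carries the surface detail, still reaches the sensor.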

A study on optical flow estimation highlights the impact of filtering techniques. Median filtering improves robustness and accuracy, while bilateral filtering prevents smoothing across motion boundaries. These methods ensure that visual data remains sharp and reliable for analysis.

A case study from construction settings shows how filtering supports decision-making: by discarding irrelevant content, the system can concentrate on the critical frames, which improves efficiency in built environments.

Filtering also plays a vital role in applications where lighting conditions are challenging. Whether you're working in low-light environments or dealing with excessive glare, filters ensure that your machine vision system delivers consistent results.

| Study Title | Findings |
|---|---|
| A Quantitative Analysis of Current Practices in Optical Flow Estimation | Median filtering improves robustness and accuracy in optical flow estimation. |
| | Bilateral filtering prevents smoothing across motion boundaries, enhancing flow estimation accuracy. |
| | L1 robust penalty models yield flow fields as accurate as specialized methods, demonstrating the effectiveness of generic optimization methods. |
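The difference between plain smoothing and edge-preserving smoothing at a motion boundary can be sketched in one dimension. The Python example below compares a moving average with a simple bilateral filter on a hypothetical scanline of horizontal flow values. It illustrates the principle only and is not the implementation used in the study.

```python
import math

def box_filter_1d(signal, radius):
    """Moving average: every neighbour in the window gets equal weight."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def bilateral_filter_1d(signal, radius, sigma_space, sigma_range):
    """Weights neighbours by distance AND by similarity of value, so samples
    across a large jump (such as a motion boundary) contribute almost nothing."""
    n = len(signal)
    out = []
    for i in range(n):
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            w_range = math.exp(-((signal[i] - signal[j]) ** 2) / (2 * sigma_range ** 2))
            num += w_space * w_range * signal[j]
            den += w_space * w_range
        out.append(num / den)
    return out

# Hypothetical horizontal flow along one scanline (pixels/frame):
# static background for 10 samples, then an object moving at 5 px/frame.
flow = [0.0] * 10 + [5.0] * 10
box = box_filter_1d(flow, radius=2)
bil = bilateral_filter_1d(flow, radius=2, sigma_space=2.0, sigma_range=0.5)
print("box:      ", [round(v, 2) for v in box[8:12]])  # [1.0, 2.0, 3.0, 4.0]: boundary smeared
print("bilateral:", [round(v, 2) for v in bil[8:12]])  # [0.0, 0.0, 5.0, 5.0]: boundary kept
```

The range weight (`sigma_range`) is what stops averaging across the jump. With a large `sigma_range` the bilateral filter degrades into an ordinary Gaussian blur.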

How Filtering Machine Vision Systems Enhance Accuracy

Noise Reduction for Cleaner Data

Noise in images can obscure critical details, making it harder for machine vision systems to process data accurately. Filtering techniques play a vital role in removing this unwanted noise, ensuring cleaner and more reliable data for analysis. By using advanced methods like deep neural networks for denoising, you can achieve significant improvements in noise reduction. These networks excel because they learn to ignore random noise components in the data, especially when trained on experimental datasets.

- Training on real-world experimental data often outperforms training on artificial noise profiles, even when the synthetic data is more abundant.

- Multiscale training procedures further enhance performance by covering a wide range of noise statistics.

For example, when inspecting products on a manufacturing line, noise reduction ensures that defects like scratches or dents are not missed due to visual interference. This process allows vision sensors to deliver high-quality images, enabling better decision-making.
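A median filter is one classic way to remove isolated noise without erasing a defect. The plain-Python sketch below uses a hypothetical 10 × 10 image: it adds salt-and-pepper noise around a 2-pixel-wide dark scratch and then applies a 3 × 3 median. It demonstrates noise reduction in general. The deep-learning denoisers described above work very differently.

```python
def median_filter_3x3(img):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = sorted(
                img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            )
            out[r][c] = window[len(window) // 2]
    return out

def count_differences(a, b):
    """Number of pixels where two images disagree."""
    return sum(x != y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))

# Hypothetical 10x10 part image: bright background with a 2-pixel-wide dark scratch.
clean = [[200] * 10 for _ in range(10)]
for r in (4, 5):
    clean[r] = [50] * 10

# Add isolated salt-and-pepper noise away from the scratch.
noisy = [row[:] for row in clean]
for (r, c), value in {(1, 2): 255, (7, 7): 0, (2, 8): 0, (8, 1): 255}.items():
    noisy[r][c] = value

filtered = median_filter_3x3(noisy)
print(count_differences(noisy, clean), count_differences(filtered, clean))  # 4 0
```

All four noisy pixels are restored, and the scratch survives. Inside any 3 × 3 window centred on the scratch, scratch pixels are the majority.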

Feature Extraction for Improved Recognition

Feature extraction is another critical aspect of filtering machine vision systems. It focuses on identifying and isolating the most relevant parts of an image, such as edges, textures, or patterns. This process improves recognition accuracy by ensuring that the system concentrates on meaningful data rather than irrelevant details.
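Edge extraction is a simple, concrete example of feature extraction. The sketch below computes a Sobel gradient magnitude in plain Python and thresholds it into an edge map. The 6 × 6 test patch and the threshold of 100 are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude from 3x3 Sobel kernels (applied as a correlation;
    the sign flip versus true convolution does not affect the magnitude).
    Border pixels are left at 0."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * p
                    gy += SOBEL_Y[i][j] * p
            mag[r][c] = math.hypot(gx, gy)
    return mag

def edge_map(img, threshold):
    """1 where the gradient magnitude exceeds the threshold, else 0."""
    return [[1 if m > threshold else 0 for m in row] for row in sobel_magnitude(img)]

# Hypothetical patch: dark left half (10), bright right half (200).
patch = [[10] * 3 + [200] * 3 for _ in range(6)]
for row in edge_map(patch, threshold=100):
    print(row)  # interior rows: [0, 0, 1, 1, 0, 0]
```

The flat regions produce zero gradient and drop out. Only the two columns straddling the intensity step remain, which is the "concentrate on meaningful data" effect in miniature.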

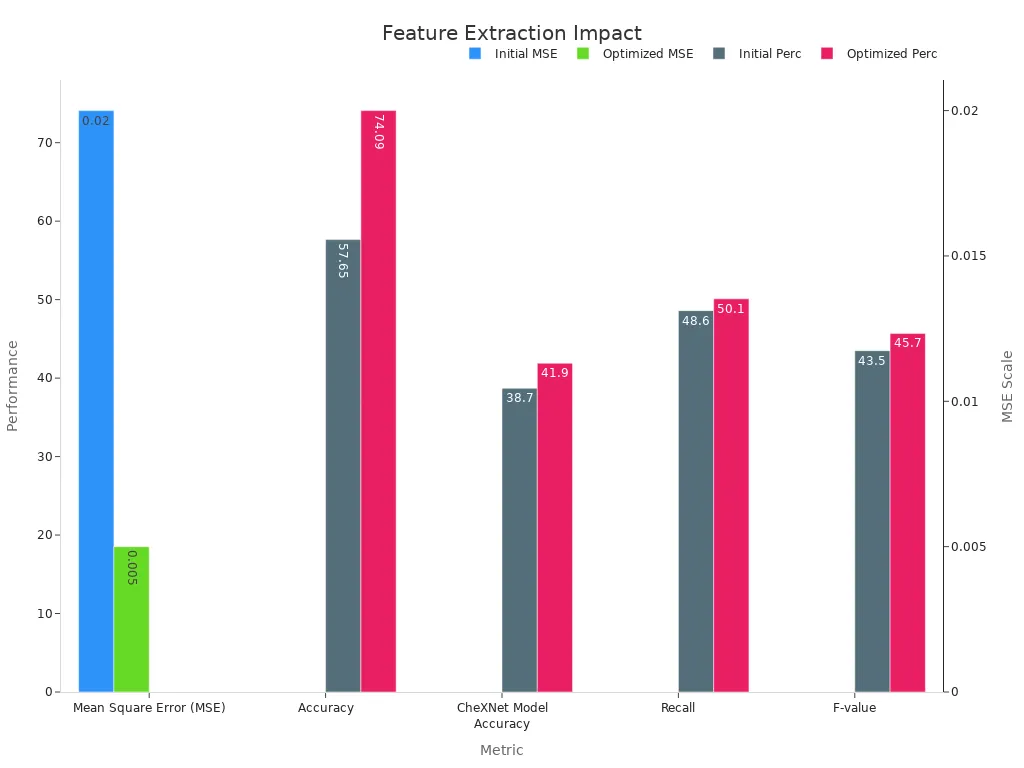

Comparative experiments highlight the impact of feature extraction on recognition accuracy. The table below illustrates how optimized feature extraction techniques lead to measurable improvements:

| Metric | Initial Value | Optimized Value | Improvement |
|---|---|---|---|
| Mean Square Error (MSE) | 0.02 | 0.005 | 75% reduction |
| Accuracy | 57.65% | 74.09% | +16.44 percentage points |
| CheXNet Model Accuracy | 38.7% | 41.9% | +3.2 percentage points |
| Recall | 48.6% | 50.1% | +1.5 percentage points |
| F-value | 43.5% | 45.7% | +2.2 percentage points |
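The accuracy-type metrics in the table are themselves percentages. An increase such as 57.65% to 74.09% can therefore be read as 16.44 percentage points or as a larger relative gain. The small Python helper below computes both from the table's values.

```python
def improvement(initial, optimized):
    """Absolute change (percentage points) and relative change (%) between
    two metrics that are already expressed as percentages."""
    points = optimized - initial
    relative = 100 * points / initial
    return round(points, 2), round(relative, 1)

table = {
    "Accuracy": (57.65, 74.09),
    "CheXNet Model Accuracy": (38.7, 41.9),
    "Recall": (48.6, 50.1),
    "F-value": (43.5, 45.7),
}
for metric, (before, after) in table.items():
    points, relative = improvement(before, after)
    print(f"{metric}: +{points} points, +{relative}% relative")

# MSE is an error, not a percentage, so only the relative change is meaningful.
mse_change = 100 * (0.005 - 0.02) / 0.02
print(f"MSE: {round(mse_change, 1)}% relative")  # -75.0% relative
```

For example, the accuracy row is +16.44 points but about +28.5% relative. Stating which one is meant avoids overstating or understating a result.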

By leveraging optical filters, you can enhance feature extraction even further. For instance, polarizers can reduce glare, making it easier to detect fine details on reflective surfaces. This capability is especially useful in applications like quality control, where precision is paramount.

Optimizing Signal-to-Noise Ratio

The signal-to-noise ratio (SNR) is a key metric for evaluating the quality of visual data. A higher SNR indicates that the useful signal in an image is much stronger than the background noise, leading to better performance in tasks like classification or object detection. Filtering techniques help optimize this ratio by effectively reducing noise while preserving essential details.

An effective noise reduction strategy can substantially raise the SNR, enabling cleaner component extraction. For example, one study evaluating SNR levels used Taguchi's nominal-the-best signal-to-noise ratio to measure accuracy and stability:

$$\mathrm{SN} = 10 \log_{10}\left(\frac{\bar{y}^{2}}{s^{2}}\right)$$

Here, $\bar{y}$ is the mean of the repeated measurements and $s$ is their standard deviation, and the result is expressed in decibels. A high SN means the measurements stay close to their mean, so maximizing it favors settings whose performance is not compromised by variation in other factors.
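The nominal-the-best ratio from the study can be computed directly. The Python sketch below assumes a base-10 logarithm (the usual convention, giving a value in decibels) and the sample standard deviation. The two sets of repeated measurements are hypothetical.

```python
import math
import statistics

def sn_nominal_the_best(measurements):
    """Nominal-the-best S/N ratio in dB: 10 * log10(mean^2 / s^2),
    with s the sample standard deviation of the measurements."""
    y_bar = statistics.mean(measurements)
    s = statistics.stdev(measurements)
    return 10 * math.log10(y_bar ** 2 / s ** 2)

# Hypothetical repeated width measurements (mm) of one part under two filter settings.
setting_a = [10.2, 9.8, 10.1, 9.9, 10.0]
setting_b = [10.6, 9.3, 10.4, 9.5, 10.2]
sn_a = sn_nominal_the_best(setting_a)
sn_b = sn_nominal_the_best(setting_b)
print(round(sn_a, 1), round(sn_b, 1))  # 36.0 24.9
```

Both settings give the same mean (10.0 mm). Setting A scores higher only because its spread is smaller, which is exactly the stability the metric rewards.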

Optimizing SNR is particularly beneficial in challenging environments with poor lighting conditions. By using optical filters like bandpass filters, you can control the wavelengths of light reaching the vision sensors. This adjustment improves image clarity, allowing machine vision systems to perform consistently, even in low-light or high-glare scenarios.
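A rough estimate shows why a bandpass filter helps. It keeps the system's own illumination while rejecting most broadband ambient light. The Python sketch below makes several assumptions: an idealized filter (100% transmission inside its band, 0% outside), a hypothetical 630 nm light source, and flat ambient light from 400 to 700 nm in 1 nm bins. Real filters have sloped edges and lower peak transmission.

```python
def ideal_bandpass(center_nm, fwhm_nm):
    """Idealized bandpass: 100% transmission within center +/- FWHM/2, 0% outside."""
    lo, hi = center_nm - fwhm_nm / 2, center_nm + fwhm_nm / 2
    return lambda wavelength_nm: 1.0 if lo <= wavelength_nm <= hi else 0.0

LED_NM, LED_POWER = 630, 100.0                # hypothetical narrowband illumination
ambient = {w: 1.0 for w in range(400, 701)}   # hypothetical flat ambient light, 1 unit per 1 nm bin

bandpass = ideal_bandpass(center_nm=630, fwhm_nm=20)
ambient_before = sum(ambient.values())
ambient_after = sum(p * bandpass(w) for w, p in ambient.items())
signal_after = LED_POWER * bandpass(LED_NM)

print("signal/ambient without filter:", round(LED_POWER / ambient_before, 2))  # 0.33
print("signal/ambient with filter:   ", round(signal_after / ambient_after, 2))  # 4.76
```

Under these assumptions, the illumination's share of the collected light rises by a factor of 301/21, roughly fourteen. That improvement is why a matched bandpass filter can raise contrast even though it reduces the total light reaching the sensor.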

Benefits of Filtering Machine Vision Systems

Enhanced Precision and Reduced Errors

Filtering machine vision systems improve precision by refining the quality of visual data, and optical filters play a crucial role in this process. They suppress distractions like glare and chromatic aberration, so the system can focus on essential details. For example, a bandpass filter reduces chromatic aberration: because it passes only a narrow band of wavelengths, the lens brings nearly all of the transmitted light to the same focus, producing sharper and more accurate images. This enhanced precision reduces errors in tasks such as defect detection or object classification.

When you use filtering techniques, the system can capture clearer images even in complex environments. A monochrome camera paired with a blue bandpass filter illustrates this advantage. Every one of its pixels responds to the filtered blue light, achieving 90% efficiency compared with just 25% for a color camera, in which only one pixel in four (in a typical Bayer mosaic) sits behind a blue filter. This improvement helps your machine vision system deliver reliable results and minimizes costly mistakes.
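The 25% figure follows from the layout of a typical color sensor. The Python sketch below assumes the common RGGB Bayer mosaic and counts how many pixels sit behind a blue filter. On a monochrome sensor behind a blue bandpass filter, every pixel samples the blue light instead. The sensor size is hypothetical, and the sketch does not model the other losses behind the 90% figure.

```python
def bayer_filter(row, col):
    """Color filter over pixel (row, col) in an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def fraction_of_pixels(color, height, width):
    """Share of sensor pixels that sit behind a filter of the given color."""
    count = sum(1 for r in range(height) for c in range(width) if bayer_filter(r, c) == color)
    return count / (height * width)

H, W = 480, 640  # hypothetical sensor size
print("blue pixels on a color sensor: ", fraction_of_pixels("B", H, W))  # 0.25
print("green pixels on a color sensor:", fraction_of_pixels("G", H, W))  # 0.5
```

Because green gets half the pixels and blue only a quarter, a color camera imaging a blue-lit scene discards most of its resolution. A monochrome sensor does not have this limitation.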

Faster and More Reliable Decision-Making

Filtering techniques speed up decision-making by providing cleaner, more focused data for analysis. Optical filters such as neutral density (ND) filters reduce the amount of light reaching the sensor without changing its color, which improves inspection when a scene is too bright. For instance, when counting LEDs, an ND filter keeps the bright LEDs from saturating the sensor, so each one appears as a separate spot that the system can identify and count accurately. This streamlined process saves time and improves reliability.
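Neutral density filters are specified by optical density (OD), with transmission T = 10^(-OD). The Python sketch below converts OD to transmission and photographic stops. It also estimates the smallest OD that would keep a hypothetical bright LED below an 8-bit sensor's full scale. The LED brightness is an assumed value.

```python
import math

def nd_transmission(od):
    """Fraction of incident light transmitted by an ND filter of optical density od."""
    return 10 ** (-od)

def nd_stops(od):
    """Equivalent exposure reduction in stops (each stop halves the light)."""
    return od / math.log10(2)

def min_od_for(peak_signal, full_scale):
    """Smallest OD that brings peak_signal down to the sensor's full scale."""
    return max(0.0, math.log10(peak_signal / full_scale))

for od in (0.3, 0.9, 2.0):
    print(f"OD {od}: T = {nd_transmission(od):.3f}, {nd_stops(od):.1f} stops")

# Hypothetical: unfiltered, the brightest LED would produce 3000 counts,
# far beyond the 255 an 8-bit sensor can record.
print(f"minimum OD to avoid saturation: {min_od_for(3000, 255):.2f}")  # 1.07
```

ND filters come in fixed steps, so in practice you would choose the next available OD above the computed value.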

By reducing noise and emphasizing critical features, filtering machine vision systems allow you to make faster decisions without compromising accuracy. In barcode scanning applications, an IR bandpass filter eliminates interference from surrounding barcodes. This ensures that the desired barcode is read quickly and correctly, improving operational efficiency in industries like logistics and retail.

Improved Performance in Challenging Environments

Filtering machine vision systems excel in environments where lighting conditions are less than ideal. Optical filters address these challenges by controlling which wavelengths of light reach the sensor. For example, a bandpass filter matched to the system's illumination blocks much of the ambient light, which enhances contrast and sharpness in low-light or high-glare scenarios.

In demanding applications, filtering techniques ensure consistent performance. Whether you're inspecting shiny surfaces or working in dimly lit spaces, optical filters help your system deliver reliable results. The table below highlights how filtering improves outcomes under challenging conditions:

| Application | Performance Improvement | Description |
|---|---|---|
| Chromatic Aberration Correction | Increased sharpness and contrast | A bandpass filter corrects chromatic aberration, resulting in clearer images. |
| Monochrome vs. Color Camera | 90% efficiency vs. 25% | A monochrome camera with a blue bandpass filter utilizes all pixels effectively compared to a color camera. |
| Barcode Interference Reduction | Elimination of interference | An IR bandpass filter allows the desired barcode to be read while blocking interference from other barcodes. |
| LED Counting | Improved inspection capability | A neutral density filter reduces light intensity, enabling accurate counting of LEDs. |

By leveraging filtering techniques, you can overcome environmental challenges and maintain high levels of accuracy and efficiency. With filters chosen to suit the application, these systems keep performing across a wide range of settings.

Challenges and Limitations of Filtering

Computational Costs and Resource Demands

Filtering machine vision systems often require significant computational power. Advanced techniques such as deep learning-based denoising demand high-performance hardware, which increases cost and energy consumption. For example, training a neural network for real-time denoising involves processing large datasets, and running it at full frame rate may require specialized GPUs. These requirements can make it challenging to implement filtering in resource-constrained environments.

Additionally, the complexity of filtering algorithms can slow down processing speeds. In applications like autonomous vehicles, where decisions must be made instantly, this delay could impact performance. To address this, you should consider balancing the level of filtering with the system's processing capabilities.
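A back-of-the-envelope calculation shows how quickly filtering cost grows. The Python sketch below counts multiply-accumulate (MAC) operations for a single dense k × k filter applied to every pixel of a video stream. The resolution and frame rate are hypothetical, and the estimate ignores memory traffic and the cost of any neural network.

```python
def filter_macs_per_second(height, width, k, fps, channels=1):
    """MACs per second for one dense k x k filter over every pixel of every frame."""
    return height * width * k * k * channels * fps

HEIGHT, WIDTH, FPS = 1080, 1920, 60   # hypothetical monochrome stream
frame_budget_ms = 1000 / FPS          # processing time available per frame

for k in (3, 7, 15):
    gmacs = filter_macs_per_second(HEIGHT, WIDTH, k, FPS) / 1e9
    print(f"{k:2d}x{k:<2d} kernel: {gmacs:6.2f} GMAC/s within a {frame_budget_ms:.1f} ms frame budget")
```

Cost grows with k², so the 15 × 15 filter needs 25 times the work of the 3 × 3 one. Separable kernels such as box or Gaussian filters can cut the per-pixel cost from k² to 2k, which is one practical way to balance filtering strength against processing capacity.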

Risk of Overfitting or Data Loss

Overfitting occurs when a filtering system becomes too specific to the training data. This can reduce its ability to generalize to new scenarios. For instance, a filter trained to detect defects in one type of material may struggle with variations in texture or color. You can mitigate this risk by using diverse datasets during training.

Excessive filtering can also lead to data loss. Removing too much noise might eliminate critical details needed for accurate analysis. For example, in medical imaging, over-filtering could obscure subtle features like microcalcifications. To avoid this, you should carefully calibrate the filtering intensity based on the application's requirements.
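The plain-Python sketch below makes the over-filtering risk concrete with a median filter. It filters a hypothetical one-pixel bright spot (a stand-in for a tiny feature such as a microcalcification) and a 3 × 3 cluster with increasingly large windows. A median keeps a feature at a pixel only when feature pixels make up more than half of the window. Raising the radius therefore erases the dot first and then the cluster.

```python
def median_filter(img, radius):
    """Square median filter of side 2*radius+1; borders use available neighbours."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = sorted(
                img[rr][cc]
                for rr in range(max(0, r - radius), min(h, r + radius + 1))
                for cc in range(max(0, c - radius), min(w, c + radius + 1))
            )
            out[r][c] = window[len(window) // 2]
    return out

def patch_with_feature(size):
    """Hypothetical 9x9 background (100) with a centred size x size bright feature (180)."""
    img = [[100] * 9 for _ in range(9)]
    half = size // 2
    for r in range(4 - half, 4 + half + 1):
        for c in range(4 - half, 4 + half + 1):
            img[r][c] = 180
    return img

dot, blob = patch_with_feature(1), patch_with_feature(3)
for radius in (0, 1, 2):
    # centre pixel value after filtering: 180 = feature kept, 100 = feature erased
    print(radius, median_filter(dot, radius)[4][4], median_filter(blob, radius)[4][4])
```

Radius 0 (no filtering) keeps both features, radius 1 erases the single-pixel dot, and radius 2 erases the 3 × 3 cluster too. A reasonable rule is to keep the filter window well below the size of the smallest feature that matters.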

Balancing Filtering Intensity with Application Needs

Finding the right balance between filtering intensity and application needs is crucial. Over-filtering can compromise data quality, while under-filtering may leave too much noise. You can achieve this balance by tailoring the filtering approach to the specific task.

For example, managing lighting conditions is essential for effective filtering. Techniques like using diffuse light sources or strobe lights can enhance image clarity. Adjusting the contrast by repositioning the sample, light, or camera can also improve feature visibility. The table below outlines methods for balancing filtering intensity:

| Method | Description |
|---|---|
| Lighting Techniques | Select appropriate lighting solutions, such as diffuse or strobe lights, based on inspection needs. |
| Ambient Light Management | Use high-power strobing, physical enclosures, or pass filters to control ambient light. |
| Contrast Manipulation | Adjust sample contrast by altering positions or changing lighting structure and color. |

By understanding the physical requirements of your application, you can optimize filtering intensity to achieve the best results.

Real-World Applications of Filtering Machine Vision Systems

Quality Control in Manufacturing

Filtering plays a vital role in ensuring high-quality standards in manufacturing. By enhancing image clarity, it allows you to detect defects like scratches, dents, or misalignments with greater precision. For instance, optical filters can reduce glare on reflective surfaces, making it easier to inspect products like glass or polished metals. This helps ensure that only defect-free items reach your customers.

In automated assembly lines, filtering improves the accuracy of robotic vision systems. It helps these systems identify components quickly and correctly, reducing errors during assembly. You can also use filtering to optimize lighting conditions, ensuring consistent performance even in environments with variable illumination. This makes your quality control processes faster and more reliable.

Autonomous Vehicles and Navigation

Filtering technologies significantly enhance the performance of autonomous vehicles. They improve object detection and navigation by refining the quality of visual data. For example, filtering irrelevant predictions helps the vehicle focus on critical objects, improving trajectory planning and driving quality. Key performance indicators validate these improvements:

- Average Precision (AP) of object detectors increases, ensuring better identification of pedestrians, vehicles, and obstacles.

- PKL (planning KL-divergence) scores, which measure how detection errors affect the planned trajectory, show improved path accuracy.

- Filtering irrelevant predictions reduces errors in navigation, leading to smoother and safer driving experiences.
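One simple way to filter predictions is to discard detections that are low-confidence or unlikely to affect the vehicle's plan. The Python sketch below does this with a confidence threshold, a range limit, and a set of relevant classes. All three are hypothetical choices, and the sketch is not a specific published filtering method.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    score: float       # detector confidence in [0, 1]
    distance_m: float  # estimated distance to the object in meters

RELEVANT_CLASSES = {"pedestrian", "vehicle", "cyclist"}  # hypothetical

def filter_detections(detections, min_score=0.5, max_distance_m=60.0):
    """Keep confident, nearby detections of classes the planner cares about."""
    return [
        d for d in detections
        if d.label in RELEVANT_CLASSES
        and d.score >= min_score
        and d.distance_m <= max_distance_m
    ]

detections = [
    Detection("pedestrian", 0.91, 12.0),
    Detection("vehicle", 0.35, 20.0),    # low confidence: dropped
    Detection("vehicle", 0.88, 140.0),   # beyond the planning range: dropped
    Detection("billboard", 0.95, 30.0),  # class not relevant here: dropped
    Detection("cyclist", 0.72, 25.0),
]
print([d.label for d in filter_detections(detections)])  # ['pedestrian', 'cyclist']
```

Thresholds like these trade missed objects against false alarms. In practice they would be tuned against metrics such as the AP and PKL scores mentioned above.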

By optimizing the signal-to-noise ratio, filtering ensures that autonomous systems perform reliably, even in challenging conditions like low light or heavy rain. This makes your vehicle safer and more efficient on the road.

Medical Imaging and Diagnostics

In medical imaging, filtering improves diagnostic accuracy by enhancing image quality. It removes noise and highlights critical features, making it easier for you to identify abnormalities. Clinical data shows that integrating imaging with electronic health records (EHR) further boosts accuracy. Multimodal deep learning models that combine these data sources outperform single-modality models. Accuracy improvements range from 1.2% to 27.7%, while AUROC scores increase by 0.02 to 0.16.

For example, filtering techniques help radiologists detect subtle features like microcalcifications in mammograms. This leads to earlier and more accurate diagnoses. In ultrasound imaging, filtering reduces speckle noise, providing clearer visuals of internal organs. These advancements contribute to more reliable diagnoses and better healthcare outcomes.

Surveillance and Security Systems

Filtering plays a critical role in enhancing the performance of surveillance and security systems. It improves image clarity, making it easier for you to monitor and analyze activities in real time. By reducing noise and optimizing light conditions, filtering ensures that your security cameras capture clear and reliable visuals, even in challenging environments.

Different types of filters serve specific purposes in surveillance applications. For example, neutral density filters reduce the amount of light entering the camera. This feature is especially useful in bright sunlight, where overexposure can obscure important details. Polarizing filters, on the other hand, minimize glare from shiny surfaces, producing sharper images with higher contrast. The table below highlights these benefits:

| Filter Type | Purpose | Benefits |
|---|---|---|
| Neutral Density | Reduces light entering the camera | Useful in bright sunlight |
| Polarizing | Reduces glare from shiny surfaces | Produces clearer images with higher contrast |

Modern surveillance systems also use advanced techniques to adapt to varying lighting conditions. Day/night imaging employs an IR-cut filter to block infrared light during the day, allowing for accurate color images. At night, the filter is removed, enabling the camera to capture clear black-and-white visuals by utilizing both visible and infrared light. This ensures consistent performance around the clock.
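Switching between day and night modes is usually driven by a light-level reading. The Python sketch below adds hysteresis, meaning two thresholds instead of one, so the IR-cut filter does not toggle back and forth when light hovers near a single cut-off. The class, the lux thresholds, and the readings are hypothetical and are not taken from any camera's firmware.

```python
class DayNightSwitch:
    """Hypothetical IR-cut filter controller with hysteresis: two thresholds
    keep the camera from flickering between modes near a single cut-off."""

    def __init__(self, to_night_lux=5.0, to_day_lux=20.0):
        self.to_night_lux = to_night_lux
        self.to_day_lux = to_day_lux
        self.ir_cut_in = True  # start in day mode: filter blocks IR, color image

    def update(self, ambient_lux):
        if self.ir_cut_in and ambient_lux < self.to_night_lux:
            self.ir_cut_in = False  # night: filter out of the path, visible + IR, monochrome
        elif not self.ir_cut_in and ambient_lux > self.to_day_lux:
            self.ir_cut_in = True   # bright again: filter back in, color image
        return "day (color)" if self.ir_cut_in else "night (mono)"

switch = DayNightSwitch()
readings = [50, 12, 4, 8, 15, 25, 18]  # hypothetical lux readings over time
modes = [switch.update(lux) for lux in readings]
print(modes)
```

Readings between the two thresholds (8, 15, and 18 here) leave the current mode unchanged. The camera only switches when the light level clearly crosses into the other regime.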

Noise Solution: Techniques like FDF (Frequency Divided Filter) and 3D-MNR (3D-Multi-process Noise Reduction) are essential for removing noise under low illumination, which is crucial for maintaining image clarity.

Noise reduction methods further enhance the quality of surveillance footage. These techniques eliminate visual interference, allowing you to detect subtle movements or objects in low-light conditions. Whether monitoring a parking lot or securing a high-risk facility, filtering ensures that your security system delivers accurate and actionable insights.
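FDF and 3D-MNR are vendor techniques whose internals are not described here. The temporal part of 3D noise reduction, which typically combines filtering within a frame with filtering across frames, can still be illustrated generically. For a static scene, averaging each pixel over successive frames shrinks random noise. The Python sketch below applies an exponential moving average to hypothetical noisy frames of a dim, static scene. Moving objects would leave trails, so this simple form suits static parts of a scene.

```python
import random

def temporal_denoise(frames, alpha=0.2):
    """Per-pixel exponential moving average over frames (static scene assumed)."""
    state = list(frames[0])
    for frame in frames[1:]:
        state = [alpha * new + (1 - alpha) * old for new, old in zip(frame, state)]
    return state

def rms_error(pixels, truth):
    """Root-mean-square deviation of the pixels from the true level."""
    return (sum((p - truth) ** 2 for p in pixels) / len(pixels)) ** 0.5

random.seed(0)
TRUE_LEVEL = 40.0  # hypothetical brightness of a dim, static scene
frames = [[TRUE_LEVEL + random.gauss(0, 8) for _ in range(100)] for _ in range(50)]

single = rms_error(frames[-1], TRUE_LEVEL)
averaged = rms_error(temporal_denoise(frames), TRUE_LEVEL)
print(f"single frame RMS error: {single:.1f}, temporally filtered: {averaged:.1f}")
```

With `alpha = 0.2`, the steady-state noise standard deviation is σ·√(α/(2 − α)), about a third of the single-frame noise. Smaller values of `alpha` suppress more noise but respond more slowly to real changes in the scene.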

Filtering in machine vision systems enhances accuracy by improving image quality and optimizing data processing. You can rely on these systems to reduce errors and boost efficiency across industries.

- Advancements in automation are increasing the demand for high-precision imaging filters, especially in factory automation and semiconductor manufacturing.

- Industry 4.0 drives the need for smarter quality control systems, further expanding the role of machine vision technologies.

- AI and machine learning are enabling smart filters that self-calibrate and adapt, making filtering even more effective.

As technology evolves, filtering will remain essential for innovation in machine vision systems.

FAQ

What is the main purpose of filtering in machine vision systems?

Filtering improves image quality by removing noise and enhancing critical features. This ensures that your machine vision system processes data accurately, leading to better decision-making in tasks like defect detection, object recognition, and navigation.

How does filtering reduce noise in images?

Filtering uses techniques like deep learning-based denoising or optical filters to eliminate unwanted noise. These methods help you achieve cleaner visuals, making it easier for the system to focus on essential details without interference.

Can filtering work in low-light environments?

Yes, filtering adapts to low-light conditions by optimizing the signal-to-noise ratio. Optical filters like bandpass filters enhance contrast and clarity, ensuring your system performs reliably even in challenging lighting scenarios.

Are there any risks associated with over-filtering?

Over-filtering can remove critical details, reducing the accuracy of your system. For example, in medical imaging, excessive filtering might obscure subtle features. You can avoid this by calibrating the filtering intensity to match your specific application needs.

What industries benefit the most from filtering machine vision systems?

Industries like manufacturing, healthcare, automotive, and security rely heavily on filtering. It improves quality control, enhances diagnostic accuracy, boosts navigation in autonomous vehicles, and ensures clear visuals in surveillance systems.

Tip: Tailor filtering techniques to your industry’s unique challenges for optimal results.
