What Is a K-Nearest Neighbor Machine Vision System in 2025?

A k-nearest neighbor (KNN) machine vision system uses the k-nearest neighbor algorithm to interpret and classify visual data. In 2025, advances in computer vision have made it more effective for tasks like image recognition, and the growing need for real-time decision-making has driven improvements in its performance and efficiency.

Key Takeaways

- The k-nearest neighbor (KNN) method classifies an image by finding the 'k' most similar labeled examples and taking a majority vote. It works well for tasks like image recognition.

- The distance metric, such as Euclidean distance, determines which examples count as neighbors. Choosing a metric that suits the data can improve accuracy.

- KNN is simple and flexible, so it applies to many vision tasks, such as object detection and facial recognition.

How the KNN Algorithm Works in Machine Vision

Understanding the KNN Algorithm


The k-nearest neighbor algorithm is a simple yet powerful tool in supervised machine learning. It operates by identifying the 'k' closest data points to a given input and using their characteristics to make predictions. The 'k' value, which you can adjust, determines how many neighbors the algorithm considers. For example, if k equals 3, the algorithm evaluates the three nearest neighbors to classify or predict the input.

This method relies on the principle that similar data points tend to cluster together. In classification tasks, the algorithm assigns the input to the most common class among its neighbors. For regression tasks, it calculates the average value of the neighbors. The flexibility of the k-nearest neighbor approach makes it highly effective for various machine vision applications.
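The voting and averaging steps described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the function name `knn_predict` and the toy feature vectors are hypothetical, and Euclidean distance is assumed.

```python
from collections import Counter

import numpy as np


def knn_predict(train_X, train_y, query, k=3, task="classify"):
    """Predict a label (majority vote) or a value (mean) from the k nearest rows."""
    train_X = np.asarray(train_X, dtype=float)
    query = np.asarray(query, dtype=float)
    # Euclidean distance from the query to every stored example
    dists = np.linalg.norm(train_X - query, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(dists, kind="stable")[:k]
    neighbors = [train_y[i] for i in nearest]
    if task == "classify":
        # Most common class among the neighbors
        return Counter(neighbors).most_common(1)[0][0]
    # Regression: average of the neighbors' values
    return float(np.mean(neighbors))


# Toy 2-D feature vectors (hypothetical values, for illustration only)
features = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [5.0, 5.0], [5.2, 4.9]]
labels = ["cat", "cat", "cat", "dog", "dog"]
values = [10.0, 12.0, 11.0, 50.0, 52.0]

predicted_label = knn_predict(features, labels, [1.1, 1.0], k=3)
predicted_value = knn_predict(features, values, [1.1, 1.0], k=3, task="regress")
```

With k set to 3, the query's three closest examples all belong to the first cluster, so the classification returns their shared label and the regression returns the mean of their values.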


Distance metrics play a critical role in the KNN algorithm. They measure how close or far data points are from one another. The most commonly used metric is Euclidean distance, which calculates the straight-line distance between two points in a multi-dimensional space. Other metrics, such as Manhattan distance or the more general Minkowski distance (which includes both Euclidean and Manhattan as special cases), may suit some datasets and applications better.
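As a rough sketch of how these metrics relate, the Minkowski distance of order p reduces to Manhattan distance at p = 1 and Euclidean distance at p = 2. The function name `minkowski_distance` and the sample points are illustrative assumptions.

```python
import numpy as np


def minkowski_distance(a, b, p=2.0):
    """Minkowski distance of order p; p=1 is Manhattan, p=2 is Euclidean."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))


point_a = [0.0, 0.0]
point_b = [3.0, 4.0]
euclidean = minkowski_distance(point_a, point_b, p=2)  # straight-line distance
manhattan = minkowski_distance(point_a, point_b, p=1)  # sum of absolute differences
```

For these two points the Euclidean distance is 5 (a 3-4-5 triangle) and the Manhattan distance is 7, which shows how the same pair of points can look closer or farther depending on the metric.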

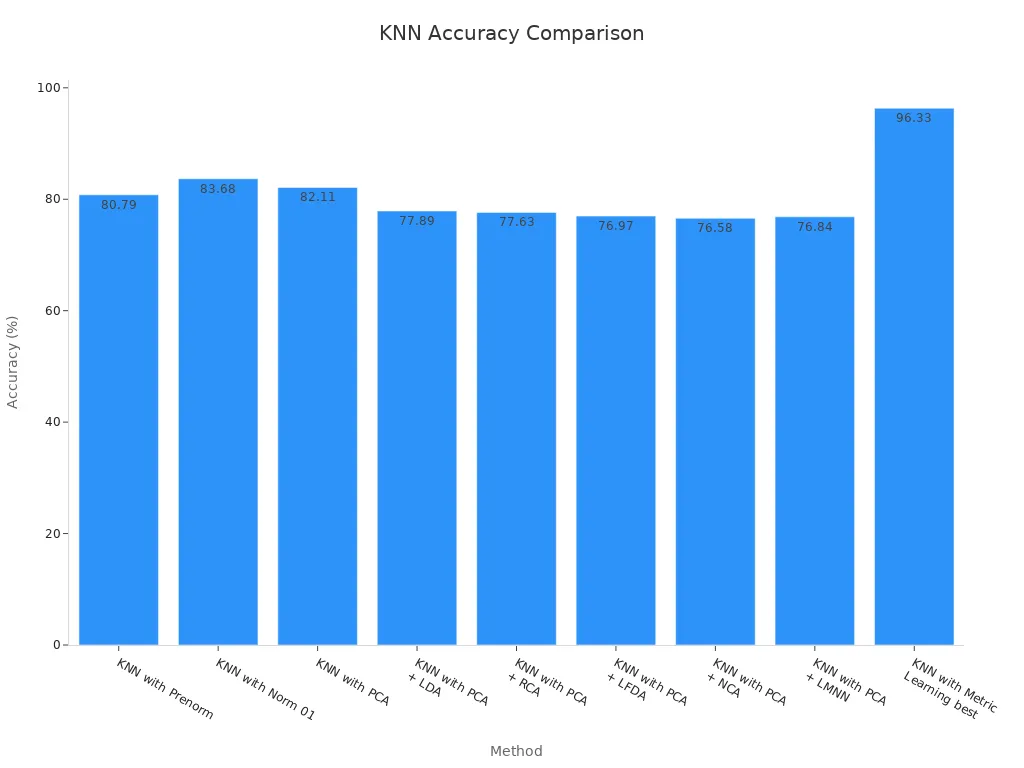

The choice of distance metric directly impacts the algorithm's accuracy. For instance, empirical studies show that metric learning techniques, which learn a distance function from the data, can significantly improve classification accuracy. The table below compares several distance-based methods:

| Method | Classification Accuracy |
|---|---|
| KNN with Prenorm | 80.79% |
| KNN with Norm 01 | 83.68% |
| KNN with PCA | 82.11% |
| KNN with PCA + LDA | 77.89% |
| KNN with PCA + RCA | 77.63% |
| KNN with PCA + LFDA | 76.97% |
| KNN with PCA + NCA | 76.58% |
| KNN with PCA + LMNN | 76.84% |
| KNN with Metric Learning (best) | 96.33% |

As the table and chart illustrate, the best metric learning configuration (96.33%) clearly outperforms the other methods, although several PCA-based combinations score below simple normalization (83.68%). The choice of metric therefore needs to be validated on the dataset at hand.
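The source does not define the "Norm 01" row in the table above. Assuming it refers to scaling each feature to the range [0, 1], a common preprocessing step before distance computation, a minimal sketch might look like this. The helper name `min_max_scale` and the sample values are hypothetical.

```python
import numpy as np


def min_max_scale(X):
    """Scale each feature column to [0, 1] (assumed meaning of 'Norm 01')."""
    X = np.asarray(X, dtype=float)
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0  # avoid dividing by zero for constant features
    return (X - lo) / span


# Two features on very different scales (hypothetical values)
raw = np.array([[0.2, 1000.0], [0.9, 1010.0], [0.25, 3000.0]])
scaled = min_max_scale(raw)
```

Without scaling, the second feature would dominate any Euclidean distance simply because its numeric range is thousands of times larger.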

KNN in Machine Vision Tasks


The KNN algorithm suits machine vision tasks because of its simplicity and adaptability. In image classification, it assigns labels to images by comparing their features with those of labeled examples. For instance, if you input an image of a cat, the algorithm finds the most similar images in the dataset and classifies the input as a cat if most of those neighbors are labeled as cats.

In object detection, the algorithm pinpoints specific objects within an image or video frame. It uses feature matching to compare patterns in the input data with those in the training set. This makes the k-nearest neighbor approach particularly effective for applications like facial recognition and medical imaging.
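Feature matching can be sketched as a nearest-neighbor search over descriptor vectors. The example below assumes each image patch has already been reduced to a numeric descriptor. The descriptors, their dimensionality, and the function name `match_descriptors` are hypothetical.

```python
import numpy as np


def match_descriptors(query_desc, train_desc):
    """For each query descriptor, find the nearest training descriptor."""
    query_desc = np.asarray(query_desc, dtype=float)
    train_desc = np.asarray(train_desc, dtype=float)
    # Pairwise Euclidean distances, shape (num_query, num_train)
    diffs = query_desc[:, None, :] - train_desc[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    # Index of the closest training descriptor and its distance, per query
    return dists.argmin(axis=1), dists.min(axis=1)


# Toy 3-D descriptors (hypothetical values)
train = [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
query = [[0.9, 0.1, 0.0], [0.1, 0.0, 0.8]]
indices, distances = match_descriptors(query, train)
```

Here the first query descriptor matches training descriptor 1 and the second matches training descriptor 0. A real pipeline would usually also reject matches whose distance is too large.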


Labeled datasets are essential for the k-nearest neighbor algorithm. These datasets pair input data with corresponding labels. Unlike most supervised models, KNN has no separate training phase: it stores the labeled examples and compares each new input against them at prediction time. For example, a dataset might include thousands of images labeled as "dog," "cat," or "car." The algorithm uses these examples to classify new, unlabeled images.

However, the quality of the labeled data significantly affects the algorithm's performance. High-quality, diverse datasets ensure better generalization and accuracy. Recent advancements in supervised machine learning have also improved the efficiency of data labeling, making it easier to train the k-nearest neighbor algorithm for complex machine vision tasks.

Applications of K-Nearest Neighbor Machine Vision System in 2025

Object Detection and Recognition


The k-nearest neighbor machine vision system plays a vital role in object detection and recognition. You can use it to identify and classify objects in images and videos by comparing their features with those in a labeled dataset. For example, if you want to detect a car in a video, the algorithm analyzes the input frame and matches it with similar patterns in the dataset. This process allows the system to classify the object as a car accurately.

In 2025, the k-nearest neighbor algorithm has become more efficient thanks to advances in machine vision technologies. Its ability to handle diverse datasets makes it a candidate for applications like medical imaging, where precision is critical. For instance, one KNN-based model reportedly achieved 97.8% accuracy in object detection tasks, with a reported RMSE of 0.2476 and an MAE of 0.542. Results like these suggest the algorithm can be effective in real-world scenarios.


Modern hardware and software innovations have significantly improved the performance of the k-nearest neighbor machine vision system. High-performance GPUs and TPUs process large datasets faster, reducing the time required for classification. Software advances, such as optimized distance calculations, reduce computation further, and metric learning techniques improve accuracy, with reported averages including a precision of 95.2% and an F-score of 94.9%.

These improvements make the k-nearest neighbor algorithm a practical solution for industries requiring real-time object detection. Whether you're working in retail, healthcare, or security, the system's enhanced efficiency ensures reliable results.

Facial Recognition Systems


Facial recognition systems rely heavily on the k-nearest neighbor algorithm for security and authentication. When you use a facial recognition system, the algorithm compares your facial features with those stored in a database. It identifies the closest matches using distance metrics like Euclidean distance. This process ensures accurate identification, making it ideal for applications like unlocking smartphones or verifying identities at secure locations.
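The comparison step can be illustrated with a small sketch. It assumes that each face has already been converted to a numeric feature vector by some upstream model, which is not shown. The `max_distance` threshold for rejecting unknown faces is an added assumption rather than something the text specifies, and the names and values are hypothetical.

```python
import numpy as np


def identify_face(embedding, gallery, names, max_distance=0.6):
    """Return the closest enrolled name, or None if nobody is close enough."""
    gallery = np.asarray(gallery, dtype=float)
    embedding = np.asarray(embedding, dtype=float)
    # Euclidean distance from the probe face to every enrolled face
    dists = np.linalg.norm(gallery - embedding, axis=1)
    best = int(np.argmin(dists))
    # Assumed rejection rule: treat distant matches as unknown
    return names[best] if dists[best] <= max_distance else None


# Toy enrolled feature vectors (hypothetical values)
gallery = [[0.1, 0.9, 0.3], [0.8, 0.2, 0.5]]
names = ["alice", "bob"]

match = identify_face([0.12, 0.88, 0.31], gallery, names)
stranger = identify_face([5.0, 5.0, 5.0], gallery, names)
```

The first probe lies very close to the first enrolled vector and is accepted, while the second is far from everyone and returns `None`.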

In 2025, the k-nearest neighbor machine vision system has become a cornerstone of facial recognition technology. Its simplicity and adaptability allow it to handle large datasets efficiently. For example, airports now use KNN-based systems to verify passengers' identities, speeding up the boarding process while maintaining high security. These systems also benefit from improved labeled datasets, which enhance their ability to recognize diverse facial features.

Autonomous Systems


Autonomous systems, such as self-driving cars and drones, depend on the k-nearest neighbor machine vision system for navigation. The algorithm helps these systems interpret visual data from cameras and sensors. For instance, when a self-driving car encounters a stop sign, the algorithm compares the sign's features with those in its training dataset. It then classifies the object as a stop sign, enabling the car to respond appropriately.

In 2025, advancements in machine vision have made KNN-based navigation systems more reliable. High-quality labeled datasets and improved hardware allow these systems to process visual data in real time. Drones equipped with KNN algorithms can now navigate complex environments, such as forests or urban areas, with greater accuracy. This capability makes them invaluable for tasks like search and rescue or package delivery.

The k-nearest neighbor algorithm's adaptability ensures its continued relevance in autonomous systems. Whether you're developing a self-driving car or a delivery drone, this technology provides a robust foundation for vision-based navigation.

Advantages and Limitations of KNN in Machine Vision

Advantages of KNN

Simplicity and ease of implementation

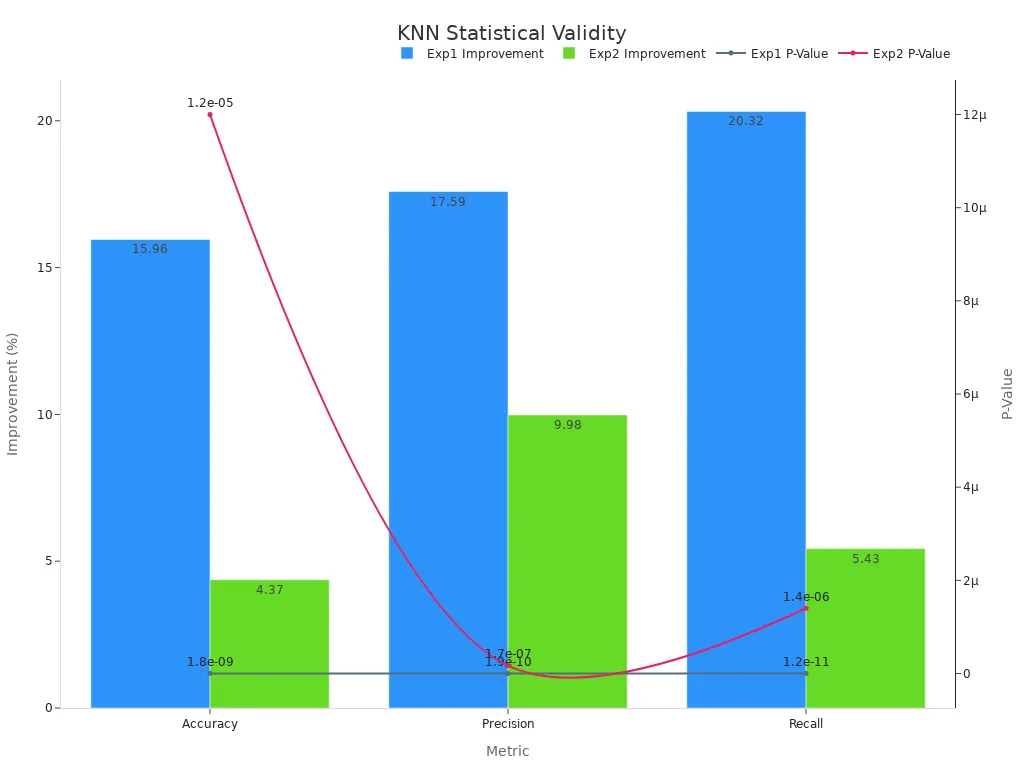

The KNN algorithm stands out for its simplicity. You don’t need complex mathematical models or extensive parameter tuning to implement it. Its straightforward approach makes it accessible for beginners and efficient for quick prototyping. Comparative studies also show that the IMKNN variant improves accuracy by 15.96% across multiple machine vision applications, as illustrated below:

| Metric | IMKNN Improvement | P-value |
|---|---|---|
| Accuracy | 15.96% | 1.8e-9 |
| Precision | 17.59% | 1.9e-10 |
| Recall | 20.32% | 1.2e-11 |

Adaptability to various machine vision tasks

You can apply the KNN algorithm to diverse machine vision tasks, including image classification, object detection, and facial recognition. Its adaptability stems from its ability to work with different types of labeled datasets. Whether you’re analyzing medical images or navigating autonomous systems, KNN provides reliable results across industries.

Effectiveness in low-dimensional data scenarios

The KNN algorithm performs well on low-dimensional data, where distances between points remain meaningful. It is also used in scenarios where examples of the class of interest are scarce, such as healthcare diagnostics for rare diseases or fraud detection in finance. Other applications include predictive maintenance in manufacturing and threat detection in cybersecurity.

- Enhances diagnostics for rare diseases, enabling quicker treatment plans.

- Accurately identifies fraudulent activities in large datasets with few instances of fraud.

- Predicts equipment failures with limited historical data.

- Detects evolving cyber threats with minimal examples.

Limitations of KNN

Scalability issues with large datasets

The KNN algorithm struggles with scalability. A brute-force search compares each query against every stored example, so the cost of a single prediction grows in proportion to the dataset size. This limitation makes it less suitable for real-time applications involving massive datasets.
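To make the cost concrete, the sketch below computes every query-to-example distance at once. It uses the identity ||q - t||² = ||q||² + ||t||² - 2 q·t so that the bulk of the work becomes one matrix multiplication, the kind of operation GPUs accelerate well. The array sizes and names are arbitrary assumptions, and this is still a brute-force search: the distance matrix has one entry per (query, example) pair.

```python
import numpy as np


def pairwise_sq_dists(queries, train):
    """Squared Euclidean distances via ||q||^2 + ||t||^2 - 2 q.t, shape (m, n)."""
    q_sq = np.sum(queries ** 2, axis=1)[:, None]
    t_sq = np.sum(train ** 2, axis=1)[None, :]
    sq = q_sq + t_sq - 2.0 * queries @ train.T
    return np.maximum(sq, 0.0)  # clip tiny negatives caused by rounding


rng = np.random.default_rng(0)
train = rng.random((1000, 16))   # 1000 stored examples, 16 features each
queries = rng.random((5, 16))    # 5 new inputs to classify

sq_dists = pairwise_sq_dists(queries, train)

# argpartition selects the k smallest per row without fully sorting each row
k = 3
nearest_idx = np.argpartition(sq_dists, k, axis=1)[:, :k]
```

Doubling the number of stored examples doubles the width of `sq_dists`, which is why large datasets typically call for approximate or index-based neighbor search instead.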

Challenges with high-dimensional data (curse of dimensionality)

High-dimensional data poses a major challenge for KNN systems. The feature space becomes sparse, and the distances to the nearest and farthest points become nearly equal, so proximity carries less information. Overfitting becomes a common issue because the algorithm relies entirely on those distances.

- Analysis becomes more complex and computationally expensive.

- Predictions lose accuracy due to sparsity in the feature space.

- Overfitting reduces the algorithm’s ability to handle unseen data effectively.
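A small experiment can illustrate the sparsity problem listed above. The sketch draws uniformly random points (an assumption chosen purely for illustration) and compares the nearest and farthest distances from a random query in a low-dimensional and a high-dimensional space. The function name is hypothetical.

```python
import numpy as np


def nearest_to_farthest_ratio(dim, num_points=500, seed=0):
    """Ratio of the smallest to the largest distance from a random query."""
    rng = np.random.default_rng(seed)
    points = rng.random((num_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(points - query, axis=1)
    return float(dists.min() / dists.max())


ratio_low_dim = nearest_to_farthest_ratio(dim=2)
ratio_high_dim = nearest_to_farthest_ratio(dim=1000)
```

In two dimensions the nearest point is far closer than the farthest one, so the ratio is small. In a thousand dimensions the ratio moves toward 1, meaning the "nearest" neighbor is barely nearer than any other point.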

Dependence on high-quality labeled data for optimal performance

The KNN algorithm relies on labeled datasets for training. Poor-quality or insufficient labels can lead to inaccurate predictions. You must ensure that datasets are diverse and well-annotated to achieve optimal results.

The k-nearest neighbor machine vision system helps you interpret and classify visual data effectively. Its simplicity makes it easy to implement, while its adaptability allows it to handle diverse tasks like object detection and facial recognition. Despite challenges like scalability and high-dimensional data, KNN remains a reliable choice due to its strong performance metrics.

| Metric | Class 0 | Class 1 | Macro Avg | Weighted Avg |
|---|---|---|---|---|
| Precision | 0.95 | 0.88 | 0.92 | 0.92 |
| Recall | 0.88 | 0.96 | 0.92 | 0.91 |
| F1-score | 0.91 | 0.92 | 0.91 | 0.91 |

Overall accuracy: 0.91
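For readers unfamiliar with these metrics, the sketch below shows how per-class precision, recall, and F1-score, along with macro averages and overall accuracy, are derived from predictions. The labels are made-up toy data and do not reproduce the figures reported above. The function name is hypothetical.

```python
import numpy as np


def classification_report_dict(y_true, y_pred, classes):
    """Per-class precision, recall, and F1, plus macro averages and accuracy."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    report = {}
    for c in classes:
        tp = int(np.sum((y_pred == c) & (y_true == c)))  # true positives
        fp = int(np.sum((y_pred == c) & (y_true != c)))  # false positives
        fn = int(np.sum((y_pred != c) & (y_true == c)))  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1}
    # Macro average: unweighted mean over classes
    report["macro_avg"] = {
        m: float(np.mean([report[c][m] for c in classes]))
        for m in ("precision", "recall", "f1")
    }
    report["accuracy"] = float(np.mean(y_true == y_pred))
    return report


# Toy ground truth and predictions (hypothetical)
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 0]
report = classification_report_dict(y_true, y_pred, classes=[0, 1])
```

A weighted average would instead weight each class by its number of true examples, which matters when classes are imbalanced.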

Future projections suggest that KNN will increasingly be combined with deep learning techniques, enhancing adaptability in machine vision applications. Studies also report strong results in specific applications, such as 92.59% accuracy in assessing meat freshness, outperforming other algorithms. KNN is likely to keep evolving as computational power and data processing improve.

FAQ

What makes KNN suitable for machine vision tasks?

KNN works well because it uses simple distance metrics to classify visual data. Its adaptability allows you to apply it to diverse tasks like object detection.

Tip: Use high-quality labeled datasets to improve KNN's accuracy in machine vision applications.

How does KNN handle large datasets in 2025?

Modern GPUs and TPUs process large datasets faster. Optimized software reduces computational effort, making KNN more efficient for real-time applications.

Can KNN be integrated with other algorithms?

Yes, you can combine KNN with deep learning techniques. This integration enhances adaptability and improves performance in complex machine vision scenarios.

Note: Hybrid models often outperform standalone algorithms in accuracy and scalability.
