Key Techniques for Domain Adaptation in Vision Systems

A domain adaptation machine vision system helps computer vision models perform well across diverse environments. It lets a model adjust what it has learned when it encounters new or unseen data. The technique tackles domain shift, where differences between the training and deployment data distributions degrade performance. By bridging these gaps, domain adaptation keeps vision systems reliable in real-world scenarios. Machine learning is central to the process, because the model itself must adapt and keep learning. The result is a robust, scalable system that can handle dynamic challenges in computer vision applications.

Key Takeaways

- Domain adaptation helps vision systems work well in new environments. It adjusts models to new data without extensive retraining.

- Supervised domain adaptation uses labeled data from both the source and the target domain. This improves accuracy in areas like medical imaging.

- Unsupervised domain adaptation learns from data without labels. It is helpful when labeled data is hard to find, like in self-driving cars.

- Self-supervised domain adaptation creates its own labels through pretext tasks, such as predicting image rotations. This reduces manual labeling and helps models generalize better.

- Using domain adaptation in factories keeps performance steady on different lines. It boosts efficiency and lowers costs.

Overview of Domain Adaptation Techniques

Domain adaptation techniques play a vital role in ensuring computer vision systems perform effectively across diverse environments. These techniques address the challenges posed by differences in data distribution between source and target domains. Below, you’ll explore key approaches to domain adaptation, each tailored to specific scenarios.

Supervised Domain Adaptation

Supervised domain adaptation relies on labeled data from both the source and target domains. By leveraging this labeled information, you can train models to align features across domains and improve performance. This approach works well when labeled data is available in the target domain, enabling precise feature alignment and reducing distribution gaps.

For example, in medical imaging, supervised domain adaptation can help models identify abnormalities in X-rays or MRIs acquired at different hospitals. Because labeled data from both domains is used, the model learns to handle variations in imaging protocols.

Unsupervised Domain Adaptation

Unsupervised domain adaptation (UDA) focuses on transferring knowledge from a label-rich source domain to an unlabeled target domain. This method is particularly useful when labeled data in the target domain is unavailable. UDA techniques aim to align feature distributions between domains, ensuring the model performs well despite the lack of supervision.
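One common way to "align feature distributions" is to penalize a distance between source and target features. The sketch below, assuming features have already been extracted into NumPy arrays, computes a biased estimate of the squared Maximum Mean Discrepancy (MMD) with a Gaussian kernel. The function names and the bandwidth `sigma` are illustrative choices, not part of any specific UDA method described here.

```python
import numpy as np

def rbf_kernel(a: np.ndarray, b: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    # Squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b
    sq = (a ** 2).sum(axis=1)[:, None] + (b ** 2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

def mmd2(source: np.ndarray, target: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of the squared MMD between two sets of feature vectors."""
    k_ss = rbf_kernel(source, source, sigma).mean()
    k_tt = rbf_kernel(target, target, sigma).mean()
    k_st = rbf_kernel(source, target, sigma).mean()
    return float(k_ss + k_tt - 2.0 * k_st)

rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(200, 8))        # source-domain features
tgt_near = rng.normal(0.0, 1.0, size=(200, 8))   # target drawn from the same distribution
tgt_far = rng.normal(1.5, 1.0, size=(200, 8))    # shifted target domain
print(f"MMD^2, no shift:      {mmd2(src, tgt_near, sigma=4.0):.4f}")
print(f"MMD^2, shifted target: {mmd2(src, tgt_far, sigma=4.0):.4f}")
```

In a training loop, a term like `mmd2` would be added to the source classification loss so that the feature extractor is pushed toward features whose source and target distributions look alike. The bandwidth matters: a `sigma` that is too small for the feature dimension drives every off-diagonal kernel value toward zero and hides the shift.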

Statistical analyses highlight several factors influencing UDA performance:

| Factor | Observation |
|---|---|
| Backbone Architecture | UDA methods depend on the choice of backbone architecture, impacting results. |
| Data Utilization | Efficiency in using unlabeled data remains limited in UDA methods. |
| In-task Pre-training | Pre-training enhances performance but lacks comprehensive theoretical explanation. |
| Sampling Techniques | Performance stabilizes after using a limited amount of unlabeled data. |

By understanding these factors, you can optimize UDA methods for tasks like autonomous driving, where labeled data from every environment is impractical.

Semi-Supervised Domain Adaptation

Semi-supervised domain adaptation (SSDA) combines labeled data from the source domain with a small amount of labeled data from the target domain. This approach bridges the gap between supervised and unsupervised methods, addressing domain shift issues effectively. SSDA excels in scenarios where obtaining a small amount of labeled target data is feasible.
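A minimal sketch of an SSDA-style objective, assuming a classifier that outputs logits: supervised cross-entropy on labeled source and labeled target samples, plus an entropy term on unlabeled target samples that rewards confident target predictions. This particular combination and the weight `lam` are assumptions for illustration; published SSDA methods use their own (often adversarial) variants of the unlabeled term.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(logits: np.ndarray, labels: np.ndarray) -> float:
    """Mean negative log-likelihood of the true labels."""
    probs = softmax(logits)
    return float(-np.log(probs[np.arange(len(labels)), labels] + 1e-12).mean())

def mean_entropy(logits: np.ndarray) -> float:
    """Mean prediction entropy; lower means more confident predictions."""
    probs = softmax(logits)
    return float(-(probs * np.log(probs + 1e-12)).sum(axis=1).mean())

def ssda_loss(src_logits, src_labels, tgt_logits, tgt_labels, unl_logits, lam=0.1):
    """Labeled source CE + labeled target CE + lam * entropy on unlabeled target data."""
    return (cross_entropy(src_logits, src_labels)
            + cross_entropy(tgt_logits, tgt_labels)
            + lam * mean_entropy(unl_logits))

rng = np.random.default_rng(0)
loss = ssda_loss(
    src_logits=rng.normal(size=(16, 5)), src_labels=rng.integers(0, 5, 16),  # many source labels
    tgt_logits=rng.normal(size=(4, 5)), tgt_labels=rng.integers(0, 5, 4),    # a few target labels
    unl_logits=rng.normal(size=(32, 5)), lam=0.1,                             # unlabeled target batch
)
print(f"combined SSDA loss: {loss:.3f}")
```

The small labeled target term is what distinguishes this from UDA: it anchors the classifier directly in the target domain instead of relying on distribution alignment alone.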

Key comparisons between SSDA and other strategies include:

- SSDA uses both labeled source data and limited labeled target data, ensuring better domain alignment.

- UDA transfers knowledge to an unlabeled target domain but may suffer from alignment deviations.

- Semi-supervised learning (SSL) improves generalization with unlabeled data but assumes that labeled and unlabeled data share the same distribution.

- SSDA outperforms UDA and SSL in medical image segmentation tasks by leveraging labeled target data.

- Dual-level multi-scale alignment methods in SSDA improve cross-domain feature learning.

For instance, SSDA is well suited to industrial quality control, where a small set of labeled images from a new production line can refine model accuracy.

Self-Supervised Domain Adaptation

Self-supervised domain adaptation bridges the gap between source and target domains in a different way. Supervised domain adaptation relies on labeled target data, and unsupervised domain adaptation works with unlabeled target data; self-supervised methods go a step further and generate their own labels from the data. These labels come from pretext tasks, so the model learns meaningful features without manual annotation.

How It Works

In self-supervised domain adaptation, you use pretext tasks to train models on both source and target domains. These tasks might include predicting image rotations, solving jigsaw puzzles, or identifying missing parts of an image. By solving these tasks, the model learns to extract domain-invariant features. Once trained, the model can adapt to the target domain more effectively, even when labeled data is scarce.
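The rotation pretext task mentioned above is easy to sketch: every unlabeled image, from either domain, yields four training examples whose labels are the rotation that was applied. The NumPy function below assumes square images (so all rotations share one shape); the function name is illustrative, and a classifier trained to predict the returned labels from the rotated images would form the self-supervised branch.

```python
import numpy as np

def make_rotation_batch(images: np.ndarray):
    """Self-label images by rotating each one 0, 90, 180 and 270 degrees.

    images: shape (N, H, W) or (N, H, W, C) with H == W.
    Returns (rotated, labels), where label k means a rotation of k * 90 degrees.
    """
    rotated, labels = [], []
    for img in images:
        for k in range(4):
            rotated.append(np.rot90(img, k=k, axes=(0, 1)))  # rotate in the height-width plane
            labels.append(k)
    return np.stack(rotated), np.array(labels)

rng = np.random.default_rng(0)
source_imgs = rng.random((2, 32, 32, 3))   # stand-ins for unlabeled source images
target_imgs = rng.random((3, 32, 32, 3))   # stand-ins for unlabeled target images
x, y = make_rotation_batch(np.concatenate([source_imgs, target_imgs]))
print(x.shape, y[:8])  # (20, 32, 32, 3) [0 1 2 3 0 1 2 3]
```

Mixing source and target images in the same pretext batch is the point: the shared feature extractor must solve the task on both domains, which nudges it toward features that work across them.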

Benefits of Self-Supervised Domain Adaptation

This method provides several advantages:

- Reduced Dependency on Labeled Data: You can achieve domain adaptation without extensive manual labeling, saving time and resources.

- Improved Generalization: Models trained with self-supervised techniques often generalize better across diverse domains.

- Scalability: Self-supervised methods scale well to large datasets, making them suitable for real-world applications.

Experimental Results

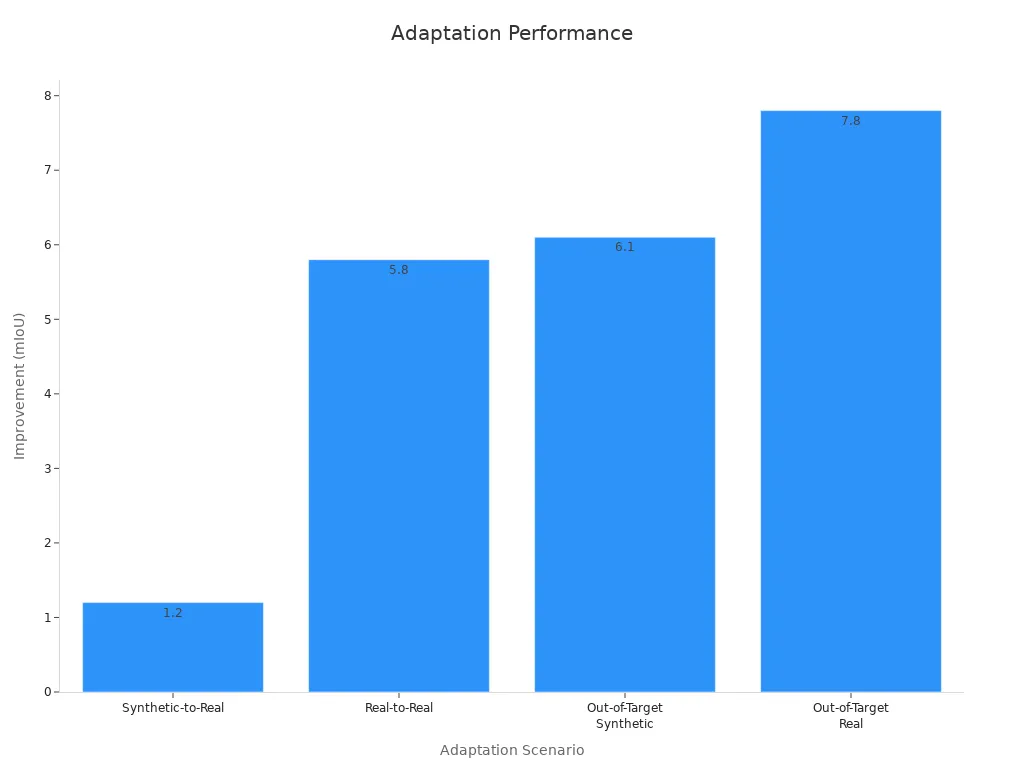

Recent experiments highlight the effectiveness of self-supervised domain adaptation in vision systems. The table below summarizes performance improvements across various adaptation scenarios:

| Adaptation Scenario | VFM-UDA gain over MIC baseline (mIoU, points) |
|---|---|
| Synthetic-to-Real | +1.2 |
| Real-to-Real | +5.8 |
| Out-of-Target (Synthetic) | +6.1 |
| Out-of-Target (Real) | +7.8 |

The chart below provides a visual representation of these improvements:

These results demonstrate how self-supervised domain adaptation enhances performance across different scenarios, particularly in challenging out-of-target domains.

Applications in Vision Systems

You can apply self-supervised domain adaptation to various machine vision tasks. For example:

- Autonomous Driving: Adapt models to new environments, such as different weather conditions or road types.

- Medical Imaging: Improve diagnostic accuracy by adapting to imaging variations across hospitals.

- Industrial Automation: Enhance quality control systems by adapting to new production lines or materials.

By leveraging self-supervised techniques, you can build robust and scalable vision systems capable of handling diverse real-world challenges.

Deep Learning Models for Domain Adaptation

Deep learning models have revolutionized domain adaptation by enabling computer vision systems to transfer knowledge across domains effectively. These models leverage advanced architectures to bridge domain gaps, ensuring robust performance in diverse scenarios.

Role of Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) play a pivotal role in domain adaptation. Their ability to extract hierarchical features makes them ideal for addressing domain shifts in computer vision tasks. You can use CNNs to transfer knowledge across domains by aligning feature distributions and minimizing discrepancies.

Several techniques enhance CNN-based domain adaptation. For instance, Joint Adversarial Domain Adaptation and Deep Joint Distribution Optimal Transport improve model adaptability. These methods address challenges like limited data availability and computational costs, ensuring better accuracy and robustness.
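The methods named above are too involved for a short example, so the sketch below swaps in a simpler, well-known alignment loss for CNN features: CORAL (correlation alignment), which compares the second-order statistics of source and target features. It assumes both feature matrices come from the same CNN layer with shape (samples, channels); the function name is illustrative.

```python
import numpy as np

def coral_loss(source_feats: np.ndarray, target_feats: np.ndarray) -> float:
    """CORAL: squared Frobenius distance between feature covariances, scaled by 1 / (4 d^2)."""
    d = source_feats.shape[1]
    c_s = np.cov(source_feats, rowvar=False)   # (d, d) covariance; columns are channels
    c_t = np.cov(target_feats, rowvar=False)
    return float(((c_s - c_t) ** 2).sum() / (4.0 * d * d))

rng = np.random.default_rng(0)
src = rng.normal(size=(256, 16))
tgt_same = rng.normal(size=(256, 16))
tgt_scaled = rng.normal(size=(256, 16)) * 2.0   # e.g. a contrast change that scales activations
print(f"same distribution: {coral_loss(src, tgt_same):.4f}")
print(f"scaled target:     {coral_loss(src, tgt_scaled):.4f}")
```

Added to the classification loss, this term pulls the target feature covariance toward the source covariance, which is one concrete way of "minimizing discrepancies" between domains.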

CNNs have demonstrated significant improvements in domain adaptation:

- They enhance model performance across varying data distributions.

- They enable effective transfer learning in real-world applications.

By leveraging CNNs, you can build adaptable systems capable of handling diverse environments.

Generative Adversarial Networks (GANs) for Domain Adaptation

Generative Adversarial Networks (GANs) have emerged as powerful tools for adversarial domain adaptation. A GAN pairs a generator with a discriminator that are trained against each other; in domain adaptation, this adversarial game is used to make source and target distributions indistinguishable. As a result, the model learns domain-invariant features, which improves its ability to transfer knowledge.

GANs excel in scenarios where labeled target data is scarce. For example, they can generate synthetic data that mimics the target domain, enabling effective adaptation. By incorporating GANs into your workflow, you can tackle complex domain shifts and enhance model generalization.
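The adversarial alignment described above can be sketched at the level of its loss terms. This example assumes a DANN-style setup (a domain discriminator trained through a gradient reversal layer) rather than a full image-generating GAN: the discriminator minimizes binary cross-entropy for telling source features from target features, while the feature extractor is trained to maximize that same loss. Inputs are the discriminator's predicted probabilities that each feature came from the source domain; the function name is illustrative.

```python
import numpy as np

def domain_adversarial_losses(p_source, p_target, eps=1e-12):
    """Return (discriminator_loss, feature_extractor_loss).

    p_source: discriminator's P(domain = source) for source-domain features.
    p_target: discriminator's P(domain = source) for target-domain features.
    """
    p_source = np.asarray(p_source, dtype=float)
    p_target = np.asarray(p_target, dtype=float)
    # Binary cross-entropy with source labeled 1 and target labeled 0, equal weight per domain.
    disc_loss = -0.5 * (np.log(p_source + eps).mean() + np.log(1.0 - p_target + eps).mean())
    # Gradient reversal: the feature extractor ascends the discriminator's loss.
    feat_loss = -disc_loss
    return float(disc_loss), float(feat_loss)

# A discriminator that cannot tell the domains apart (p = 0.5) has loss ln 2, about 0.693;
# a near-perfect discriminator has loss near 0, signalling poorly aligned features.
print(domain_adversarial_losses([0.5, 0.5], [0.5, 0.5]))
print(domain_adversarial_losses([0.99, 0.98], [0.01, 0.02]))
```

In a real model these losses are backpropagated through a network; the sign flip on `feat_loss` is what a gradient reversal layer implements in a single backward pass.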

Transformers and Vision Transformers (ViTs) in Domain Adaptation

Transformers, particularly Vision Transformers (ViTs), have gained prominence in domain adaptation. Unlike CNNs, which build up features from local receptive fields, ViTs split an image into a sequence of patches and use self-attention to capture global dependencies across all of them. This capability makes them well suited to tasks requiring fine-grained adaptation.
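To make "images as sequences" concrete: a ViT first cuts the image into fixed-size patches and flattens each one into a token vector. The tokens are then linearly projected and given position embeddings, which this sketch omits. The NumPy function below shows only the patching step, assuming a channels-last image whose height and width are divisible by the patch size; the function name is illustrative.

```python
import numpy as np

def patchify(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into a (num_patches, patch_size * patch_size * C) sequence."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "H and W must be divisible by patch_size"
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)          # (grid_row, grid_col, p, p, C)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

tokens = patchify(np.zeros((224, 224, 3)), patch_size=16)
print(tokens.shape)  # (196, 768)
```

For a 224 x 224 x 3 image and 16-pixel patches this yields 196 tokens of length 768, and self-attention then relates every token to every other one, which is where the global view comes from.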

Quantitative results highlight the effectiveness of ViTs in domain adaptation:

| Task | ViT Performance | CNN Performance | Improvement (percentage points) |
|---|---|---|---|
| Segmentation | 95.75% Dice | ~80% | ~15 |
| Classification | Improved accuracy | State-of-the-art | N/A |
| Data Efficiency | 89.69% Dice | 78.69% | ~11 |

These results suggest that ViTs can outperform traditional CNNs on segmentation and data-efficiency tasks. Adopting ViTs may therefore improve performance in computer vision applications that require domain adaptation.
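The segmentation figures above are Dice scores, which measure the overlap between a predicted mask and the ground-truth mask. For reference, a minimal Dice coefficient for binary masks is sketched below; the small `eps` is an assumption of this sketch that makes two empty masks score 1.0 instead of dividing by zero.

```python
import numpy as np

def dice_coefficient(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Dice = 2 |P and T| / (|P| + |T|) for binary masks."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    intersection = np.logical_and(pred, target).sum()
    return float((2.0 * intersection + eps) / (pred.sum() + target.sum() + eps))

pred = np.array([[1, 1, 0], [0, 1, 0]])
target = np.array([[1, 0, 0], [0, 1, 1]])
print(round(dice_coefficient(pred, target), 4))  # 0.6667
```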

Applications of Domain Adaptation in Machine Vision Systems

Domain adaptation has become a cornerstone in advancing machine vision systems across various industries. By enabling models to transfer knowledge between domains, you can ensure consistent performance even when faced with diverse environments or data sources. Below, you’ll explore how domain adaptation transforms key application areas.

Autonomous Driving and Navigation

In autonomous driving, domain adaptation plays a critical role in ensuring vehicles operate safely across different environments. Weather conditions, lighting variations, and road types can significantly impact object detection and navigation systems. By applying domain adaptation techniques, you can train models to transfer knowledge from one environment to another, such as from sunny to snowy conditions, without requiring extensive retraining.

For instance, models adapted to snowy weather have shown enhanced performance in detecting objects like pedestrians and vehicles. This improvement ensures reliable navigation in challenging conditions. The ability to generalize across domains reduces the need for collecting and labeling vast amounts of data for every possible scenario, saving time and resources.

Medical Imaging and Diagnostics

Medical imaging systems often face challenges due to variations in imaging equipment, patient demographics, and data quality. Domain adaptation addresses these issues by enabling models to transfer learning from one dataset to another, ensuring consistent diagnostic accuracy. For example, a model trained on high-resolution MRI scans can adapt to lower-quality scans from different hospitals, maintaining its ability to identify abnormalities.

This adaptability proves invaluable in real-world healthcare settings. By leveraging domain adaptation, you can ensure that diagnostic tools remain effective across diverse patient populations and imaging protocols. This not only improves patient outcomes but also enhances the scalability of medical imaging systems.

Satellite and Aerial Imagery Analysis

Satellite and aerial imagery analysis relies heavily on domain adaptation to handle variations in sensor types, resolutions, and environmental conditions. Models trained on data from one satellite often struggle to generalize to data from another due to differences in sensor characteristics. Domain adaptation bridges this gap, enabling models to transfer knowledge and perform consistently across datasets.

For example, models adapted to new satellite data have demonstrated improved recognition of forest patterns and land-use changes. This capability is crucial for applications like environmental monitoring, disaster response, and urban planning. By incorporating domain adaptation, you can build robust systems that analyze imagery from multiple sources without compromising accuracy.

Performance Improvements Across Applications

The table below highlights how domain adaptation enhances performance in various machine vision applications:

| Application Area | Description | Performance Improvement Example |
|---|---|---|
| Autonomous Driving | Models adapt to different weather conditions for reliable object detection. | Enhanced performance in snowy conditions without retraining. |
| Medical Imaging | Models adjust to different imaging equipment and patient demographics. | Maintained accuracy across varied image qualities. |
| Satellite Image Analysis | Models generalize across different satellite sensors and resolutions. | Improved recognition of forest patterns in new satellite data. |
| Retail and Surveillance | Models bridge gaps between different camera systems and lighting conditions. | Increased reliability in detecting behaviors across diverse environments. |

These examples demonstrate how domain adaptation ensures machine vision systems remain effective and scalable across diverse real-world scenarios.

Industrial Quality Control and Automation

Industrial quality control relies on precise and consistent inspection systems to maintain high standards. However, variations in production lines, materials, and lighting conditions can create challenges for traditional vision systems. This is where domain adaptation becomes essential. By enabling models to transfer knowledge across different environments, you can ensure reliable performance in quality control tasks.

How Domain Adaptation Enhances Quality Control

Domain adaptation helps vision systems overcome the challenges of diverse production settings. For example, a model trained to inspect products on one assembly line may struggle when applied to another line with different lighting or camera angles. By adapting the model to the new domain, you can maintain its accuracy and efficiency.

Key benefits of domain adaptation in industrial quality control include:

- Improved Consistency: Models perform reliably across different production lines and environments.

- Reduced Downtime: You can adapt models quickly without halting production for extensive retraining.

- Cost Efficiency: Reducing the need for large amounts of labeled target data lowers operational costs.

Real-World Applications

You can apply domain adaptation to various quality control tasks, such as:

- Defect Detection: Identify defects in products like scratches, dents, or misalignments, even when lighting or material properties vary.

- Assembly Verification: Ensure components are assembled correctly, regardless of differences in camera setups or production speeds.

- Material Inspection: Adapt models to inspect different materials, such as metals, plastics, or textiles, without collecting a fully labeled dataset for each material.

Example: Adapting to New Production Lines

Imagine a factory introducing a new production line with different lighting conditions. A vision system trained on the old line might fail to detect defects accurately. By applying domain adaptation, you can align the model's feature recognition with the new environment. This ensures the system continues to identify defects without requiring extensive manual labeling or retraining.
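One lightweight way to realign a model to a new line without labels is Adaptive Batch Normalization (AdaBN): keep all learned weights, but re-estimate each batch-norm layer's mean and variance from unlabeled images of the new line. The article does not prescribe this technique; the sketch below only illustrates the idea on synthetic activations, with a simulated shift and scale standing in for new lighting. Function names are illustrative.

```python
import numpy as np

def batch_norm(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-time batch norm: normalize with stored statistics, then scale and shift."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def adabn_statistics(target_activations):
    """Re-estimate per-channel statistics from unlabeled target-domain activations (N x C)."""
    return target_activations.mean(axis=0), target_activations.var(axis=0)

rng = np.random.default_rng(0)
gamma, beta = np.ones(4), np.zeros(4)          # learned affine parameters, kept unchanged
src_mean, src_var = np.zeros(4), np.ones(4)    # statistics stored from the old line
# Simulated activations on the new line: brighter lighting shifts and scales them.
new_line = rng.normal(loc=3.0, scale=2.0, size=(500, 4))

stale = batch_norm(new_line, src_mean, src_var, gamma, beta)
tgt_mean, tgt_var = adabn_statistics(new_line)
adapted = batch_norm(new_line, tgt_mean, tgt_var, gamma, beta)
print("mean with old-line stats:", stale.mean(axis=0).round(2))
print("mean with AdaBN stats:   ", adapted.mean(axis=0).round(2))
```

With the stale statistics, every channel on the new line stays far from the zero-mean, unit-variance range the later layers were trained on; re-estimating the statistics restores that range without a single new label.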

Tip: Regularly evaluate your vision systems to identify when domain adaptation might be necessary. This proactive approach helps maintain high-quality standards.

Performance Metrics

The table below highlights how domain adaptation improves performance in industrial quality control:

| Task | Without Domain Adaptation | With Domain Adaptation | Improvement (percentage points) |
|---|---|---|---|
| Defect Detection | 78% | 92% | +14 |
| Assembly Verification | 81% | 95% | +14 |
| Material Inspection | 76% | 90% | +14 |

These metrics demonstrate the significant impact of domain adaptation on maintaining quality and efficiency in industrial settings.
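The improvement figures in the quality-control table are absolute differences in percentage points, not relative gains. The short calculation below uses only the numbers from that table to show both views; note that the table does not state which metric the percentages measure.

```python
def improvement(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    points = after - before
    return points, 100.0 * points / before

# Scores copied from the quality-control table: (task, without DA, with DA)
rows = [
    ("Defect Detection", 78, 92),
    ("Assembly Verification", 81, 95),
    ("Material Inspection", 76, 90),
]
for task, before, after in rows:
    points, relative = improvement(before, after)
    print(f"{task}: +{points} points, {relative:.1f}% relative")
```

This prints a gain of +14 points for every task, corresponding to relative gains of 17.9%, 17.3%, and 18.4%.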

By integrating domain adaptation into your quality control processes, you can build robust systems that adapt to changing environments. This ensures consistent product quality and reduces operational challenges.

Challenges and Future Directions in Domain Adaptation

Addressing Dataset Bias and Domain Shift

Dataset bias and domain shift remain significant challenges in domain adaptation. When training datasets differ from target datasets, models often struggle to generalize effectively. This dissimilarity introduces bias, which can reduce accuracy and reliability. For example, demographic mismatches in datasets can lead to uneven performance across different groups.

| Challenge Type | Description |
|---|---|
| Dissimilarity between datasets | The divergence between training datasets and target datasets can introduce substantial bias. |
| Quality of ground truth labels | The study quantified the quality of ground truth extracted from radiology reports, revealing high disagreement rates. |
| Impact of demographic factors | Analysis showed that demographic mismatches can lead to performance issues in model accuracy. |

Domain shifts caused by equipment differences or underrepresentation of certain populations further complicate adaptation. For instance, models trained on data from one hospital may fail when applied to another due to variations in imaging equipment. Addressing these issues requires diverse datasets and techniques that align features across domains.

| Domain Shift Analysis | Description |
|---|---|
| Equipment and hospital differences | Variability in equipment and hospital settings can lead to significant domain shifts in model performance. |
| Underrepresentation of groups | Lack of diversity in training data contributes to bias, complicating the development of robust models. |
| Population-based domain shifts | The study quantifies domain shifts in Nigerian datasets, addressing unique demographic characteristics. |

Scalability and Computational Efficiency

Scalability is another critical challenge in domain adaptation. As datasets grow larger, computational efficiency becomes essential. Recent research introduces SPOT (Scalable Push-forward of Optimal Transport), a framework that improves scalability using generative learning-based methods. It employs stochastic gradient algorithms to achieve efficient computation and demonstrates strong convergence in experiments.

Note: SPOT offers a promising approach to large-scale domain adaptation tasks, aiming for faster and more reliable results.
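SPOT itself learns a generative push-forward map with stochastic gradients, which is beyond a short example. As a hedged illustration of the optimal-transport idea it scales up, the sketch below swaps in the classic entropic OT solver (Sinkhorn iterations) on small point clouds and then maps source features into the target domain with a barycentric projection. This is not the SPOT algorithm; sample sizes, `reg`, and the function names are assumptions.

```python
import numpy as np

def sinkhorn_plan(source, target, reg=0.1, n_iters=200):
    """Entropy-regularized OT plan between uniformly weighted source and target samples."""
    n, m = len(source), len(target)
    a = np.full(n, 1.0 / n)                      # uniform mass on source samples
    b = np.full(m, 1.0 / m)                      # uniform mass on target samples
    cost = ((source[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
    cost = cost / cost.max()                     # scale to [0, 1] to keep exp() well-behaved
    K = np.exp(-cost / reg)                      # Gibbs kernel
    u, v = np.ones(n), np.ones(m)
    for _ in range(n_iters):                     # alternate row and column scaling
        u = a / (K @ v)
        v = b / (K.T @ u)
    return u[:, None] * K * v[None, :]           # plan with (approximately) marginals a and b

def transport_source(source, target, plan):
    """Barycentric mapping: move each source sample to the plan-weighted mean of target samples."""
    return (plan @ target) / plan.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(60, 2))         # source-domain features
tgt = rng.normal(2.0, 1.0, size=(40, 2))         # shifted target-domain features
plan = sinkhorn_plan(src, tgt, reg=0.5)
mapped = transport_source(src, tgt, plan)
print("source mean:", src.mean(axis=0).round(2), "-> mapped mean:", mapped.mean(axis=0).round(2))
```

The dense n x m cost matrix here is exactly the memory and compute bottleneck that scalable methods such as SPOT aim to avoid when datasets grow large.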

Large-scale experiments also highlight the importance of adapting models like Mixture of Experts (MoE). These models handle massive datasets, such as those with over 500 billion tokens, while maintaining computational efficiency. By leveraging such approaches, you can scale domain adaptation to meet the demands of real-world applications.

Key advancements in scalability include:

- Efficient algorithms for large datasets.

- Adaptation of models with billions of parameters.

- Improved performance in resource-intensive tasks.

Ethical and Privacy Considerations in Domain Adaptation

Ethical and privacy concerns play a crucial role in domain adaptation. When working with sensitive data, such as medical records or surveillance footage, you must ensure compliance with privacy regulations. Techniques like federated learning allow models to adapt without sharing raw data, preserving user confidentiality.
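The core of federated learning can be sketched as the FedAvg aggregation step: each site trains locally on its own data and sends only model parameters, and a server averages them weighted by local dataset size. The sketch assumes parameters are lists of NumPy arrays with matching shapes; the hospital names and sizes are hypothetical, and real deployments add secure aggregation and many communication rounds.

```python
import numpy as np

def federated_average(client_params, client_sizes):
    """FedAvg aggregation: size-weighted average of each parameter array across clients."""
    total = float(sum(client_sizes))
    return [
        sum(params[i] * (size / total) for params, size in zip(client_params, client_sizes))
        for i in range(len(client_params[0]))
    ]

# Hypothetical example: two hospitals, each with a one-layer model (weights, bias).
# Only these arrays leave each site; the raw images never do.
hospital_a = [np.array([[1.0, 2.0]]), np.array([0.0])]
hospital_b = [np.array([[3.0, 4.0]]), np.array([1.0])]
global_model = federated_average([hospital_a, hospital_b], client_sizes=[100, 300])
print(global_model)  # weights [[2.5, 3.5]], bias [0.75]
```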

Bias in datasets also raises ethical questions. If training data underrepresents certain groups, models may produce unfair outcomes. For example, facial recognition systems often perform poorly on underrepresented demographics. To address this, you should prioritize diverse datasets and fairness-aware learning methods.

Tip: Regularly audit your datasets and models to identify and mitigate biases. This proactive approach ensures ethical and equitable adaptation practices.
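A basic audit of the kind suggested in the tip is to break evaluation metrics down by group instead of reporting a single average. The sketch below computes per-group accuracy and the gap between the best and worst group; the group labels are hypothetical placeholders for whatever demographic or site attributes your data records.

```python
import numpy as np

def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    y_true, y_pred, groups = (np.asarray(v) for v in (y_true, y_pred, groups))
    return {
        str(g): float((y_pred[groups == g] == y_true[groups == g]).mean())
        for g in np.unique(groups)
    }

def accuracy_gap(report):
    """Difference between the best- and worst-performing groups."""
    return max(report.values()) - min(report.values())

# Hypothetical audit data: labels, predictions, and the site each image came from.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1]
groups = ["site_A", "site_A", "site_A", "site_B", "site_B", "site_B"]
report = per_group_accuracy(y_true, y_pred, groups)
print(report, "gap:", round(accuracy_gap(report), 3))
```

Here the overall accuracy is 4 of 6, which hides the fact that one site is served perfectly and the other at one in three; a large gap is the signal to collect more data from, or adapt toward, the weaker group.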

By addressing these challenges, you can build domain adaptation systems that are scalable, efficient, and ethically sound.

Emerging Trends and Research Opportunities

Advancements in domain adaptation continue to open new doors for research and innovation. As you explore this field, several emerging trends and opportunities stand out.

1. Federated Learning for Privacy-Preserving Adaptation

Federated learning is gaining traction as a method to enhance adaptation while safeguarding sensitive data. Instead of sharing raw data, models learn collaboratively across decentralized systems. This approach ensures privacy and compliance with regulations like GDPR. You can apply federated learning to tasks such as medical imaging, where patient confidentiality is critical.

2. Zero-Shot Domain Adaptation

Zero-shot adaptation is an exciting area of research. It enables models to generalize to unseen domains without requiring labeled data from the target domain. Techniques like meta-learning and transfer learning are driving progress in this space. For example, you can use zero-shot methods to adapt vision systems for disaster response, where real-time data from new environments is essential.

3. Synthetic Data Generation

Synthetic data generation is transforming domain adaptation. By creating artificial datasets that mimic target domains, you can overcome the challenges of limited labeled data. Generative Adversarial Networks (GANs) play a key role in this trend. For instance, GANs can generate realistic images for training autonomous vehicles in diverse weather conditions.

4. Cross-Domain Adaptation in Multimodal Systems

Multimodal systems, which integrate data from multiple sources like images and text, are becoming more prevalent. Cross-domain adaptation in these systems ensures seamless integration and improved performance. You can leverage this trend in applications like e-commerce, where combining visual and textual data enhances product recommendations.

5. Real-Time Adaptation for Dynamic Environments

Real-time adaptation is crucial for systems operating in dynamic environments. Techniques like online learning allow models to adjust continuously as new data becomes available. This capability is vital for applications like robotics and surveillance, where conditions change rapidly.
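Online adaptation can be as simple as continuously updating normalization statistics as new frames arrive. The sketch below keeps an exponential moving average of per-channel mean and variance, similar to the running-statistics update used by batch-norm layers; the class name and the `momentum` value are illustrative assumptions, and a full online-learning system would also update model weights.

```python
import numpy as np

class OnlineFeatureStats:
    """Exponential moving average of per-channel mean and variance, updated batch by batch."""

    def __init__(self, num_channels: int, momentum: float = 0.1):
        self.mean = np.zeros(num_channels)
        self.var = np.ones(num_channels)
        self.momentum = momentum

    def update(self, batch: np.ndarray) -> None:
        m = self.momentum
        self.mean = (1 - m) * self.mean + m * batch.mean(axis=0)
        self.var = (1 - m) * self.var + m * batch.var(axis=0)

    def normalize(self, batch: np.ndarray, eps: float = 1e-5) -> np.ndarray:
        return (batch - self.mean) / np.sqrt(self.var + eps)

rng = np.random.default_rng(0)
stats = OnlineFeatureStats(num_channels=3, momentum=0.1)
# Simulated stream: lighting changes abruptly, shifting every feature by +5.
for step in range(100):
    frame_feats = rng.normal(loc=5.0, scale=1.0, size=(32, 3))
    stats.update(frame_feats)
print("running mean:", stats.mean.round(2))  # close to 5 once the stream has settled
```

A larger `momentum` reacts faster to sudden changes but is noisier; a smaller one is steadier but lags behind gradual drift.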

Tip: Stay updated on these trends by following research publications and attending conferences. This will help you identify opportunities to innovate and apply domain adaptation effectively.

Domain adaptation techniques have revolutionized how you build machine vision systems, enabling them to perform reliably across diverse environments. These methods ensure models adapt to new data distributions without requiring extensive retraining. For instance, re-using pre-trained models allows seamless adaptation, while bi-directional adaptation enhances tasks like image translation. Knowledge transfer bridges the gap between AI and human performance, making vision systems more effective.

| Technique/Methodology | Impact on Machine Vision Systems |
|---|---|
| Re-use of pre-trained models | Enables adaptation to new statistical distributions without retraining |
| Bi-directional adaptation | Enhances capabilities for tasks like image translation and style transfer |
| Knowledge transfer | Closes the gap between AI and human performance through effective learning |

The transformative impact of domain adaptation extends to real-world applications, from autonomous driving to medical imaging. By continuing research in this field, you can overcome challenges like dataset bias and scalability, unlocking new possibilities for robust and scalable vision systems.

FAQ

What is domain adaptation in simple terms?

Domain adaptation helps a machine learning model work well in new environments by adjusting to differences in data. For example, a model trained on sunny weather images can adapt to snowy weather images without being retrained from scratch.

Why is domain adaptation important for vision systems?

It ensures vision systems perform reliably across different conditions. For instance, in autonomous driving, it helps models detect objects in varying weather or lighting, improving safety and accuracy.

Can domain adaptation work without labeled data?

Yes, unsupervised domain adaptation allows models to adapt using only unlabeled data from the target domain. This method reduces the need for costly manual labeling.

How does self-supervised learning help in domain adaptation?

Self-supervised learning creates its own labels through tasks like predicting image rotations. This approach helps models learn useful features, making them adaptable even with limited labeled data.

What industries benefit most from domain adaptation?

Industries like healthcare, autonomous driving, and manufacturing benefit greatly. For example, medical imaging systems adapt to different hospitals’ equipment, ensuring accurate diagnoses everywhere.

See Also

Investigating Synthetic Data's Role in Machine Vision Technology

Essential Insights on Transfer Learning for Machine Vision

Understanding Few-Shot and Active Learning in Vision Systems

The Impact of Deep Learning on Machine Vision Systems

Important Image Processing Libraries for Machine Vision Applications