What Makes Mixed Precision Essential for Machine Vision Systems

Mixed precision is transforming how you approach machine vision tasks. It combines different numerical formats, such as FP32, FP16, and INT8, to optimize computational performance. This technique enables faster processing and reduces energy consumption significantly. For example:

- NVIDIA reports up to 36× improvements in inference speed using reduced precision frameworks.

- Training throughput increases between 1.23× and 1.58× have been observed across GPUs.

- Energy usage for operations drops by factors of 18.5× to 30× when switching from FP32 to FP8 arithmetic.

Mixed precision machine vision systems also improve resource efficiency, accelerating large model training by up to 30% with minimal code changes. You gain the ability to handle complex tasks with better scalability and lower costs, making this approach indispensable for modern machine vision.

Key Takeaways

- Mixed precision makes computers faster and uses less energy. It works well for tasks like finding objects and sorting images.

- Using simpler formats like FP16 and INT8 saves resources. This lets bigger models run on the same machines.

- Mixed precision helps handle hard tasks without stressing hardware or spending too much money.

- It can train models quicker and cost less to run, which is great for businesses.

- Begin with one project to test how mixed precision improves speed and efficiency.

Understanding Mixed Precision in Machine Vision

What is Mixed Precision?

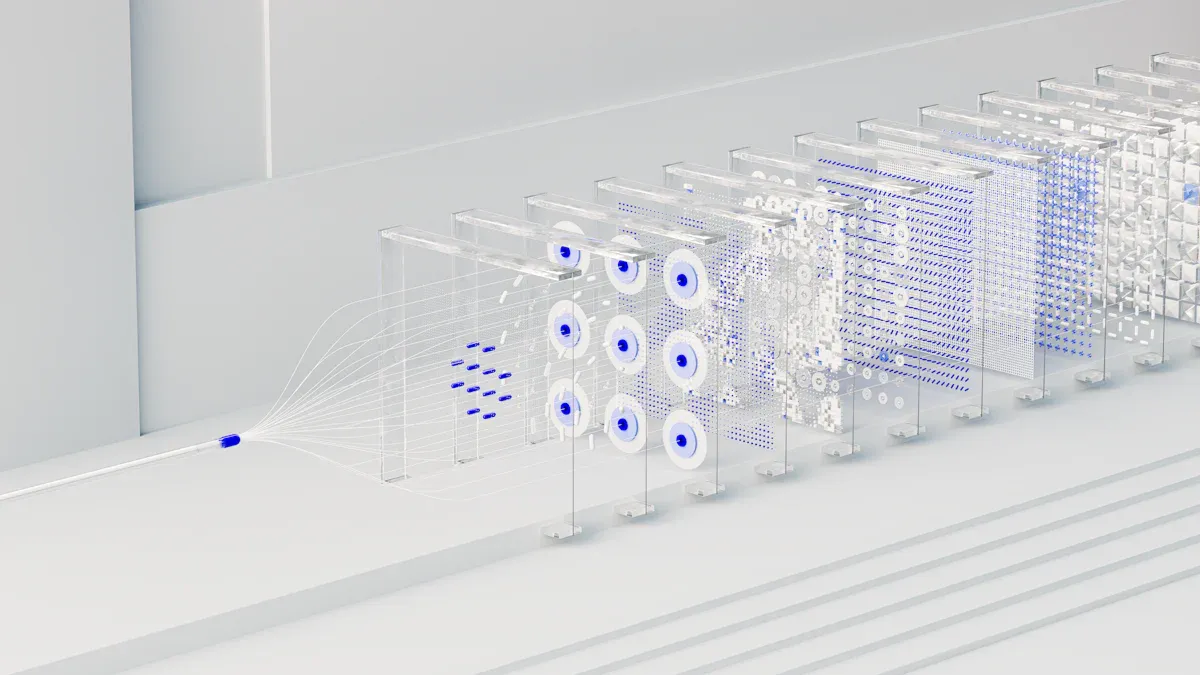

Mixed precision refers to the use of multiple numerical formats, such as float16, bfloat16, and FP32, within a single machine vision system. By combining lower precision data types with higher precision ones, you can optimize computational performance without sacrificing accuracy. This approach is particularly useful in AI applications, where large datasets and complex models demand efficient processing.

Mixed precision allows you to balance speed and resource usage. For example, float16 and bfloat16 formats reduce memory requirements and power consumption, making them ideal for high performance computing tasks. These formats are widely supported across modern GPU architectures, enabling seamless integration into machine vision workflows.
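
To make the difference between float16 and bfloat16 concrete, here is a minimal sketch that uses only the Python standard library. The helpers `to_fp16` and `to_bf16` are hypothetical names introduced for illustration, and `to_bf16` assumes simple truncation of the float32 bit pattern, whereas real hardware usually rounds to nearest.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE half-precision (float16) value."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # Magnitude exceeds the float16 maximum (65504): saturate to infinity.
        return float("inf") if x > 0 else float("-inf")

def to_bf16(x: float) -> float:
    """Approximate bfloat16 by keeping the top 16 bits of the float32 encoding.

    Assumption: this truncates the low bits instead of rounding to nearest.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]

large = 100000.0  # outside the float16 range, inside the bfloat16 range
fine = 1.001      # needs more fraction bits than bfloat16 keeps

print(to_fp16(large), to_bf16(large))  # inf 99840.0
print(to_fp16(fine), to_bf16(fine))    # 1.0009765625 1.0
```

The sketch illustrates the trade-off: float16 keeps more fraction bits but overflows sooner, while bfloat16 keeps the float32 exponent range at the cost of coarser values.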

Tip: Mixed precision is not just about reducing precision; it’s about strategically using the right precision for the right task to achieve optimal results.

How Mixed Precision Works in Machine Vision Systems

Mixed precision works by assigning different numerical formats to various components of a machine vision system. For instance, during training, float16 or bfloat16 formats handle intermediate calculations, while FP32 ensures stability for critical operations like gradient updates. This selective use of lower precision data types accelerates processing and reduces energy consumption.

Post-training quantization further enhances efficiency. By converting network weights and activations to lower precision formats, you can improve inference speed without compromising model performance. Techniques like SmoothQuant and Optimal Scaling Factor Ratio Search refine this process, ensuring that mixed precision systems maintain high accuracy.
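
As a rough illustration of what converting weights to a lower precision format involves, the sketch below applies plain symmetric per-tensor INT8 quantization. It is not SmoothQuant or Optimal Scaling Factor Ratio Search; the function names and the sample weights are assumptions made up for this example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize_int8(quantized, scale):
    """Map INT8 codes back to approximate float values."""
    return [q * scale for q in quantized]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)          # [82, -41, 5, -127, 33]
print(max_error)  # bounded by half a quantization step (scale / 2)
```

Each weight is stored as one byte plus a single shared scale, and the rounding error per weight stays within half a quantization step.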

Mixed precision also supports complex models like Vision Transformers (ViTs). These models benefit from multi-precision components, which optimize both training and deployment. Research by Wang et al. highlights how mixed precision improves power consumption and performance balance, especially for edge device deployment.

Numerical Formats in Mixed Precision (FP32, FP16, INT8)

Mixed precision relies on a variety of numerical formats, each with unique advantages:

- FP32: This format offers high precision and stability, making it ideal for critical calculations. However, it requires more memory and power, which can limit scalability.

- FP16: Also known as float16, this format reduces memory usage and speeds up computations. It’s widely supported across major GPU architectures, making it a popular choice for AI applications.

- INT8: This format is highly efficient for inference tasks. It uses even less memory than float16, making it suitable for deployment on edge devices.
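
The memory difference between these formats is simple arithmetic, sketched below. The batch shape is a hypothetical example, and the helper `tensor_mib` is a name introduced here for illustration.

```python
BYTES_PER_ELEMENT = {"FP32": 4, "FP16": 2, "INT8": 1}

def tensor_mib(shape, fmt):
    """Size in MiB of a dense tensor with the given shape and numerical format."""
    count = 1
    for dim in shape:
        count *= dim
    return count * BYTES_PER_ELEMENT[fmt] / (1024 ** 2)

batch = (32, 3, 224, 224)  # hypothetical batch of 32 RGB images at 224x224
for fmt in BYTES_PER_ELEMENT:
    print(f"{fmt}: {tensor_mib(batch, fmt):.2f} MiB")
# FP32: 18.38 MiB
# FP16: 9.19 MiB
# INT8: 4.59 MiB
```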

Support for these formats varies across hardware. For example:

- Float16 is supported across all major NVIDIA GPUs.

- Bfloat16 support begins with the Ampere architecture, while FP8 starts with Hopper.

- FP4 is available from the Blackwell architecture.

The choice of format depends on your specific machine vision task. For training, float16 and bfloat16 provide a good balance between speed and accuracy. For inference, INT8 offers unmatched efficiency, especially for lightweight models.

Note: Research shows that float16 training achieves comparable classification results to FP32 under certain conditions. This makes float16 a viable option for standalone training in image classification tasks using CNN and ViT architectures.

Benefits of Mixed Precision Machine Vision Systems

Enhanced Computational Performance

Mixed precision machine vision systems deliver strong computational performance. By combining numerical formats like FP16 and FP32, you can achieve faster processing with little or no loss in accuracy. This approach is effective at speeding up tasks such as image classification and object detection. For instance, the ALPS and EAGL metrics help choose the precision settings for a network. They outperform traditional selection methods while using fewer computational resources, and they have achieved state-of-the-art accuracy in tasks like natural language processing and image recognition, which shows that they apply across architectures.

The performance gain of mixed precision training becomes evident when you consider its impact on real-world applications. Tasks that once required extensive computational power now run more efficiently. This speedup not only reduces processing time but also enables you to handle larger datasets and more complex models. As a result, mixed precision techniques have become a cornerstone for modern machine vision systems.

Tip: To maximize computational performance, ensure your hardware supports mixed precision formats like FP16 and INT8.

Improved Resource Efficiency (Memory and Power)

Mixed precision machine vision systems excel at optimizing resource usage. By reducing memory requirements and power consumption, they allow you to train and deploy models more efficiently. Research findings highlight significant improvements in memory and power usage:

| Improvement Type | Reported Change | Notes |
|---|---|---|
| Peak Memory Usage | Up to 25% lower | Achieved through mixed precision techniques. |
| Training Speed | 15% faster | Maintained model accuracy while speeding up training. |
| Peak Memory Use (Sample) | Up to 54% lower | Compared to standard mixed-precision training. |
| Back-propagation Memory | 11% lower | Reduction achieved by fusing back-propagation and optimization steps. |
| Real Training Conditions | 20-25% lower | Observed in ResNet18 experiments. |
| Accuracy Maintenance | Close to full precision | Accuracy levels maintained with 16-bit formats. |

These reductions translate into tangible benefits. You can train larger models on the same hardware or deploy machine vision systems on edge devices with limited resources. This efficiency also lowers operational costs, making mixed precision systems a cost-effective solution for businesses.

Note: While reducing precision, always test your model to ensure accuracy remains within acceptable limits.
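
One simple way to run such a test is to compare the predictions of the full precision model with those of the reduced precision model on the same inputs. The sketch below assumes you already have both sets of logits; the values and the acceptance threshold are made up for illustration.

```python
def top1_agreement(reference_logits, reduced_logits):
    """Fraction of samples where both models pick the same top class."""
    matches = 0
    for ref, red in zip(reference_logits, reduced_logits):
        if ref.index(max(ref)) == red.index(max(red)):
            matches += 1
    return matches / len(reference_logits)

# Hypothetical logits for four images and three classes.
fp32_logits = [[2.1, 0.3, -1.0], [0.20, 0.25, 0.1], [-0.5, 1.7, 0.9], [0.0, 0.1, 3.2]]
fp16_logits = [[2.1, 0.3, -1.0], [0.26, 0.25, 0.1], [-0.5, 1.7, 0.9], [0.0, 0.1, 3.2]]

agreement = top1_agreement(fp32_logits, fp16_logits)
THRESHOLD = 0.99  # assumed acceptance threshold; choose one that fits your application

print(f"top-1 agreement: {agreement:.2f}")        # top-1 agreement: 0.75
print(f"acceptable: {agreement >= THRESHOLD}")    # acceptable: False
```

In this made-up case the second image flips class because its two leading logits are nearly tied, which is the kind of borderline sample worth inspecting before deployment.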

Scalability for Complex Machine Vision Tasks

Scalability is another key advantage of mixed precision machine vision systems. As tasks grow in complexity, traditional methods often struggle to keep up. Mixed precision techniques, however, provide the flexibility needed to scale your models effectively. By leveraging lower precision formats like INT8 during inference, you can deploy models on edge devices without sacrificing performance.

This scalability extends to training as well. Mixed precision training enables you to handle larger datasets and more intricate architectures, such as Vision Transformers (ViTs). These models benefit from the reduced memory footprint and faster computations offered by mixed precision. As a result, you can tackle advanced tasks like autonomous vehicle navigation, industrial inspection, and medical imaging with ease.

The ability to scale efficiently ensures that your machine vision systems remain future-proof. As datasets grow and tasks become more demanding, mixed precision systems will continue to deliver the performance and efficiency you need.

Callout: Scalability isn’t just about handling larger models. It’s about doing so efficiently, without overburdening your hardware or budget.

Mixed Precision Training for Machine Vision

Key Concepts in Mixed-Precision Training

Mixed-precision training combines different numerical formats to optimize performance while maintaining accuracy. Most of the forward pass uses the float16 data type, except for operations prone to numerical instability. Model weights are stored and updated in float32 to ensure stability during training. Layer-specific data types also play a crucial role in achieving the best results.
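
The reason for keeping weights in float32 can be shown with a small experiment using only the standard library. The sketch below applies the same small update repeatedly to a weight stored in float16 and to one stored in float32; the update size and step count are arbitrary choices for illustration.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest float16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round x to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

update = 1e-4  # smaller than half the float16 spacing near 1.0 (2**-10)
steps = 100

w16 = to_fp16(1.0)
w32 = to_fp32(1.0)
for _ in range(steps):
    w16 = to_fp16(w16 + update)  # each update rounds away: the weight never moves
    w32 = to_fp32(w32 + update)  # float32 has enough resolution to accumulate it

print(w16)  # 1.0
print(w32)  # approximately 1.01
```

The float16 weight stays at 1.0 no matter how many updates arrive, which is why mixed-precision training keeps a float32 copy of the weights for the update step.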

This approach allows you to balance speed and precision effectively. By using float16 for less critical calculations, you reduce memory usage and power consumption. Meanwhile, float32 ensures that essential computations remain accurate. This strategic use of numerical formats makes mixed-precision training ideal for training deep learning models and handling complex machine vision tasks.

Tip: Always consider the specific requirements of each layer in your model when implementing mixed-precision training.

Role of Loss Scaling in Mixed Precision Training

Loss scaling is a critical technique in mixed-precision training. It prevents gradient underflow when using float16, where very small values round to zero. The process involves multiplying the loss by a scaling factor, which shifts the gradient distribution into the range that float16 can represent. If the factor is too large, gradients can overflow instead, so it has to be chosen with care.

Here’s how it works:

- Multiply the loss function by a scaling factor.

- Perform backpropagation using the scaled loss.

- Divide the gradients by the scaling factor before applying the weight updates.
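
The steps above can be sketched with a single gradient value. This is a simplified illustration using only the standard library, not a training loop: the gradient magnitude and the scaling factor are assumptions, and scaling the gradient directly stands in for scaling the loss, since the chain rule carries the factor through backpropagation.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest float16 value (tiny values round to zero)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

true_grad = 1e-8  # hypothetical gradient, below the float16 representable range
scale = 65536.0   # assumed scaling factor (2**16)

# Without scaling, the gradient underflows to zero in float16.
unscaled = to_fp16(true_grad)

# With scaling, the gradient survives the float16 backward pass.
scaled = to_fp16(true_grad * scale)

# Unscale in higher precision before the weight update.
recovered = scaled / scale

print(unscaled)   # 0.0
print(recovered)  # close to 1e-08
```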

This method maintains the effectiveness of training while leveraging the efficiency of float16. Loss scaling is especially important when training very large models, as it ensures stability without compromising performance.

Callout: Without loss scaling, gradients in float16 could become too small to represent, leading to poor model convergence.

Advantages of Mixed Precision Training for Machine Vision

Mixed precision training offers significant advantages for machine vision. It accelerates model convergence, reduces resource usage, and enables you to train larger models on the same hardware. Performance benchmarks highlight these benefits:

| Model | GPU | Speedup Ratio (CoMP vs FP32) | Speedup Ratio (CoMP vs AMP) |
|---|---|---|---|
| BERT-base | A100 | 1.91× | N/A |
| BERT-base | RTX 4090 | 1.52× | N/A |
| VGG16 | A100 | 1.88× | 1.15× |
| VGG16 | RTX 4090 | 1.70× | 1.11× |

These results demonstrate how mixed-precision training improves efficiency across different hardware platforms. By adopting this approach, you can achieve faster training times and lower energy consumption. This makes it an essential technique for training deep learning models in machine vision.

Note: Mixed precision training not only speeds up training but also reduces operational costs, making it a cost-effective solution for businesses.

Practical Implementation of Mixed Precision

Hardware Requirements (GPUs, TPUs, etc.)

To implement mixed precision effectively, you need hardware with native support for reduced precision arithmetic. GPUs equipped with Tensor Cores, such as the NVIDIA V100, are well suited to mixed-precision operations. Tensor Cores multiply float16 matrices and accumulate the results in float32, which keeps calculations fast while limiting the loss of precision. Tensor Processing Units (TPUs) also support mixed precision, offering high performance for tasks requiring bfloat16 matrix multiplication.

| Hardware Type | Peak Performance (TFLOPS) | Memory Bandwidth (GB/s) | Memory Size (GB) | Mixed Precision Format |
|---|---|---|---|---|
| NVIDIA V100 | 125 | 900 | 16 | float16, float32 |
| TPU v2 | 180 | 2400 | 64 | bfloat16, float32 |
| TPU v3 | 420 | N/A | N/A | N/A |

Frameworks like NVIDIA TensorRT and Intel OpenVINO further enhance mixed-precision inference by optimizing models based on hardware capabilities. These tools allow you to achieve better performance and energy efficiency, especially for machine vision tasks.

Software Frameworks Supporting Mixed Precision (TensorFlow, PyTorch, etc.)

Several software frameworks simplify mixed-precision implementation. PyTorch provides torch.amp, a user-friendly interface that automates mixed-precision training. This feature improves performance on GPUs with Tensor Cores, making it easier to integrate float16 matrix multiplication into your workflows. TensorFlow also supports mixed precision through its native APIs, enabling seamless training on TPUs.

Both frameworks offer significant speed improvements. TensorFlow uses 16-bit and 32-bit floats for mixed-precision training, while PyTorch applies automatic casting and gradient scaling through torch.amp (older releases expose the same features under torch.cuda.amp). These tools require minimal code changes, allowing you to focus on model development rather than implementation complexities. By adopting these frameworks, you can accelerate training and inference while maintaining high accuracy.

Best Practices for Implementing Mixed Precision in Machine Vision

To optimize mixed precision in machine vision, follow these best practices:

- Automatic Mixed Precision (AMP): Use libraries like PyTorch’s torch.amp or TensorFlow’s mixed precision APIs to automate precision adjustments. This reduces manual effort and ensures efficient casting of precision levels.

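- Dynamic Loss Scaling: Prevent underflow during training by adjusting the loss scaling factor dynamically, lowering it when gradients overflow and raising it again after a run of stable steps. This technique maintains stability when using float16 for calculations.

- Gradient Checkpointing: Store only a subset of activations during the forward pass and recompute the rest during the backward pass to reduce memory usage.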

- Efficient Data Loading: Preprocess data directly on the GPU to minimize communication overhead with the CPU.
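
Dynamic loss scaling from the list above can be sketched as a small state machine. The class below is a hypothetical, simplified illustration, not the implementation used by any framework: it halves the scale when a gradient overflows and doubles it after a fixed number of clean steps. The growth interval of 3 is an assumption chosen to keep the demo short.

```python
import math

class DynamicLossScaler:
    """Simplified dynamic loss scaler (illustrative names and defaults)."""

    def __init__(self, init_scale=65536.0, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=3):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self.good_steps = 0

    def update(self, grads):
        """Return True if the optimizer step should be applied, False if skipped."""
        if any(math.isinf(g) or math.isnan(g) for g in grads):
            # Overflow detected: shrink the scale and skip this step.
            self.scale *= self.backoff_factor
            self.good_steps = 0
            return False
        self.good_steps += 1
        if self.good_steps >= self.growth_interval:
            # A run of stable steps: try a larger scale again.
            self.scale *= self.growth_factor
            self.good_steps = 0
        return True

scaler = DynamicLossScaler()
history = []
# Hypothetical gradients for five steps; the second step overflows.
for grads in ([0.1, 0.2], [float("inf"), 0.3], [0.1, 0.1], [0.2, 0.1], [0.3, 0.1]):
    applied = scaler.update(grads)
    history.append((applied, scaler.scale))

print(history)
```

The overflowing step is skipped and the scale drops to 32768, then returns to 65536 after three clean steps. Automatic mixed precision tools handle this bookkeeping for you.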

For transformer models, NVIDIA’s Transformer Engine offers optimized precision levels, enhancing training efficiency. Activation offloading to CPU memory can further extend effective memory capacity, allowing you to train larger models without hardware upgrades. By implementing these strategies, you can maximize the benefits of mixed precision in your machine vision systems.

Real-World Applications of Mixed Precision in Machine Vision

Autonomous Vehicles and Object Detection

Mixed precision plays a vital role in autonomous vehicles by improving object detection systems. These systems rely on deep learning models to identify pedestrians, vehicles, and road signs in real time. By using lower precision formats like FP16 for inference, you can achieve faster processing speeds without sacrificing accuracy. This ensures that vehicles respond quickly to their surroundings, enhancing safety.

Several metrics are used to check that a mixed precision model still produces reliable outputs. Note that BLEU and ROUGE score generated text, so they apply to vision models that describe a scene in words rather than to bounding-box detection itself:

| Metric | Description |
|---|---|
| BLEU-4 | Measures the precision of up to 4-gram overlaps between generated text and reference outputs. |
| ROUGE-1 | Captures unigram-level overlap, indicating coverage of key information in text summarization tasks. |
| ROUGE-2 | Extends to bigrams, offering a finer-grained measure of contextual coverage. |
| ROUGE-L | Uses the longest common subsequence to evaluate sequence-level match, emphasizing recall of information. |
| Mean Squared Error (MSE) | Evaluates the average squared difference between predicted and true values, indicating how closely predictions match the ground truth. |

Comparing these metrics between a full precision model and its mixed precision counterpart shows whether accuracy and system reliability are preserved, which is essential for autonomous driving applications.

Industrial Quality Control and Inspection

In manufacturing, mixed precision revolutionizes quality control and inspection processes. Machine vision systems equipped with mixed precision can detect defects with remarkable accuracy. For example, businesses report defect rates as low as 1% after adopting these systems.

Key improvements include:

- Detection accuracy reaching up to 99%.

- A 25% reduction in inspection time.

- A 40% increase in operational efficiency.

The AI-driven quality inspection market is projected to grow to $70.7 billion by 2029. This reflects a shift towards automation, with over 80% of manufacturers reporting significant improvements in quality standards. Mixed precision enables these advancements by optimizing resource usage and accelerating computations.

Tip: Implementing mixed precision in your quality control systems can reduce costs while maintaining high inspection standards.

Medical Imaging and Diagnostics

Mixed precision also transforms medical imaging and diagnostics. It allows you to process high-resolution images faster, enabling quicker diagnoses. For example, CT scans and MRIs benefit from reduced inference times, which is critical in emergency situations.

By using INT8 for inference, you can deploy models on edge devices in hospitals, ensuring real-time analysis. This improves patient outcomes by providing accurate results in less time. Mixed precision also supports advanced imaging techniques, such as 3D reconstruction, by reducing memory requirements and computational overhead.

Callout: Faster image processing with mixed precision can save lives by enabling timely medical interventions.

Mixed precision is revolutionizing machine vision systems. It boosts performance, reduces energy use, and scales effortlessly for complex tasks. By adopting this approach, you can process data faster, cut costs, and achieve sustainable solutions.

Tip: Start small by integrating mixed precision into one project. Measure the improvements in speed and efficiency to see its potential firsthand.

As technology advances, mixed precision will remain essential. It empowers you to tackle growing challenges in machine vision while staying efficient and future-ready.

FAQ

What is the main advantage of mixed precision in machine vision?

Mixed precision improves computational speed and reduces energy consumption. By using lower precision formats like FP16 or INT8, you can process data faster while maintaining accuracy. This makes it ideal for tasks like object detection and image classification.

Can mixed precision work on all hardware?

No, mixed precision requires compatible hardware like GPUs with Tensor Cores or TPUs. For example, the NVIDIA V100 supports FP16 and TPUs support bfloat16, enabling efficient mixed-precision operations.

Does mixed precision affect model accuracy?

Mixed precision maintains accuracy when implemented correctly. Techniques like loss scaling ensure numerical stability during training. Testing your model after applying mixed precision helps confirm that accuracy remains within acceptable limits.

How does mixed precision reduce memory usage?

Lower precision formats like FP16 use fewer bits to represent data. This reduces memory requirements, allowing you to train larger models or deploy systems on resource-constrained devices like edge hardware.

Is mixed precision suitable for small-scale projects?

Yes, mixed precision benefits projects of all sizes. It speeds up training and inference, reduces costs, and optimizes resource usage. Even small-scale projects can achieve better performance with minimal hardware upgrades.

Tip: Start with automatic mixed precision tools in frameworks like PyTorch or TensorFlow for easy implementation.
