How Model Configuration Shapes Machine Vision Systems in 2025

Model configuration plays a crucial role in the effectiveness of a machine vision system. In 2025, advances in AI are changing how these systems perform, adapt, and scale. You can see this in several key areas:

- Performance metrics now emphasize explainability, enhancing the trustworthiness of AI models.

- Edge AI and federated learning boost adaptability across a variety of environments.

- Automated techniques such as hyperparameter tuning are improving system performance.

Emerging AI trends also focus on real-time processing and energy-efficient algorithms, helping machine vision systems meet modern industrial demands while minimizing environmental impact.

Key Takeaways

- Correct model configuration is key for machine vision systems. Well-matched hardware, software, and data pipelines help tasks run reliably.

- AI and deep learning simplify configuration by automating steps, improving the accuracy and speed of machine vision systems.

- High-quality data and accurate labeling are essential for robust models. Diverse datasets reduce errors and keep systems reliable.

- Energy efficiency is becoming more important in machine vision. Well-designed configurations can reduce environmental impact without sacrificing performance.

- Emerging approaches such as self-configuring AI models and edge computing are reshaping machine vision, making systems more flexible and better suited to demanding environments.

Defining Model Configuration in Machine Vision Systems

What is Model Configuration in Machine Vision?

Model configuration in a machine vision system refers to the process of designing and setting up the components that enable the system to perform specific tasks. These components include hardware, software, data pipelines, and AI models. Each element must work together seamlessly to ensure the system achieves its intended purpose, whether it’s detecting objects, analyzing images, or performing quality control.

To better understand the scope of model configuration, consider the insights from industry reports:

| Report Title | Description |
|---|---|
| Global Markets for Machine Vision Technologies | Explores the market for machine vision hardware and software, detailing components and applications. |
| Global Industrial Machine Vision Market Size, Share, and Trends Analysis Report | Provides definitions and segmentation essential for understanding model configuration. |
| Machine Vision Market Size, Share, Trends, Growth & Forecast | Analyzes the machine vision market, focusing on definitions, segmentation, and applications. |

These reports highlight how model configuration defines the capabilities and limitations of machine vision systems. By carefully selecting and integrating components, you can tailor a system to meet specific needs.

The Importance of Model Configuration for Quality and Performance

The configuration of a machine vision system directly impacts its quality and performance. High-quality data and accurate labeling are essential for building robust models. For example:

| Configuration Factor | Impact on Model Performance |
|---|---|
| High-quality data and accurate labeling | Ensures reliable and precise results. |
| Diverse examples in datasets | Helps models perform well across different conditions. |
| Proper camera selection and well-designed optics | Often cited as contributing 85% or more to application success. |

Imagine a scenario where an object is too small, blurry, or blends into the background. Even the most advanced processing technology will struggle to detect it. This demonstrates why image quality and proper configuration are critical. Additionally, issues like overfitting and underfitting can arise if the data lacks variety or quality, further emphasizing the need for meticulous configuration.
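One practical way to catch blurry or underexposed images before they reach training or inference is an automated quality gate. The sketch below uses the variance of a Laplacian filter as a sharpness score (a widely used heuristic) and the mean intensity as a brightness check. The function names and both thresholds are hypothetical and would need tuning on your own images.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian response; low values suggest blur."""
    g = gray.astype(np.float64)
    # Discrete Laplacian on interior pixels: sum of 4 neighbours minus 4x the centre
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def quality_issues(gray: np.ndarray, blur_threshold: float = 100.0,
                   dark_threshold: float = 40.0) -> list:
    """Return the problems found in a grayscale image (hypothetical thresholds)."""
    issues = []
    if laplacian_variance(gray) < blur_threshold:
        issues.append("blurry")
    if float(gray.mean()) < dark_threshold:
        issues.append("too dark")
    return issues

# A high-contrast checkerboard is sharp; a flat dark frame has no detail at all
sharp = (np.indices((64, 64)).sum(axis=0) % 2 * 255).astype(np.uint8)
flat = np.full((64, 64), 20, dtype=np.uint8)
print(quality_issues(sharp))   # []
print(quality_issues(flat))    # ['blurry', 'too dark']
```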

AI and Deep Learning in Model Configuration

AI and deep learning have transformed how model configuration is approached. These technologies let you customize systems to handle complex tasks with greater accuracy and efficiency. Vision-language models (VLMs), for instance, can adapt to different label hierarchies and datasets. They can be calibrated effectively with only a small number of images, although the returns diminish as more images are added.

Recent research also shows how data-level techniques improve performance metrics. The Synthetic Minority Over-sampling Technique (SMOTE), for example, addresses class imbalance by generating synthetic samples for underrepresented classes, which can improve accuracy, precision, and fairness. This is particularly important in applications where underrepresented data could lead to biased results.
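SMOTE creates each synthetic sample by picking a minority-class example, choosing one of its k nearest minority-class neighbours, and placing a new point at a random position on the line segment between them. The NumPy sketch below implements that core step on feature vectors (for images, typically embeddings rather than raw pixels, which is an assumption here). It is illustrative only; a maintained implementation such as `SMOTE` in the imbalanced-learn library is the safer choice in practice.

```python
import numpy as np

def smote(X_min, n_synthetic: int, k: int = 5, seed=None) -> np.ndarray:
    """Generate n_synthetic samples by interpolating between minority-class
    points and their k nearest minority-class neighbours."""
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances within the minority class
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]    # k nearest neighbours per point
    synthetic = np.empty((n_synthetic, X_min.shape[1]))
    for i in range(n_synthetic):
        a = rng.integers(n)                          # random minority sample
        b = neighbours[a, rng.integers(k)]           # one of its nearest neighbours
        gap = rng.random()                           # position along the segment a -> b
        synthetic[i] = X_min[a] + gap * (X_min[b] - X_min[a])
    return synthetic

# Four minority-class feature vectors; generate six more
X_minority = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new_samples = smote(X_minority, n_synthetic=6, k=2, seed=0)
print(new_samples.shape)   # (6, 2)
```

Because every new point lies between two real minority samples, SMOTE fills in the minority region rather than duplicating existing examples.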

By leveraging AI and deep learning, you can automate many aspects of model configuration, making it easier to achieve high-quality outcomes. These technologies not only improve performance but also ensure that machine vision systems remain adaptable to evolving industry demands.

Core Components of Model Configuration

Hardware: Cameras, Sensors, and Computing Platforms

The hardware forms the backbone of any machine vision system. Cameras, sensors, and computing platforms work together to capture, process, and analyze visual data. Selecting the right hardware ensures the system meets its performance goals.

Cameras play a critical role in image capture. You must choose between global shutter and rolling shutter sensors based on your application. Global shutter sensors expose the entire image at once, making them ideal for high-speed environments. Rolling shutter sensors expose the image line by line, which can distort fast-moving objects, but they are usually more cost-effective. Sensor resolution also matters: higher resolutions provide more detail but require more computational power.
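The resolution and shutter trade-offs can be estimated with simple arithmetic. The sketch below assumes a rule of thumb of about three pixels across the smallest feature you need to detect (a common but application-dependent choice) and models rolling-shutter skew as object speed multiplied by the total row readout time. The function names and example numbers are hypothetical.

```python
import math

def required_pixels(fov_mm: float, smallest_feature_mm: float,
                    pixels_per_feature: int = 3) -> int:
    """Pixels needed along one sensor axis so the smallest feature spans
    `pixels_per_feature` pixels (an assumed rule of thumb)."""
    return math.ceil(fov_mm / smallest_feature_mm * pixels_per_feature)

def rolling_shutter_skew_px(speed_px_per_s: float, rows: int,
                            row_readout_s: float) -> float:
    """Horizontal shift between the first and last exposed row for an object
    moving sideways; a global shutter would give zero skew."""
    frame_readout_s = rows * row_readout_s
    return speed_px_per_s * frame_readout_s

# Hypothetical example: 100 mm field of view, 0.5 mm defects
print(required_pixels(100.0, 0.5))                    # 600 pixels per axis
# Object crossing the image at 2000 px/s, 1080 rows, 10 microseconds per row
print(rolling_shutter_skew_px(2000.0, 1080, 10e-6))   # about 21.6 px of skew
```

A skew of roughly 20 pixels would smear a defect only a few pixels wide, which is the situation where a global shutter justifies its extra cost.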

Sensors like radar, LiDAR, and ultrasonics complement cameras by providing depth and situational awareness. Autonomous vehicles, for example, often combine these technologies. Some developers, such as Waymo (Google's self-driving car unit), rely heavily on LiDAR for object classification, while others, such as Tesla, rely primarily on cameras. The choice depends on factors like cost, performance, and market needs.

Computing platforms process the data collected by cameras and sensors. Benchmarks such as DriveNetBench, together with reference driving models such as TransFuser, are used to compare how different CPU and GPU configurations perform. DriveNetBench, for instance, standardizes evaluation metrics so that results stay comparable across configurations. When selecting hardware, you must balance computational power against energy efficiency to meet your system's requirements.

Lighting is another crucial aspect of hardware. Proper machine vision lighting enhances image quality, ensuring the system can detect objects accurately. You should consider factors like brightness, color temperature, and uniformity when designing your lighting setup.

Software Frameworks and Tools for Machine Vision Systems

Software frameworks and tools simplify the development and deployment of machine vision systems. They provide the building blocks for configuring and optimizing your system.

Different frameworks serve different purposes. OpenVX focuses on portability, making it suitable for systems that need to run on various platforms. OpenCV's DNN module prioritizes ease of use, offering user-friendly tools for deep learning applications. Vendor-specific frameworks, such as NVIDIA TensorRT or Intel OpenVINO, optimize performance for specific hardware.

| Framework | Design Goal | Key Considerations |
|---|---|---|
| OpenVX | Portability | Performance, power consumption |
| OpenCV DNN Module | Ease of use | User-friendliness |
| Vendor-specific | Hardware support | Compatibility with specific platforms |

When choosing a framework, you should consider your system's hardware requirements and the complexity of your application. For example, OpenCV is an excellent choice for beginners due to its simplicity, while vendor-specific frameworks offer advanced features tailored to specific hardware.

AI Models: Pre-trained vs. Custom Deep Learning Models

AI models are the heart of a machine vision system. You can choose between pre-trained models and custom deep learning models, depending on your needs.

Pre-trained models offer several advantages. They are trained on large datasets, reducing the risk of overfitting. You can adapt them for various tasks, making them versatile. Community contributions provide access to advanced techniques, accelerating development. Fine-tuning pre-trained models requires less data and computational resources, making them efficient.

| Advantage | Description |
|---|---|
| Reduced overfitting | Pre-trained models help prevent overfitting due to their training on large datasets. |
| Broad applicability | They can be adapted for various tasks, enhancing their utility across different applications. |
| Community contributions | Availability of pre-trained models from the community accelerates access to advanced techniques. |
| Improved performance | Pre-trained models often outperform custom models, especially with limited datasets. |
| Efficient fine-tuning | Fine-tuning pre-trained models requires less data and computational resources than training from scratch. |

Custom models, however, allow you to tailor the system to specific tasks. They are ideal for applications requiring unique datasets or specialized features. While they demand more resources and expertise, they can deliver superior performance in niche scenarios.

When deciding between pre-trained and custom models, you should evaluate factors like dataset availability, computational resources, and application requirements. Pre-trained models are often the best choice for general-purpose tasks, while custom models excel in specialized applications.

Data Management: Pipelines, Annotation, and Training

Data management forms the foundation of any machine vision system. It ensures that your models receive the right inputs for both training and inference. Without a well-structured approach to pipelines, annotation, and training, even the most advanced hardware and software configurations will fail to deliver optimal results.

Pipelines: Streamlining Data Flow

A data pipeline organizes the flow of information from image acquisition through model training and inference. It automates repetitive tasks, reduces errors, and ensures consistency. For example, a robust pipeline can acquire images from multiple sources, preprocess them, and feed them into training. This saves time and minimizes manual intervention.
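The acquire, preprocess, and feed flow can be sketched as a chain of Python generators, so each stage handles frames lazily as they arrive. The stage names, the fixed 32 x 32 output size, and the in-memory lists standing in for camera sources are all hypothetical; a production pipeline would add decoding, error handling, and persistence.

```python
from typing import Iterable, Iterator, List
import numpy as np

def acquire(sources: Iterable[Iterable[np.ndarray]]) -> Iterator[np.ndarray]:
    """Yield frames from each (hypothetical) camera source in turn."""
    for source in sources:
        yield from source

def preprocess(frames: Iterable[np.ndarray], size=(32, 32)) -> Iterator[np.ndarray]:
    """Crop or zero-pad each grayscale frame to `size` and scale it to [0, 1]."""
    h, w = size
    for frame in frames:
        out = np.zeros(size, dtype=np.float32)
        crop = frame[:h, :w]
        out[:crop.shape[0], :crop.shape[1]] = crop / 255.0
        yield out

def batch(items: Iterable[np.ndarray], batch_size: int = 4) -> Iterator[np.ndarray]:
    """Group items into stacked arrays of up to `batch_size`."""
    buffer: List[np.ndarray] = []
    for item in items:
        buffer.append(item)
        if len(buffer) == batch_size:
            yield np.stack(buffer)
            buffer = []
    if buffer:                      # flush the final partial batch
        yield np.stack(buffer)

# Two hypothetical cameras with different frame sizes
cam_a = [np.full((40, 40), 255, dtype=np.uint8)] * 3
cam_b = [np.zeros((20, 20), dtype=np.uint8)] * 2
for b in batch(preprocess(acquire([cam_a, cam_b]))):
    print(b.shape)   # (4, 32, 32) then (1, 32, 32)
```

Keeping each stage a separate function makes it easy to swap a source or a preprocessing step without touching the rest of the chain.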

Automation plays a key role here. By automating your computer vision pipeline, you can reduce human errors and improve feedback loops. This leads to better project outcomes and higher model performance. Companies that adopted automation during the COVID-19 pandemic managed to maintain efficiency despite remote operations.

Annotation: Building Quality Datasets

Annotation is the process of labeling images to create datasets for training. High-quality annotations are critical for accurate model predictions. You can use tools like bounding boxes, segmentation masks, or keypoints to mark objects in images. These annotations teach your model to recognize patterns and make decisions during inference.

Collaboration tools have made annotation more scalable. During the pandemic, companies with remote annotation teams used these tools to maintain accuracy and efficiency. A well-organized annotation pipeline ensures that your data remains consistent, even when handled by multiple teams.
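A common way to check that multiple annotation teams stay consistent is to compare their bounding boxes for the same object using intersection over union (IoU). The sketch below assumes boxes in (x_min, y_min, x_max, y_max) pixel coordinates and that both teams' boxes are already matched by a shared object ID; the object names and the 0.5 threshold are conventional but hypothetical choices.

```python
def iou(box_a, box_b) -> float:
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def disagreements(labels_a: dict, labels_b: dict, threshold: float = 0.5) -> list:
    """IDs of objects whose boxes from two annotators overlap below `threshold`."""
    return [obj_id for obj_id, box in labels_a.items()
            if obj_id in labels_b and iou(box, labels_b[obj_id]) < threshold]

team_1 = {"bolt_1": (10, 10, 50, 50), "bolt_2": (60, 60, 90, 90)}
team_2 = {"bolt_1": (12, 11, 49, 52), "bolt_2": (75, 75, 110, 110)}
print(disagreements(team_1, team_2))   # ['bolt_2']
```

Flagged objects can then be routed to a reviewer instead of going straight into the training set.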

Transfer learning can also reduce the annotation burden. Starting from pre-trained models lowers the amount of labeled data required for training, which improves efficiency and has been reported to boost accuracy by an average of 12%.

Training: Turning Data into Intelligence

Training is where your machine vision system learns to perform its tasks. It involves feeding annotated data into the model and adjusting its parameters to minimize errors. The quality of your training data directly impacts the model's performance. Diverse datasets with clear annotations lead to better generalization and fewer biases.

You should also consider the balance between training mode and inference mode. While training requires large datasets and computational resources, inference focuses on real-time decision-making. Optimizing both modes ensures that your system performs well in practical applications.
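One concrete difference between training mode and inference mode is that some layers behave differently in each. Dropout, for example, randomly zeroes activations during training to reduce overfitting but is switched off at inference so predictions are deterministic; in PyTorch this is the switch between `model.train()` and `model.eval()`. The NumPy sketch below shows inverted dropout with an explicit, illustrative `training` flag.

```python
import numpy as np

def dropout(x: np.ndarray, p: float = 0.5, training: bool = True, seed=None) -> np.ndarray:
    """Inverted dropout: zero units at random while training, identity at inference."""
    if not training or p == 0.0:
        return x                                  # inference: deterministic pass-through
    rng = np.random.default_rng(seed)
    keep = rng.random(x.shape) >= p               # keep each unit with probability 1 - p
    return x * keep / (1.0 - p)                   # rescale so the expected value is unchanged

activations = np.ones(8)
print(dropout(activations, training=True, seed=0))   # each entry is 0.0 or 2.0
print(dropout(activations, training=False))          # all ones
```

Rescaling during training (rather than at inference) is what lets the inference path stay a simple pass-through.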

| Practice | Description | Impact |
|---|---|---|
| Scalable annotation pipeline | Companies with robust collaboration systems managed remote annotation teams. | Enhanced efficiency and accuracy. |
| Automation in the CV pipeline | Automation reduces human errors and improves feedback. | Streamlined data management processes. |
| Transfer learning | Implementing transfer learning improves accuracy by an average of 12%. | Faster annotation and training cycles. |

By focusing on efficient pipelines, accurate annotation, and effective training, you can build machine vision systems that excel in both accuracy and scalability. These processes ensure that your models are ready to handle real-world challenges with confidence.

Overcoming Challenges in Model Configuration for 2025

Balancing Accuracy, Efficiency, and Scalability

Balancing accuracy, efficiency, and scalability is a critical challenge in configuring a machine vision system. The system must perform well without overloading resources or compromising its adaptability. Different AI models show different trade-offs between these factors. The comparison below uses language models, but the same pattern (larger models, higher latency and energy use) applies to vision models:

| Model | Efficiency (higher = lower latency) | Energy Consumption | Typical Task Complexity |
|---|---|---|---|
| GPT-2 | High | Low | Simple tasks (e.g., BoolQ) |
| Falcon-7B | Moderate | Moderate | Contextual understanding (e.g., SQuADv2) |
| Mistral-7B | Moderate | Moderate | Complex reasoning (e.g., HellaSwag) |
| Larger models | Low | High | Complex tasks with high resource demands |
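A simple way to act on this trade-off is to measure each candidate model on the target hardware and pick the most accurate one that fits your latency and energy budgets. The class, function, candidate names, and numbers below are hypothetical placeholders, not benchmark results.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    name: str
    accuracy: float     # validation accuracy (0-1)
    latency_ms: float   # measured per-image latency on the target device
    energy_j: float     # measured energy per inference

def pick_model(candidates: List[Candidate], latency_budget_ms: float,
               energy_budget_j: float = float("inf")) -> Optional[Candidate]:
    """Most accurate candidate within both budgets; ties go to the faster model."""
    feasible = [c for c in candidates
                if c.latency_ms <= latency_budget_ms and c.energy_j <= energy_budget_j]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.accuracy, -c.latency_ms))

# Hypothetical measurements, not real benchmark numbers
models = [Candidate("small", 0.81, 4.0, 0.02),
          Candidate("medium", 0.88, 12.0, 0.07),
          Candidate("large", 0.92, 45.0, 0.30)]
print(pick_model(models, latency_budget_ms=20.0).name)   # medium
```

Returning `None` when nothing fits makes it explicit that the budget, not the model choice, needs to change.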

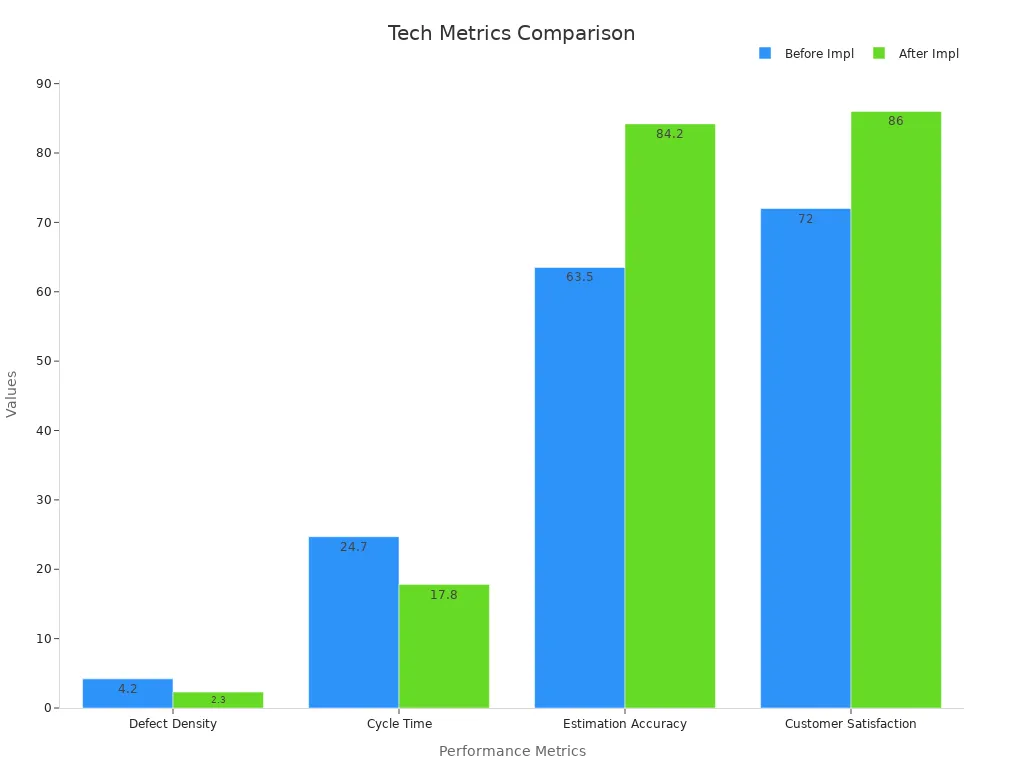

Case studies highlight how machine vision systems achieve this balance. For instance, automating leak detection in the oil and gas sector improved operational efficiency and scalability. The system reduced water loss and repair times while enabling faster responses. Performance metrics showed a 45.2% improvement in defect density and a 27.9% reduction in cycle time. These results demonstrate how careful model configuration can optimize performance across multiple dimensions.

Ensuring Data Privacy and Security in AI Systems

Data privacy and security are essential for building trust in AI-driven machine vision systems. Regulations such as the GDPR (for personal data in the EU) and HIPAA (for health information in the US) impose strict rules on data collection, storage, and processing. You must establish a lawful basis for processing, such as explicit consent, and stay compliant to avoid penalties. Ethical guidelines also emphasize transparency, accountability, and fairness in AI systems. These principles safeguard user privacy and maintain trust.
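One technical measure that supports data minimization is anonymizing sensitive regions, such as faces or license plates, before images are stored. The sketch below pixelates a rectangular region by replacing each block with its average value. It assumes the region coordinates come from a separate detector (not shown), the function name is hypothetical, and pixelation alone does not guarantee regulatory compliance.

```python
import numpy as np

def pixelate_region(img: np.ndarray, box, block: int = 8) -> np.ndarray:
    """Return a copy of `img` with the (x0, y0, x1, y1) region replaced by
    block averages, making faces or plates unrecognisable."""
    out = img.copy()
    x0, y0, x1, y1 = box
    for y in range(y0, y1, block):
        for x in range(x0, x1, block):
            ys = slice(y, min(y + block, y1))
            xs = slice(x, min(x + block, x1))
            # Mean over rows and columns; keeps one value per colour channel
            out[ys, xs] = img[ys, xs].mean(axis=(0, 1))
    return out

frame = np.arange(256, dtype=np.uint8).reshape(16, 16)
# Box assumed to come from a face detector (hypothetical)
anonymized = pixelate_region(frame, (0, 0, 8, 8), block=4)
```

The same function works on colour images, because averaging over the first two axes leaves one mean per channel.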

To maintain data integrity and compliance, you can add model certification steps, such as Data Quality Reports (DQRs), and monitor the system continuously. This systematic approach supports compliance with regulatory standards while preserving data integrity. By prioritizing privacy and security, you can create machine vision systems that are both reliable and ethical.

Addressing Hardware and Resource Limitations

Hardware limitations often constrain the performance of machine vision systems. Cameras, sensors, and computing platforms must work together efficiently to meet system requirements. For example, high-resolution cameras improve image quality but demand more computational power. Similarly, lighting plays a crucial role in enhancing image clarity, which directly impacts accuracy.

To address these challenges, you can optimize hardware configurations and adopt energy-efficient solutions. Edge AI offers a promising approach by processing data locally, reducing latency and energy consumption. This decentralized model configuration minimizes reliance on cloud resources, making the system more scalable and sustainable.
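Edge deployments often shrink models with quantization, storing weights as 8-bit integers instead of 32-bit floats to cut memory use and, on supporting hardware, compute and energy. The sketch below shows symmetric per-tensor int8 quantization in NumPy. Deployment toolchains such as TensorRT or OpenVINO typically add calibration data and per-channel scales, which are omitted here; the function names are hypothetical.

```python
import numpy as np

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
weights = rng.normal(size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(weights)
print(weights.nbytes // q.nbytes)   # 4, i.e. four times less memory
max_error = float(np.max(np.abs(dequantize(q, scale) - weights)))
print(max_error, scale / 2)         # rounding error stays within about half a step
```

The worst-case error is half of one quantization step, which is why accuracy usually drops only slightly for well-behaved weight distributions.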

By carefully selecting hardware and leveraging advanced technologies, you can overcome resource constraints and build robust machine vision systems.

Automating Configuration with AI and Deep Learning

Automating the configuration of machine vision systems has become more accessible with advancements in AI and deep learning. These technologies allow you to streamline processes that were once time-consuming and prone to errors. By leveraging automation, you can reduce manual intervention and improve the overall efficiency of your system.

AI simplifies tasks like hyperparameter tuning, which optimizes the performance of your models. Instead of manually adjusting parameters, you can use AI-driven tools to find the best settings. This approach saves time and ensures consistent results. For example, automated tools can analyze data pipelines and recommend configurations that enhance both training and inference processes.
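Random search is one of the simplest automated tuning strategies: sample hyperparameter combinations, evaluate each, and keep the best. The sketch below assumes an `objective` function that trains a model with the given settings and returns a validation loss; here a toy function stands in for it, and the search space is hypothetical. Libraries such as Optuna or scikit-learn's `RandomizedSearchCV` provide more complete versions.

```python
import random

def random_search(objective, space: dict, n_trials: int = 30, seed: int = 0):
    """Sample settings uniformly from `space`; return the lowest-scoring ones."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {name: rng.choice(options) for name, options in space.items()}
        score = objective(params)          # e.g. validation loss after training
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical search space and a toy objective standing in for "train and validate"
space = {"learning_rate": [1e-1, 1e-2, 1e-3], "batch_size": [16, 32, 64]}

def toy_objective(p: dict) -> float:
    return abs(p["learning_rate"] - 1e-2) + abs(p["batch_size"] - 32) / 1000

best, score = random_search(toy_objective, space, n_trials=300)
print(best, score)
```

Fixing the seed makes a tuning run reproducible, which helps when comparing configurations across experiments.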

Automation also extends to data preparation. Data augmentation, for example, increases the diversity of your dataset by generating transformed copies of existing images, which improves the robustness of your models without requiring additional data collection. AI also supports real-time model updates, enabling your system to adapt to new environments or tasks with minimal downtime.
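The augmentation idea can be sketched with a few NumPy transforms applied at random to each training image. The specific choices here (horizontal flip, 90-degree rotations, and ±20% brightness jitter) and the function name are illustrative assumptions; use only transforms that preserve the label, and transform any bounding boxes along with the image.

```python
import numpy as np

def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly flip, rotate by a multiple of 90 degrees, and jitter brightness."""
    out = img.astype(np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1]                          # horizontal flip
    out = np.rot90(out, k=int(rng.integers(4)))     # 0, 90, 180 or 270 degrees
    out = out * rng.uniform(0.8, 1.2)               # brightness jitter of +/-20%
    return np.clip(out, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
image = np.arange(64, dtype=np.uint8).reshape(8, 8)
variants = [augment(image, rng) for _ in range(4)]   # four new training samples
```

For non-square images, 90-degree rotations swap height and width, so a pipeline with a fixed input size would restrict itself to 0 and 180 degrees.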

Another benefit of automation is the ability to monitor and optimize AI model inference. Automated systems can track performance metrics and make adjustments as needed. This ensures that your machine vision system remains accurate and efficient over time. For instance, in industrial settings, automated configuration can help detect defects faster and with greater precision.
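Automated monitoring can start as simply as tracking a rolling average of a quality signal, such as whether periodically spot-checked predictions were correct, and raising an alert when it drops below a threshold. The class below is a minimal sketch under that assumption; its name, the window size, and the threshold are hypothetical.

```python
from collections import deque

class RollingMonitor:
    """Rolling mean of a 0-1 quality signal with a low-water alert."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.values = deque(maxlen=window)   # oldest values drop off automatically
        self.alert_below = alert_below

    def update(self, value: float) -> bool:
        """Record one observation; return True if an alert should be raised."""
        self.values.append(value)
        window_full = len(self.values) == self.values.maxlen
        return window_full and self.mean() < self.alert_below

    def mean(self) -> float:
        return sum(self.values) / len(self.values)

monitor = RollingMonitor(window=5, alert_below=0.7)
# 1 = spot-checked prediction was correct, 0 = it was wrong (hypothetical feed)
alerts = [monitor.update(v) for v in [1, 1, 1, 1, 1, 0, 0]]
print(alerts)   # [False, False, False, False, False, False, True]
```

Waiting until the window is full avoids false alarms from the first few noisy observations.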

By automating these processes, you can focus on higher-level tasks, such as designing innovative applications or exploring new use cases. Automation not only saves time but also enhances the scalability and adaptability of your machine vision systems.

Real-World Applications of Machine Vision Systems

Autonomous Vehicles: Real-Time Object Detection and Navigation

Machine vision systems are transforming autonomous vehicles by enabling real-time object detection and navigation. These systems use cameras and sensors to identify objects like pedestrians, vehicles, and road signs. You can rely on them to make split-second decisions, ensuring safety and efficiency. For example, Tesla’s Autopilot uses advanced vision-based algorithms to detect lane markings and obstacles, allowing the vehicle to navigate complex environments.

Depth-sensing technologies such as LiDAR and radar complement cameras by providing 3D spatial awareness. This combination helps autonomous vehicles operate in varied conditions, including low light; radar in particular remains effective in fog, where cameras and LiDAR degrade. By integrating machine vision with AI, these systems can continue to learn and adapt, improving their performance over time.

Industrial Automation: Enhancing Quality Control

In industrial automation, machine vision systems play a vital role in enhancing quality control. They perform tasks like defect detection, assembly verification, and dimensional measurement with high precision. Unlike manual inspection, these systems work tirelessly and consistently, reducing errors and improving productivity.

For instance, Foxconn implemented a machine vision system to inspect handheld electronics. This system identified 13 types of defects, cutting the labor force for inspections by 50%. Automated optical inspection (AOI) further ensures flawless production by flagging deviations from master images.
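Comparing each part against a master image, often called golden-template comparison, can be sketched as a per-pixel difference followed by a threshold. The version below assumes the inspected image is already aligned to the golden image and captured under the same lighting; the function name and tolerance values are hypothetical.

```python
import numpy as np

def compare_to_golden(img: np.ndarray, golden: np.ndarray,
                      pixel_tol: int = 30, max_defect_px: int = 0):
    """Return (is_defective, mask) for an image aligned to the golden image."""
    # Signed arithmetic avoids uint8 wrap-around when subtracting
    diff = np.abs(img.astype(np.int16) - golden.astype(np.int16))
    mask = diff > pixel_tol
    return int(mask.sum()) > max_defect_px, mask

golden = np.full((10, 10), 100, dtype=np.uint8)
good = golden.copy()
good[0, 0] = 110          # small variation within tolerance
bad = golden.copy()
bad[2:4, 2:4] = 200       # a 2 x 2 pixel blemish
print(compare_to_golden(good, golden)[0])   # False
print(compare_to_golden(bad, golden)[0])    # True
```

The returned mask shows where the deviation is, which is useful for sorting defects by type or location.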

A study highlights the impact of machine vision across industries:

| Industry | Metric Description | Improvement Rate |
|---|---|---|
| Automotive | Reduction in defects compared to manual inspection | Up to 90% |
| Food & Beverage | Decrease in product recalls and contamination issues | 70% |
| Electronics | Improvement in defect detection rates | 60% |
| General | Productivity gains and operational cost reduction | Up to 30% (productivity) and 20% (cost) |

These systems not only improve inspection accuracy but also optimize operational costs, making them indispensable in modern manufacturing.

Healthcare: Imaging, Diagnostics, and Precision Medicine

Machine vision systems are revolutionizing healthcare by improving imaging, diagnostics, and precision medicine. You can see their impact in applications like disease detection, surgical assistance, and personalized treatment plans. Deep learning algorithms analyze medical images with remarkable accuracy, in some studies matching or surpassing human experts.

For example, an AI-based diagnostic system for diabetic retinopathy demonstrated exceptional effectiveness in clinical trials. It identified the condition with high accuracy, enabling early intervention and better patient outcomes. Another study compared deep learning algorithms with healthcare professionals, showing that these systems excel in detecting diseases from medical imaging.

| Study Title | Summary |
|---|---|
| Diagnostic accuracy of deep learning in medical imaging | Highlights the high diagnostic accuracy of deep learning techniques. |
| A comparison of deep learning performance against professionals | Demonstrates the effectiveness of machine vision in disease detection. |
| Pivotal trial of an autonomous AI-based diagnostic system | Showcases AI's role in detecting diabetic retinopathy. |
| AI using deep learning to screen for diabetic retinopathy | Validates the use of machine vision in improving healthcare outcomes. |
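Diagnostic studies like these usually report sensitivity (the share of diseased cases detected) and specificity (the share of healthy cases correctly cleared) rather than a single accuracy figure, because accuracy depends heavily on disease prevalence. The helper below computes these metrics from confusion-matrix counts; the function name and the counts in the example are hypothetical.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard screening metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),   # diseased cases correctly detected
        "specificity": tn / (tn + fp),   # healthy cases correctly cleared
        "ppv": tp / (tp + fp),           # positive predictive value
        "npv": tn / (tn + fn),           # negative predictive value
    }

# Hypothetical screening results for 1,000 patients (10% prevalence)
m = diagnostic_metrics(tp=90, fp=50, tn=850, fn=10)
print(round(m["sensitivity"], 3), round(m["specificity"], 3))   # 0.9 0.944
```

In this example the positive predictive value is only about 0.64 despite high sensitivity and specificity, which illustrates why prevalence matters when interpreting screening results.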

By integrating machine vision into healthcare, you can achieve faster diagnoses, reduce errors, and provide more personalized care. These advancements are paving the way for a future where technology and medicine work hand in hand.

Retail: Customer Behavior Analysis and Inventory Management

Machine vision is transforming retail by helping you understand customer behavior and manage inventory more effectively. These systems analyze customer movements, preferences, and purchase patterns, enabling you to create a more personalized shopping experience.

Heat map analytics, powered by machine vision, track how customers move through your store. Retailers like Tesco use this data to adjust store layouts in real time, optimizing the customer journey. Queue management also benefits from computer vision. By predicting queue lengths and wait times, you can allocate staff more efficiently, reducing customer frustration.
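Wait-time prediction can be grounded in Little's law, L = λW, which relates the long-run average number of people in a queue (L) to the arrival rate (λ) and the average wait (W). The sketch below assumes a vision system supplies the average queue length and arrival rate; the function names, the staffing rule, and the 85% target utilization are hypothetical simplifications.

```python
import math

def expected_wait_min(avg_queue_length: float, arrivals_per_min: float) -> float:
    """Little's law rearranged: W = L / lambda (valid for a stable queue)."""
    return avg_queue_length / arrivals_per_min

def cashiers_needed(arrivals_per_min: float, service_min_per_customer: float,
                    target_utilization: float = 0.85) -> int:
    """Fewest cashiers that keeps average utilization at or below the target."""
    busy_cashiers = arrivals_per_min * service_min_per_customer   # offered load
    return math.ceil(busy_cashiers / target_utilization)

# Camera counts an average of 6 people queuing; 2 customers arrive per minute
print(expected_wait_min(6, 2))   # 3.0 minutes
# Each checkout takes 2 minutes on average
print(cashiers_needed(2, 2))     # 5
```

Little's law describes averages only; short bursts of arrivals still need a buffer of spare staff.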

AI-based cameras provide deeper insights into customer preferences. They analyze where shoppers look and what they buy, helping you tailor promotions and product placements. For example, understanding which shelves attract the most attention allows you to position high-demand items strategically. These insights also enable you to anticipate consumer demand, ensuring your store stays stocked with the right products.

Inventory management becomes more precise with machine vision. Accurate demand forecasting minimizes stockouts and overstock situations. By analyzing historical sales data and market trends, you can maintain optimal inventory levels. This not only reduces waste but also ensures customers find what they need when they visit your store.
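A minimal demand forecast can be built with simple exponential smoothing, which weights recent sales more heavily than older ones. The sketch below pairs it with a hypothetical reorder rule that tops stock up to the forecast plus a safety stock; the function names, smoothing factor, and safety stock are assumptions to tune per product, and the on-hand count could come from shelf-scanning cameras.

```python
import math

def exp_smoothing_forecast(history, alpha: float = 0.3) -> float:
    """Simple exponential smoothing; returns the next-period forecast."""
    if not history:
        raise ValueError("history must not be empty")
    level = float(history[0])
    for demand in history[1:]:
        level = alpha * demand + (1 - alpha) * level   # blend new observation into the level
    return level

def reorder_quantity(forecast: float, on_hand: int, safety_stock: int) -> int:
    """Units to order so stock covers the forecast plus safety stock."""
    return max(0, math.ceil(forecast + safety_stock - on_hand))

forecast = exp_smoothing_forecast([10, 20], alpha=0.5)
print(forecast)                                               # 15.0
print(reorder_quantity(forecast, on_hand=10, safety_stock=5))  # 10
```

A larger `alpha` reacts faster to demand shifts but also passes more day-to-day noise into orders.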

Task automation is another advantage. Robots equipped with computer vision handle tasks like shelf scanning and restocking, freeing up your staff for more customer-focused activities. Additionally, machine vision cameras monitor occupancy levels, ensuring safety and compliance with regulations.

| Benefit | Example Use Case |
|---|---|
| Heat map analytics | Adjusting store layouts in real time |
| Queue management | Predicting wait times for better staffing |
| Demand forecasting | Minimizing stockouts and overstock |
| Task automation | Robots performing shelf scanning |

By leveraging machine vision, you can enhance customer satisfaction, streamline operations, and stay ahead in the competitive retail landscape.

Future Trends in Model Configuration for Machine Vision

Self-Configuring AI Models for Enhanced Adaptability

In 2025, self-configuring AI models are transforming how you approach machine vision systems. These models adapt to new tasks and environments without requiring manual intervention. They analyze incoming data, adjust their parameters, and optimize their performance in real time. This adaptability reduces the need for frequent retraining, saving you time and resources.

For example, a self-configuring model in a factory setting can detect changes in lighting conditions or hardware malfunctions. It recalibrates itself to maintain accuracy and ensure consistent results. This capability is especially useful in dynamic environments where conditions change frequently. By leveraging these models, you can achieve higher efficiency and better system reliability.
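The lighting-recalibration behavior can be illustrated with a simple feedback loop that adjusts camera exposure until the mean image brightness returns to a target. This is far simpler than a full self-configuring model: it assumes brightness scales roughly linearly with exposure time, and the function name, target, gain, and limits are hypothetical.

```python
def recalibrate_exposure(exposure_us: float, measured_mean: float,
                         target_mean: float = 128.0, gain: float = 0.5,
                         min_us: float = 10.0, max_us: float = 20000.0) -> float:
    """One proportional step toward the exposure that would hit target_mean,
    assuming mean brightness is proportional to exposure time."""
    if measured_mean <= 0:
        return max_us                                   # black frame: open up fully
    ideal = exposure_us * target_mean / measured_mean   # exposure that would hit the target
    new = exposure_us + gain * (ideal - exposure_us)    # move part of the way to damp noise
    return min(max(new, min_us), max_us)

# Before the drop, 1000 us gave a mean of 128; lighting then halves (hypothetical)
exposure = 1000.0
for _ in range(30):
    measured = min(255.0, 0.064 * exposure)   # 0.064 brightness units per microsecond
    exposure = recalibrate_exposure(exposure, measured)
print(round(exposure))   # 2000
```

A gain below 1 trades convergence speed for stability, so a single noisy frame cannot swing the exposure wildly.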

Quantum Computing’s Role in Machine Vision Systems

Quantum computing may open new possibilities for machine vision. For certain classes of problems, quantum algorithms promise speedups over classical methods, which could help with optimization tasks that are impractical today. For instance, quantum optimization algorithms could be applied to searching for better hardware configurations.

In training and inference, researchers are exploring whether quantum computing can accelerate deep learning, allowing larger datasets to be analyzed in less time. If these speedups materialize, they would shorten model development, with healthcare and autonomous vehicles among the likely beneficiaries. Proponents also expect quantum computing to support energy-efficient solutions, in line with the growing demand for sustainable technologies.

Edge AI and Decentralized Model Configuration

Edge AI is revolutionizing machine vision by decentralizing model configuration. Instead of relying on centralized servers, you can process data locally at the edge. This approach reduces latency, enhances privacy, and improves reliability.

Decentralized systems operate across multiple edge locations, enabling real-time analysis and decision-making. Federated learning allows models to learn from on-site data while preserving privacy. By processing data locally, you minimize bandwidth usage and scale your operations efficiently.

| Feature | Description |
|---|---|
| Decentralized processing | AI operates across edge locations, enabling real-time analysis and decision-making. |
| Federated learning | Supports localized models that learn from on-site data while preserving privacy. |
| Bandwidth efficiency | Minimizes bandwidth usage by processing data locally instead of sending it to a central location. |
| Scalability | Ideal for organizations running AI at scale without centralizing compute resources. |
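Federated learning is commonly implemented with federated averaging (FedAvg): each site trains on its own data and shares only model parameters, which a coordinator averages, weighting each site by its number of training samples. The sketch below shows only that aggregation step, with models represented as hypothetical lists of NumPy arrays (one per layer).

```python
import numpy as np

def federated_average(client_models, client_sizes):
    """FedAvg aggregation: size-weighted mean of each parameter array.
    client_models: one list of NumPy arrays (layers) per site."""
    total = float(sum(client_sizes))
    averaged = []
    for layer_idx in range(len(client_models[0])):
        layer = sum(model[layer_idx] * (size / total)
                    for model, size in zip(client_models, client_sizes))
        averaged.append(layer)
    return averaged

# Two hypothetical sites; raw images never leave either site
site_a = [np.array([1.0, 1.0]), np.array([0.0])]
site_b = [np.array([3.0, 3.0]), np.array([4.0])]
global_model = federated_average([site_a, site_b], client_sizes=[100, 300])
print(global_model)   # layer values 2.5, 2.5 and 3.0
```

Weighting by sample count keeps a small site from pulling the shared model as strongly as a large one.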

Edge AI also addresses hardware limitations by distributing computational tasks. This setup ensures that your system performs well even in resource-constrained environments. By adopting edge AI, you can build scalable, efficient, and privacy-focused machine vision systems.

Sustainability and Energy-Efficient Configurations

Sustainability has become a critical focus for machine vision systems in 2025. You can achieve energy-efficient configurations by optimizing hardware, software, and operational processes. These efforts not only reduce environmental impact but also lower operational costs.

One key area to address is lighting. Machine vision systems rely heavily on proper lighting to capture high-quality images. However, traditional lighting setups consume significant energy. By switching to energy-efficient LED lighting, you can cut power usage by up to 70%. Smart lighting systems further enhance efficiency by adjusting brightness and color temperature based on real-time needs. This reduces waste and ensures optimal performance.

AI plays a pivotal role in sustainability efforts. AI algorithms can monitor energy consumption and suggest optimizations. For example, AI can dynamically adjust the power usage of cameras and sensors during low-activity periods. This approach minimizes energy waste without compromising system performance.
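Reducing camera activity during quiet periods can be sketched with frame differencing: if consecutive frames barely change, the system drops to a low frame rate. The function name, threshold, and frame rates below are hypothetical, and how a frame rate is actually applied depends on your camera's SDK.

```python
import numpy as np

def choose_frame_rate(prev: np.ndarray, curr: np.ndarray, motion_threshold: float = 5.0,
                      active_fps: int = 30, idle_fps: int = 2) -> int:
    """Pick a frame rate from the mean absolute difference between frames."""
    # Signed arithmetic avoids uint8 wrap-around when subtracting frames
    motion = float(np.mean(np.abs(curr.astype(np.int16) - prev.astype(np.int16))))
    return active_fps if motion > motion_threshold else idle_fps

still = np.zeros((8, 8), dtype=np.uint8)
moving = np.full((8, 8), 100, dtype=np.uint8)
print(choose_frame_rate(still, still))    # 2  (idle: save power)
print(choose_frame_rate(still, moving))   # 30 (activity detected)
```

Frame differencing is cheap enough to run continuously, so the savings from idle periods are not eaten up by the check itself.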

Another strategy involves leveraging edge computing. By processing data locally, you reduce the need for energy-intensive cloud operations. This not only lowers power consumption but also improves system responsiveness. Additionally, you can adopt modular hardware designs, which allow you to upgrade components without replacing entire systems. This reduces electronic waste and extends the lifespan of your equipment.

Tip: Regular maintenance of your machine vision system ensures that components like cameras and lighting operate at peak efficiency. This simple step can significantly reduce energy usage over time.

By prioritizing sustainability, you contribute to a greener future while maintaining the performance and reliability of your machine vision systems. These energy-efficient practices align with both environmental goals and business objectives.

Model configuration defines how machine vision systems perform and adapt. By focusing on innovative tools and ethical practices, you can ensure these systems meet industry demands. Future trends like self-configuring AI and edge computing offer exciting opportunities to enhance adaptability. Prioritizing quality in every aspect of configuration unlocks the full potential of machine vision systems. When you master these techniques, your business stays competitive and delivers exceptional results.

FAQ

What is the role of hardware in machine vision systems?

Hardware captures and processes visual data. Cameras, sensors, and computing platforms work together to ensure your system performs tasks like object detection or image analysis. Choosing the right hardware improves accuracy and efficiency, especially in challenging environments.

How does AI simplify model configuration?

AI automates tasks like hyperparameter tuning and data preparation. It analyzes data pipelines and suggests optimal configurations. This reduces manual effort and ensures consistent results. You can use AI to adapt your system to new tasks or environments with minimal downtime.

Why is data annotation important for machine vision?

Data annotation teaches your model to recognize patterns. High-quality annotations improve accuracy during training and inference. Tools like bounding boxes or segmentation masks help label images effectively. Better annotations lead to better predictions and fewer errors.

Can machine vision systems operate in low-light conditions?

Yes, with proper lighting and sensors. Technologies like infrared cameras or LiDAR enhance visibility in low-light environments. Adjusting brightness and color temperature ensures your system captures clear images, even in challenging conditions.

How does edge AI improve machine vision systems?

Edge AI processes data locally, reducing latency and bandwidth usage. It enhances privacy by keeping sensitive data on-site. You can scale operations efficiently and maintain real-time analysis without relying on centralized servers.
