What You Need to Know About Natural Language Understanding in 2025

Natural Language Understanding (NLU) continues to change how machines interpret human communication. The global conversational AI market is growing rapidly and is projected to reach $58.37 billion by 2031. Much of this progress comes from NLU's ability to integrate with technologies like computer vision, forming systems that process text, speech, and images together. For instance, a luxury consignment marketplace recently enhanced its authentication process using a system that combines NLU with machine vision, saving $2 million annually and reducing errors. By extending what AI can do, NLU is transforming industries from customer service to healthcare and shaping the way you interact with technology.

Key Takeaways

- Natural Language Understanding (NLU) helps computers understand human language better.

- Conversational AI is growing quickly in 2025, helping businesses improve customer service.

- NLU uses techniques like input embeddings and attention mechanisms to interpret language accurately.

- Sentiment analysis with NLU shows how customers feel, helping companies make smarter choices.

- Combining NLU with image recognition makes systems smarter, improving how they work with text and pictures.

Understanding Natural Language Understanding (NLU)

What is NLU and why is it important?

Natural Language Understanding (NLU) is a branch of artificial intelligence that focuses on enabling machines to comprehend human language in a meaningful way. Unlike simple text processing, NLU goes deeper by interpreting the intent behind words, understanding context, and even recognizing emotions. This capability allows machines to interact with you in a way that feels natural and intuitive.

Why is NLU so important? It forms the backbone of many AI applications you encounter daily. Virtual assistants like Siri and Alexa rely on NLU to understand your commands. Chatbots use it to provide accurate responses. In 2025, its importance has grown even further, as industries like healthcare, education, and manufacturing adopt NLU to improve efficiency and user experience.

Did you know? Statistical methods play a crucial role in NLU. These methods analyze large datasets to extract patterns and linguistic structures. For example:

- Empirical approaches in NLU rely on exposure to real-world data, helping machines learn language patterns.

- Stochastic techniques, which use probability-based models, have proven highly effective in understanding natural language.

Differences between NLU, NLP, and NLG

You might have heard terms like NLP, NLU, and NLG used interchangeably, but they are not the same. Here's how they differ:

| Term | Full Form | Purpose | Example |
|---|---|---|---|
| NLU | Natural Language Understanding | Focuses on understanding the meaning and intent behind text or speech. | A chatbot interpreting "I need help with my order" as a request for customer support. |
| NLP | Natural Language Processing | Encompasses all tasks related to processing human language, including NLU and NLG. | Translating a document from English to Spanish. |
| NLG | Natural Language Generation | Involves generating human-like text based on data or input. | An AI writing a product description for an e-commerce site. |

While NLP serves as the umbrella term, NLU and NLG are specialized components. NLU helps machines understand you, while NLG enables them to respond in a way that makes sense.

Role of NLU in human-computer interaction

NLU has transformed how you interact with technology. It bridges the gap between human language and machine understanding, making communication seamless. Whether you're asking a virtual assistant to play your favorite song or using a chatbot to troubleshoot an issue, NLU ensures the system understands your intent and provides relevant responses.

Recent advancements in NLU have made these interactions even more sophisticated. For instance:

- Advanced language models like GPT and BERT have improved text understanding, enabling machines to grasp complex queries with high accuracy.

- In manufacturing, NLU adoption has grown significantly, with a compound annual growth rate (CAGR) of 28.4% between 2020 and 2023. By 2027, the global market for NLP in manufacturing is projected to reach $4.3 billion.

Case in point: A study by Ribeiro et al. introduced a tool called CheckList to test NLP models. It revealed that while these models excel at specific tasks, they often struggle with nuances in human language. This highlights the ongoing challenge of making AI systems more human-centric.

As NLU continues to evolve, its role in human-computer interaction will only expand. From personalized customer experiences to smarter healthcare solutions, the possibilities are endless.

How Natural Language Understanding Works

Key components of NLU systems

Natural Language Understanding (NLU) systems rely on several key components to process and interpret human language effectively. Each component plays a specific role in transforming raw text into meaningful insights.

| Key Component | Description |
|---|---|
| Input Embedding | Converts text into numerical data for machine processing. |
| Transformer Layers | Processes data using self-attention mechanisms to identify relationships. |
| Attention Mechanisms | Focuses on the most relevant parts of the input for better understanding. |
| Output Decoding | Translates processed data back into human-readable text. |

These components work together seamlessly. For example, input embedding prepares the text for analysis, while attention mechanisms ensure the system focuses on the most important details. This collaboration allows NLU systems to deliver accurate results in real-world applications.
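To make the embedding and attention steps concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. The two-dimensional embeddings are made-up values for illustration, and the sketch skips the learned query, key, and value projections that real transformer layers use, so treat it as a simplified picture rather than a production design.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product self-attention.

    Assumption: queries, keys, and values are all the raw embeddings
    (no learned projection matrices), which keeps the sketch short.
    """
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        # Context vector: weighted average of all token embeddings.
        context = [
            sum(w * vec[i] for w, vec in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings, chosen only for illustration.
toy_embeddings = {"I": [1.0, 0.0], "love": [0.0, 1.0], "AI": [1.0, 1.0]}
tokens = list(toy_embeddings)
vectors = [toy_embeddings[t] for t in tokens]

contexts, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sentence. The rows sum to 1, which is what lets the system weigh some parts of the input more heavily than others.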

Tokenization and syntactic analysis

Tokenization and syntactic analysis form the foundation of NLU systems. Tokenization breaks text into smaller units, such as words or phrases, making it easier to analyze. For instance, the sentence "I love AI" would be split into three tokens: "I," "love," and "AI."

Syntactic analysis, on the other hand, examines the grammatical structure of sentences. It identifies relationships between words, such as subject-verb-object connections. This step helps NLU systems understand how words interact within a sentence.

- Tokenization simplifies text for processing.

- Syntactic analysis ensures the system grasps sentence structure.

Together, these processes enable NLU systems to comprehend language more effectively, supporting tasks like text classification and part-of-speech tagging.
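The two steps above can be sketched with the Python standard library. The regular expression, the three-word tag lexicon, and the `find_svo` helper are all illustrative assumptions; production systems use trained tokenizers and parsers rather than a hand-written lookup.

```python
import re

def tokenize(text):
    """Split text into word tokens and standalone punctuation tokens."""
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)

# Hypothetical part-of-speech lexicon covering only the example sentence.
TOY_LEXICON = {"i": "PRON", "love": "VERB", "ai": "NOUN"}

def tag_tokens(tokens, lexicon=TOY_LEXICON):
    """Attach a part-of-speech tag to each token, or UNK if unknown."""
    return [(tok, lexicon.get(tok.lower(), "UNK")) for tok in tokens]

def find_svo(tagged):
    """Return the first (subject, verb, object) triple found, else None.

    This only matches three adjacent tokens, a deliberately crude
    stand-in for real syntactic parsing.
    """
    nominal = {"PRON", "NOUN"}
    for (s, s_tag), (v, v_tag), (o, o_tag) in zip(tagged, tagged[1:], tagged[2:]):
        if s_tag in nominal and v_tag == "VERB" and o_tag in nominal:
            return (s, v, o)
    return None

tokens = tokenize("I love AI")
tagged = tag_tokens(tokens)
print(tokens)            # ['I', 'love', 'AI']
print(find_svo(tagged))  # ('I', 'love', 'AI')
```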

Intent recognition and context understanding

Intent recognition allows NLU systems to determine the purpose behind a user's input. For example, when you say, "What's the weather like today?" the system identifies your intent as a request for weather information. Context understanding enhances this process by considering previous interactions or surrounding text.

Recent advancements in machine learning algorithms have significantly improved these capabilities. Multi-turn intent classification, for instance, boosts accuracy by summarizing user intents across multiple exchanges. Additionally, techniques like summarization help systems maintain context, ensuring they respond appropriately even in complex conversations.

These improvements make NLU systems more reliable and efficient, especially in applications like virtual assistants and customer support.
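As a rough illustration of intent recognition with context, the sketch below scores an utterance against keyword sets and falls back to the previous turn's intent when nothing matches. The intent names and keyword lists are hypothetical, and real systems use trained classifiers instead of keyword overlap.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_KEYWORDS = {
    "get_weather": {"weather", "forecast", "rain", "temperature"},
    "order_support": {"order", "refund", "delivery", "package"},
}

def words(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))

def classify(utterance, previous_intent=None):
    """Pick the intent with the most keyword matches.

    If no keyword matches, reuse the previous turn's intent so that
    short follow-up questions keep their context.
    """
    tokens = words(utterance)
    scores = {name: len(tokens & kws) for name, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    if scores[best] > 0:
        return best
    return previous_intent or "unknown"

first = classify("What's the weather like today?")
follow_up = classify("And tomorrow?", previous_intent=first)
print(first, follow_up)  # get_weather get_weather
```

The follow-up "And tomorrow?" contains no weather vocabulary, so only the carried-over context lets the system interpret it correctly.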

Named Entity Recognition (NER) and its applications

Named Entity Recognition (NER) is a critical component of natural language understanding. It identifies and classifies specific entities in text, such as names, dates, locations, and organizations. By extracting these entities, NER helps machines make sense of unstructured data, enabling more accurate and meaningful interactions.

You encounter NER in many everyday applications. For example, when you search for a restaurant, your virtual assistant uses NER to recognize the restaurant's name and location. In healthcare, NER extracts clinical terms from medical records, helping doctors analyze patient data more efficiently. It also plays a vital role in financial systems by identifying company names and transaction details in documents.
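A minimal rule-based sketch shows the basic idea of entity extraction. The patterns and labels below are assumptions chosen for the example; modern NER systems learn entities such as names and organizations from annotated data rather than relying on regular expressions.

```python
import re

# Hypothetical patterns for three easy-to-match entity types.
PATTERNS = {
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "ORDER_ID": re.compile(r"#\d+"),
    "MONEY": re.compile(r"\$\d+(?:\.\d{2})?"),
}

def extract_entities(text):
    """Return (entity_text, label) pairs in the order they appear."""
    found = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), match.group(), label))
    found.sort()  # sort by position in the text
    return [(entity, label) for _, entity, label in found]

message = "Order #48213 placed on 2025-03-14 cost $59.99."
for entity, label in extract_entities(message):
    print(f"{label}: {entity}")
```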

Recent advancements have made NER systems more powerful. Researchers developed BioEN, a Python package designed to extract biomedical entities from unstructured texts. This tool achieved state-of-the-art results, with F1 scores around 90 percent on benchmark datasets. Its applications extend to epidemiology, where it helps track infectious disease spread by analyzing clinical entities and demographics.

| Evidence Description | Findings |
|---|---|
| Development of BioEN | A new Python package for extracting biomedical named entities. |
| Performance Evaluation | Achieved state-of-the-art results with F1 scores around 90 percent. |
| Application Scope | Aids epidemiologists in analyzing infectious disease spread. |
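For readers unfamiliar with the F1 score mentioned above, it is the harmonic mean of precision and recall. The short sketch below computes it from entity counts; the counts are invented for illustration and are not BioEN's actual results.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """Compute F1 as the harmonic mean of precision and recall."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted  # share of predictions that are right
    recall = true_positives / actual        # share of real entities that were found
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts: 90 entities found correctly, 10 spurious, 10 missed.
score = f1_score(true_positives=90, false_positives=10, false_negatives=10)
print(f"F1 = {score:.2f}")
```

Because F1 penalizes both spurious and missed entities, a score near 90 percent means the system is doing well on both fronts, not just one.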

NER's versatility makes it indispensable across industries. In customer service, it powers chatbots by identifying user-specific details like names or order numbers. In legal tech, it extracts clauses and legal terms from contracts, saving time for lawyers. These applications demonstrate how entity recognition enhances efficiency and decision-making in various fields.

Tip: As NER technology evolves, its ability to handle multilingual data and complex contexts will continue to improve, opening doors to even more innovative applications.

Applications of Natural Language Understanding in 2025

Conversational AI and virtual assistants

In 2025, conversational AI and virtual assistants have become indispensable tools for businesses and consumers alike. These systems leverage natural language understanding to interpret your intent and provide accurate, context-aware responses. Whether you're asking a virtual assistant to schedule a meeting or using a chatbot to resolve a billing issue, NLU ensures the interaction feels seamless and human-like.

The effectiveness of conversational AI in improving customer engagement is backed by impressive data. For example:

| Source | Evidence | Result |
|---|---|---|
| Sephora | AI-powered chatbot and recommendation engine | 11% increase in conversion rates |
| Bank of America | AI virtual assistant 'Erica' | 2 billion interactions, 98% query resolution in 44 seconds, 56 million engagements per month |
| General Consumer Survey | Consumer comfort with AI | 65% comfortable using AI for ordering, 59% for returns |
| McKinsey | AI-powered personalization | Up to 15% increase in revenue |
| General Business Insight | Conversational AI | Expected to handle 95% of client interactions in 2025, 25% boost in operational efficiency |

These advancements highlight how conversational AI enhances customer experiences while driving business growth. For instance, Bank of America's virtual assistant, Erica, resolves queries in under a minute, showcasing the speed and efficiency of modern NLU systems. As conversational AI continues to evolve, you can expect even more personalized and intuitive interactions across industries.

Sentiment analysis for actionable insights

Sentiment analysis, powered by NLU, enables businesses to understand how customers feel about their products, services, or brand. By analyzing text data from reviews, social media, and surveys, sentiment analysis tools identify patterns and trends that help you make informed decisions.

The impact of sentiment analysis on modern business environments is significant:

| Insight | Description |
|---|---|
| 83% of software companies | Report significant improvements in customer satisfaction metrics within the first year of deployment. |
| Predictive accuracy | Sentiment analysis predicts software feature adoption rates with 74% accuracy, reducing development risk. |
| Time-to-market reduction | Companies using sentiment-driven development cycles reduce time-to-market by 37% on average. |
| ROI | Businesses integrating sentiment analysis report an average ROI of 287% over three years. |

For example, by analyzing early user feedback, companies can predict which features will succeed, reducing wasted resources and accelerating product launches. Sentiment analysis also helps you identify areas for improvement, ensuring your offerings align with customer expectations. This application of NLU not only boosts efficiency but also strengthens customer loyalty.
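To show how feedback text can be turned into a sentiment signal, here is a minimal lexicon-based scorer with simple negation handling. The word lists are small hypothetical samples; commercial tools typically rely on trained models rather than fixed lists.

```python
import re

# Hypothetical sentiment lexicons, kept tiny for illustration.
POSITIVE = {"great", "love", "fast", "helpful"}
NEGATIVE = {"slow", "broken", "confusing", "hate"}
NEGATORS = {"not", "never", "no"}

def sentiment_score(text):
    """Sum word polarities, flipping a word's sign after a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score

def sentiment_label(text):
    """Map the numeric score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

reviews = [
    "I love the new dashboard, it is fast",
    "The export feature is not helpful and feels slow",
    "It works",
]
for review in reviews:
    print(sentiment_label(review), "|", review)
```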

Machine translation and multilingual communication

Machine translation has revolutionized global communication by breaking down language barriers. In 2025, advancements in NLU have made these systems more accurate and reliable, enabling you to communicate effortlessly across languages. Whether you're collaborating with international teams or accessing content in a foreign language, machine translation ensures clarity and understanding.

Recent studies validate the effectiveness of machine translation systems. For instance, ChatGPT outperformed other tools like Claude and AWS Translate in translating multilingual bug reports. It retained both lexical fidelity and semantic integrity, making it particularly useful in technical fields like software engineering. Additionally:

- Research shows bilingual users trust machine translation tools more in specialized contexts, such as medical and legal fields.

- Late bilinguals are better at detecting translation errors, especially when translating evidentiality between Turkish and English.

These findings highlight the growing trust in machine translation systems and their ability to handle complex linguistic nuances. By integrating NLU with machine translation, businesses can expand their reach, improve collaboration, and enhance customer experiences on a global scale.

Integration with computer vision for multimodal AI systems

The integration of natural language understanding with computer vision has transformed how machines interpret and interact with the world. By combining these two technologies, multimodal AI systems can process both text and images simultaneously, creating a deeper understanding of context. This capability allows machines to perform tasks that were once impossible or highly error-prone.

For example, imagine a system analyzing a photo of a riverbank while processing the caption "A family is fishing by the bank." Without integration, the system might struggle to determine whether "bank" refers to a financial institution or the edge of a river. By cross-referencing visual patterns in the image with the language context, the system can accurately identify the intended meaning. This ability to resolve ambiguities makes multimodal AI systems more reliable and effective.
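The "bank" example can be sketched as a lookup that compares labels detected in the image against cue words for each sense. The cue sets are hypothetical, and the image labels are assumed to come from a separate image classifier that is not shown here.

```python
# Hypothetical visual cues associated with each sense of an ambiguous word.
SENSE_CUES = {
    "bank": {
        "river edge": {"river", "water", "fishing rod", "grass"},
        "financial institution": {"building", "atm", "money", "teller"},
    }
}

def disambiguate(word, image_labels):
    """Choose the word sense whose cues overlap most with the image labels.

    image_labels is assumed to be the output of an image classifier.
    Returns None when the image offers no supporting evidence.
    """
    labels = {label.lower() for label in image_labels}
    overlap = {
        sense: len(cues & labels)
        for sense, cues in SENSE_CUES[word].items()
    }
    best = max(overlap, key=overlap.get)
    return best if overlap[best] > 0 else None

caption_word = "bank"
detected = ["River", "fishing rod", "people"]
print(disambiguate(caption_word, detected))  # river edge
```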

Multimodal AI systems offer several benefits:

- They improve accuracy by combining information from multiple sources. Tasks like object recognition and sentiment analysis become more precise.

- They enhance human-computer interaction by enabling systems to understand gestures, voice commands, and visual cues.

- They provide a better understanding of real-world scenarios by processing diverse data sources together.

These systems also excel in real-time data processing. For instance, a self-driving car equipped with multimodal AI can analyze traffic signs, road conditions, and spoken navigation commands simultaneously. This ensures safer and more efficient decision-making on the road.

Advancements in deep learning models have played a crucial role in this progress. Modern models can handle vast amounts of data from different modalities, learning complex patterns and relationships. This has led to breakthroughs in areas like healthcare, where multimodal AI systems assist doctors by analyzing medical images alongside patient records. In retail, these systems enhance customer experiences by combining visual product searches with natural language queries.

The integration of NLP and computer vision has also opened new possibilities for accessibility. For example, systems designed for visually impaired users can describe their surroundings by combining image recognition with natural language understanding. This empowers users to navigate their environment more independently.

As these technologies continue to evolve, their potential applications will expand further. From autonomous vehicles to personalized education tools, the integration of NLP and computer vision is shaping the future of AI. By leveraging the strengths of both modalities, these systems are creating smarter, more intuitive solutions for real-world challenges.

Did you know? Multimodal AI systems not only process data more effectively but also make interactions feel more natural. For instance, a virtual assistant that understands both your words and gestures can provide a more seamless user experience.

Future of NLP and Computer Vision Integration

Advancements in deep learning and multimodal AI

Deep learning has revolutionized how machines process multimodal data, combining text, images, and audio into unified systems. You benefit from models that can represent and process diverse data types uniformly, enabling tasks like object recognition and language understanding to work together seamlessly. For example, multimodal fusion techniques combine visual and textual data, improving the accuracy of AI systems.

Modern AI systems also excel at multitask learning, allowing them to perform multiple tasks simultaneously. This capability enhances efficiency, especially in industrial applications where systems analyze RGB images and LiDAR scans to optimize production processes. Multimodal alignment further improves performance by ensuring data from different sources works cohesively.

The shift toward data-centric AI emphasizes the importance of high-quality datasets. Large-scale multimodal models now generate narratives about complex processes, showcasing the integration of NLU and computer vision. These advancements highlight the future of NLP and computer vision integration, where AI systems achieve superior performance and emergent capabilities.

| Aspect | Description |
|---|---|
| Multimodal Data Representation | Models process diverse data types uniformly. |
| Multimodal Fusion | Combines visual and textual data for improved accuracy. |
| Multitask Learning | Performs multiple tasks simultaneously using multimodal data. |
| Multimodal Alignment | Aligns data from different modalities for better performance. |
| Multimodal Transfer Learning | Transfers knowledge between modalities to enhance learning efficiency. |
| Zero-shot Learning | Generalizes to unseen tasks without additional training. |
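One simple form of multimodal fusion is late fusion, where each modality produces its own class probabilities and the results are combined afterward. The sketch below uses a weighted average; the probabilities and the 0.5 weight are made-up values, and many systems instead fuse learned features earlier in the model.

```python
def late_fusion(text_probs, image_probs, text_weight=0.5):
    """Combine per-class probabilities from two modalities.

    Assumption: each input maps class labels to probabilities produced
    by a separate text model and image model (not shown here).
    """
    labels = set(text_probs) | set(image_probs)
    fused = {
        label: text_weight * text_probs.get(label, 0.0)
        + (1.0 - text_weight) * image_probs.get(label, 0.0)
        for label in labels
    }
    total = sum(fused.values())
    if total == 0:
        return fused
    # Renormalize so the fused scores sum to 1.
    return {label: value / total for label, value in fused.items()}

# Hypothetical outputs: the text alone is ambiguous, the image is not.
text_probs = {"river edge": 0.5, "financial institution": 0.5}
image_probs = {"river edge": 0.9, "financial institution": 0.1}

fused = late_fusion(text_probs, image_probs)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))  # river edge 0.7
```

The text model alone cannot decide between the two senses, but adding the image evidence tips the fused result clearly toward one of them.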

Real-time processing and scalability challenges

Real-time processing in multimodal AI systems faces challenges that require innovative solutions. You encounter issues like data integration complexity, biases, and trust in outputs. For example, integrating diverse data types can lead to inefficiencies, while traditional metrics may fail to capture interactions between modalities.

To address these challenges, robust data integration tools and real-time monitoring systems are essential. These tools ensure consistent performance across modalities, improving real-time applications. Companies like Adobe and Google have adopted frameworks to enhance real-time insights and scalability. Adobe transitioned to continuous feedback systems, while Google implemented OKRs to align goals and track progress effectively.

- Adobe: Continuous feedback improved employee engagement.

- Google: OKRs fostered accountability and performance tracking.

- Microsoft: Growth mindset reviews enhanced satisfaction and productivity.

These examples demonstrate how real-time solutions can overcome scalability challenges, ensuring AI systems remain efficient and reliable.

Ethical considerations in AI systems

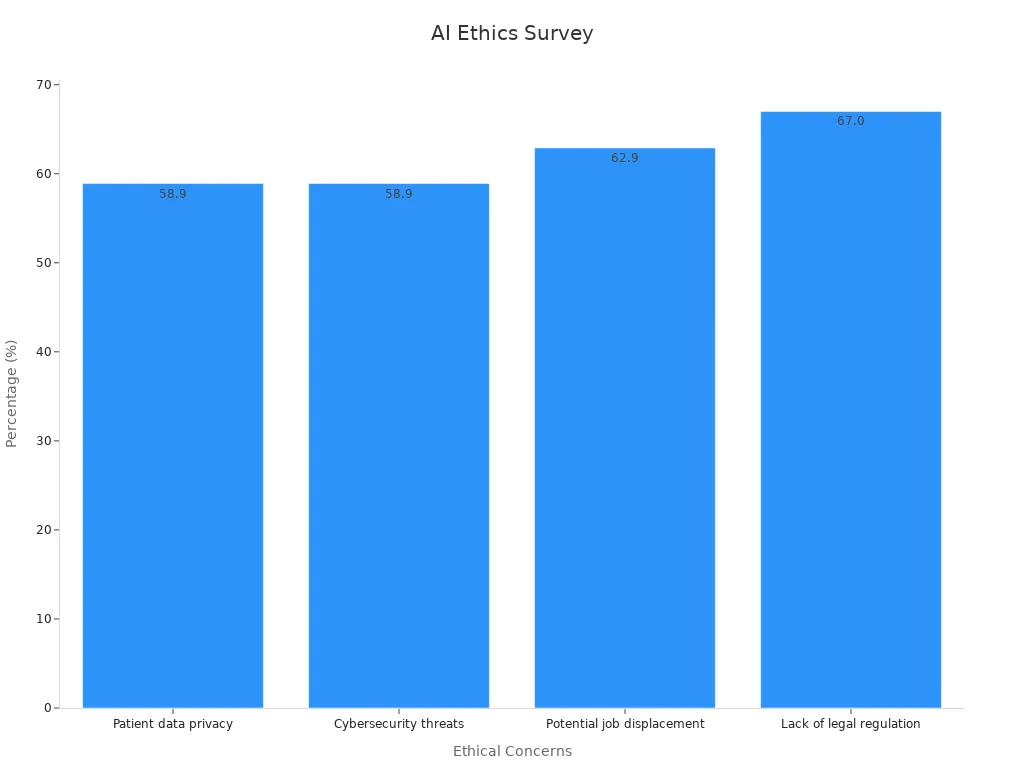

Ethical concerns in AI systems are growing as technology advances. You must consider issues like patient data privacy, cybersecurity threats, and job displacement. For instance, 58.9% of respondents worry about privacy, while 62.9% express concerns about job displacement.

Lack of legal regulation remains the most significant concern, with 67% of respondents highlighting this issue. Addressing these challenges requires transparent policies and robust security measures. AI systems must prioritize fairness and accountability to build trust among users.

By tackling these ethical considerations, you ensure AI systems contribute positively to society. The future of NLP and computer vision integration depends on balancing innovation with responsibility.

Emerging use cases in autonomous systems and healthcare

Autonomous systems and healthcare are transforming rapidly with the help of natural language understanding (NLU). These technologies are creating smarter solutions that improve efficiency and patient outcomes. You can already see their impact in areas like fraud detection, claims processing, and disease prevention.

In healthcare, intelligent bots are revolutionizing administrative tasks. These bots log every action within systems, ensuring transparency and reducing errors. AI models predict potential fraud by analyzing patterns in claims data. Hyperautomation, which combines AI, robotic process automation (RPA), and computer vision, automates repetitive processes. This integration speeds up claims processing by 40% and improves data security by 25%.

AI also plays a critical role in patient care. It analyzes medical records and lab data to detect early signs of chronic diseases. This proactive approach helps doctors intervene sooner, improving patient outcomes. For example, AI systems can identify subtle changes in lab results that might indicate diabetes or heart disease. By catching these signs early, you can receive treatment before conditions worsen.

| Evidence Description | Quantitative Metric |
|---|---|
| Reduction in fraudulent claims | 30% within six months |
| Improvement in data security | 25% |
| Faster claims processing | 40% increase in efficiency |

Autonomous systems are also making strides in transportation and logistics. Self-driving vehicles equipped with NLU and computer vision navigate complex environments with precision. These systems analyze road conditions, traffic patterns, and spoken commands to make real-time decisions. This technology reduces accidents and improves delivery efficiency.

The integration of NLU in healthcare and autonomous systems is reshaping industries. You benefit from faster processes, better security, and improved care. As these technologies evolve, their applications will expand, offering even more innovative solutions for everyday challenges.

Natural language understanding (NLU) in 2025 has reached remarkable milestones, transforming how machines interpret and respond to human communication. The global NLU market is on a rapid growth trajectory, projected to reach USD 286.6 billion by 2033, with a CAGR of 32.6%. This growth reflects its increasing adoption across industries, particularly in North America and Southeast Asia, where major players like IBM Watson and Amazon AWS drive innovation. You can see its impact in applications like conversational AI, sentiment analysis, and multilingual communication, which enhance productivity and user experiences.

The integration of NLU with computer vision has unlocked new possibilities. Intelligent systems now analyze multimedia content by combining visual recognition with language understanding. Robots equipped with these technologies can interpret environments and follow complex instructions, making them more effective in real-world scenarios. These advancements highlight how NLU and computer vision together create smarter, more intuitive systems.

As AI continues to evolve, you have the opportunity to explore its future potential. With 80% of the U.S. workforce expected to see AI influence their tasks, embracing these technologies can help you stay ahead. Whether you're a business leader or a curious learner, understanding NLU's capabilities will empower you to harness its benefits and shape a more innovative future.

FAQ

What is the difference between natural language processing and natural language understanding?

Natural language processing is a broader field that includes tasks like text generation, translation, and understanding. Natural language understanding focuses specifically on interpreting the meaning and intent behind text or speech. It enables machines to comprehend human language in a meaningful way.

How does computer vision enhance natural language understanding?

Computer vision allows systems to analyze images and videos alongside text. By combining these capabilities, machines can understand context better. For example, a system can interpret a photo and its caption together, resolving ambiguities and improving accuracy in tasks like object recognition or sentiment analysis.

Why are large language models important for NLU?

Large language models improve NLU by processing vast amounts of data. They learn patterns, context, and relationships in language, enabling machines to understand complex queries. These models enhance applications like chatbots, virtual assistants, and sentiment analysis, making interactions more accurate and human-like.

What role does entity recognition play in NLU?

Entity recognition identifies specific elements in text, such as names, dates, or locations. It helps systems extract meaningful information from unstructured data. For example, in healthcare, it identifies medical terms in patient records, improving data analysis and decision-making.

How does machine learning technology improve NLU systems?

Machine learning technology enables NLU systems to learn from data and improve over time. It powers tasks like intent recognition, context understanding, and entity extraction. These advancements make NLU systems more accurate and efficient in applications like customer support and multilingual communication.
