How Neural Networks Power Modern Machine Vision Systems

Neural networks form the backbone of today’s machine vision systems, allowing machines to analyze visual data with impressive precision. These systems excel in tasks like identifying objects, classifying images, and recognizing patterns. For instance:

- Convolutional neural networks (CNNs) achieve accuracy rates above 99% on benchmarks like MNIST.

- CNNs outperform older computer vision methods by 5-15% in image recognition accuracy.

Deep neural networks also shine in specialized applications. In materials science, for example, they classify metal surfaces such as stainless steel and magnesium alloys, and their performance is evaluated with metrics like precision and recall. With such advancements, neural network-based machine vision systems now deliver strong reliability across industries.

Key Takeaways

- Neural networks help machines understand pictures with great accuracy. This is changing fields like healthcare and manufacturing.

- Convolutional neural networks (CNNs) are very good at recognizing images. They can be over 99% accurate and are better than older methods.

- Machine vision systems use cameras, sensors, and AI to do tasks automatically. These tasks include finding defects quickly and reliably.

- Good data is important for teaching neural networks. Using different types of data helps machines learn better and make smarter choices.

- New ideas like event-based imaging and Vision Language Models are improving machine vision. These tools make data processing faster and smarter.

Neural Networks and Their Role in AI

What Are Neural Networks?

Neural networks are systems inspired by the human brain. They consist of layers of interconnected nodes, or "neurons," that process data. Each neuron receives input, performs calculations, and passes the output to the next layer. This structure allows neural networks to learn patterns and make predictions based on data. For example, in image recognition, a neural network can identify objects by analyzing pixel patterns.
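The neuron-and-layer description can be made concrete with a minimal sketch in plain Python (no ML library assumed). The weights, biases, and the 4-pixel input are made-up values for illustration, and the sigmoid is just one common choice of activation function.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    """A fully connected layer: every neuron receives every input."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical 4-pixel input (brightness scaled to 0..1).
pixels = [0.0, 1.0, 1.0, 0.0]

# Two hidden neurons feed one output neuron; all weights are made up.
hidden = layer(pixels, [[0.5, -0.2, 0.3, 0.1], [-0.4, 0.6, 0.6, -0.4]], [0.0, -0.1])
output = layer(hidden, [[1.2, -0.7]], [0.05])
print(hidden, output)
```

Training would adjust the weights and biases so the output matches labeled examples; the forward pass itself stays exactly this shape.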

Optimization plays a key role in their performance. Techniques like hyperparameter tuning and adjustments to the model architecture can, in some cases, lift a neural network from average performance to near-perfect accuracy. One case study showed how systematic optimization reduced errors and achieved exceptional results, illustrating how adaptable neural networks are.
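Hyperparameter tuning can be as simple as a grid search: train once per combination of settings and keep the combination with the lowest error on held-out validation data. The sketch below assumes a toy linear model trained by gradient descent as a stand-in for a neural network; the data and grid values are hypothetical.

```python
import itertools

# Hypothetical toy data generated from y = 2x + 1, split into training and validation sets.
train = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
val = [(x, 2 * x + 1) for x in [0.25, 1.25, 1.75]]

def fit(lr, epochs):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(train)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(params, data):
    """Mean squared error of a fitted (w, b) on a dataset."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Grid search: train once per combination, keep the lowest validation error.
grid = {"lr": [0.001, 0.01, 0.1], "epochs": [10, 100, 500]}
results = {
    (lr, epochs): mse(fit(lr, epochs), val)
    for lr, epochs in itertools.product(grid["lr"], grid["epochs"])
}
best = min(results, key=results.get)
print("best (lr, epochs):", best, "validation MSE:", results[best])
```

Scoring on validation data rather than training data is the key design choice: it guards against picking settings that merely memorize the training set.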

How Neural Networks Mimic Human Intelligence

Neural networks replicate aspects of human intelligence by processing information hierarchically. They start with simple features and build up to complex concepts. For instance, convolutional neural networks (CNNs) use a process called convolution to extract local features from images. Lower layers detect edges and textures, while higher layers combine these into shapes and objects.
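A convolution slides a small filter across the image and computes a weighted sum at every position. The sketch below assumes a tiny grayscale image and a hand-written Sobel-style vertical-edge kernel; in a CNN the kernel values are learned rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1).

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped), which is what CNN layers call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the window currently under the kernel.
            out[i][j] = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return out

# A 5x5 image: dark on the left, bright on the right, so one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Sobel-style kernel that responds to left-to-right brightness increases.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # [4, 4, 0] on every row
```

The response is strong where the 3x3 window straddles the dark-to-bright boundary and zero over the uniform bright region, which is the kind of low-level edge feature early CNN layers pick up.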

Comparative studies highlight their effectiveness in mimicking human-like tasks. Algorithms like CMU’s Ultron and TinyFaces excel in face detection and recognition, outperforming others like YOLO and PittPatt. These systems even recognize faces at challenging angles, showcasing their ability to handle nuanced visual data.

Importance of Neural Networks in Visual Data Processing

Neural networks are essential for processing visual data. They enable machines to classify images, detect objects, and recognize faces with high accuracy. CNNs, in particular, are foundational for these tasks. Their hierarchical learning approach ensures reliable results across applications.

Research suggests that feedback connections in neural networks enhance object recognition, much as they do in the human visual system. Recurrent models, which incorporate these connections, can improve performance on cognitive tasks like pattern recognition and decision-making.

| Feature | Description |
|---|---|
| Convolution Operation | Extracts local features by sliding filters across input images. |
| Hierarchical Learning | Builds complex concepts from simple features in layered structures. |
| Applications | Powers image classification, object detection, and facial recognition. |

Neural networks continue to redefine how machines interpret visual data, bridging the gap between artificial and human intelligence.

Machine Vision Systems and Their Components

What Is a Machine Vision System?

A machine vision system is a technology that enables machines to interpret visual data for automated decision-making. It uses cameras, sensors, and software to capture and analyze images or videos. You encounter these systems in industries like manufacturing, healthcare, and retail, where they perform tasks such as quality control, defect detection, and inventory management.

Unlike traditional methods, a machine vision system processes data in real time, producing faster and more accurate results. For example, in manufacturing, it can inspect thousands of products per minute and identify defects precisely. This capability makes it indispensable for modern automation.

Machine Vision vs. Computer Vision

You might wonder how a machine vision system differs from computer vision. While both deal with visual data, their applications vary. Computer vision focuses on enabling machines to "see" and understand images, often for research or consumer-facing technologies like facial recognition.

A machine vision system, on the other hand, is tailored for industrial applications. It integrates hardware components like cameras and lighting with software algorithms to perform specific tasks. For instance, it can check for missing parts on an assembly line or measure dimensions with micrometer accuracy.

Tip: Think of computer vision as the brain and machine vision as the entire body, including the eyes, hands, and tools needed to act on visual information.

Key Components of Machine Vision Technology

A machine vision system relies on several key components to function effectively. These include cameras, sensors, lighting, and software powered by artificial intelligence. Each plays a crucial role in capturing, processing, and analyzing visual data.

The AI software layer, in turn, enables the following capabilities:

| Capability | Description |
|---|---|
| AI in Quality Control | Enhances inspection accuracy, efficiency, and scalability in manufacturing processes. |
| AI-Powered Defect Inspection | Uses machine learning algorithms for fast, precise, and consistent defect detection. |
| Defect Classification | Classifies defects accurately, allowing specific issues to be sorted and addressed efficiently. |
| Presence/Absence Checking | Identifies specific features or missing components, crucial for compliance on assembly lines. |
| Integration with Robotics | Combines AI with robotics for advanced inspection of intricate items using high-resolution cameras and sensors. |
| Fusion with Augmented Reality | Overlays data onto physical objects, improving decision-making and safety. |
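Presence/absence checking is the easiest of these capabilities to illustrate. The sketch below is deliberately rule-based rather than AI-based: it assumes parts appear bright against a dark background and uses a fixed intensity threshold, with hypothetical region names and coordinates. An AI-powered inspector would typically replace the threshold test with a trained classifier applied to each region.

```python
def mean_intensity(image, region):
    """Average pixel value inside a rectangle given as (top, left, height, width)."""
    top, left, height, width = region
    values = [image[r][c]
              for r in range(top, top + height)
              for c in range(left, left + width)]
    return sum(values) / len(values)

def check_presence(image, regions, threshold=0.5):
    """Map each named region to True (part present) or False (part missing)."""
    return {name: mean_intensity(image, region) >= threshold
            for name, region in regions.items()}

# Hypothetical 4x6 grayscale image: a bright part sits at the first location only.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
regions = {"screw_a": (1, 1, 2, 2), "screw_b": (1, 3, 2, 2)}
print(check_presence(image, regions))  # {'screw_a': True, 'screw_b': False}
```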

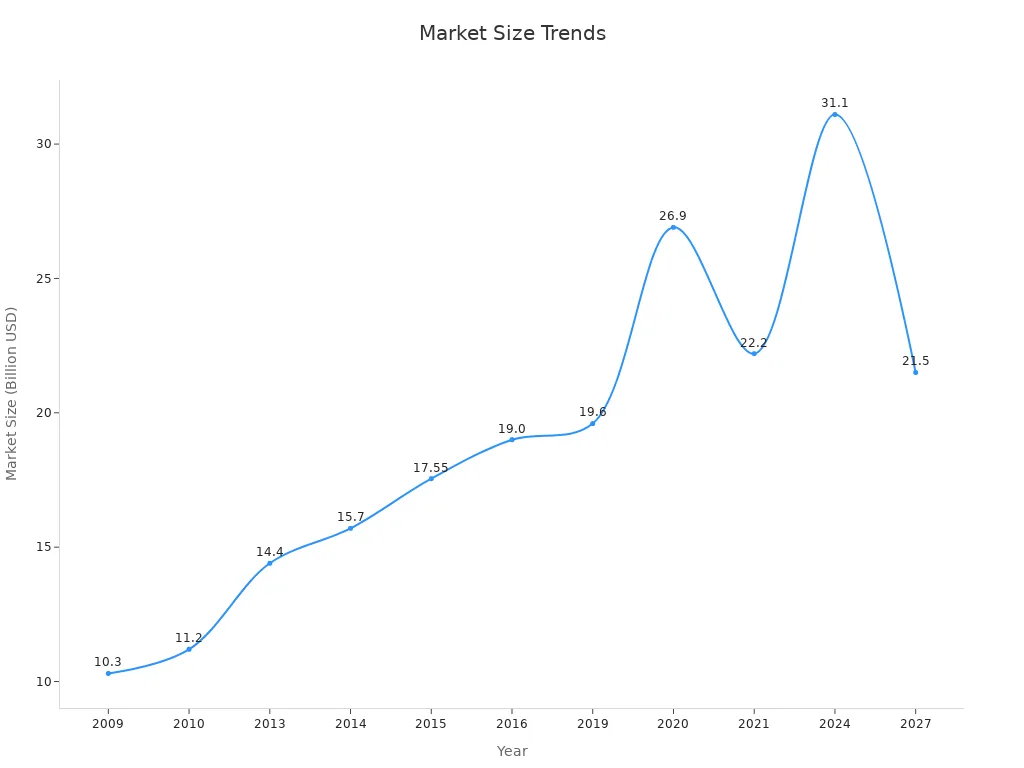

The market for machine vision systems has grown significantly over the years. Below is a chart showing its market size trends:

This growth reflects the increasing demand for automation and precision across industries. As you explore machine vision systems, you'll see how their components work together to deliver reliable and efficient solutions.

Neural Networks in Machine Vision Systems

How Neural Networks Enable Image Analysis

Neural networks have transformed how machines analyze images. By processing visual data hierarchically, they extract meaningful patterns and features from raw inputs. You can think of this as teaching a machine to "see" and understand the world around it. For example, a neural network can identify objects in an image by analyzing pixel arrangements and detecting edges, shapes, and textures.

Deep neural networks excel at handling large datasets, even when input quality varies. Learning-based solutions can partly compensate for lower-quality inputs, but studies show that poor-quality data still limits performance. To counter this, neural network-based machine vision systems rely on diverse training datasets to improve accuracy in tasks like cosmetic flaw detection.
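One common way to diversify a training set is data augmentation: generating perturbed copies of existing images. This is a swapped-in technique rather than one the paragraph names. The sketch assumes grayscale images stored as nested lists with values in [0, 1]; the flip probability, brightness range, and noise level are arbitrary illustrative choices.

```python
import random

def hflip(img):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]

def clip(p):
    """Keep a pixel value inside the valid [0, 1] range."""
    return min(1.0, max(0.0, p))

def adjust_brightness(img, delta):
    """Shift every pixel by the same amount."""
    return [[clip(p + delta) for p in row] for row in img]

def add_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise to every pixel."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]

def augment(img, rng):
    """Return one randomly perturbed copy of img; the original is left unchanged."""
    out = hflip(img) if rng.random() < 0.5 else img
    out = adjust_brightness(out, rng.uniform(-0.2, 0.2))
    return add_noise(out, sigma=0.05, rng=rng)

rng = random.Random(0)  # fixed seed so the variants are reproducible
sample = [[0.1, 0.5, 0.9], [0.2, 0.6, 1.0]]
variants = [augment(sample, rng) for _ in range(4)]
```

Horizontal flips are only safe when a defect's orientation does not change its class; for direction-sensitive flaws, that transform should be dropped.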

Note: Hepta Airborne's drone technology demonstrates the efficiency of neural networks in image analysis. It inspects 250 km of power lines in just 5 minutes, saving 3,000,000 euros annually compared to manual inspections. The system identifies 400% more unique defects, showcasing the enhanced accuracy of neural networks.

The Role of CNNs in Machine Vision

Convolutional neural networks (CNNs) play a pivotal role in machine vision systems. These specialized neural networks are designed to process visual data efficiently, making them ideal for tasks like object detection and object tracking. CNNs use convolution operations to extract local features from images, such as edges and textures, and then combine these features to recognize objects and patterns.

Performance metrics highlight the effectiveness of CNNs in machine vision tasks:

| Metric | Description |
|---|---|
| Accuracy | Measures the proportion of correctly predicted instances out of the total instances in the test set. |
| Precision | Indicates the model's ability to avoid false positives by calculating the ratio of true positives to total predicted positives. |
| Recall | Highlights the model's ability to capture all relevant positives by calculating the ratio of true positives to total actual positives. |
| F1-Score | Provides a balanced measure by combining precision and recall into a single metric. |
| Confusion Matrix | Offers a detailed breakdown of model performance, showing counts of true positives, true negatives, false positives, and false negatives. |
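All of the metrics in the table can be computed from the four confusion-matrix counts. The sketch below assumes a binary defect-inspection task (1 = defective, 0 = good) with hypothetical labels and predictions.

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    c = Counter()
    for t, p in zip(y_true, y_pred):
        if p == positive:
            c["tp" if t == positive else "fp"] += 1
        else:
            c["fn" if t == positive else "tn"] += 1
    return c

def metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 derived from the confusion matrix."""
    c = confusion_counts(y_true, y_pred, positive)
    tp, fp, fn, tn = c["tp"], c["fp"], c["fn"], c["tn"]
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical inspection results: 1 = defective, 0 = good.
y_true = [1, 1, 1, 0, 0, 0, 0, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1, 0, 0]
print(metrics(y_true, y_pred))
# tp=3, fp=1, fn=1, tn=5 -> accuracy 0.8, precision 0.75, recall 0.75, F1 0.75
```

In a defect-inspection setting, recall is often the metric to watch, since a missed defect (false negative) usually costs more than a false alarm.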

These metrics show why CNNs are foundational to neural network-based machine vision systems: CNNs consistently score highly on them, and high accuracy and precision translate into reliable performance across industries.

Feature Extraction and Decision-Making

Feature extraction is a critical step in machine vision systems. Neural networks analyze images to identify key features, such as shapes, textures, and colors, which are essential for decision-making. You can think of this process as teaching a machine to focus on the most important details in an image.

Traditional techniques like Histogram of Oriented Gradients (HOG) and Local Binary Pattern (LBP) have been widely used for feature extraction. Studies show that HOG outperforms LBP in classifying small metal objects. Combining both techniques yields even better results, proving that hybrid approaches can enhance accuracy.
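Both descriptors are simple enough to sketch directly. The code below assumes grayscale images as nested lists, uses a basic 8-neighbor LBP, and reduces HOG to a single magnitude-weighted orientation histogram (full HOG adds a grid of cells and block normalization). "Combining" the two is assumed here to mean concatenating their normalized histograms into one feature vector for a downstream classifier; the studies mentioned may have combined them differently.

```python
import math

# Neighbor offsets for 8-neighbor LBP, clockwise from the top-left corner.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_histogram(img):
    """Histogram (256 bins) of basic 8-neighbor Local Binary Pattern codes."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            code = 0
            for bit, (dr, dc) in enumerate(OFFSETS):
                # Each neighbor at least as bright as the center sets one bit.
                if img[r + dr][c + dc] >= img[r][c]:
                    code |= 1 << bit
            hist[code] += 1
    return hist

def orientation_histogram(img, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees).

    This is HOG's core idea for a single cell; full HOG also tiles the image
    into cells and normalizes over overlapping blocks.
    """
    hist = [0.0] * bins
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            gx = img[r][c + 1] - img[r][c - 1]  # central differences
            gy = img[r + 1][c] - img[r - 1][c]
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // (180.0 / bins)) % bins] += math.hypot(gx, gy)
    return hist

def normalize(v):
    """Scale a histogram so its entries sum to 1 (unchanged if it is all zeros)."""
    total = sum(v)
    return [x / total for x in v] if total else list(v)

def hybrid_features(img):
    """Concatenate normalized HOG-style and LBP histograms into one feature vector."""
    return normalize(orientation_histogram(img)) + normalize(lbp_histogram(img))

# Hypothetical 4x4 patch containing a vertical dark-to-bright edge.
patch = [[0, 0, 9, 9] for _ in range(4)]
features = hybrid_features(patch)
print(len(features))  # 9 orientation bins + 256 LBP bins = 265
```

HOG summarizes edge directions while LBP summarizes local texture, so the concatenated vector gives a classifier two complementary views of the same patch.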

Deep learning methods, particularly convolutional neural networks, have further revolutionized feature extraction. By learning features directly from data, CNNs largely remove the need for manual feature engineering. This allows neural network-based machine vision systems to adapt to complex tasks, such as identifying defects in manufacturing or analyzing medical images for diagnostics.

Tip: Successful machine vision systems rely on quality data. Training neural networks on diverse datasets ensures robust feature extraction and accurate decision-making.

Applications of Neural Networks in Machine Vision Technology

Manufacturing: Automation and Quality Control

Neural networks have revolutionized manufacturing by enhancing automation and quality control processes. You can now rely on machine vision technology to inspect products with precision and speed. Traditional, largely manual monitoring often struggled with accuracy, reaching only about 80% because of human error and fatigue. Neural networks reduce these limitations by automating defect detection and classification.

Deep learning models, such as convolutional neural networks (CNNs), analyze images of products to identify flaws that might go unnoticed by the human eye. For example, they can detect scratches, dents, or misalignments on assembly lines. This ensures consistent quality and reduces waste.

Did you know? The evolution from Statistical Process Control (SPC) to Learning Quality Control (LQC) has made it possible to detect complex, non-linear patterns in manufacturing data. This shift has transformed previously unsolvable engineering challenges into manageable tasks.
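For contrast, classical SPC typically flags a measurement only when it crosses fixed control limits on a single variable, which is why it struggles with non-linear patterns. The sketch assumes Shewhart-style limits of mean ± 3 standard deviations taken from a stable baseline run; the part diameters are hypothetical, and real control charts often estimate sigma from subgroup or moving ranges instead.

```python
import statistics

def control_limits(baseline, k=3.0):
    """Shewhart-style limits: mean +/- k sample standard deviations of in-control data."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    return mu - k * sigma, mu + k * sigma

def out_of_control(measurements, limits):
    """Indices of measurements falling outside the control limits."""
    lower, upper = limits
    return [i for i, x in enumerate(measurements) if not lower <= x <= upper]

# Hypothetical part diameters (mm) from a stable production run.
baseline = [10.01, 9.99, 10.00, 10.02, 9.98, 10.00, 10.01, 9.99]
limits = control_limits(baseline)          # roughly (9.961, 10.039)
new_parts = [10.00, 10.03, 9.90, 10.01, 10.12]
print(out_of_control(new_parts, limits))   # [2, 4]
```

Each variable gets its own fixed band, so a fault that only shows up as an unusual combination of several in-range measurements passes unnoticed; learned models can capture such interactions.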

Here are some key insights into how neural networks improve manufacturing processes:

- Deep learning applications replace traditional visual inspection systems, solving problems once considered intractable.

- Neural networks detect defects with higher accuracy, ensuring consistent product quality.

- Integration with robotics enables advanced inspection of intricate items using high-resolution cameras and sensors.

By adopting machine vision technology, manufacturers can achieve unparalleled efficiency and reliability in their operations.

Healthcare: Diagnostics and Imaging

In healthcare, neural networks play a critical role in diagnostics and medical imaging. Machine vision technology enables you to analyze medical images with remarkable accuracy, assisting doctors in identifying diseases early. Neural networks excel at detecting patterns in complex datasets, making them invaluable for tasks like tumor detection, organ segmentation, and disease classification.

For instance, CNNs can analyze X-rays, MRIs, and CT scans to identify abnormalities. They highlight areas of concern, allowing doctors to focus on critical cases. This can reduce diagnostic errors and improve patient outcomes.

Tip: Neural networks mimic the brain's ability to process visual information hierarchically. This makes them particularly effective in analyzing medical images, where subtle patterns can indicate serious conditions.

The use of neural networks in healthcare extends beyond imaging. They also assist in drug discovery, predicting how new compounds interact with the human body. By leveraging machine vision technology, you can enhance diagnostic accuracy and streamline medical research.

Retail: Customer Insights and Inventory Management

Retailers use neural networks to gain valuable customer insights and optimize inventory management. Machine vision technology helps you analyze customer behavior, predict demand, and improve the shopping experience. For example, AI-powered systems can track store traffic, monitor shelf stock, and even estimate customer emotions through facial expression analysis.

Starbucks employs an AI demand model to predict store-level traffic. By analyzing factors like weather, events, and historical trends, the system adjusts staffing and stock levels during peak times. Similarly, Target uses AI-driven sales forecasting to optimize inventory. These systems ensure that popular items remain in stock while reducing waste from overstocked products.

Here are some ways neural networks enhance retail operations:

- Store Traffic Prediction: AI models analyze external factors to predict customer flow, helping you manage resources effectively.

- Demand Forecasting: Neural networks optimize inventory by analyzing buying habits and local trends.

- Customer Behavior Analysis: Machine vision technology tracks shopper movements and preferences, providing insights for personalized marketing.

By integrating neural networks into retail operations, you can improve efficiency, reduce costs, and deliver a better customer experience.

Autonomous Vehicles: Navigation and Safety

Autonomous vehicles rely heavily on neural networks to navigate roads and ensure passenger safety. These systems process vast amounts of visual data in real time, enabling vehicles to make split-second decisions. By mimicking human perception, neural networks allow self-driving cars to "see" and interpret their surroundings.

How Neural Networks Enable Navigation

Neural networks play a critical role in helping autonomous vehicles navigate complex environments. They analyze data from cameras, LiDAR, radar, and ultrasonic sensors to identify objects, road signs, and lane markings. This information helps the vehicle determine its position and plan its route.

For example, convolutional neural networks (CNNs) detect pedestrians, cyclists, and other vehicles. Recurrent neural networks (RNNs) predict the movement of these objects, ensuring smooth and safe navigation. Here's how these technologies work together:

- Object Detection: Identifies obstacles and traffic elements like stop signs or traffic lights.

- Path Planning: Calculates the safest and most efficient route to the destination.

- Collision Avoidance: Predicts potential hazards and adjusts the vehicle's speed or direction.
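The prediction and collision-avoidance steps can be sketched with a simple constant-velocity model standing in for the learned (for example, RNN-based) motion predictor. Everything here is a hypothetical illustration: the coordinate convention (x metres ahead, y metres to the left, velocities relative to the ego vehicle), the size of the danger zone, and the braking threshold.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Track:
    """A tracked object relative to the ego vehicle (hypothetical convention).

    x: metres ahead, y: metres to the left, vx/vy: relative velocity in m/s.
    """
    x: float
    y: float
    vx: float
    vy: float

def predict(track: Track, t: float) -> tuple:
    """Constant-velocity guess of the object's position after t seconds."""
    return track.x + track.vx * t, track.y + track.vy * t

def time_to_collision(track: Track, zone_length: float = 2.0, half_width: float = 1.5,
                      horizon: float = 5.0, dt: float = 0.1) -> Optional[float]:
    """First time (s) the object is predicted inside the zone just ahead of the bumper."""
    for k in range(int(round(horizon / dt)) + 1):
        t = k * dt
        x, y = predict(track, t)
        if 0.0 <= x <= zone_length and abs(y) <= half_width:
            return t
    return None

def action(ttc: Optional[float], brake_below: float = 2.5) -> str:
    """Brake if a predicted conflict is closer than the threshold, else keep going."""
    return "brake" if ttc is not None and ttc < brake_below else "continue"

# A pedestrian 20 m ahead and 4 m to the left, crossing toward the lane
# while the car closes in at 10 m/s.
pedestrian = Track(x=20.0, y=4.0, vx=-10.0, vy=-2.0)
ttc = time_to_collision(pedestrian)
print(ttc, action(ttc))
```

A learned predictor would replace `predict` with something that can anticipate turns or stops, but the downstream logic (find the earliest predicted conflict, then act if it is too close) keeps the same structure.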

Tip: Neural networks can improve over time when they are retrained on new data. This means autonomous vehicles can become smarter and safer as more driving data is collected.

Enhancing Safety with Neural Networks

Safety is the top priority for autonomous vehicles, and neural networks are at the heart of this mission. These systems monitor the environment continuously, helping the vehicle react appropriately to unexpected situations. For instance, if a child runs into the street, the neural network detects the event within a fraction of a second and the vehicle applies the brakes.

Here are some key safety features powered by neural networks:

- Emergency Braking: Detects imminent collisions and stops the vehicle automatically.

- Blind Spot Monitoring: Identifies objects in areas the driver cannot see.

- Driver Monitoring: Ensures the driver remains attentive in semi-autonomous modes.

- Traffic Sign Recognition: Reads and interprets road signs to follow traffic rules.

Did you know? Tesla's Autopilot system uses neural networks to process over 70,000 video frames per second, ensuring real-time decision-making.

Challenges in Autonomous Vehicle Systems

While neural networks have revolutionized autonomous driving, challenges remain. Adverse weather conditions, such as heavy rain or snow, can obscure sensors and cameras. Neural networks also require extensive training data to handle rare scenarios like animals crossing the road.

| Challenge | Impact |
|---|---|
| Poor Weather Conditions | Reduces sensor accuracy, making navigation more difficult. |
| Limited Training Data | Hinders the system's ability to handle uncommon or unexpected events. |
| Ethical Decision-Making | Raises questions about how vehicles should prioritize safety in dilemmas. |

To address these challenges, researchers are developing more robust neural network models. These advancements aim to improve the reliability and adaptability of autonomous vehicles.

The Future of Autonomous Driving

Neural networks will continue to shape the future of autonomous vehicles. Innovations like edge computing and 5G connectivity promise faster data processing and real-time updates. These technologies will enhance the performance of neural networks, making self-driving cars safer and more efficient.

As you explore the world of autonomous vehicles, you'll see how neural networks are transforming transportation. From navigation to safety, these systems are paving the way for a future where driving is not only easier but also safer for everyone.

Challenges and Future of Neural Networks in Machine Vision

Implementation Challenges in Machine Vision Systems

Implementing machine vision systems comes with several challenges that you must address to ensure success. These challenges often arise due to the complexity of integrating modern technologies into existing workflows.

- Complexity of Existing Systems: Legacy systems can make it difficult to integrate new machine vision technologies.

- Variability in Products: Diverse product characteristics can lead to inconsistent defect detection.

- Data Management: Machine vision systems generate large volumes of data, requiring efficient processing and storage solutions.

- Cost and ROI Concerns: High initial investments demand clear justification of returns on investment.

- Skill Gaps: A lack of specialized knowledge among workers can hinder implementation and maintenance.

By addressing these issues, you can unlock the full potential of machine vision systems in your operations.

Ethical Concerns in Machine Vision Technology

Machine vision technology raises ethical concerns that you should consider carefully. These concerns often relate to privacy, discrimination, and the misuse of data. The following table highlights some documented cases and studies and their ethical implications:

| Case Study | Ethical Concern | Source |
|---|---|---|
| CV system for person tracking, occupancy, and fall detection | Ethical considerations in development | Coupland et al. (2009) |
| Human remains trafficking | Ethical implications of CV technology | Huffer et al. (2019) |
| CV applications in the global South | Region-specific risks and ethical issues | Dufresne-Camaro et al. (2020) |
| AI cameras and video analytics | Privacy, discrimination, and over-enforcement | Stanley (2019) |

You must ensure that machine vision systems are designed and deployed responsibly to avoid these pitfalls.

Future Trends: Edge Computing and Real-Time Processing

The future of machine vision lies in edge computing and real-time processing. These technologies address the growing demand for low-latency data analysis and enhanced privacy.

- Increasing adoption of IoT devices drives the need for real-time data processing.

- The proliferation of 5G networks enables faster communication between devices.

- A growing emphasis on data privacy and compliance favors processing data locally rather than sending it to the cloud.

The U.S. edge computing market is projected to grow from USD 7.2 billion in 2025 to USD 46.2 billion by 2033, with a CAGR of 23.7%. The manufacturing sector benefits significantly from this trend. Smart factories use edge computing to enable predictive maintenance and reduce latency in automated processes. By embracing these advancements, you can stay ahead in the rapidly evolving field of machine vision.
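The projection can be sanity-checked with the compound-growth formula, CAGR = (end / start)^(1 / years) - 1. Assuming 2025 is the base year and 2033 the end year (8 compounding periods), the quoted endpoints imply roughly 26% a year, while 23.7% over the same 8 years would reach about USD 39.5 billion. The source's rate presumably uses a different base year or forecast window, so the three figures are best read as approximate.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years

years = 2033 - 2025                         # assumes 8 compounding periods
implied = cagr(7.2, 46.2, years)            # about 0.262, i.e. roughly 26% per year
at_quoted = project(7.2, 0.237, years)      # about 39.5 (USD billion)
print(f"implied CAGR: {implied:.1%}, value at 23.7%: {at_quoted:.1f}")
```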

Innovations in Neural Networks for Machine Vision

Neural networks continue to evolve, driving groundbreaking advancements in machine vision. These innovations enhance how machines interpret visual data, making them smarter and more efficient.

One exciting development is the rise of Vision Language Models (VLMs). These models combine computer vision with natural language processing, enabling machines to understand both images and text. For example, a VLM can analyze a photo and generate a detailed description or answer questions about it. This capability improves user interaction and expands the potential applications of machine vision systems.

Another transformative innovation is event-based imaging. This technology mimics how biological systems process visual information. Instead of capturing every frame like traditional cameras, event-based sensors react only to changes in light intensity. This approach offers several advantages:

- Event-based sensors provide high temporal resolution, capturing fast-moving objects with precision.

- They offer high dynamic range, preserving detail even in challenging lighting conditions.

- These sensors are ideal for high-speed environments, such as conveyor belts, where they detect defects in real time.
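An event sensor's behavior can be approximated from two ordinary frames: a pixel emits an event only when its log intensity changes by more than a contrast threshold, tagged with the polarity of the change. This is a frame-based simplification (a real sensor works asynchronously per pixel, comparing against the level at its last event), and the threshold and pixel values below are illustrative assumptions.

```python
import math

def events_between(prev_frame, next_frame, t, threshold=0.2, eps=1e-3):
    """Approximate event-sensor output between two grayscale frames.

    Returns (t, row, col, polarity) tuples for pixels whose log intensity changed
    by at least `threshold`; polarity is +1 for brighter, -1 for darker.
    `eps` avoids log(0) for completely dark pixels.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            delta = math.log(n + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                events.append((t, r, c, 1 if delta > 0 else -1))
    return events

# Hypothetical frames: one pixel brightens, one darkens, the rest are static.
prev = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]
nxt = [[0.5, 0.9, 0.5], [0.5, 0.5, 0.2]]
print(events_between(prev, nxt, t=0.01))  # [(0.01, 0, 1, 1), (0.01, 1, 2, -1)]
```

Working in log intensity is what gives these sensors their dynamic range: the same relative change fires an event whether the pixel is dim or bright. Unchanged pixels produce no data at all, which keeps the output sparse on a mostly static conveyor scene.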

By adopting these technologies, you can achieve faster and more accurate visual data processing. Event-based imaging, for instance, allows you to monitor production lines with unmatched efficiency, reducing waste and improving quality control.

These innovations demonstrate how neural networks are reshaping machine vision. As you explore these advancements, you’ll see how they unlock new possibilities across industries, from manufacturing to healthcare. By staying informed, you can leverage these cutting-edge tools to solve complex challenges and drive progress in your field.

Neural networks have become essential for modern machine vision systems. They allow machines to handle complex visual tasks with remarkable precision. You can see their impact in industries like healthcare, manufacturing, and retail, where they improve efficiency and decision-making.

Did you know? Neural networks mimic the way your brain processes information, enabling machines to "see" and interpret the world.

While challenges like high computational demands and ethical concerns exist, advancements in technology continue to push boundaries. By staying informed, you can harness these innovations to unlock new possibilities and drive progress in your field.

FAQ

What makes neural networks better than traditional algorithms in machine vision?

Neural networks learn directly from data, adapting to complex patterns without manual programming. Traditional algorithms rely on predefined rules, which limits their flexibility. Neural networks handle diverse tasks like object detection and image classification with higher accuracy.

How do convolutional neural networks (CNNs) process images?

CNNs process images by sliding small filters across local regions of the image. These filters detect features like edges, textures, and shapes, which combine in deeper layers to identify objects or patterns in the image.

Can neural networks work with low-quality images?

Often, within limits. Neural networks trained on diverse datasets learn to tolerate variations in lighting, resolution, and noise, which makes them effective in real-world applications where image quality varies. Very poor input data, however, still limits their performance.

Are neural networks in machine vision systems expensive to implement?

The initial cost can be high due to hardware and training requirements. However, the long-term benefits, such as improved efficiency and reduced errors, often outweigh the investment. Cloud-based solutions can also lower costs.

What industries benefit the most from machine vision systems?

Industries like manufacturing, healthcare, retail, and transportation benefit significantly. Machine vision systems improve quality control, diagnostics, inventory management, and autonomous navigation, making processes faster and more reliable.
