What is an ONNX Machine Vision System

An ONNX machine vision system combines the Open Neural Network Exchange (ONNX) model format with machine vision technologies. It lets you build computer vision solutions that work across different frameworks and hardware. Once a model is in ONNX format, you can optimize it for faster inference and reduce its size by up to 50% with techniques like post-training quantization. This improves computing efficiency, especially in embedded applications, where runtimes such as ONNX Runtime provide processor-optimized kernels.

Key Takeaways

- ONNX lets different AI tools work together easily for training and use.

- It speeds up model inference, which suits time-critical tasks like factory robots.

- ONNX works on many systems, like online servers or small gadgets, making it adaptable.

- Quantization can shrink model size by up to half while keeping the model fast and accurate.

- ONNX is useful for tasks like sorting images, finding objects, and tracking health, helping improve machine vision.

Key Features of ONNX in Machine Vision

Interoperability Across Frameworks and Hardware

ONNX stands out as a standard for achieving interoperability across frameworks and hardware. You can use ONNX to bridge the gap between different deep learning frameworks like TensorFlow, PyTorch, and MXNet. This flexibility allows you to train models in one framework and deploy them seamlessly in another.

ONNX also supports a wide range of hardware, including CPUs, GPUs, and specialized AI accelerators. This compatibility ensures that your machine vision applications can run efficiently on diverse devices, from high-performance servers to edge devices. By adopting ONNX, you gain the ability to scale your computer vision solutions across various platforms without being locked into a single ecosystem.

Tip: Leveraging ONNX for AI interoperability can save time and resources during model deployment, especially when working with multiple frameworks.

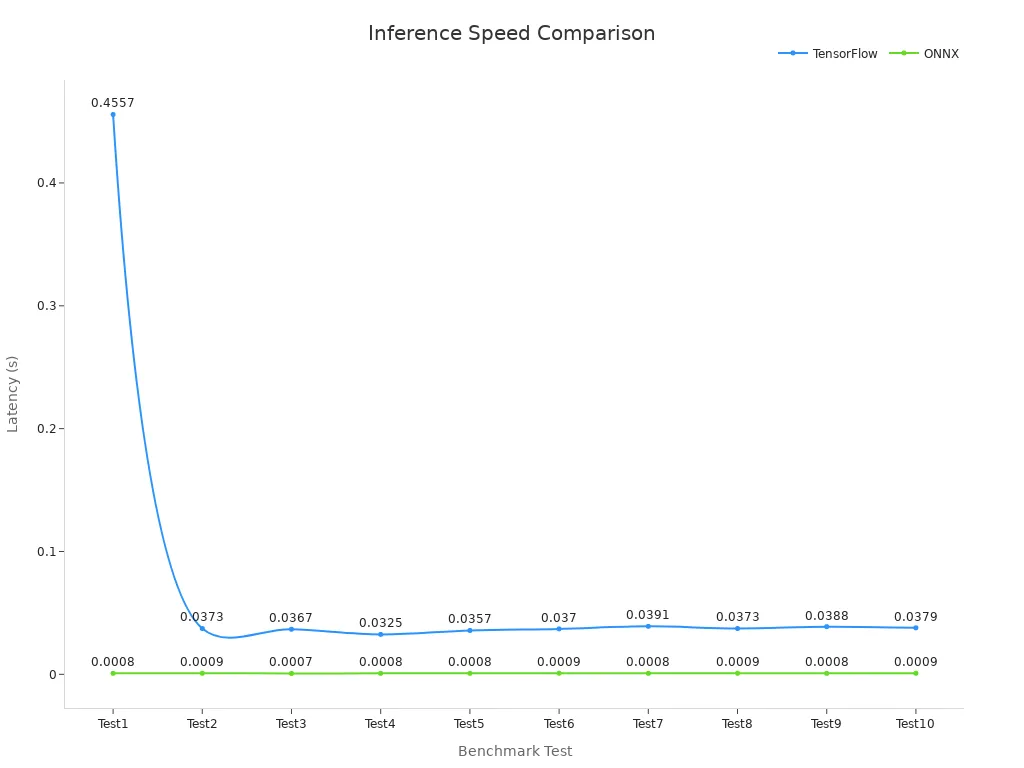

Model Optimization for Faster Inference

Optimizing ONNX models is key to achieving faster inference speeds. ONNX simplifies the process of reducing model size and improving performance through techniques like post-training quantization. These optimizations make your models more suitable for real-time applications, such as industrial automation or quality control.
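As a minimal sketch of post-training quantization, the snippet below assumes you have the onnxruntime package installed and use its quantize_dynamic helper; the article does not name a specific tool, so treat this as one possible route. The function names quantize_model and size_reduction_percent and any file paths you pass in are hypothetical.

```python
import os


def size_reduction_percent(original_bytes: int, quantized_bytes: int) -> float:
    """Return how much smaller the quantized file is, as a percentage."""
    if original_bytes <= 0:
        raise ValueError("original_bytes must be positive")
    return 100.0 * (original_bytes - quantized_bytes) / original_bytes


def quantize_model(fp32_path: str, int8_path: str) -> float:
    """Quantize weights to 8-bit integers and report the size reduction."""
    # Imported lazily so the helper above works without onnxruntime installed.
    from onnxruntime.quantization import QuantType, quantize_dynamic

    quantize_dynamic(fp32_path, int8_path, weight_type=QuantType.QInt8)
    return size_reduction_percent(
        os.path.getsize(fp32_path), os.path.getsize(int8_path)
    )
```

Dynamic quantization is shown because it needs no calibration data. For convolution-heavy vision models, static quantization with a representative calibration set is generally the recommended variant, so measure accuracy on your own data before choosing.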

The benchmarks below compare inference latency for TensorFlow and ONNX across three tests. The improvement column gives the reduction in latency relative to TensorFlow:

| Test | TensorFlow latency (s) | ONNX latency (s) | Improvement (%) |
|---|---|---|---|
| 1 | 0.4557 | 0.0008 | 99.8163 |
| 2 | 0.0373 | 0.0009 | 97.6264 |
| 3 | 0.0367 | 0.0007 | 97.9764 |

These results demonstrate how ONNX excels in optimizing models for faster inference, making it ideal for applications requiring high-speed processing.
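To make the improvement figures easier to check, here is a small sketch that assumes the improvement percentage is the relative latency reduction, (TensorFlow latency - ONNX latency) / TensorFlow latency. The function name latency_improvement is hypothetical. Because the published latencies are rounded to four decimals, the recomputed values differ from the table by a fraction of a percentage point.

```python
def latency_improvement(baseline_s: float, optimized_s: float) -> float:
    """Relative latency reduction, in percent, versus the baseline."""
    if baseline_s <= 0:
        raise ValueError("baseline_s must be positive")
    return 100.0 * (baseline_s - optimized_s) / baseline_s


# (TensorFlow latency, ONNX latency) pairs copied from the benchmark table.
pairs = [(0.4557, 0.0008), (0.0373, 0.0009), (0.0367, 0.0007)]
for tf_s, onnx_s in pairs:
    gain = latency_improvement(tf_s, onnx_s)
    print(f"{tf_s:.4f} s -> {onnx_s:.4f} s: {gain:.2f}% lower latency")
```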

Scalability for Diverse Deployment Environments

ONNX provides scalability for deploying machine vision models in diverse environments. Whether you're working on cloud-based systems, on-premises servers, or edge devices, ONNX ensures your models can adapt to varying requirements.

You can use ONNX to deploy AI models in resource-constrained environments, such as IoT devices, without sacrificing performance. Its processor-optimized kernels enable efficient execution, even on devices with limited computational power. This scalability makes ONNX a valuable tool for expanding the reach of your machine vision applications.

By using ONNX, you can future-proof your AI solutions, ensuring they remain compatible with emerging technologies and deployment scenarios.

How ONNX Machine Vision Systems Work

Understanding the ONNX Model Format

The ONNX model format provides a standardized way to represent machine learning models. It ensures interoperability across deep learning frameworks and hardware platforms. You can think of it as a universal language for AI models, enabling seamless communication between different tools and systems.

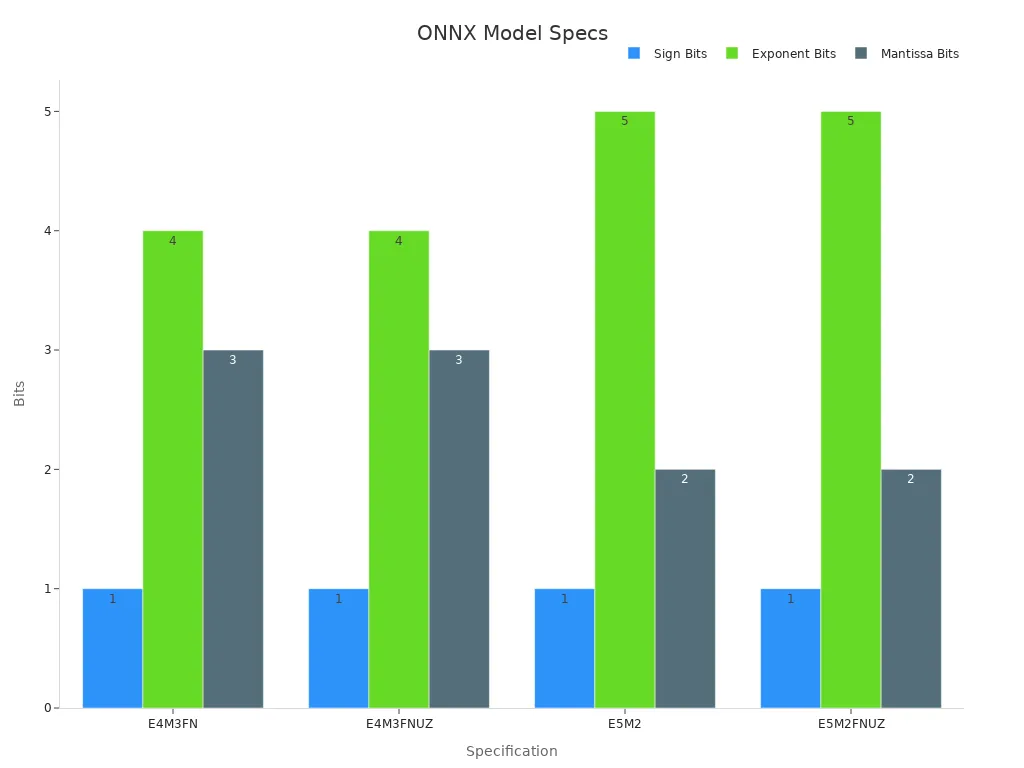

ONNX also defines 8-bit floating-point (float8) data types that trade precision for a smaller footprint. These include formats like E4M3FN and E5M2FNUZ, whose names describe how the 8 bits are split between exponent and mantissa. The table below outlines these technical details:

| Format | Description |
|---|---|
| E4M3FN | 1 bit for the sign, 4 bits for the exponent, 3 bits for the mantissa, only NaN values and no infinite values (FN). |
| E4M3FNUZ | 1 bit for the sign, 4 bits for the exponent, 3 bits for the mantissa, only NaN values and no infinite values (FN), no negative zero (UZ). |
| E5M2 | 1 bit for the sign, 5 bits for the exponent, 2 bits for the mantissa. |
| E5M2FNUZ | 1 bit for the sign, 5 bits for the exponent, 2 bits for the mantissa, only NaN values and no infinite values (FN), no negative zero (UZ). |
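To see how such a bit layout turns into a number, the sketch below decodes a single E4M3FN byte. It assumes an exponent bias of 7 and a single NaN bit pattern per sign (exponent and mantissa bits all set), which is how the ONNX float8 documentation describes this format; neither detail appears in the table above. The function name decode_e4m3fn is hypothetical.

```python
import math


def decode_e4m3fn(byte: int) -> float:
    """Decode one E4M3FN-encoded byte into a Python float."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value in 0..255")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F  # 4 exponent bits
    mantissa = byte & 0x07         # 3 mantissa bits
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan            # the only NaN pattern; there is no infinity
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias.
        return sign * (mantissa / 8.0) * 2.0 ** (1 - 7)
    # Normal: implicit leading 1, exponent offset by the assumed bias of 7.
    return sign * (1.0 + mantissa / 8.0) * 2.0 ** (exponent - 7)
```

Because the top exponent code is not reserved for infinity, the largest finite value uses it too: decode_e4m3fn(0x7E) gives 448.0.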

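During quantization, ONNX maps floating-point values to an 8-bit quantization space using the formula val_fp32 = scale * (val_quantized - zero_point). The scale parameter sets the step size between adjacent quantized values, and zero_point is the quantized value that represents 0.0. Choosing them to fit the range of the original values limits the rounding error, which makes quantized ONNX models suitable for resource-constrained environments like edge devices.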
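The formula can be tried out with a few lines of plain Python. This sketch assumes unsigned 8-bit storage (0 to 255) and derives scale and zero_point from the minimum and maximum of the values to be quantized; that range-based choice and the function names are assumptions for illustration, not something the formula itself prescribes.

```python
def compute_qparams(min_val: float, max_val: float, qmin: int = 0, qmax: int = 255):
    """Derive scale and zero_point so [min_val, max_val] maps onto [qmin, qmax]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    min_val, max_val = min(min_val, 0.0), max(max_val, 0.0)
    scale = (max_val - min_val) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - min_val / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(val_fp32: float, scale: float, zero_point: int,
             qmin: int = 0, qmax: int = 255) -> int:
    """Map a float to the nearest 8-bit level, clamping out-of-range values."""
    q = round(val_fp32 / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(val_quantized: int, scale: float, zero_point: int) -> float:
    """Apply val_fp32 = scale * (val_quantized - zero_point)."""
    return scale * (val_quantized - zero_point)
```

For values inside the calibrated range, a quantize and dequantize round trip is off by at most half a step (scale / 2); values outside the range are clamped to the nearest end.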

Converting Models to ONNX

Converting existing machine learning models to ONNX format is straightforward. You can follow a step-by-step process to transform models from frameworks like PyTorch or TensorFlow into ONNX.

- Load Necessary Libraries: Import essential libraries such as torch for PyTorch functionalities.

- Load the Pre-trained Model: Use a configuration file to load the model you want to convert.

- Prepare Input Data: Create a sample input batch using a DataModule class or similar tools.

- Convert the Model to ONNX: Apply the torch.onnx.export function to perform the conversion.

- Save the ONNX Model: Store the converted model in a specified directory for deployment.
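The steps above can be sketched as a single helper. This is an illustration under assumptions rather than a definitive recipe: the function name export_to_onnx, the tensor names "input" and "output", the dynamic batch axis, and the default opset version 17 are all choices made for this example, and you need PyTorch installed to call it.

```python
def export_to_onnx(model, sample_input, output_path: str, opset_version: int = 17) -> str:
    """Export a PyTorch model to ONNX by tracing it with a sample input."""
    # Step 1: load libraries (imported here so PyTorch is only needed at call time).
    import torch

    # Step 2: the pre-trained model, switched to inference mode.
    model.eval()
    with torch.no_grad():
        torch.onnx.export(           # Step 4: convert the model.
            model,
            sample_input,            # Step 3: the sample input batch.
            output_path,             # Step 5: where the .onnx file is saved.
            input_names=["input"],   # assumed name, reused at inference time
            output_names=["output"],
            dynamic_axes={"input": {0: "batch"}, "output": {0: "batch"}},
            opset_version=opset_version,
        )
    return output_path
```

A call might look like export_to_onnx(model, torch.randn(1, 3, 224, 224), "model.onnx"), where the input shape is a placeholder for whatever your model expects.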

For TensorFlow models, tools like tf2onnx simplify the conversion process. This tool supports both command-line interfaces and Python APIs, making it accessible for developers.

Tip: When converting models, ensure the input data matches the expected format to avoid errors during deployment.

Tools Supporting ONNX in Machine Vision

Several tools support ONNX, enabling you to build and deploy machine vision systems efficiently. These tools cater to diverse needs, from high-performance inference to edge computing.

| Tool | ONNX Support |
|---|---|
| PyTorch | Integrated support for ONNX |
| TensorFlow | Supports ONNX models in various applications |
| Microsoft Cognitive Toolkit | Utilized in Azure Machine Learning |
| Apache MXNet | Compatible with ONNX |
| Scikit-Learn | Supports ONNX models |
| Keras | Integrated with ONNX |
| Apple Core ML | Supports ONNX models |
| ONNX Runtime | Optimized for ONNX model execution |
| NVIDIA TensorRT | Supports ONNX for high-performance inference |
| ONNX.js | Enables ONNX model usage in web applications |

Among these tools, ONNX Runtime stands out for its advanced optimization features like graph optimization and operator fusion. If you're working on mobile applications, PyTorch Mobile offers edge inference capabilities and supports quantization for faster processing. For NVIDIA GPUs, TensorRT delivers system-specific optimizations, achieving up to 3.4x speed improvements for certain models.

Note: Selecting the right tool depends on your deployment environment and hardware requirements.
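As a rough sketch of how tool selection can look in code, the snippet below opens a model with ONNX Runtime, turns on its full set of graph optimizations, and picks the first available execution provider from a preference list. The helper names pick_providers and create_session and the preference order are assumptions for this example; it requires the onnxruntime package when a session is actually created.

```python
def pick_providers(available, preferred=(
        "TensorrtExecutionProvider",
        "CUDAExecutionProvider",
        "CPUExecutionProvider")):
    """Return the preferred execution providers that are available, in order."""
    chosen = [p for p in preferred if p in available]
    return chosen or ["CPUExecutionProvider"]


def create_session(model_path: str):
    """Open an ONNX model with all graph optimizations enabled."""
    import onnxruntime as ort  # imported lazily; only needed to create a session

    options = ort.SessionOptions()
    options.graph_optimization_level = ort.GraphOptimizationLevel.ORT_ENABLE_ALL
    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, sess_options=options, providers=providers)
```

Listing the CPU provider last gives you a fallback, so the same code can run on a GPU server and on a device without an accelerator.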

Applications of ONNX Models in Computer Vision

ONNX models have revolutionized computer vision by enabling faster, more efficient, and scalable solutions. These models are widely used in various real-world applications, including image classification, object detection, and industrial automation. Let’s explore how ONNX enhances these areas.

Image Classification

Image classification is one of the most common applications of ONNX models. With ONNX, you can deploy classification models across different platforms, ensuring high accuracy and performance. These models analyze images and assign them to predefined categories, making them essential for tasks like facial recognition, medical imaging, and product categorization.

For example, ONNX models streamline facial recognition by supporting lightweight architectures optimized for edge devices. This allows you to implement facial recognition algorithms in security systems or mobile applications without compromising speed or accuracy. The ability to convert models from frameworks like PyTorch or TensorFlow into ONNX ensures compatibility with various hardware, from CPUs to GPUs.

Tip: Use ONNX Runtime to optimize classification models for real-time applications, such as detecting defects in manufacturing or identifying objects in autonomous vehicles.
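A classification model typically returns one raw score (logit) per category, and turning those scores into ranked labels is a small post-processing step. The sketch below shows that step in plain Python; the function names and the example labels are hypothetical, and your model may already apply softmax internally.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical defect-inspection categories and model scores.
print(top_k([2.0, 0.5, 0.1], ["ok", "scratch", "dent"], k=2))
```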

Object Detection

Object detection involves identifying and locating objects within an image or video. ONNX models excel in this domain by offering high detection accuracy and fast inference speeds. These capabilities make them ideal for applications like surveillance, traffic monitoring, and augmented reality.

The table below highlights the performance of ONNX-based object detection models across different hardware platforms:

| Model Version | Hardware Platform | Detection Accuracy | Inference Speed | Object Size Sensitivity |
|---|---|---|---|---|
| YOLO v5 | Intel CPU | High | Fast | 1%, 2.5%, 5% |
| YOLO v8 | AMD CPU | Moderate | Moderate | 1%, 2.5%, 5% |
| YOLO v9 | GPU (TensorRT) | Very High | Very Fast | 1%, 2.5%, 5% |
| YOLO v10 | GPU (OpenVINO) | High | Fast | 1%, 2.5%, 5% |
| YOLO v11 | Various | Variable | Variable | 1%, 2.5%, 5% |

These results demonstrate how ONNX models adapt to different hardware, ensuring optimal performance for object detection tasks. Whether you’re working on a cloud-based system or an edge device, ONNX provides the flexibility to meet your requirements.
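Many exported detectors return overlapping candidate boxes, so a common post-processing step is non-maximum suppression (NMS), which keeps the highest-scoring box and drops others that overlap it too much. The sketch below assumes boxes in (x1, y1, x2, y2) format and an overlap threshold of 0.5; whether your exported model needs this step depends on how it was exported, since some graphs already include it.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

This version compares every candidate against every kept box, which is fine for a few hundred detections per frame; for larger counts a vectorized implementation is usually preferable.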

Industrial Automation and Quality Control

In industrial automation, ONNX models play a crucial role in streamlining production processes and ensuring quality control. These models analyze images from production lines to detect defects, measure dimensions, or verify product assembly. By integrating ONNX, you can achieve real-time performance and high accuracy, even in resource-constrained environments.

Key metrics validate the effectiveness of ONNX in industrial automation:

- Efficiency: ONNX models use a multi-stage approach to enhance image processing speed, which is critical for real-time applications.

- Accuracy: Patch-based training improves the precision of defect detection, ensuring consistent quality in production.

- Adaptability: The flexible pipeline allows you to adjust the system for specific production needs, making ONNX suitable for diverse industries.

For instance, ONNX models can identify minute defects in electronic components or ensure uniformity in food packaging. These capabilities reduce waste and improve overall efficiency, making ONNX a valuable asset in industrial settings.

Note: When deploying ONNX models in quality control, consider using tools like ONNX Runtime or NVIDIA TensorRT for optimized performance on specialized hardware.

Emerging Use Cases in Edge Computing

Edge computing is transforming how you deploy machine learning models by bringing computation closer to the data source. ONNX plays a pivotal role in this shift, enabling efficient and scalable applications in resource-constrained environments. Let’s explore some of the emerging use cases where ONNX models are making a significant impact.

Healthcare Monitoring in Remote Environments

ONNX models are revolutionizing healthcare by enabling advanced monitoring systems in remote or challenging environments. For example, wearable devices equipped with ONNX-optimized models can preprocess and denoise ECG data in real time. These models extract critical morphological and temporal features from ECG signals, ensuring accurate health monitoring even in deep-space missions or rural areas.

The table below highlights key contributions of ONNX in healthcare and edge computing:

| Contribution | Description |
|---|---|
| 1 | Advanced denoising and preprocessing techniques for ECG data in wearables. |
| 2 | Methods for extracting morphological and temporal features from ECG signals. |
| 3 | Modular machine learning pipeline with sophisticated hyperparameter tuning. |
| 4 | First use of ONNX in healthcare and deep-space edge computing. |
| 5 | Optimized inference performance on Android devices in deep-space scenarios. |
| 6 | Validation of ONNX models against expert cardiologist assessments. |

These advancements demonstrate how ONNX models enhance healthcare applications by delivering reliable performance in environments with limited computational resources.

Smart Cities and IoT Devices

In smart cities, ONNX models power IoT devices to process data locally, reducing latency and bandwidth usage. For instance, traffic cameras equipped with ONNX models can detect and classify vehicles in real time, improving traffic flow and safety. Similarly, smart sensors in buildings can monitor energy usage and optimize consumption patterns without relying on cloud-based systems.

ONNX’s ability to optimize memory allocations and fuse model operators ensures these devices operate efficiently. This makes ONNX an ideal choice for IoT applications where speed and resource efficiency are critical.

Space Exploration and Deep-Space Missions

Space exploration presents unique challenges for machine learning applications, including limited connectivity and extreme environments. ONNX models address these challenges by enabling high-performance inference on edge devices like Android tablets used in deep-space missions. These models minimize tensor transfers and apply tuned matrix math algorithms, ensuring reliable operation in harsh conditions.

Performance benchmarks highlight the adaptability of ONNX models in edge computing:

| Metric | Performance Gain |
|---|---|
| Inference Speed on CPU | 2–3x faster |
| Memory Usage | 60% reduction |
| Model Loading Time | 40% improvement |

These improvements make ONNX a valuable tool for deploying AI in space exploration, where every resource counts.
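Gains like these depend on the model and the device, so it is worth measuring them on your own hardware. Below is a minimal timing harness in plain Python; the function name measure_latency, the warm-up count, and the repeat count are assumptions chosen for illustration.

```python
import statistics
import time


def measure_latency(run_once, warmup=5, repeats=50):
    """Time a zero-argument callable and return latency statistics in seconds."""
    for _ in range(warmup):
        run_once()  # warm-up runs are not timed (caches, lazy initialization)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "mean": statistics.fmean(samples),
        "median": statistics.median(samples),
        "p95": samples[min(len(samples) - 1, int(0.95 * len(samples)))],
    }
```

With an ONNX Runtime session you could pass something like lambda: session.run(None, {"input": batch}), where session, the input name, and batch are placeholders for your own objects. Comparing the median before and after an optimization gives a speedup figure for your setup.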

Industrial Automation at the Edge

ONNX models are also transforming industrial automation by enabling real-time decision-making on factory floors. For example, edge devices equipped with ONNX models can detect defects in products or monitor machinery for signs of wear and tear. This reduces downtime and improves overall efficiency.

By minimizing kernel launch times and optimizing memory usage, ONNX ensures these systems operate smoothly, even in environments with limited computational power. This scalability makes ONNX a key enabler of Industry 4.0.

Tip: When deploying ONNX models in edge environments, consider using ONNX Runtime for optimized performance and compatibility with various hardware platforms.

Benefits and Challenges of ONNX in Machine Vision

Benefits: Flexibility, Speed, and Cost-Effectiveness

ONNX offers unmatched flexibility for machine vision applications. You can train models in one framework, such as PyTorch, and deploy them seamlessly across different platforms. This interoperability ensures your AI solutions adapt to diverse environments, from cloud systems to edge devices. ONNX also supports a wide range of hardware, including CPUs, GPUs, and specialized accelerators, making it ideal for scalable deployment.

Speed is another key advantage. ONNX optimizes models for faster inference, reducing latency and improving real-time performance. Techniques like post-training quantization shrink model sizes without sacrificing accuracy. This makes ONNX perfect for applications requiring quick decision-making, such as industrial automation or traffic monitoring.

Cost-effectiveness is a major benefit. By using ONNX, you can avoid vendor lock-in and reduce expenses associated with proprietary tools. Its open-source nature allows you to leverage community-driven advancements, ensuring your machine learning projects remain budget-friendly.

Tip: Use ONNX Runtime to maximize performance and minimize costs during deployment.

Challenges: Compatibility and Learning Curve

Despite its benefits, ONNX presents some challenges. Converting models to ONNX can be tricky, especially if your models include custom layers. You may need to invest time in debugging and adapting these layers for successful conversion.

Maintaining compatibility across ONNX versions and frameworks also requires attention. Updates to ONNX may introduce changes that affect your existing models, leading to potential deployment issues. Additionally, ONNX has limited support for proprietary operations, which can restrict its use in specialized applications.
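One way to catch version problems early is to inspect which operator set (opset) versions a model file declares and compare them with what your target runtime accepts. The sketch below assumes the onnx Python package; the helper names and the max_supported mapping are hypothetical, and the supported versions themselves must come from your runtime's documentation.

```python
def describe_onnx_model(model_path: str) -> dict:
    """Validate an ONNX file and report the versions it was exported with."""
    import onnx  # imported lazily; requires the onnx package

    model = onnx.load(model_path)
    onnx.checker.check_model(model)  # raises if the model is structurally invalid
    return {
        "ir_version": model.ir_version,
        "producer": f"{model.producer_name} {model.producer_version}".strip(),
        # An empty domain string denotes the default ONNX operator set.
        "opsets": {imp.domain or "ai.onnx": imp.version for imp in model.opset_import},
    }


def unsupported_opsets(opsets: dict, max_supported: dict) -> dict:
    """Return the opsets whose version exceeds what the target runtime supports."""
    return {d: v for d, v in opsets.items() if v > max_supported.get(d, 0)}
```

If unsupported_opsets returns a non-empty result, re-exporting with a lower opset version is usually simpler than upgrading every deployed device.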

The learning curve for ONNX can be steep, particularly for beginners. You need to understand its model format, conversion processes, and optimization techniques to use it effectively. However, once you master these aspects, ONNX becomes a powerful tool for enhancing your AI projects.

Note: Start with simple models and gradually explore ONNX’s advanced features to overcome the learning curve.

Future Prospects of ONNX in Machine Vision

Advancements in AI Model Interoperability

ONNX is shaping the future of AI model interoperability by becoming the universal format for machine learning models. You can use ONNX to standardize how models are shared and deployed across different frameworks and hardware. This trend simplifies collaboration and accelerates innovation in AI.

Emerging advancements in ONNX also focus on optimizing models for low-power devices. These improvements make it easier to deploy AI solutions on mobile phones and IoT devices. Additionally, ONNX is expanding its support for generative AI models, enabling you to work with large-scale architectures like GPT or DALL-E. This integration opens new possibilities for creative applications in industries like design and entertainment.

Another promising area is federated AI learning. ONNX models can now be deployed across decentralized networks, allowing you to train models collaboratively without sharing sensitive data. This approach enhances privacy and security while maintaining high performance.

| Trend | Description |
|---|---|
| Standardization of AI Interoperability | ONNX is becoming the universal ML model format. |
| AI for Low-Power Devices | Enhanced ONNX optimizations for mobile and IoT applications. |
| Integration with Generative AI | ONNX supports large-scale generative models. |
| Federated AI Learning | ONNX models can be deployed across decentralized networks. |

These advancements highlight ONNX’s role in driving the future of AI, making it more accessible and versatile for developers like you.

Expanding Applications in IoT and Edge Devices

ONNX is revolutionizing IoT and edge computing by enabling seamless model portability. You can deploy ONNX models across various environments, including edge devices, without worrying about compatibility issues. This flexibility ensures consistent performance, whether you're working with smart sensors or industrial robots.

Optimizations in ONNX Runtime further enhance performance for AI applications in resource-constrained settings. For example, edge devices equipped with ONNX models can process data locally, reducing latency and bandwidth usage. This makes ONNX ideal for applications like traffic monitoring, healthcare wearables, and smart city infrastructure.

- ONNX enables model portability, allowing deployment across various environments, including edge devices.

- Interoperability ensures consistent performance of models across different platforms.

- ONNX Runtime optimizations enhance performance for AI applications in resource-constrained settings.

By leveraging ONNX, you can create scalable solutions that adapt to the unique challenges of IoT and edge computing. These capabilities position ONNX as a key enabler for the next generation of AI-powered devices.

ONNX machine vision systems combine interoperability, optimization, and scalability to advance computer vision applications. They enable you to deploy models across frameworks and hardware, ensuring flexibility and efficiency. These systems optimize inference speed and reduce model size, making them ideal for real-time tasks like industrial automation and edge computing.

The benefits of ONNX include faster processing, cost-effectiveness, and adaptability to diverse environments. Applications range from image classification to object detection, with emerging use cases in IoT and healthcare. The table below summarizes the key metrics you can use to evaluate an ONNX machine vision system:

| Metric Type | Description |
|---|---|
| Accuracy | Measures the overall correct predictions made by the model. |
| Precision | Indicates the accuracy of positive predictions. |
| Recall | Reflects the model's ability to detect positive cases. |
| F1 Score | The harmonic mean of precision and recall, providing a balance between the two. |
| Mean Squared Error | Used in regression tasks to assess prediction accuracy. |
| Processing Latency | Time taken for the model to make a prediction, crucial for real-time applications. |
| Energy Consumption | Indicates operational efficiency, measured in watts or joules per inference. |
| Model Size | Refers to the number of parameters or memory footprint, affecting deployment feasibility. |
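The first four metrics can be computed directly from predicted and true labels. The sketch below does so for a binary task in plain Python; the function name classification_metrics and the convention that label 1 marks the positive class (for example, "defect") are assumptions for this example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

Running it on the outputs of both the original and the converted (or quantized) model gives a quick check that optimization has not cost you accuracy.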

Looking ahead, ONNX will continue to drive innovation in machine vision technologies. Its advancements in AI interoperability and edge computing promise to expand its applications in IoT, healthcare, and space exploration. By adopting ONNX, you position yourself to leverage cutting-edge solutions for scalable and efficient computer vision systems.

FAQ

What is ONNX, and why is it important for machine vision?

ONNX (Open Neural Network Exchange) is a format that allows AI models to work across different frameworks and hardware. It simplifies deployment, improves performance, and ensures compatibility. For machine vision, ONNX enables faster inference and scalability, making it ideal for real-time applications.

How do you convert a model to ONNX format?

You can convert a model using tools like torch.onnx.export for PyTorch or tf2onnx for TensorFlow. These tools export your trained model into ONNX format, ensuring compatibility with various platforms. Always verify the converted model to ensure it works as expected.

Tip: Use sample input data during conversion to avoid errors.
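A simple way to verify a converted model is to run the same sample input through the original and the ONNX version, then compare the outputs within a tolerance. The helper below covers the comparison step in plain Python; the name outputs_match and the default tolerances are assumptions, and it expects both outputs as flat sequences of floats.

```python
import math


def outputs_match(reference, converted, rel_tol=1e-3, abs_tol=1e-5):
    """True if two flat sequences of floats agree element-wise within tolerance."""
    if len(reference) != len(converted):
        return False
    return all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
        for r, c in zip(reference, converted)
    )
```

Small differences are expected after conversion because operators may be fused or reordered, so exact equality is usually too strict a test. Quantized models typically need looser tolerances.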

Can ONNX models run on edge devices?

Yes, ONNX models can run on edge devices. Techniques like quantization reduce their size and improve speed, which makes them suitable for IoT devices, wearables, and other resource-constrained environments.

What tools support ONNX for machine vision?

Many tools support ONNX, including PyTorch, TensorFlow, ONNX Runtime, and NVIDIA TensorRT. These tools help you train, optimize, and deploy ONNX models efficiently. Choose a tool based on your hardware and application needs.

Are there any challenges when using ONNX?

You may face challenges like compatibility issues with custom layers or a steep learning curve for beginners. Regular updates to ONNX might also require adjustments to your models. Start with simple models to ease the learning process.

Note: Check ONNX documentation for troubleshooting tips.
