Advancements in Reinforcement Learning for Machine Vision 2025

Reinforcement learning has reshaped machine vision in 2025, giving vision systems greater adaptability and precision. You can see this in real-world applications, such as drones equipped with advanced learning frameworks. These drones demonstrated an 88.4% success rate in obstacle avoidance and completed 18 of 20 trials. Across industries, reinforcement learning machine vision systems have changed tasks like quality control and production optimization. For example, an electronics manufacturer in Penang reduced defect rates by 40% and inspection time by 60% using this technology. These advancements produce smarter, more efficient machine vision systems that adapt and improve continuously.

Key Takeaways

- Reinforcement learning helps machine vision systems get better over time. This makes tasks like avoiding obstacles more accurate and efficient.

- Deep reinforcement learning combines neural networks with classical reinforcement learning methods. This improves performance on hard tasks like detecting objects and classifying images.

- Simulated environments are safe places to train models. They let models learn without real-world risks and become more robust.

- Multi-agent systems let many agents work together. This boosts performance in changing environments, perfect for tasks like rescue missions.

- Reinforcement learning is changing fields like healthcare and robotics. It helps find health problems and makes factories work automatically for better results.

Foundations of Reinforcement Learning in Machine Vision

Reinforcement Learning Concept and Its Core Principles

Reinforcement learning is a method where machines learn by interacting with their environment. It uses a trial-and-error approach to maximize rewards. This process involves three key components: the agent (the learner), the environment (where the agent operates), and the actions (what the agent does). The agent receives feedback in the form of rewards or penalties, guiding it to improve its decisions over time.
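The agent, environment, action, and reward loop described above can be sketched in a few lines. This is a minimal illustration, not a vision system: the corridor environment, the reward values, and the names `step` and `train` are all hypothetical, and tabular Q-learning is used only because it is one of the simplest trial-and-error methods.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells. The agent starts at
# cell 0 and is rewarded for reaching cell 4.
N_STATES = 5
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # small penalty per step, reward at goal
    return next_state, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Agent: tabular Q-learning driven by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise act greedily.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Feedback: move the estimate toward reward + discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Best action in every non-terminal cell according to the learned values.
greedy_policy = [ACTIONS[max(range(2), key=lambda i: row[i])] for row in q_table[:-1]]
```

After training, `greedy_policy` holds the action the agent prefers in each cell. In a vision setting the integer state would be replaced by image features, which is where neural networks come in.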

Some core principles make reinforcement learning effective. For example, the exploration-exploitation trade-off ensures the agent balances trying new actions and using what it has already learned. Another principle, closed-loop experimental design, allows the system to adjust its actions based on real-time feedback. Training on dynamical models further enhances the agent's ability to adapt to complex environments.

| Core Principle | Description |
|---|---|
| Exploration-Exploitation Trade-off | Balances exploring new behaviors and exploiting learned policies, starting with high exploration and gradually reducing it. |
| Closed-loop Experimental Design | Adjusts actions based on real-time feedback, improving adaptability. |
| Training on Dynamical Models | Uses system models to optimize learning, focusing on maximizing information gain. |
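The "start with high exploration and gradually reduce it" idea from the table is often implemented as an annealed epsilon-greedy rule. The sketch below assumes a linear schedule. The function names and default values (`start=1.0`, `end=0.05`, `decay_steps=1000`) are illustrative choices, not values from any particular system.

```python
import random


def epsilon_at(step, start=1.0, end=0.05, decay_steps=1000):
    """Linearly anneal the exploration rate from `start` down to `end`."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


def choose_action(q_values, epsilon, rng=random):
    """Epsilon-greedy: random action with probability epsilon, else the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit
```

Early in training `epsilon_at(step)` is close to 1, so most actions are random. Once `step` passes `decay_steps`, the agent mostly exploits what it has learned while still exploring occasionally.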

How Machine Vision Systems Leverage Reinforcement Learning

Machine vision systems use reinforcement learning to tackle complex tasks. For instance, in lane-following applications, reinforcement learning helps develop steering policies that adapt to changing road conditions. By training on real-world scenarios, these systems improve their accuracy and reliability. A convolutional network trained with reinforcement learning has shown significant improvements in lane tracking, outperforming traditional methods.

Reinforcement learning also enhances tasks like image segmentation, object detection, and colorization. By fine-tuning models during training, machine vision systems achieve higher performance metrics. This adaptability makes reinforcement learning a powerful tool for optimizing computer vision applications.

The Role of Deep Reinforcement Learning in Vision Tasks

Deep reinforcement learning combines neural networks with reinforcement learning to handle complex vision tasks. It excels in areas like object detection, image classification, and pose estimation. For example, the Selective Particle Attention (SPA) algorithm allows models to focus on relevant features, improving learning efficiency. This approach reduces unnecessary data processing and adapts to task-specific needs.

| Application Type | Description |
|---|---|
| Image Classification | Categorizes images into predefined groups. |
| Object Detection | Identifies and locates objects in images. |
| Captioning | Generates descriptive text for images. |
| Object Segmentation | Divides images into meaningful segments. |
| Grasp Planning | Plans how to grasp objects effectively. |

Deep reinforcement learning also uses reward feedback to refine its learning process. This method improves flexibility and speed, making it ideal for dynamic environments. By leveraging these advancements, reinforcement learning machine vision systems continue to push the boundaries of what computer vision can achieve.

Breakthroughs in Reinforcement Learning Machine Vision Systems

Innovations in Deep Reinforcement Learning Architectures

Deep reinforcement learning has revolutionized how machines process and interpret visual data. By combining neural networks with reinforcement learning, these architectures can now handle high-dimensional data with remarkable efficiency. For instance, models like Deep Q-Networks (DQN), Proximal Policy Optimization (PPO), and Advantage Actor-Critic (A2C) have demonstrated significant improvements in learning speed and decision-making accuracy.

| Model | Average Reward per Episode | Episodes to Threshold | Time to Threshold | Frame Utilization Efficiency |
|---|---|---|---|---|
| DQN | High | Low | Short | Efficient |
| PPO | Moderate | Moderate | Moderate | Moderate |
| A2C | Variable | High | Long | Variable |
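To make the value-based approach concrete, the sketch below computes the one-step bootstrapped target that DQN-style methods regress their Q-network toward. Plain lists stand in for network outputs, and the function name and sample numbers are hypothetical. PPO and A2C are policy-gradient methods and optimize different objectives, so this example applies only to the DQN row.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute one-step Q-learning targets for a batch of transitions.

    rewards:        list of floats, one per transition
    next_q_values:  list of lists, Q(s', a) for every action a
    dones:          list of bools, True if the episode ended at s'
    """
    targets = []
    for r, next_q, done in zip(rewards, next_q_values, dones):
        # No future value is added once the episode has terminated.
        bootstrap = 0.0 if done else gamma * max(next_q)
        targets.append(r + bootstrap)
    return targets


# Illustrative batch of two transitions (made-up numbers).
batch_targets = td_targets(
    rewards=[1.0, 0.0],
    next_q_values=[[0.2, 0.5], [0.4, 0.1]],
    dones=[True, False],
    gamma=0.9,
)
```

In a full DQN the `next_q_values` would typically come from a separate, slowly updated target network evaluated on the next camera frame.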

These advancements enable reinforcement learning machine vision systems to adapt to complex environments. For example, in robotics, these systems allow robots to learn motor skills and navigate diverse terrains. In healthcare, deep reinforcement learning aids in developing personalized treatment strategies by analyzing imaging systems and patient data. This adaptability makes these architectures a cornerstone of modern computer vision.

Enhanced Training Through Simulated Environments

Simulated environments have become a game-changer for training reinforcement learning models. These environments provide a controlled setting where systems can learn and adapt without real-world risks. Metrics like success rate and robustness to disturbances highlight the effectiveness of this approach.

| Metric | History Length | Performance | Baseline Comparison |
|---|---|---|---|
| Success Rate | Varying | Higher with more history | Better than DAgger |

Using an asymmetric critic setup further enhances training. In this setup, the critic has access to privileged simulator state during training, while the policy acts only on the observations it will have at deployment, such as camera images. This method improves success rates significantly compared to traditional approaches. By contrast, direct reinforcement learning from raw pixel data often fails in high-dimensional environments. Bootstrapping techniques overcome these challenges, enabling systems to excel in tasks like depth estimation and object recognition.

Reported benefits of these training techniques include:

- Improved robustness to unknown disturbances.

- Consistent performance advantages across trials.

Simulated environments also accelerate training for perception models. These models can now process complex visual data, such as depth estimation, with greater accuracy. This capability is crucial for applications like autonomous vehicles and smart surveillance systems, where real-time perception is essential.

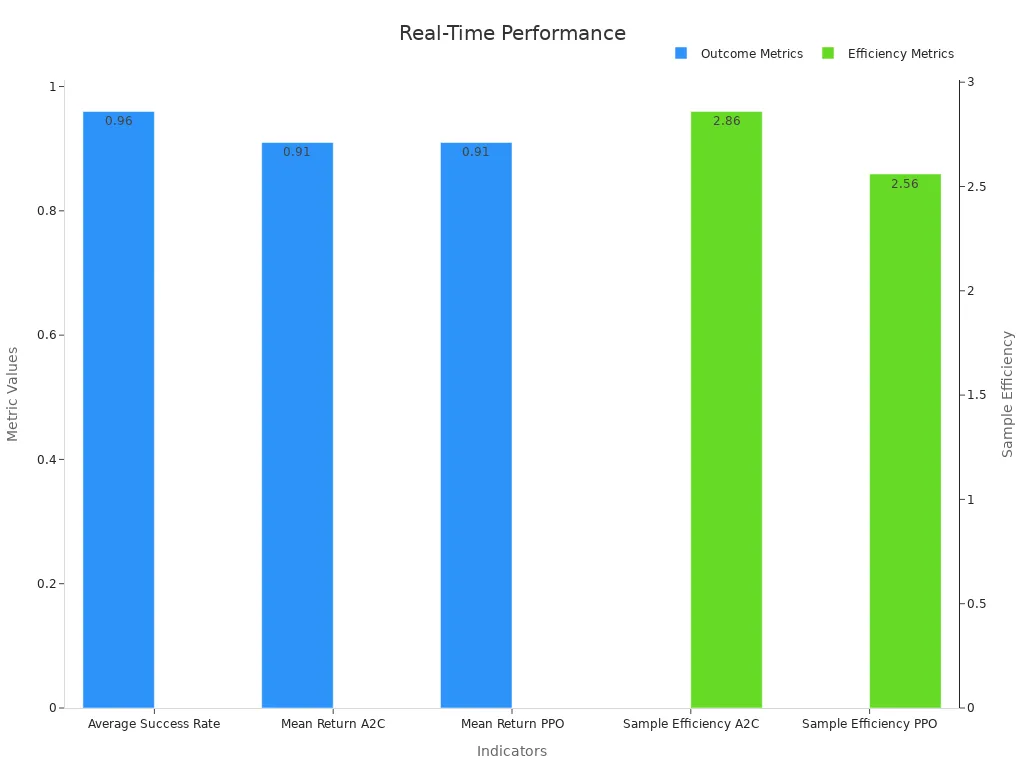

Real-Time Decision-Making in Dynamic Vision Scenarios

Real-time decision-making has always been a challenge for reinforcement learning machine vision systems. Recent advancements have addressed this by improving sample efficiency and decision accuracy. For example, reported results show A2C and PPO reaching a mean return of 0.91 with far fewer training samples (5.26k and 9.18k, respectively) than their vanilla counterparts.

| Indicator | Value | Description |
|---|---|---|
| Average Success Rate | 0.96 | Indicates outstanding decision-making performance in dynamic vision scenarios. |
| Sample Efficiency (A2C) | 2.86 times | Shows improvement in sample efficiency compared to vanilla counterparts. |
| Sample Efficiency (PPO) | 2.56 times | Demonstrates enhanced learning efficacy through integration with LVLM2P. |
| Mean Return (A2C) | 0.91 | Achieved with significantly fewer training samples (5.26k). |
| Mean Return (PPO) | 0.91 | Achieved with significantly fewer training samples (9.18k). |
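One way to read the sample-efficiency rows is as a ratio of baseline samples to samples used. The source does not state the exact definition, so the sketch below is an assumption, and the baseline figures it produces are implied by that assumption rather than reported results.

```python
def implied_baseline_samples(samples_used, efficiency_gain):
    """Samples a baseline would need if it were `efficiency_gain` times less efficient.

    Assumes efficiency_gain = baseline_samples / samples_used.
    """
    return samples_used * efficiency_gain


# Figures from the table above; the results are derived, not reported.
a2c_baseline = implied_baseline_samples(5_260, 2.86)  # about 15.0k samples
ppo_baseline = implied_baseline_samples(9_180, 2.56)  # about 23.5k samples
```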

These improvements empower imaging systems to make split-second decisions in dynamic environments. For instance, autonomous drones can now navigate obstacles with a 96% success rate. This capability is critical for applications requiring high-speed perception and decision-making, such as industrial robotics and smart surveillance.

Multi-Agent Systems for Collaborative Vision Tasks

Multi-agent systems have become a cornerstone of collaborative vision tasks in 2025. These systems involve multiple agents working together to achieve a shared goal. Each agent operates independently but communicates and coordinates with others to enhance overall performance. You can think of it as a team of robots or drones collaborating to complete a complex task, such as mapping a disaster zone or inspecting industrial equipment.

Why Multi-Agent Systems Matter

Multi-agent systems excel in scenarios where a single agent would struggle. For example, in large-scale environments, dividing tasks among multiple agents reduces the time required to complete them. This approach also improves reliability. If one agent fails, others can adapt and continue the task.

Tip: Multi-agent systems are particularly useful in dynamic environments where conditions change rapidly. Their ability to share information in real-time ensures they can respond effectively to new challenges.

Key Features of Multi-Agent Systems

Here are some of the defining features that make multi-agent systems so effective in collaborative vision tasks:

- Decentralized Decision-Making: Each agent makes decisions based on local data, reducing the need for a central controller. This improves scalability and robustness.

- Communication and Coordination: Agents share information to align their actions. For instance, drones in a swarm can exchange data about obstacles to optimize their flight paths.

- Task Specialization: Different agents can focus on specific aspects of a task. In a warehouse, one robot might handle object detection while another focuses on navigation.

| Feature | Benefit |
|---|---|
| Decentralized Decision-Making | Enhances scalability and reduces single points of failure. |
| Communication and Coordination | Improves efficiency and adaptability in dynamic environments. |
| Task Specialization | Allows agents to leverage their strengths for better overall outcomes. |
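The first two features can be illustrated with a small sketch in which agents exchange obstacle observations directly and then decide locally. The `Agent` class, its methods, and the grid coordinates are hypothetical, and a real swarm would also need to handle message loss and limited bandwidth.

```python
class Agent:
    """Hypothetical agent that keeps a local set of observed obstacle cells."""

    def __init__(self, name):
        self.name = name
        self.known_obstacles = set()

    def observe(self, cells):
        """Record obstacles seen by this agent's own camera."""
        self.known_obstacles.update(cells)

    def share_with(self, others):
        """Send local observations to peers (no central controller)."""
        for other in others:
            other.known_obstacles.update(self.known_obstacles)

    def is_safe(self, cell):
        """Local decision: a cell is safe if no known obstacle occupies it."""
        return cell not in self.known_obstacles


drones = [Agent("a"), Agent("b")]
drones[0].observe({(1, 2)})
drones[1].observe({(3, 4)})
for d in drones:
    d.share_with([o for o in drones if o is not d])
```

After the exchange, each drone avoids obstacles that only its peer has seen, and losing one drone does not remove the shared knowledge from the other.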

Applications in Machine Vision

Multi-agent systems have unlocked new possibilities for reinforcement learning machine vision systems. In agriculture, drones equipped with advanced vision capabilities can monitor crops collaboratively, identifying areas that need irrigation or pest control. In logistics, fleets of autonomous vehicles work together to optimize delivery routes, reducing fuel consumption and delivery times.

Another exciting application lies in search-and-rescue missions. Imagine a team of robots equipped with cameras and sensors scanning a collapsed building. By sharing visual data, they can quickly locate survivors and map safe paths for human rescuers.

Challenges and Future Directions

While multi-agent systems offer immense potential, they also present challenges. Ensuring seamless communication between agents can be difficult, especially in environments with limited connectivity. Training these systems requires sophisticated algorithms and significant computational resources. However, ongoing research aims to address these issues, paving the way for even more advanced applications.

As you explore the future of machine vision, multi-agent systems will undoubtedly play a pivotal role. Their ability to collaborate and adapt makes them indispensable for tackling complex, real-world problems.

Applications of Reinforcement Learning in Machine Vision

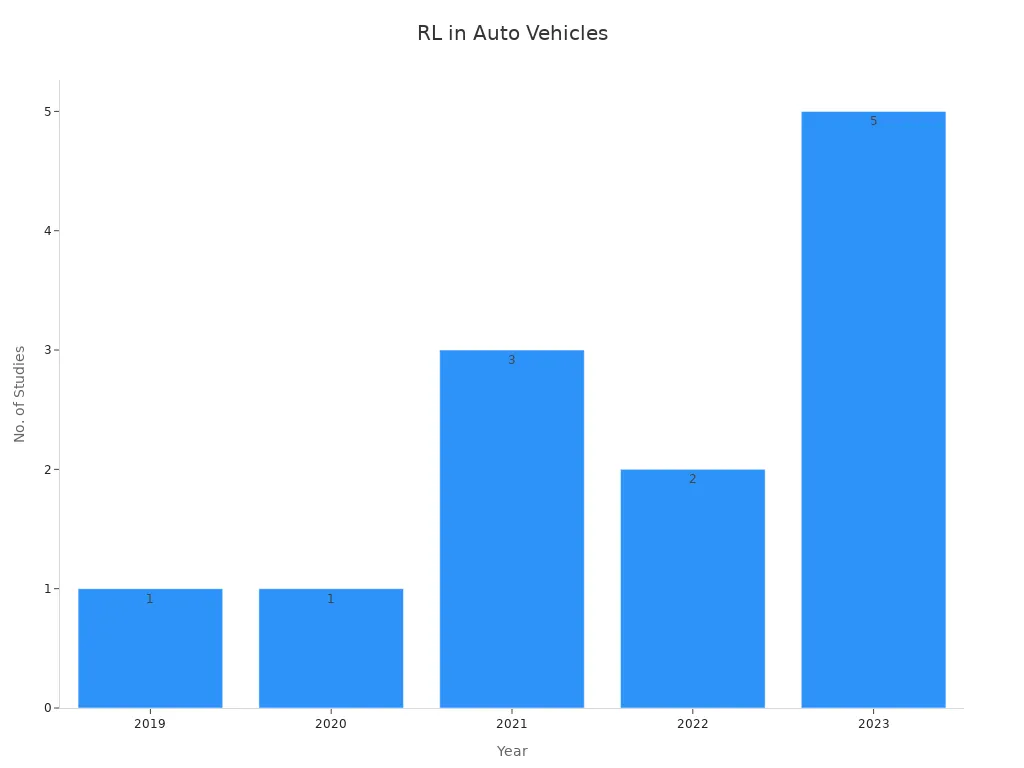

Autonomous Vehicles and Navigation

Reinforcement learning has become a cornerstone in the development of self-driving cars. It enables these vehicles to make decisions in real-time, adapting to dynamic road conditions. By learning from interactions with their environment, self-driving cars can optimize tasks like lane-keeping, obstacle avoidance, and path planning. For instance, reinforcement learning algorithms such as Deep Deterministic Policy Gradient (DDPG) have shown remarkable success in training vehicles to navigate complex urban settings.

Empirical studies highlight the growing impact of reinforcement learning in autonomous navigation systems. Researchers have explored various approaches, from path planning to cooperative adaptive cruise control. The table below summarizes some key studies in this field:

| Study Title | Authors | Year |
|---|---|---|
| Deep Reinforcement Learning in Autonomous Car Path Planning and Control | Mireya Cabezas-Olivenza et al. | 2020 |
| Stability analysis for autonomous vehicle navigation trained over DDPG | Hui Hu et al. | 2023 |
| Path planning for autonomous vehicles in unknown dynamic environment | J. Hossain et al. | 2023 |
| Covernav: Cover following navigation planning in unstructured outdoor | Victor Mazzilli et al. | 2023 |
| Lane following method based on improved DDPG algorithm | Yifan Hu et al. | 2023 |

Reinforcement learning also enhances perception in self-driving cars. Perception models trained with reinforcement learning can process visual data, such as depth estimation, to identify obstacles and predict traffic patterns. This capability ensures safer and more efficient navigation, even in unpredictable environments.

Robotics and Industrial Vision Systems

In robotics, reinforcement learning has revolutionized how machines interact with their surroundings. Robots equipped with computer vision and reinforcement learning can now perform tasks like object manipulation, assembly, and quality inspection with unprecedented precision. For example, reinforcement learning enables robots to learn grasping techniques by trial and error, improving their ability to handle objects of varying shapes and sizes.

Industrial vision systems benefit significantly from reinforcement learning. These systems use imaging systems to detect defects, monitor production lines, and ensure product quality. By leveraging reinforcement learning, they can adapt to changes in manufacturing processes, reducing downtime and increasing efficiency. For instance, a factory might use a robot with depth estimation capabilities to inspect products for defects, ensuring consistent quality.

Tip: Reinforcement learning allows robots to learn from their mistakes, making them more adaptable and reliable in dynamic industrial environments.

The integration of reinforcement learning with robotics has also paved the way for collaborative robots, or cobots. These robots work alongside humans, assisting with tasks that require both precision and adaptability. In warehouses, cobots equipped with perception models can navigate crowded spaces, pick items, and optimize storage layouts.

Healthcare Imaging and Diagnostics

Reinforcement learning is transforming healthcare by enhancing imaging systems and diagnostic tools. Unlike traditional methods that rely on extensive data annotation, reinforcement learning learns directly from interactions with medical data. This approach reduces the time and effort required for training while improving diagnostic accuracy.

In medical imaging, reinforcement learning automates tasks like tumor detection, organ segmentation, and anomaly identification. For example, it can analyze sequential data from imaging systems to track disease progression over time. This capability allows healthcare professionals to make more informed decisions, improving patient outcomes.

Reinforcement learning also addresses challenges like bias in diagnostic systems. By exploring new solutions, it reduces the risk of errors and ensures fairer outcomes. While its applications in healthcare are still emerging, reinforcement learning holds immense potential to revolutionize medical diagnostics.

Note: Reinforcement learning not only improves efficiency but also opens the door to innovative solutions that were previously unattainable.

Smart Surveillance and Security Applications

Reinforcement learning (RL) has transformed smart surveillance and security systems in 2025. These systems now adapt to dynamic environments, detect threats faster, and make decisions in real time. You can see this technology in action in areas like public safety, border control, and private property monitoring.

How Reinforcement Learning Enhances Surveillance

Traditional surveillance systems rely on predefined rules and static algorithms. RL introduces adaptability by allowing systems to learn from their environment. For example, a camera equipped with RL can adjust its focus and angle based on unusual activity. This makes it more effective at identifying potential threats.

Example: Imagine a security camera in a crowded airport. Instead of scanning every individual equally, it prioritizes areas with suspicious behavior, such as unattended bags or unusual movements.

Key Features of RL-Powered Surveillance Systems

Here are some ways RL improves surveillance and security:

- Anomaly Detection: RL models learn to identify unusual patterns, such as unauthorized access or loitering.

- Dynamic Resource Allocation: Systems allocate resources like cameras and drones to high-risk areas.

- Real-Time Decision-Making: RL enables instant responses, such as alerting authorities or locking doors.

- Behavior Prediction: By analyzing past data, RL predicts potential security breaches.

| Feature | Benefit |
|---|---|
| Anomaly Detection | Identifies threats faster and reduces false alarms. |
| Dynamic Resource Allocation | Optimizes the use of surveillance equipment. |
| Real-Time Decision-Making | Improves response times during emergencies. |
| Behavior Prediction | Anticipates risks before they occur. |

Applications in Public and Private Security

You’ll find RL-powered systems in various security applications:

- Public Safety: Cities use RL to monitor traffic, detect accidents, and manage crowds during events. For instance, RL helps identify suspicious objects in real time, ensuring quick action.

- Border Security: Drones equipped with RL patrol borders, identifying unauthorized crossings. These drones adapt to changing weather and terrain, making them more reliable than traditional systems.

- Home Security: Smart cameras with RL recognize familiar faces and alert you to unknown visitors. They also learn your daily routine, reducing false alarms.

Tip: When choosing a security system, look for RL-powered features like adaptive learning and real-time alerts. These systems offer better protection and fewer interruptions.

Challenges and Future Potential

While RL has improved surveillance, challenges remain. Training these systems requires large datasets and significant computational power. Ethical concerns, such as privacy violations, also need addressing. However, ongoing research aims to make RL-powered surveillance more efficient and ethical.

As you explore security solutions, RL-powered systems stand out for their adaptability and intelligence. They represent the future of surveillance, offering smarter and safer environments for everyone.

Challenges in Reinforcement Learning for Machine Vision

Computational Demands and Resource Constraints

Reinforcement learning in machine vision requires significant computational power. Training perception models involves processing large datasets and running complex algorithms. This process demands high-performance hardware, such as GPUs and TPUs, which can be expensive and energy-intensive. For example, training a deep reinforcement learning model for object detection may take days or even weeks on standard hardware. These resource constraints limit access to this technology for smaller organizations and researchers.

You also face challenges in deploying these systems in real-world applications. Many machine vision tasks, like autonomous navigation or real-time surveillance, require low-latency decision-making. Achieving this level of performance often involves optimizing models to run on edge devices with limited computational resources. Balancing efficiency and accuracy remains a critical hurdle.

Data Quality and Training Environment Limitations

The success of reinforcement learning depends heavily on the quality of training data. Poor-quality data can lead to inaccurate perception and unreliable decision-making. For instance, if a dataset lacks diversity, the resulting model may struggle to generalize across different scenarios. This issue becomes even more pronounced in dynamic environments, where conditions change rapidly.

Simulated environments, often used for training, also have limitations. While they provide a safe and controlled setting, they may not fully replicate real-world complexities. A model trained in a simulation might perform well in theory but fail when deployed in unpredictable environments. Bridging this gap between simulation and reality is essential for creating robust machine vision systems.

Ethical and Bias Concerns in Vision Systems

Ethical concerns in reinforcement learning-enabled vision systems often stem from biases in training data. Skewed datasets and a lack of diversity among development teams can result in discriminatory technologies. For example, an image recognition system trained on unrepresentative data might misidentify individuals from certain demographic groups. This not only undermines trust but also raises serious ethical questions.

To address these issues, you can use tools like AI Fairness 360 to monitor and mitigate bias in datasets and models. Regular audits and diverse datasets improve transparency and accountability. TuringBox and dedicated bias detection tools also help identify and correct biases, supporting fairer outcomes. However, the "black box" nature of many AI algorithms remains a challenge, as it conceals decision-making processes and hinders accountability.

| Tool Name | Purpose |
|---|---|
| TuringBox | Benchmarks performance and enables independent evaluation. |
| AI Fairness 360 | Detects and mitigates bias in datasets and models. |
| Responsible AI Licenses | Provides ethical guidelines for software development. |
| Bias Detection Tools | Identifies biases in datasets. |
| Datasheets for Datasets | Improves transparency in dataset usage and characteristics. |

By addressing these ethical challenges, you can foster trust in machine vision systems and ensure they serve all users equitably.

Generalization Across Diverse Domains

Reinforcement learning (RL) in machine vision has made significant strides in adapting to diverse domains. You might wonder why this matters. Generalization allows a single system to perform well across multiple tasks without retraining. This capability saves time, reduces costs, and increases efficiency.

Why Generalization is Crucial

Machine vision systems often face challenges when moving from one domain to another. For example, a model trained to detect defects in electronics may struggle with identifying flaws in textiles. Generalization ensures that the system adapts to new environments with minimal adjustments. This flexibility is essential for industries like manufacturing, healthcare, and agriculture.

Tip: Look for RL models that use transfer learning. This technique helps systems apply knowledge from one domain to another, improving adaptability.

Techniques for Achieving Generalization

Several methods help RL systems generalize effectively:

- Domain Randomization: Exposes the model to varied training scenarios, making it robust to unseen conditions.

- Meta-Learning: Teaches the system how to learn, enabling faster adaptation to new tasks.

- Multi-Task Learning: Trains the model on multiple tasks simultaneously, enhancing its versatility.

| Technique | Benefit |
|---|---|
| Domain Randomization | Prepares the system for unpredictable environments. |
| Meta-Learning | Speeds up adaptation to new tasks. |
| Multi-Task Learning | Improves performance across a range of applications. |
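Domain randomization is the easiest of these techniques to show in code. The sketch below perturbs brightness, contrast, and pixel noise on a tiny grayscale image stored as nested lists. The function name and the perturbation ranges are illustrative assumptions. Real pipelines usually randomize textures, lighting, and camera pose inside the simulator as well.

```python
import random


def randomize_image(image, rng):
    """Apply a random brightness shift, contrast scale, and pixel noise.

    `image` is a list of rows of grayscale values in [0, 255].
    """
    brightness = rng.uniform(-30, 30)
    contrast = rng.uniform(0.7, 1.3)
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            # Scale contrast around mid-gray, then shift brightness.
            value = (pixel - 128) * contrast + 128 + brightness
            value += rng.gauss(0, 5)  # sensor-like noise
            new_row.append(int(min(max(value, 0), 255)))  # clamp to valid range
        out.append(new_row)
    return out


rng = random.Random(0)
base = [[100, 150], [200, 50]]
# Each training episode would see a differently perturbed copy of the scene.
variants = [randomize_image(base, rng) for _ in range(3)]
```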

Real-World Examples

You can see generalization in action in autonomous vehicles. These systems navigate urban streets, highways, and rural roads without retraining. Similarly, in healthcare, RL-powered imaging tools identify diseases across different patient demographics. This adaptability ensures consistent performance, regardless of the domain.

Note: Generalization is not just a technical achievement. It’s a step toward creating smarter, more reliable systems that work seamlessly across industries.

By focusing on generalization, you unlock the full potential of RL in machine vision. This approach ensures that systems remain effective, no matter the challenge.

Future Directions for Reinforcement Learning in Machine Vision

Quantum Computing and Its Potential Impact

Quantum computing could reshape reinforcement learning in machine vision. You can think of quantum systems as tools that may solve certain problems faster than traditional computers. These systems use quantum properties like superposition and entanglement to process data in ways classical systems cannot. For example, quantum deep reinforcement learning has already shown promise in healthcare, particularly in adaptive radiotherapy for oncology.

| Source | Insight |
|---|---|
| Exploring the Latest Trends in Machine Learning Research | Quantum algorithms aim to solve complex problems more efficiently than classical methods. |
| A systematic review of quantum machine learning for digital health | Quantum reinforcement learning supports clinical decision-making in oncology applications. |
| What Is the Future of Machine Learning? | Quantum processors enhance machine learning capabilities, boosting reinforcement learning. |

As quantum processors improve, you’ll see faster and more efficient training for machine vision systems. This advancement will allow these systems to handle high-dimensional data, such as images, with greater precision.

Expanding into Augmented and Virtual Reality

Reinforcement learning is also making strides in augmented reality (AR) and virtual reality (VR). These technologies rely on machine vision to create immersive experiences. With reinforcement learning, AR and VR systems can adapt to user behavior in real time. For instance, an AR application could adjust its visual overlays based on your movements, making the experience more interactive.

In VR, reinforcement learning enhances object recognition and scene understanding. This improvement allows virtual environments to respond dynamically to your actions. Imagine a VR training program that adjusts its difficulty based on your performance. These advancements make AR and VR more engaging and effective for education, gaming, and professional training.

Bridging Simulation and Real-World Deployment

Transitioning from simulation to real-world deployment remains a challenge for reinforcement learning systems. The appearance gap, or the visual differences between simulated and real-world images, often hinders performance. Metrics like the Fréchet Inception Distance (FID) help measure this gap. A reported FID score of 198.82 highlights the discrepancies between simulated and real images.

- The appearance gap arises from factors like lighting and texture differences.

- FID quantifies the similarity between simulated and real-world image distributions.

- A lower FID score indicates better alignment between the two datasets.
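For reference, the standard definition of FID fits a Gaussian to the Inception-network features of each image set and measures the Fréchet distance between the two. The subscripts below, r for real and s for simulated, are notation chosen for this illustration.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_s \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_s - 2\,(\Sigma_r \Sigma_s)^{1/2} \right)
```

Here \mu and \Sigma are the mean vector and covariance matrix of the features for each set. The score is zero only when both the means and the covariances match, which is why lower values indicate a smaller appearance gap.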

By addressing these gaps, you can ensure that machine vision systems trained in simulations perform reliably in real-world scenarios. This progress will accelerate the adoption of reinforcement learning in industries like autonomous driving and robotics.

Collaborative Research and Open-Source Innovations

Collaborative research and open-source innovations have become essential for advancing reinforcement learning in machine vision. You can see this in the way researchers, developers, and organizations work together to solve complex problems. By sharing knowledge and resources, they accelerate progress and make cutting-edge technologies accessible to everyone.

Why Collaboration Matters

Collaboration allows you to benefit from diverse perspectives. When experts from different fields come together, they create solutions that are more robust and innovative. For example, partnerships between universities and tech companies often lead to breakthroughs in algorithms and architectures. These collaborations also help bridge the gap between academic research and real-world applications.

Tip: Look for collaborative projects in your field of interest. They often provide opportunities to learn and contribute.

The Role of Open-Source Platforms

Open-source platforms play a key role in democratizing technology. They allow you to access tools, datasets, and pre-trained models without cost. This reduces barriers to entry and fosters innovation. Popular platforms like TensorFlow, PyTorch, and OpenAI Gym have become indispensable for researchers and developers.

| Platform | Purpose | Example Use Case |
|---|---|---|
| TensorFlow | Machine learning framework | Training vision models |
| PyTorch | Deep learning library | Developing reinforcement learning models |
| OpenAI Gym | Simulation environment | Testing RL algorithms |

How You Can Contribute

You can contribute to open-source projects by sharing your code, reporting bugs, or improving documentation. These contributions not only enhance the community but also help you build skills and gain recognition.

Note: Collaborative research and open-source innovations ensure that advancements in machine vision benefit everyone, not just a select few.

Reinforcement learning has reshaped machine vision systems in 2025, making them smarter and more adaptive. You’ve seen breakthroughs in deep learning architectures, real-time decision-making, and collaborative multi-agent systems. These advancements have unlocked new possibilities in industries like healthcare, robotics, and security.

Note: The potential of reinforcement learning goes beyond current applications. It holds the key to solving complex problems and creating innovative solutions.

To keep this momentum, you can support ongoing research and open-source collaborations. Together, these efforts will overcome challenges and pave the way for smarter, more efficient technologies. 🚀

FAQ

What is reinforcement learning in simple terms?

Reinforcement learning teaches machines to learn from their actions. They try different actions, get feedback (rewards or penalties), and improve over time. Think of it like training a dog with treats for good behavior.

How does reinforcement learning improve machine vision?

Reinforcement learning helps machine vision systems adapt to new tasks. It trains them to make better decisions by learning from their environment. For example, it improves object detection or navigation in changing conditions.

Can reinforcement learning work in real-time?

Yes, it can. Modern algorithms process data quickly, enabling real-time decision-making. For instance, drones use reinforcement learning to avoid obstacles instantly while flying.

What industries benefit most from reinforcement learning in machine vision?

Industries like healthcare, robotics, and security benefit greatly. It improves diagnostics, automates manufacturing, and enhances surveillance systems. Autonomous vehicles also rely on it for navigation.

Is reinforcement learning hard to implement?

It can be challenging due to high computational needs and complex algorithms. However, open-source tools like TensorFlow and PyTorch make it easier for developers to start experimenting.

Tip: Start with simple projects and gradually explore advanced applications to build your skills.
