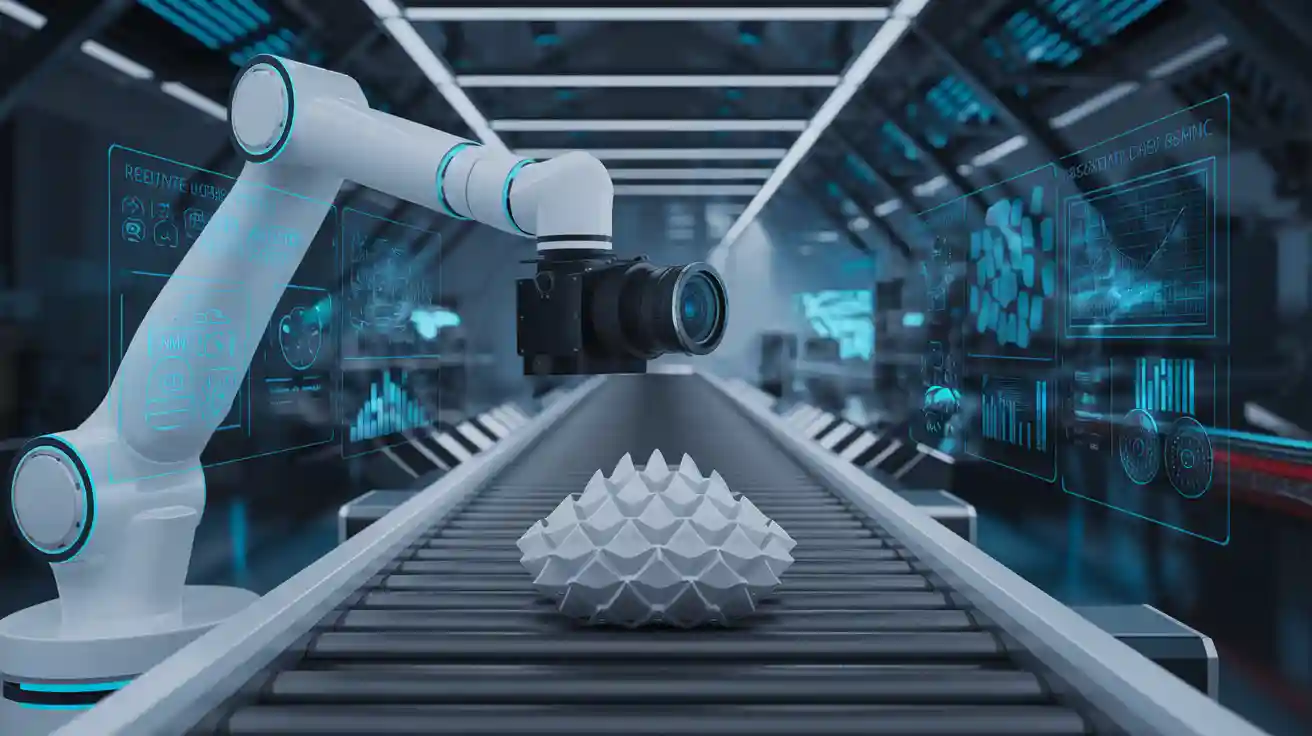

Understanding Robotic Guidance Machine Vision Systems in 2025

Robotic guidance machine vision systems are transforming how robots perceive and interact with their surroundings. These systems use cameras and image-analysis algorithms to interpret visual data, so robots can execute tasks precisely. Adoption has surged in 2025 because the systems raise productivity and safety in industries like manufacturing and healthcare. For example, machine vision improves assembly accuracy and flaw detection, both critical on high-speed production lines. Automation powered by these systems drives operational efficiency: 93% of digital lighthouse factories report growth benefits. With the market projected to grow at a CAGR of 9.86%, these technologies are redefining efficiency and quality control across sectors.

Key Takeaways

- Robotic vision systems help industries work faster and more safely.

- They drive automation in sectors such as manufacturing and healthcare.

- High-resolution cameras and image-processing software locate and inspect objects quickly.

- Fast, automated inspection catches defects early and keeps quality high.

- Vision systems communicate with robot controllers, so robots can adapt to new tasks easily.

- Vision-guided robots save time on repetitive jobs.

- They make fewer mistakes and keep output consistent from one part to the next.

- Although the upfront investment is significant, these systems can cut costs and support long-term growth.

Components of Robotic Guidance Machine Vision Systems

Cameras and Vision Sensors

Cameras and vision sensors form the backbone of any robotic guidance machine vision system. These devices capture visual data, enabling robots to "see" and interpret their surroundings. High-resolution cameras, especially those exceeding 25 megapixels, have become industry benchmarks in 2025. They excel at identifying fine defects on large surfaces, making them indispensable in precision-driven industries like automotive and manufacturing. Advanced image-processing software complements these cameras, ensuring real-time analysis and reducing error rates during quality control.

Tip: When selecting cameras for your robot guidance systems, prioritize resolution and compatibility with your application needs. High-resolution sensors are ideal for tasks requiring detailed inspections, while lower-resolution models may suffice for simpler operations.
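To judge whether a sensor has enough resolution, it helps to work out how much of the part each pixel covers. The sketch below is a back-of-the-envelope calculation, assuming a lens that maps the full field of view onto the sensor without distortion and a rule of thumb (an assumption, not a standard) that a defect must span about 3 pixels to be detected reliably. The field-of-view and sensor widths are hypothetical.

```python
import math


def mm_per_pixel(fov_mm: float, pixels: int) -> float:
    """Width of the scene covered by one pixel along one axis."""
    return fov_mm / pixels


def smallest_detectable_defect_mm(fov_mm: float, pixels: int, min_pixels: int = 3) -> float:
    """Smallest defect size, assuming a defect must span `min_pixels` pixels (rule of thumb)."""
    return mm_per_pixel(fov_mm, pixels) * min_pixels


def required_pixels(fov_mm: float, defect_mm: float, min_pixels: int = 3) -> int:
    """Pixels needed along one axis so a defect of `defect_mm` spans `min_pixels` pixels."""
    return math.ceil(fov_mm * min_pixels / defect_mm)


# Hypothetical 600 mm field of view imaged by two sensor widths.
for width_px in (2448, 5120):  # about 5 MP (2448 x 2048) and about 26 MP (5120 x 5120)
    defect = smallest_detectable_defect_mm(600.0, width_px)
    print(f"{width_px} px wide: smallest defect about {defect:.2f} mm")
print("pixels needed for 0.5 mm defects:", required_pixels(600.0, 0.5))
```

Under these assumptions, the 5120-pixel-wide sensor resolves defects roughly half the size of those visible to the 2448-pixel-wide one, which is why the 25-megapixel class matters for large surfaces.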

Processing Software and Algorithms

Image processing software is the brain of a machine vision system. It analyzes the visual data captured by cameras and translates it into actionable instructions for the robot. Modern software relies heavily on artificial intelligence and machine learning algorithms to improve accuracy and efficiency. Researchers evaluating these systems often use mixed-methods approaches, combining qualitative and quantitative data and scoring model performance with metrics such as accuracy and F1-score.

| Evidence Type | Description |
|---|---|
| Mixed-Methods Approach | Utilized both qualitative and quantitative methods for comprehensive analysis of AI and machine vision. |
| Data Collection | Included primary data from expert interviews and surveys, and secondary data from publicly available datasets. |
| Performance Evaluation | Employed statistical methods and machine learning algorithms to assess model effectiveness using metrics like accuracy and F1-score. |

Comparative studies show that vision-based approaches outperform traditional non-vision methods in processing speed, accuracy, and adaptability. This makes them ideal for dynamic environments where robots must respond to changing conditions.

| Approach Type | Processing Speed | Accuracy | Adaptability |
|---|---|---|---|
| Vision-based | Improved | High | Dynamic |
| Traditional non-vision | Limited | Moderate | Predetermined |

Integration with Robotic Controllers

Integration bridges the gap between machine vision systems and robotic controllers, ensuring seamless communication and responsiveness. Networking protocols have replaced discrete signals, offering rich data structures and real-time debugging capabilities. Key integration features include:

- Native support for advanced sensor integration, including 3D vision systems and force-torque sensors, with plug-and-play functionality.

- Networking protocols provide robust data structures, messages, and responses for efficient communication.

- ROS 2 integration supports real-time control, with reported latencies consistently below 1 millisecond.
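The kind of structured message that replaces discrete I/O signals can be sketched as follows. This is a hypothetical vision-to-controller payload serialized as JSON; the `PickResult` type and its field names are illustrative assumptions, not a vendor protocol. Real cells typically use industrial protocols such as EtherNet/IP or PROFINET, or ROS 2 message types such as `geometry_msgs/msg/PoseStamped`.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PickResult:
    """Hypothetical vision-to-controller message; field names are illustrative, not a standard."""
    seq: int            # sequence number so the controller can detect dropped or reordered messages
    timestamp_ns: int   # capture time of the image the pose was computed from
    x_mm: float
    y_mm: float
    z_mm: float
    rz_deg: float       # rotation about the tool Z axis
    confidence: float   # detector score in [0, 1]


def encode(msg: PickResult) -> bytes:
    """Serialize a result for transmission."""
    return json.dumps(asdict(msg)).encode("utf-8")


def decode(payload: bytes, min_confidence: float = 0.8) -> PickResult:
    """Parse a result and reject values the robot should not act on."""
    msg = PickResult(**json.loads(payload.decode("utf-8")))
    if not 0.0 <= msg.confidence <= 1.0:
        raise ValueError("confidence out of range")
    if msg.confidence < min_confidence:
        raise ValueError(f"low-confidence pick ({msg.confidence:.2f}); request a new image")
    return msg
```

Carrying the capture timestamp and a sequence number lets the controller discard stale or out-of-order results, and rejecting low-confidence detections keeps the robot from acting on a bad image.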

Case studies validate the effectiveness of integration in improving system responsiveness. For instance, a vision-guided robotics system equipped with deep learning algorithms demonstrated improved tracking accuracy and adaptability. Its dual-coordinate approach allowed the robot to adjust to environmental changes, making it well suited to tasks like robotic welding and autonomous object tracking.

How Robotic Guidance Machine Vision Systems Work

Capturing and Analyzing Visual Data

A robotic guidance machine vision system begins its process by capturing visual data through cameras or sensors. These devices collect high-resolution images or videos of the environment, which the system then analyzes to extract meaningful information. Advanced 3D vision systems have become a game-changer, offering greater accuracy in tasks like part-picking and inspection. For instance, 3D systems improve picking accuracy by up to 25% compared to traditional 2D systems.

The efficiency of these systems is remarkable. Machine vision reduces inspection errors by over 90% and lowers defect rates by up to 80%. Additionally, automated visual inspection can handle up to 10,000 parts per hour, significantly boosting productivity. The table below highlights some key efficiency improvements:

| Evidence Description | Efficiency Improvement |
|---|---|
| Vision systems reduce inspection errors | Over 90% compared to manual inspection |
| 3D vision systems increase picking accuracy | Up to 25% over traditional 2D systems |
| Automated visual inspection reduces defect rates | Up to 80% |
| Machine vision inspection reduces human error rates | From 25% to under 2% |

Object Detection and Recognition

Once the system captures and processes visual data, it identifies and classifies objects within the scene. Object detection and recognition rely on advanced algorithms, including deep learning models, to achieve high accuracy. These models analyze features like shape, size, and texture to distinguish between objects.

Performance metrics such as Intersection over Union (IoU) and Mean Average Precision (mAP) measure the effectiveness of these systems. For example, IoU evaluates how well the predicted bounding boxes align with the actual objects, while mAP provides an overall accuracy score across different object classes. The table below outlines key metrics used in object detection:

| Metric | Description |
|---|---|
| Intersection over Union (IoU) | Measures the overlap between predicted bounding boxes and ground-truth boxes, with a common threshold of 0.5 for a match. |
| Precision | The ratio of true positive predictions to the total predicted positives, indicating the accuracy of the positive predictions. |
| Recall | The ratio of true positive predictions to the total actual positives, reflecting the model's ability to find all relevant instances. |
| F1 Score | The harmonic mean of precision and recall, providing a single score that balances both metrics. |
| Mean Average Precision (mAP) | The mean of average precision across different classes, often evaluated at various IoU thresholds (e.g., mAP@0.5, mAP@0.75). |
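The first four metrics in the table can be computed in a few lines. The sketch below assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max), predictions already sorted by confidence, and simple greedy matching at an IoU threshold of 0.5. It stops at precision, recall, and F1; full mAP additionally averages precision over recall levels and object classes.

```python
def iou(a, b):
    """Intersection over Union of two boxes (x_min, y_min, x_max, y_max)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, truths, thr=0.5):
    """Greedy one-to-one matching; returns (true positives, false positives, false negatives)."""
    matched = set()
    tp = 0
    for p in preds:  # assumed sorted by confidence, highest first
        best_j, best_iou = None, thr
        for j, t in enumerate(truths):
            if j in matched:
                continue
            score = iou(p, t)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    return tp, len(preds) - tp, len(truths) - tp


def precision_recall_f1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 11, 11), (50, 50, 60, 60)]  # one good detection, one spurious
print(precision_recall_f1(*match_detections(preds, truths)))
```

Here the first prediction overlaps its ground-truth box with an IoU of 81/119 (about 0.68), so it counts as a match; the second matches nothing, and one ground-truth object is missed.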

Decision-Making and Task Execution

After detecting and recognizing objects, the system moves to decision-making and task execution. This stage involves interpreting the analyzed data to determine the next steps. For example, a robot might decide how to pick up an object or assemble a component based on its position and orientation.
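Turning a detection into a robot motion usually requires mapping the object's pose from camera coordinates into the robot's base frame. The sketch below assumes a fixed overhead camera, a planar workspace, and a 2D rigid transform (rotation and translation in millimetres) obtained earlier from calibration; the function names and parameters are hypothetical.

```python
import math


def camera_to_robot(x_c, y_c, theta_c_deg, tx, ty, rot_deg):
    """Map a planar object pose (x, y, heading) from camera to robot base coordinates.

    Assumes a 2D rigid transform: rotate by rot_deg, then translate by (tx, ty).
    """
    r = math.radians(rot_deg)
    x_r = math.cos(r) * x_c - math.sin(r) * y_c + tx
    y_r = math.sin(r) * x_c + math.cos(r) * y_c + ty
    theta_r = (theta_c_deg + rot_deg) % 360.0
    return x_r, y_r, theta_r


def gripper_angle(theta_deg):
    """A symmetric two-finger gripper can grasp at theta or theta + 180 degrees.

    Choose the equivalent angle in [-90, 90) to minimize wrist rotation.
    """
    return (theta_deg + 90.0) % 180.0 - 90.0


# Hypothetical calibration: camera frame rotated 90 degrees and offset (500, 200) mm from the robot base.
x, y, theta = camera_to_robot(100.0, 0.0, 170.0, tx=500.0, ty=200.0, rot_deg=90.0)
print(f"pick at ({x:.1f}, {y:.1f}) mm, wrist angle {gripper_angle(theta):.1f} deg")
```

Folding the angle into a 180-degree range reflects the decision step described above: the robot picks the grasp orientation that reaches the part with the least wrist motion.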

Machine vision systems excel in standardizing routine tasks and reducing errors. Automated inspection systems detect quality issues in real time, enabling proactive problem-solving. Additionally, technologies like collaborative robots (cobots) ensure consistent task execution, minimizing training time. These systems also increase throughput by maintaining production flow and reducing waste.

| Goal | Benefit |
|---|---|
| Reducing variation and defects | Automated inspection systems detect quality issues in real time, allowing for proactive problem-solving. |
| Increasing throughput | Technologies like AMRs ensure timely material delivery, maintaining production flow and reducing waste. |
| Standardizing routine tasks | Cobots and guided systems ensure consistency in task execution, reducing training time and errors. |

By combining these capabilities, robotic guidance machine vision systems deliver unmatched precision and efficiency, making them indispensable in modern automation.

Applications of Vision-Guided Robots

Manufacturing and Assembly

Vision-guided robots have transformed manufacturing processes by enhancing precision and efficiency. In the aerospace industry, a vision-guided assembly cell reduced cycle time by 26 seconds while achieving 97% consistency in part assembly. These robots use advanced vision systems to align and apply components with remarkable accuracy. This improvement not only boosts productivity but also ensures superior product quality.

In the automotive sector, vision-guided robots excel at detecting and handling randomly placed parts. Their ability to adapt to various orientations and appearances of components makes them indispensable in complex manufacturing environments. For example, automated assembly systems equipped with vision-guided robots streamline workflows, reduce errors, and improve overall quality.

Tip: If you're considering implementing automated assembly systems, focus on tasks requiring high precision and adaptability. Vision-guided robots can handle intricate operations with ease, making them ideal for industries like aerospace and automotive manufacturing.

Warehousing and Logistics

In warehousing and logistics, vision-guided robots optimize operations by automating repetitive tasks. These robots excel at sorting, picking, and packing items with speed and accuracy. For instance, they can identify and retrieve items from shelves, even in cluttered or dynamic environments. This capability reduces human error and increases throughput.

Automated systems powered by vision-guided robots also enhance inventory management. By scanning barcodes or QR codes, these robots track stock levels in real time, ensuring accurate inventory records. This reduces the risk of stockouts or overstocking, saving both time and money.
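The stock-tracking step can be sketched as a reconciliation between decoded codes and the inventory record. This assumes the vision system has already decoded one SKU string per visible item (decoding itself is not shown) and that reorder points come from a hypothetical replenishment policy.

```python
from collections import Counter


def reconcile(expected, scanned_codes, reorder_point):
    """Compare scanned barcode/QR values against recorded stock.

    expected: dict mapping SKU -> quantity recorded in the inventory system.
    scanned_codes: iterable of SKU strings decoded from shelf images (one per item seen).
    reorder_point: dict mapping SKU -> minimum quantity before reordering (hypothetical policy).
    Returns (discrepancies, skus_to_reorder).
    """
    counted = Counter(scanned_codes)
    discrepancies = {}
    reorder = []
    for sku in set(expected) | set(counted):
        on_shelf = counted.get(sku, 0)
        diff = on_shelf - expected.get(sku, 0)
        if diff:
            discrepancies[sku] = diff      # negative: missing items; positive: unrecorded items
        if on_shelf < reorder_point.get(sku, 0):
            reorder.append(sku)
    return discrepancies, sorted(reorder)


disc, to_reorder = reconcile({"A": 3, "B": 2}, ["A", "A", "B", "B", "C"], {"A": 3, "B": 1})
print(disc, to_reorder)
```

Reporting discrepancies separately from reorder decisions lets staff investigate misplaced or unrecorded items before the system triggers a purchase.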

Note: Vision-guided robots are particularly effective in e-commerce warehouses, where speed and accuracy are critical. Their ability to adapt to changing demands makes them a valuable asset in dynamic supply chain environments.

Healthcare and Surgical Robotics

Vision-guided robots play a pivotal role in healthcare, particularly in surgical applications. These robots assist surgeons by providing enhanced visualization and precision during procedures. For example, molecular image-guided systems enable surgeons to identify and target specific tissues with unparalleled accuracy.

Clinical trials have demonstrated the potential of these systems, though long-term funding challenges limit widespread validation. Performance metrics, such as kinematic data on instrument movement, objectively assess how these innovations improve surgeon-robot interactions. The table below highlights key insights:

| Evidence Type | Description |
|---|---|
| Clinical Trials | Few molecular image-guided procedures validated due to funding challenges. |
| Performance Metrics | Kinematic metrics assess instrument movement, such as speed and path length. |
| Health Technology Assessment | Multivariate assessments link robotic surgery outcomes to policy-making decisions. |

Vision-guided robots also enhance patient safety by reducing the risk of human error. Their precision minimizes tissue damage, leading to faster recovery times and better outcomes. These advancements make vision-guided robots a cornerstone of modern surgical practices.

Autonomous Vehicles and Drones

Autonomous vehicles and drones rely heavily on machine vision to navigate and perform tasks precisely. These systems use advanced cameras and sensors to capture their surroundings, enabling them to make real-time decisions. For example, drones equipped with machine vision can identify obstacles and adjust their flight paths to avoid collisions. This capability makes operations safer and more efficient, especially in complex environments.

Machine learning plays a crucial role in enhancing the performance of these systems. A recent study adapted machine learning frameworks to provide performance guarantees for robots operating in unfamiliar territory. In obstacle-avoidance tests with a drone, the framework guaranteed a success rate of 88.4%, and the drone succeeded in 18 of 20 trials (90%), exceeding that guarantee. The table below highlights the key findings from this research:

| Study Title | Key Findings | Performance Metrics |
|---|---|---|
| Machine learning guarantees robots’ performance in unknown territory | The study adapted machine learning frameworks to provide performance guarantees for robots in unfamiliar environments, validating the technique through obstacle-avoidance tests with a drone. | Guaranteed obstacle-avoidance success rate of 88.4%; the drone succeeded in 18 of 20 trials (90%). |

Machine vision also makes autonomous vehicles safer and more efficient. These systems detect pedestrians, traffic signs, and other vehicles, supporting smooth navigation. By processing visual data in real time, they reduce the risk of accidents and improve traffic flow. Delivery drones show a similar benefit, using vision systems to locate drop-off points accurately, which minimizes errors and supports timely deliveries.

As you explore the potential of vision-guided robots in autonomous vehicles and drones, you’ll notice their ability to adapt to dynamic conditions. These systems represent a significant step forward in automation, offering solutions that are both innovative and practical.

Benefits of Robot Vision Guidance Systems

Enhanced Precision and Efficiency

Robot vision guidance systems deliver a remarkable level of precision, enabling robots to perform tasks with unmatched accuracy. These systems excel in applications like assembly, inspection, and material handling. For example, vision-guided robots equipped with enhanced part recognition can identify and manipulate components with minimal errors. This capability reduces waste and ensures consistent product quality.

The integration of advanced algorithms further boosts performance. Robots can adapt to variations in size, shape, or orientation of objects, maintaining efficiency even in dynamic environments. By automating repetitive tasks, these systems free up human workers for more complex responsibilities. This not only improves productivity but also enhances overall operational efficiency.

Tip: To maximize efficiency, consider deploying robot guidance systems in processes requiring high precision, such as quality control or intricate assembly tasks.

Improved Safety in Industrial Environments

Vision-guided robots significantly enhance workplace safety by reducing the risk of accidents. These systems monitor environments in real time, identifying potential hazards before they escalate. For instance, Matroid's computer vision platform has improved airbag compliance rates from below 25% to over 90%. It also increased the detection and correction of unsafe observations by 400%, with zero safety incidents reported since its implementation.

Automated safety monitoring ensures consistent use of personal protective equipment (PPE) and adherence to safety protocols. This proactive approach benefits both workers and production teams, especially in high-risk environments. By minimizing human exposure to dangerous tasks, robot guidance systems create safer workplaces while maintaining productivity.

Note: Vision-guided robots are particularly effective in industries like manufacturing and construction, where safety is a top priority.

Cost-Effectiveness and Scalability

Robot vision guidance systems offer a cost-effective solution for businesses aiming to scale operations. While the initial investment may seem high, the long-term benefits can outweigh the costs. These systems reduce labor expenses by automating repetitive tasks and minimizing errors. Additionally, their ability to adapt to new tasks makes them a scalable option for growing businesses.

The modular design of modern vision-guided robots allows for easy upgrades and integration with existing workflows. This flexibility ensures that your investment remains relevant as your operational needs evolve. By improving accuracy and reducing downtime, these systems deliver a strong return on investment, making them an essential tool for businesses of all sizes.

Callout: Investing in robot guidance systems can help you achieve both short-term savings and long-term growth.

Real-Time Adaptability to Dynamic Conditions

Robotic guidance machine vision systems excel in adapting to dynamic environments. These systems process visual data in real time, allowing robots to respond instantly to changes in their surroundings. This capability is crucial in industries where conditions can shift rapidly, such as manufacturing, logistics, and healthcare.

For example, in a warehouse, a vision-guided robot can detect when an item has been misplaced or when a new object enters its workspace. It adjusts its actions immediately, ensuring smooth operations without human intervention. This adaptability reduces downtime and increases productivity.

Tip: To maximize efficiency, deploy vision-guided robots in environments where tasks or conditions frequently change. Their ability to adapt ensures consistent performance.

Machine vision systems achieve this adaptability through advanced algorithms and sensors. These technologies enable robots to recognize patterns, track moving objects, and predict potential obstacles. For instance, a robot equipped with 3D vision can identify and pick up items of varying shapes and sizes, even if they are scattered randomly.

| Feature | Benefit |
|---|---|
| Real-time data processing | Immediate response to environmental changes |
| Pattern recognition | Accurate identification of objects in dynamic settings |
| Obstacle prediction | Safer and more efficient navigation |
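The obstacle-prediction row can be illustrated with the simplest possible motion model. The sketch below assumes a constant-velocity model estimated from two tracked positions (in metres) and checks sampled future positions against a safety radius; production systems typically use filters such as a Kalman filter and account for measurement noise. All names and thresholds are hypothetical.

```python
import math


def predict_position(p_prev, p_curr, dt, horizon):
    """Constant-velocity extrapolation from two tracked (x, y) positions taken dt seconds apart."""
    vx = (p_curr[0] - p_prev[0]) / dt
    vy = (p_curr[1] - p_prev[1]) / dt
    return p_curr[0] + vx * horizon, p_curr[1] + vy * horizon


def will_conflict(obstacle_prev, obstacle_curr, robot_target, dt, horizon, safety_radius, steps=10):
    """True if the obstacle is predicted to come within safety_radius of the robot's target
    at any of `steps` evenly spaced times up to `horizon` seconds ahead."""
    for k in range(1, steps + 1):
        t = horizon * k / steps
        px, py = predict_position(obstacle_prev, obstacle_curr, dt, t)
        if math.hypot(px - robot_target[0], py - robot_target[1]) < safety_radius:
            return True
    return False


# A worker moving at 1 m/s along x; the robot plans to reach (1.0, 0.0) within the next second.
print(will_conflict((0.0, 0.0), (0.1, 0.0), (1.0, 0.0), dt=0.1, horizon=1.0, safety_radius=0.2))
```

If a conflict is predicted, the robot can pause or replan before the obstacle arrives rather than reacting after it enters the workspace.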

In healthcare, real-time adaptability proves invaluable. Surgical robots use vision systems to adjust their movements based on the patient’s anatomy. This precision minimizes errors and improves outcomes. Similarly, autonomous vehicles rely on real-time adaptability to navigate traffic and avoid accidents.

By leveraging these capabilities, you can ensure that your operations remain efficient and error-free, even in unpredictable conditions. Vision-guided robots provide the flexibility needed to thrive in today’s fast-paced environments.

Challenges in Implementing Machine Vision Systems

High Costs and ROI Concerns

Implementing machine vision systems often involves significant financial investment. Customizing these systems to fit your production line can cost between $50,000 and $200,000, with installation taking up to six weeks. For many businesses, this upfront cost creates hesitation, especially when ROI timelines are uncertain. Studies show that 68% of manufacturing firms require ROI within 18 months to approve such projects. However, the benefits can be substantial. For example, automotive suppliers have reported a 40% reduction in manual inspection costs after adopting these systems.

Energy efficiency also plays a role in cost considerations. Vision systems consuming less than 50 watts often command a 22% pricing premium. Yet, they can lead to significant savings. A pharmaceutical company projected annual energy savings of $420,000 by switching to energy-efficient systems.

| Evidence Type | Description |
|---|---|
| Customization Costs | Reconfiguring a system costs $50,000–$200,000 per line and takes 3–6 weeks. |
| ROI Dynamics | 68% of firms require ROI within 18 months; automotive suppliers report a 40% reduction in manual inspection costs. |
| Energy Efficiency Impact | Systems under 50 W carry a roughly 22% price premium; one pharmaceutical company projected $420K in annual energy savings. |
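A simple payback estimate shows how figures like these feed an 18-month approval threshold. The sketch below uses hypothetical costs; only the 40% inspection-cost reduction and the 18-month target come from the figures above, and it ignores financing, depreciation, and downtime during installation.

```python
import math


def inspection_savings(monthly_manual_cost, reduction=0.40):
    """Monthly savings if automation cuts manual inspection cost by `reduction`."""
    return monthly_manual_cost * reduction


def payback_months(upfront_cost, monthly_savings, monthly_running_cost=0.0):
    """Months until cumulative net savings repay the upfront cost (infinite if never)."""
    net = monthly_savings - monthly_running_cost
    if net <= 0:
        return math.inf
    return upfront_cost / net


def meets_target(upfront_cost, monthly_savings, monthly_running_cost=0.0, target_months=18.0):
    return payback_months(upfront_cost, monthly_savings, monthly_running_cost) <= target_months


# Hypothetical line: $25,000/month manual inspection, $150,000 system, $1,500/month upkeep.
savings = inspection_savings(25_000.0)
months = payback_months(150_000.0, savings, 1_500.0)
print(f"payback in {months:.1f} months; meets 18-month target: {meets_target(150_000.0, savings, 1_500.0)}")
```

Running the same calculation at the high end of the customization range ($200,000) shows how quickly a project can slip past the 18-month threshold.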

Technical Complexity and Integration Hurdles

Integrating machine vision systems into existing workflows can be challenging. Legacy systems often complicate the process due to outdated designs. You may also face difficulties adapting the system to handle diverse product characteristics. Real-time data processing is another critical requirement, as delays can disrupt operations.

Synchronization between hardware and software demands careful planning. For example, using real-time operating systems can improve performance but requires skilled engineers for setup. A systematic approach is essential to address these challenges. Selecting the right hardware and software ensures smoother integration and better results.

- Legacy systems create integration challenges.

- Machine vision must adapt to diverse product features.

- Real-time data processing is crucial for efficiency.

- Synchronization requires robust communication protocols.

- Skilled engineers are necessary for complex setups.
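Synchronization often comes down to pairing each image with the robot pose recorded at the same moment. The sketch below assumes both streams carry timestamps in seconds from a shared clock (for example, one synchronized via PTP, IEEE 1588) and that robot poses arrive sorted by time; the function name and the skew limit are hypothetical.

```python
import bisect


def nearest_pose(frame_ts, pose_ts, poses, max_skew):
    """Return the robot pose recorded closest in time to an image, or None if none is close enough.

    pose_ts must be sorted ascending, and poses[i] was recorded at pose_ts[i].
    """
    i = bisect.bisect_left(pose_ts, frame_ts)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(pose_ts)]  # neighbors on either side
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(pose_ts[j] - frame_ts))
    if abs(pose_ts[best] - frame_ts) > max_skew:
        return None  # stale or missing pose data: better to skip the frame than mis-locate a part
    return poses[best]


pose_ts = [0.000, 0.010, 0.020]   # poses streamed at 100 Hz
poses = ["p0", "p1", "p2"]
print(nearest_pose(0.012, pose_ts, poses, max_skew=0.005))
```

Binary search keeps the lookup fast even for long pose histories, and the skew limit turns a silent synchronization fault into an explicit rejected frame.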

Data Security and Privacy Issues

Machine vision systems often rely on large datasets, raising concerns about data security and privacy. Organizations must limit data collection to lawful and ethical purposes. Providing users with control over their data is equally important. For instance, reacquiring consent becomes necessary if the intended use of data changes.

Protecting sensitive data, such as health or education records, requires strict measures. Cryptography and access-control mechanisms help prevent data leaks. Additionally, privacy risks should be assessed throughout the system's lifecycle to minimize harm. Complying with regulations like the EU AI Act helps ensure that your system follows required practices.

| Practice | Description |
|---|---|
| Data Minimization | Collect only lawful and necessary data. |
| Consent Mechanisms | Allow users to control and access their data. |
| Security Best Practices | Use cryptography and access controls to prevent leaks. |
| Sensitive Data Protection | Protect sensitive data and use it in narrowly defined contexts. |
| Risk Assessment | Assess privacy risks throughout the system's lifecycle. |
| Regulatory Compliance | Follow governance rules like the EU AI Act for high-risk AI systems. |
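Data minimization can start at capture time. The sketch below blanks out regions such as people or faces before an image is stored, assuming a separate detector (not shown) supplies pixel boxes; it uses NumPy, and the function name is hypothetical. A solid fill is used because, unlike light blurring, it leaves nothing to recover.

```python
import numpy as np


def redact_regions(image, boxes, fill=0):
    """Return a copy of `image` with each (x_min, y_min, x_max, y_max) box overwritten by `fill`.

    Coordinates are pixel indices with exclusive max values and are clipped to the image bounds.
    The original array is left untouched.
    """
    out = image.copy()
    h, w = out.shape[:2]
    for x0, y0, x1, y1 in boxes:
        x0, x1 = max(0, x0), min(w, x1)
        y0, y1 = max(0, y0), min(h, y1)
        if x0 < x1 and y0 < y1:
            out[y0:y1, x0:x1] = fill
    return out


frame = np.full((480, 640, 3), 200, dtype=np.uint8)  # stand-in for a camera frame
safe = redact_regions(frame, [(100, 50, 180, 150)])  # box from a hypothetical person detector
print(safe[100, 120], frame[100, 120])
```

Redacting before storage means the sensitive pixels never reach disk, which is simpler to defend than restricting access to them afterwards.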

Limitations in Unstructured Environments

Robotic guidance machine vision systems excel in controlled environments, but unstructured settings present unique challenges. These environments lack predictable patterns, making it harder for robots to perform tasks accurately. For example, a cluttered warehouse or a construction site often has irregular objects, uneven surfaces, and unpredictable movements. These factors can confuse even the most advanced vision systems.

One major limitation is the difficulty in recognizing objects with inconsistent shapes, sizes, or textures. A robot might struggle to identify a partially obscured item or one that blends into its surroundings. This issue becomes more pronounced when lighting conditions change, such as in outdoor environments where shadows and glare vary throughout the day.

Note: You can improve performance in unstructured environments by using advanced 3D vision systems and AI algorithms trained on diverse datasets.

Another challenge is the inability to adapt quickly to dynamic conditions. For instance, in a busy warehouse, robots must navigate around moving workers and vehicles. Without precise real-time data processing, the robot may fail to avoid collisions or complete its tasks efficiently.

| Limitation | Impact |
|---|---|
| Irregular object recognition | Reduced accuracy in identifying and handling items |
| Changing lighting conditions | Errors in visual data processing |
| Dynamic environments | Increased risk of collisions and task failures |

You can also face integration issues when deploying these systems in unstructured settings. Robots may require additional sensors or software upgrades to handle the complexity, increasing costs and setup time. Despite these hurdles, ongoing advancements in AI and machine learning are helping robots become more adaptable. By investing in these technologies, you can overcome many of the limitations and unlock the full potential of robotic guidance systems in unstructured environments.

Tip: Start small by testing vision-guided robots in semi-structured environments before scaling to fully unstructured settings.

Latest Advancements in Robotic Guidance Machine Vision Systems

Innovations in AI and Deep Learning

Artificial intelligence and deep learning have transformed how robots perceive and interact with their surroundings. These advancements allow robots to process visual data with remarkable accuracy and speed. Machine learning algorithms now enable real-time data analysis, improving decision-making and task execution. For example, convolutional neural networks (CNNs) enhance image recognition, helping robots identify objects and navigate autonomously.

Deep learning also powers reinforcement learning (RL), which allows robots to learn from their environment. This capability optimizes actions for tasks like navigation and object manipulation. Imagine a robot in a warehouse learning to pick up items more efficiently after each attempt. Natural language processing (NLP) further enhances human-robot interaction by enabling robots to understand and respond to spoken commands.
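Learning from repeated attempts can be illustrated with an epsilon-greedy multi-armed bandit, a simplified, stateless relative of full reinforcement learning. In the sketch below the "strategies" are hypothetical grasp approaches and the success probabilities are simulated; a real robot would observe outcomes from its own sensors instead.

```python
import random


def epsilon_greedy_grasping(success_prob, trials, epsilon=0.1, seed=0):
    """Learn which grasp strategy works best from trial-and-error feedback.

    success_prob: simulated true success rate of each strategy (unknown to the learner).
    Returns (estimated success rate per strategy, number of times each was tried).
    """
    rng = random.Random(seed)
    n = len(success_prob)
    counts = [0] * n
    values = [0.0] * n  # running mean reward per strategy
    for _ in range(trials):
        if rng.random() < epsilon:
            a = rng.randrange(n)                         # explore: try a random strategy
        else:
            a = max(range(n), key=lambda i: values[i])   # exploit: use the best estimate so far
        reward = 1.0 if rng.random() < success_prob[a] else 0.0  # simulated grasp outcome
        counts[a] += 1
        values[a] += (reward - values[a]) / counts[a]    # incremental mean update
    return values, counts


values, counts = epsilon_greedy_grasping([0.2, 0.5, 0.9], trials=2000)
print([round(v, 2) for v in values], counts)
```

The epsilon parameter trades exploration against exploitation: a small value keeps testing alternatives occasionally, so the robot can still discover a better grasp if conditions change.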

The integration of computer vision with AI has been a game-changer. Robots can now interpret visual information with precision, making them ideal for tasks requiring high accuracy, such as manufacturing inspections or surgical procedures. These innovations ensure that robot vision guidance systems remain at the forefront of automation technology.

Tip: To maximize the benefits of AI in your robot guidance systems, focus on applications where precision and adaptability are critical.

The Role of Edge Computing in Robotics

Edge computing has become a cornerstone of modern robotics. By processing data near its source, edge computing reduces latency and enhances responsiveness. This capability is crucial for machine vision systems, where real-time data analysis can mean the difference between success and failure. For instance, robots in manufacturing environments rely on edge computing to detect defects instantly, minimizing errors and improving efficiency.
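A little arithmetic shows why latency matters. While a vision result travels to the controller, a part on a conveyor keeps moving, drifting by speed × latency. The speeds, latencies, and tolerance below are hypothetical, and the sketch ignores compensation techniques such as encoder-based conveyor tracking.

```python
def displacement_mm(conveyor_speed_mm_s, latency_ms):
    """How far a part moves while the vision result is in transit."""
    return conveyor_speed_mm_s * latency_ms / 1000.0


def max_latency_ms(conveyor_speed_mm_s, tolerance_mm):
    """Largest end-to-end latency that keeps uncompensated drift within the pick tolerance."""
    return tolerance_mm / conveyor_speed_mm_s * 1000.0


speed = 500.0  # mm/s, hypothetical conveyor
for label, latency in (("local edge inference", 10.0), ("cloud round trip", 150.0)):
    print(f"{label}: part moves {displacement_mm(speed, latency):.1f} mm")
print(f"latency budget for 2 mm tolerance: {max_latency_ms(speed, 2.0):.1f} ms")
```

Under these assumptions, a 10 ms local path lets the part move 5 mm and a 150 ms round trip lets it move 75 mm, while holding uncompensated drift within 2 mm would require an end-to-end latency of 4 ms or less. Latency variation (jitter) matters as well, because a fixed delay can be compensated but an unpredictable one cannot.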

Edge AI also boosts security by keeping sensitive data local, reducing the risk of breaches. It promotes scalability, allowing you to expand your robotic systems without overhauling your infrastructure. Distributed computing further enhances performance, enabling robots to handle complex tasks like predictive maintenance and autonomous navigation.

Case studies highlight the impact of edge computing in Industry 4.0. Machine vision systems integrated with edge AI automate processes that once required human intervention. This automation not only reduces labor costs but also improves accuracy and decision-making. For example, a robot equipped with edge AI can analyze visual data to predict when a machine needs maintenance, preventing costly downtime.

Note: Incorporating edge computing into your robot vision guidance systems can significantly enhance their performance and cost-efficiency.

Notable Technologies in 2025 (e.g., OpenCV)

Several established and emerging technologies underpin robotic guidance machine vision systems in 2025. OpenCV, the open-source computer vision library used in Python through its cv2 module, remains a versatile tool for image processing. It supports object detection, face detection and recognition, camera calibration, and 3D vision, which makes it a favorite among developers. Its optimized image-processing routines help robots process visual information quickly and accurately.

3D vision technology has also seen remarkable advancements. Robots equipped with 3D vision can now identify objects with complex shapes and textures, even in unstructured environments. This capability is invaluable in industries like manufacturing, where precision is paramount. For example, a robot using 3D vision can assemble intricate components with minimal errors, ensuring consistent product quality.
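Depth-based picking rests on the pinhole camera model: given a pixel (u, v) and its measured depth Z, the 3D point in the camera frame is x = (u − cx)·Z / fx and y = (v − cy)·Z / fy. The sketch below assumes an undistorted image and hypothetical intrinsics (fx, fy, cx, cy would come from camera calibration).

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with metric depth into a 3D point (x, y, z) in the camera frame.

    Pinhole model without lens distortion; fx, fy are focal lengths in pixels,
    (cx, cy) is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def project(x, y, z, fx, fy, cx, cy):
    """Inverse operation: project a camera-frame point back onto the image."""
    return fx * x / z + cx, fy * y / z + cy


# Hypothetical 640 x 480 depth camera.
intrinsics = dict(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
point = deproject(380.0, 300.0, 0.8, **intrinsics)
print(point, project(*point, **intrinsics))
```

The round trip through `project` is a quick sanity check that the intrinsics are being applied consistently.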

Other notable technologies include improved sensors and cameras that capture high-resolution images in real time. These innovations, combined with AI and edge computing, create a robust framework for modern robot guidance systems. By leveraging these technologies, you can achieve unparalleled efficiency and adaptability in your operations.

Callout: Staying updated on the latest technologies, like OpenCV and 3D vision, can give your business a competitive edge in automation.

Future Trends and Breakthroughs

The future of robot vision guidance systems looks promising, with several exciting trends and breakthroughs on the horizon. These advancements will redefine how robots interact with their surroundings and perform tasks with greater efficiency.

1. Widespread Adoption of 3D Vision Technology

3D vision technology is becoming a game-changer in the field of machine vision. You will see robots equipped with 3D cameras that can perceive depth and spatial relationships more accurately. This capability allows robots to handle complex tasks like assembling intricate components or navigating cluttered environments. For example, a robot with 3D vision can identify and pick up objects of varying shapes and sizes, even if they are stacked or partially hidden.

Tip: If you're planning to implement robot vision guidance systems, consider investing in 3D vision technology. It offers unmatched precision and adaptability for dynamic environments.

2. AI-Powered Predictive Maintenance

Artificial intelligence is transforming robotic guidance machine vision systems by enabling predictive maintenance. In the future, robots will use machine vision to monitor equipment and detect signs of wear or damage. This proactive approach prevents costly breakdowns and reduces downtime. Imagine a factory where robots analyze visual data to predict when a machine needs servicing. This innovation not only saves money but also ensures smooth operations.
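The core of such a system can be sketched as trend extrapolation. Assume a vision system measures a wear indicator (for example, tool-edge wear width in millimetres) at known operating hours; fitting a least-squares line and extrapolating to a hypothetical replacement threshold estimates the remaining hours. Real wear is often nonlinear, so a straight line is only a first approximation.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need measurements at two or more distinct times")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def hours_until_threshold(hours, wear, threshold):
    """Remaining operating hours before wear reaches `threshold`; None if wear is not increasing."""
    slope, intercept = fit_line(hours, wear)
    if slope <= 0:
        return None
    t_cross = (threshold - intercept) / slope
    return max(0.0, t_cross - hours[-1])


# Hypothetical image-based wear measurements (mm) every 100 operating hours.
hours = [0, 100, 200, 300]
wear = [0.10, 0.15, 0.20, 0.25]
print(hours_until_threshold(hours, wear, threshold=0.40))
```

Scheduling service when the estimate falls below a planning window turns the visual measurements into a maintenance decision before a breakdown occurs.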

3. Integration of Edge AI for Real-Time Processing

Edge AI is set to revolutionize robot vision guidance systems by enabling real-time data processing. Instead of relying on cloud computing, robots will process visual data locally, reducing latency and improving responsiveness. This advancement is particularly useful in industries like healthcare, where split-second decisions can save lives. For instance, surgical robots equipped with edge AI can adjust their movements instantly based on real-time feedback.

4. Enhanced Human-Robot Collaboration

Future breakthroughs will focus on improving human-robot collaboration. Robots will become more intuitive, understanding human gestures and commands through advanced vision systems. This development will make robots more user-friendly and accessible. For example, in a warehouse, a robot could recognize a worker's hand signal to pick up a specific item. This seamless interaction will boost productivity and reduce the learning curve for operators.

5. Sustainable and Energy-Efficient Systems

Sustainability is becoming a priority in the development of robotic guidance machine vision systems. Future robots will feature energy-efficient components and eco-friendly designs. These systems will consume less power while delivering high performance. By adopting sustainable technologies, you can reduce your carbon footprint and contribute to a greener future.

6. Breakthroughs in Autonomous Navigation

Autonomous navigation is another area where significant progress is expected. Robots will use advanced vision systems to navigate complex environments without human intervention. For example, delivery drones will rely on machine vision to identify obstacles and find the most efficient routes. This capability will make autonomous robots more reliable and versatile.
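Route finding can be sketched on an occupancy grid that a vision system has marked with obstacles. The example below uses breadth-first search, which finds a shortest path on a uniform-cost, 4-connected grid; drones and vehicles typically use richer planners (for example, A* or sampling-based methods) in 3D, and the grid here is hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in prev:
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# A wall detected by the vision system blocks the direct route.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(shortest_path(grid, (0, 0), (2, 0)))
```

When the vision system updates the grid with a newly detected obstacle, re-running the search yields a fresh route, which is the basic loop behind the adaptive navigation described above.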

Callout: Staying updated on these trends will help you leverage the full potential of robot vision guidance systems. Embracing these advancements ensures that your operations remain competitive and future-ready.

Robotic guidance machine vision systems redefine automation in 2025. Their ability to enhance precision, efficiency, and adaptability transforms industries like manufacturing, healthcare, and logistics. You can leverage these systems to streamline operations and improve safety.

Tip: Explore resources on AI, edge computing, and 3D vision to understand their potential. Adopting these technologies positions your business for growth and innovation.

Take the first step toward integrating machine vision systems. Their impact will shape the future of industrial and commercial success.

FAQ

1. What industries benefit the most from robotic guidance machine vision systems?

Robotic guidance systems benefit industries like manufacturing, healthcare, logistics, and automotive. They improve precision, efficiency, and safety. For example, they help automate assembly lines, assist in surgeries, and optimize warehouse operations.

2. How do vision-guided robots adapt to changing environments?

Vision-guided robots use real-time data processing and advanced algorithms to adjust their actions. They recognize patterns, track objects, and predict obstacles. This adaptability ensures smooth operations in dynamic settings like warehouses or construction sites.

3. Are machine vision systems expensive to implement?

Initial costs can be high: customizing a system for a production line typically costs $50,000 to $200,000. However, these systems reduce labor expenses, improve accuracy, and can save energy. Many manufacturers expect a return on investment within 18 months before approving a project.

4. Can vision-guided robots work in unstructured environments?

Yes, but they face challenges like irregular objects and changing lighting. Advanced 3D vision systems and AI algorithms improve their performance. Testing in semi-structured environments first can help you scale effectively.

5. What are the latest technologies in machine vision systems?

Technologies like OpenCV (used in Python through its cv2 module), 3D vision cameras, and edge computing enhance performance. These innovations improve object detection, real-time processing, and adaptability, making them ideal for modern automation needs.
