How Transfer Learning Powers Machine Vision Systems in 2025

Transfer learning is revolutionizing how visual systems operate today. You can now build smarter and faster solutions without needing massive datasets or lengthy training processes. By reusing knowledge from pre-trained models, transfer learning enables systems to adapt quickly to new tasks. Across industries, from healthcare to transportation, this approach has become essential for intelligent visual systems. It empowers you to achieve efficiency and accuracy that were once out of reach.

Machine vision systems built on transfer learning are now at the forefront of technological innovation, driving smarter automation and decision-making.

Key Takeaways

- Transfer learning helps you build machine vision systems quickly with less data by reusing knowledge from already-trained models.

- Pre-trained models improve accuracy in tasks like object detection and medical image analysis, even with small datasets.

- Transfer learning lowers computational costs, making smart vision tools accessible to more people and businesses.

- Fine-tuning pre-trained models adapts them to specialized tasks, such as self-driving or facial recognition.

- Watch out for problems like biased data and overfitting to keep your machine vision systems accurate and reliable.

Understanding Transfer Learning

Definition and Overview

Transfer learning is a method in machine learning where knowledge gained from solving one problem is applied to a different but related problem. Instead of starting from scratch, you can use a pre-trained model to accelerate the learning process for a new task. This approach is particularly useful when you have limited data for the target task but access to a large dataset for a related task.

Transfer learning bridges the gap between tasks with abundant data and those with scarce data, making it a powerful tool in modern machine learning.

Researchers have categorized transfer learning into several types based on the relationship between the source and target tasks:

- Inductive transfer: The source and target tasks differ, and some labeled data is available for the target task. For example, you can adapt a pre-trained image model to object detection in a new application.

- Unsupervised transfer: The tasks differ, as in inductive transfer, but no labeled data is available. The target tasks are typically unsupervised ones, such as clustering or dimensionality reduction.

- Transductive transfer: The tasks remain the same, but the data domains differ. Domain adaptation in text classification is a common example.

Transfer learning has found applications in diverse fields, including medicine, transportation, and e-commerce. For instance, in medical imaging, pre-trained convolutional neural networks (CNNs) have been fine-tuned to detect subtle patterns in radiological images, improving diagnostic accuracy for diseases like cancer and pneumonia.

Role in Machine Vision Systems

In machine vision systems, transfer learning plays a critical role by enabling models to perform complex visual tasks with minimal training data. These systems rely on pre-trained models that have already learned to recognize patterns, shapes, and textures from extensive datasets. By fine-tuning these models, you can adapt them to specific tasks such as object detection, facial recognition, or defect inspection.

A study demonstrated the effectiveness of transfer learning by training a CNN model on a small dataset. The model leveraged knowledge from a pre-trained network trained on a large public dataset, achieving remarkable performance improvements. This approach is particularly beneficial in scenarios where collecting large amounts of labeled data is impractical.

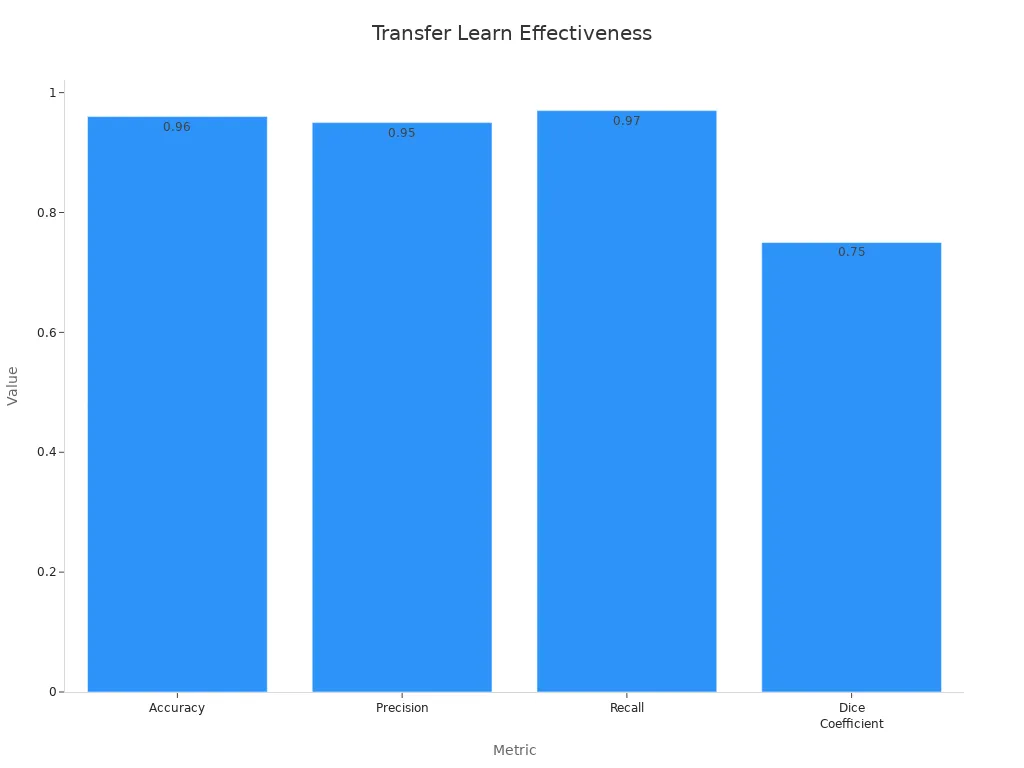

Here’s a table summarizing performance metrics reported for a transfer learning defect-detection setup evaluated on two datasets:

| Metric | Value |
|---|---|
| Accuracy | 0.96 |
| Precision | 0.95 |
| Recall | 0.97 |
| Dice Coefficient | 0.75 |
| Dataset 1 Size | 50,000 images |
| Defects in Dataset 1 | 12,500 images |
| Dataset 2 Size | 1,345 images |
| Defects in Dataset 2 | 400 images |
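The metrics in the table follow standard definitions. The minimal sketch below assumes predictions and ground truth are binary defect masks flattened to lists of 0/1 pixels; the study's actual evaluation protocol is not given. Dice is 2·TP / (2·TP + FP + FN), which on identical counts equals the F1 score. Because a precision of 0.95 and recall of 0.97 would imply an F1 of about 0.96, the table's Dice of 0.75 was presumably measured at a different level (for example, pixel-level segmentation rather than image-level classification).

```python
from typing import Sequence


def confusion_counts(pred: Sequence[int], truth: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (tp, fp, fn, tn) for two equal-length binary sequences."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    return tp, fp, fn, tn


def binary_metrics(pred: Sequence[int], truth: Sequence[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and Dice computed from the same counts."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Dice: overlap between the predicted and true defect regions
        "dice": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0,
    }


# Toy 8-pixel masks (1 = defect pixel)
predicted_mask = [1, 1, 1, 0, 0, 0, 1, 0]
true_mask = [1, 0, 0, 0, 1, 0, 1, 0]
m = binary_metrics(predicted_mask, true_mask)
print(m)
```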

In industrial settings, transfer learning has revolutionized surface defect detection. By combining machine vision with deep learning algorithms, manufacturers have achieved higher prediction accuracy and faster inspection speeds. This integration has also reduced costs, making production lines more efficient.

Transfer learning strategies, such as feature extraction and fine-tuning, allow you to customize pre-trained models for specific machine vision tasks, saving time and computational resources.

The role of transfer learning in computer vision extends to autonomous vehicles as well. Object detection models trained on diverse datasets can quickly adapt to recognize obstacles and pedestrians in new environments. This adaptability ensures safer and more reliable navigation.

Transfer Learning Strategies in Machine Vision

Pre-trained Models

Pre-trained models form the backbone of many transfer learning strategies. These models are trained on large datasets, such as ImageNet, and can recognize a wide variety of patterns and features. By using a pre-trained model, you can save time and computational resources while achieving high accuracy. For example, EfficientNet-B7, a popular pre-trained model, achieves a top-1 accuracy of 84.4% on ImageNet. The EfficientNet family scales network depth, width, and input resolution together: B7, the largest variant, trades extra computation for accuracy, while smaller variants such as B0 are better suited to resource-constrained deployments.

In medical imaging, pre-trained models like VGG16 and ResNet50 have been used to detect kidney diseases. These models, when fine-tuned, demonstrated precision and recall rates close to 99%. This shows how pre-trained models can significantly improve diagnostic accuracy in high-stakes applications.

Using pre-trained models allows you to leverage existing knowledge, reducing the need for extensive training data.

Feature Extraction

Feature extraction is another key strategy in transfer learning. It involves using a pre-trained model to extract meaningful features from images, which can then be fed into a simpler classifier like an SVM. This approach is particularly useful when you have limited data for your target task. For instance, combining ResNet-18 and MobileNet as feature extractors with an SVM classifier achieved an impressive accuracy of 92.87%.

This method is widely used in applications like defect detection in manufacturing. By extracting features such as edges, textures, and shapes, you can train an image recognition model to identify defects with high precision. Feature extraction not only simplifies the training process but also enhances the model's ability to generalize to new data.
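A minimal sketch of the feature-extraction pattern, using plain Python so it runs anywhere. `frozen_backbone` is a hypothetical toy stand-in for a pre-trained network such as ResNet-18: it is never updated and simply returns fixed image statistics. The classifier is a linear SVM trained with the Pegasos stochastic subgradient method, chosen here for brevity rather than taken from the study cited above.

```python
import random


def frozen_backbone(image: list[float]) -> list[float]:
    """Hypothetical stand-in for a frozen, pre-trained CNN.

    A real backbone would return hundreds of learned features; this toy
    version returns mean intensity, contrast, and a constant 1.0 bias term.
    """
    mean = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [mean, contrast, 1.0]


def train_linear_svm(features, labels, lam=0.01, epochs=500, seed=0):
    """Pegasos-style stochastic subgradient descent on the SVM hinge loss.

    Labels must be -1 or +1. Returns the learned weight vector.
    """
    rng = random.Random(seed)
    w = [0.0] * len(features[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(features)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            x, y = features[i], labels[i]
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            # Regularization shrinks w; a margin violation also pulls w toward y*x
            w = [(1 - eta * lam) * wj for wj in w]
            if margin < 1:
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Toy flattened images: defective parts (+1) show high contrast, clean ones (-1) do not.
clean = [[0.50, 0.52, 0.49, 0.51], [0.40, 0.41, 0.39, 0.40]]
defect = [[0.10, 0.90, 0.50, 0.50], [0.20, 0.95, 0.30, 0.60]]
X = [frozen_backbone(img) for img in clean + defect]  # features computed once
y = [-1, -1, 1, 1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])
```

Because the backbone is frozen, its features can be computed once and cached, so only the small classifier is ever trained.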

Fine-Tuning Techniques

Fine-tuning takes transfer learning a step further by adapting a pre-trained model to your specific task. This involves retraining some or all layers of the model on your dataset. Fine-tuning is especially effective for tasks with unique requirements or out-of-distribution data. For example, fine-tuning Vision-Language Models (VLMs) has been shown to improve prediction accuracy and inference reliability.
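The difference between training only a new head and retraining deeper layers can be sketched with a tiny two-layer linear model in plain Python instead of a real deep network. `W1` stands in for hypothetical pre-trained backbone weights and `w2` for a new task head. The sketch first trains the head with the backbone frozen, then unfreezes the backbone with a smaller learning rate, a common but not universal fine-tuning schedule.

```python
def forward(W1, w2, x):
    """Two-layer linear model: hidden = W1 @ x, output = w2 . hidden."""
    hidden = [sum(wij * xj for wij, xj in zip(row, x)) for row in W1]
    return sum(a * h for a, h in zip(w2, hidden)), hidden


def mse(W1, w2, data):
    return sum((forward(W1, w2, x)[0] - t) ** 2 for x, t in data) / len(data)


def fine_tune(W1, w2, data, lr_head, lr_backbone, epochs):
    """Full-batch gradient descent on mean squared error.

    lr_backbone=0.0 freezes the backbone, so only the head is updated.
    """
    W1 = [row[:] for row in W1]
    w2 = w2[:]
    n = len(data)
    for _ in range(epochs):
        g1 = [[0.0] * len(W1[0]) for _ in W1]
        g2 = [0.0] * len(w2)
        for x, t in data:
            y, hidden = forward(W1, w2, x)
            err = 2 * (y - t) / n  # d(MSE)/dy for this sample
            for i, h in enumerate(hidden):
                g2[i] += err * h
                for j, xj in enumerate(x):
                    g1[i][j] += err * w2[i] * xj
        w2 = [a - lr_head * g for a, g in zip(w2, g2)]
        if lr_backbone > 0.0:
            W1 = [[w - lr_backbone * g for w, g in zip(row, grow)]
                  for row, grow in zip(W1, g1)]
    return W1, w2


pretrained_W1 = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical "pre-trained" weights
new_head = [0.0, 0.0]
data = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]

# Stage 1: head only (backbone frozen)
W1_a, head_a = fine_tune(pretrained_W1, new_head, data, lr_head=0.1, lr_backbone=0.0, epochs=200)
# Stage 2: unfreeze the backbone with a 10x smaller learning rate
W1_b, head_b = fine_tune(W1_a, head_a, data, lr_head=0.1, lr_backbone=0.01, epochs=200)
print(mse(pretrained_W1, new_head, data), mse(W1_a, head_a, data))
```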

However, fine-tuning requires careful tuning. While it enhances performance, it can sometimes compromise the trustworthiness of predictions, for example by leaving the model's confidence scores out of line with its actual accuracy, which matters most in high-stakes scenarios. Despite this, fine-tuning remains a powerful tool for customizing models to meet specific needs, whether in autonomous vehicles, medical diagnostics, or other fields.
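One common way to check whether fine-tuning has hurt the trustworthiness of confidence scores is the expected calibration error (ECE). Predictions are grouped into confidence bins, and the gap between average confidence and accuracy in each bin is averaged, weighted by bin size. A minimal sketch with equal-width bins; the choice of 10 bins is an arbitrary assumption.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width confidence bins over [0, 1].

    confidences: predicted probability of the chosen class.
    correct: 1 if the prediction was right, else 0.
    """
    if len(confidences) != len(correct):
        raise ValueError("inputs must have the same length")
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece


# Overconfident: claims 90% but is right half the time
overconfident = expected_calibration_error([0.9] * 4, [1, 1, 0, 0])
# Calibrated: claims 75% and is right 3 times out of 4
calibrated = expected_calibration_error([0.75] * 4, [1, 1, 1, 0])
print(overconfident, calibrated)
```

Comparing ECE on held-out data before and after fine-tuning gives a simple signal of whether confidence scores can still be trusted.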

Fine-tuning allows you to tailor a pre-trained model to your unique requirements, unlocking its full potential.

Benefits of Transfer Learning for Machine Vision Systems

Reduced Computational Costs

Transfer learning reduces the computational burden of training machine vision systems. Instead of training a model from scratch, you can use a pre-trained model that has already learned to recognize patterns and features. This approach saves you time and resources. For example, training a deep neural network on a large dataset like ImageNet requires significant computational power. By reusing a pre-trained model, you bypass this step and focus only on fine-tuning it for your specific task. This efficiency makes transfer learning ideal for applications with limited hardware or energy constraints.
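Some rough arithmetic shows the scale of the savings. This sketch assumes a ResNet-50 backbone (torchvision's ResNet-50 has about 25.6 million parameters, with a 2,048-dimensional pooled feature vector feeding its 1,000-class ImageNet head) and a hypothetical 10-class task. When the backbone is frozen, only a new linear head is trained.

```python
def linear_head_params(feature_dim: int, num_classes: int) -> int:
    """Weights plus biases of one fully connected classification layer."""
    return feature_dim * num_classes + num_classes


def trainable_params(backbone: int, head: int, freeze_backbone: bool) -> int:
    """Parameters that receive gradient updates under each strategy."""
    return head if freeze_backbone else backbone + head


# Assumption: torchvision's ResNet-50 has 25,557,032 parameters, of which
# 2,049,000 belong to its 1,000-class ImageNet head (2048 * 1000 + 1000).
RESNET50_BACKBONE = 25_557_032 - 2_049_000

head = linear_head_params(feature_dim=2048, num_classes=10)
frozen = trainable_params(RESNET50_BACKBONE, head, freeze_backbone=True)
full = trainable_params(RESNET50_BACKBONE, head, freeze_backbone=False)
print(f"Head only: {frozen:,} trainable parameters")
print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"Ratio: {frozen / full:.3%}")
```

Freezing also avoids computing gradients for the backbone, although the forward pass through the backbone still costs the same.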

Using transfer learning, you can achieve high performance without the need for expensive infrastructure, making it accessible to a wider range of users.

Enhanced Accuracy

Transfer learning improves the accuracy of machine vision systems by leveraging knowledge from large, diverse datasets. Pre-trained models like ResNet have demonstrated significant improvements in tasks such as image classification and object detection. Research shows that even small subsets of datasets like ImageNet are sufficient to train models that generalize well. This challenges the traditional belief that massive datasets are always necessary. By using transfer learning, you can achieve precise results even with limited data, which is especially valuable in fields like medical imaging and autonomous vehicles.

Key benefits of transfer learning for accuracy:

- Enhanced performance in image recognition tasks.

- Better generalization with smaller datasets.

- Improved reliability in high-stakes applications.

Faster Deployment

Transfer learning accelerates the deployment of machine vision systems. Since pre-trained models already possess foundational knowledge, you only need to fine-tune them for your specific use case. This drastically reduces the time required to develop and deploy a functional system. For instance, in manufacturing, transfer learning enables you to quickly adapt defect detection models to new production lines. Faster deployment means you can respond to changing needs and market demands more effectively.

With transfer learning, you can bring your machine vision solutions to life in record time, staying ahead in competitive industries.

Applications of Transfer Learning in Machine Vision (2025)

Object Detection and Recognition

Object detection and recognition have seen remarkable advancements with transfer learning. By leveraging pre-trained models, you can identify objects in images or videos with high accuracy, even when working with limited data. This approach is particularly valuable in industries like retail, where automated inventory management relies on detecting and classifying products in real time.

Transfer learning enables models to reuse knowledge from large datasets, such as ImageNet, to excel in new tasks, and it combines well with generated (synthetic) training data. In one reported experiment, combining 300 real images with 9,000 generated images achieved performance comparable to using 4,500 real images, and a mix of 300 real images and 750 filtered generated images even outperformed using 1,500 real images. These results suggest that pre-trained models paired with generated data can reduce the dependency on extensive real-world datasets while maintaining accuracy.
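The source does not say how the generated images were filtered. A minimal sketch of assembling such a mixed training set, assuming each generated image carries a hypothetical quality score (for example, the confidence of a classifier trained on the real images) and that filtering keeps the highest-scoring images above a threshold, up to a fixed budget:

```python
from dataclasses import dataclass


@dataclass
class Sample:
    path: str
    synthetic: bool
    quality: float = 1.0  # hypothetical filter score; real images default to 1.0


def build_training_set(real, generated, max_generated, min_quality):
    """Keep every real sample plus the best-scoring generated samples.

    Generated samples below min_quality are discarded; of the rest, at most
    max_generated are kept, highest quality first.
    """
    candidates = [s for s in generated if s.quality >= min_quality]
    candidates.sort(key=lambda s: s.quality, reverse=True)
    return list(real) + candidates[:max_generated]


# Hypothetical pools matching the counts above: 300 real, 9,000 generated.
real = [Sample(f"real_{i}.png", synthetic=False) for i in range(300)]
generated = [
    Sample(f"gen_{i}.png", synthetic=True, quality=(i % 100) / 100)
    for i in range(9000)
]
train = build_training_set(real, generated, max_generated=750, min_quality=0.5)
print(len(train), sum(s.synthetic for s in train))
```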

In autonomous vehicles, object detection models powered by transfer learning play a critical role. They identify road users, traffic signs, and obstacles, ensuring safer navigation. This capability has become a cornerstone of modern transportation systems, where safety and efficiency are paramount.

Transfer learning applications in object detection demonstrate how you can achieve high performance with fewer resources, making it a game-changer for industries requiring visual intelligence.

Facial Recognition Systems

Facial recognition systems have become more reliable and efficient thanks to transfer learning. By fine-tuning pre-trained models, you can adapt them to specific datasets, improving their ability to recognize faces in diverse environments. This is especially useful in security and authentication systems, where accuracy is crucial.

Recent studies report significant performance improvements in facial recognition systems using transfer learning. For instance, PETALface achieved a Rank-1 accuracy of 75.72%, compared with 67.42% for BRIAR, and the reported performance gain from transfer learning exceeded 12% on each metric, as shown below:

| Model | Rank-1 Accuracy | Rank-5 Accuracy | Rank-10 Accuracy |
|---|---|---|---|
| PETALface | 75.72% | 78.86% | 81.70% |
| BRIAR | 67.42% | 80.87% | 79.44% |
| Performance Gain | +12.11% | +13.45% | +12.44% |

These improvements make transfer learning a key tool for facial recognition systems in 2025. Whether you're developing a secure login system or enhancing surveillance capabilities, transfer learning helps your models perform reliably.
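Rank-k accuracy measures how often the correct identity appears among the k most similar gallery entries for each probe face. By definition, it can only stay the same or increase as k grows. A minimal sketch, assuming similarity scores (for example, cosine similarities between face embeddings) have already been computed; the names and scores below are made up for illustration.

```python
def rank_k_accuracy(scores, true_ids, gallery_ids, k):
    """Fraction of probes whose true identity is among the top-k gallery matches.

    scores[p][g] is the similarity between probe p and gallery entry g.
    """
    hits = 0
    for probe_scores, true_id in zip(scores, true_ids):
        ranked = sorted(range(len(gallery_ids)),
                        key=lambda g: probe_scores[g], reverse=True)
        top_ids = {gallery_ids[g] for g in ranked[:k]}
        hits += true_id in top_ids
    return hits / len(true_ids)


gallery_ids = ["alice", "bob", "carol"]
scores = [
    [0.9, 0.2, 0.1],  # probe of alice: correct match ranked 1st
    [0.6, 0.5, 0.1],  # probe of bob: correct match ranked 2nd
    [0.7, 0.6, 0.3],  # probe of carol: correct match ranked 3rd
]
true_ids = ["alice", "bob", "carol"]
for k in (1, 2, 3):
    print(f"Rank-{k}: {rank_k_accuracy(scores, true_ids, gallery_ids, k):.2%}")
```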

Autonomous Vehicles

Autonomous vehicles rely heavily on transfer learning to navigate complex environments. Pre-trained models adapted through fine-tuning help these vehicles detect pedestrians, road signs, and other obstacles with precision. This adaptability ensures that vehicles can operate safely in diverse conditions, from urban streets to rural highways.

Transfer learning use cases in autonomous vehicles extend beyond object detection. Models trained on diverse datasets can predict traffic patterns, recognize lane markings, and even anticipate driver behavior. This holistic approach enhances both safety and efficiency. Transfer learning also reduces training time and resource consumption, allowing you to deploy advanced systems faster.

Transfer learning in autonomous vehicles exemplifies how machine learning can transform transportation, making it safer and more efficient for everyone.

Medical Imaging

Medical imaging has undergone a significant transformation with the integration of transfer learning. This technology allows you to analyze complex medical data with greater precision and efficiency. By leveraging pre-trained models, you can detect diseases, identify abnormalities, and even predict health outcomes with minimal training data.

Tip: Transfer learning helps you save time and resources by reusing knowledge from large datasets like ImageNet or specialized medical datasets.

How Transfer Learning Enhances Medical Imaging

- Improved Diagnostic Accuracy: Transfer learning enables you to fine-tune pre-trained models for specific medical tasks. For example, models trained on general image datasets can adapt to detect tumors in MRI scans. This approach improves diagnostic accuracy, even when working with small datasets.

- Faster Model Training: Training a model from scratch requires extensive computational resources and time. With transfer learning, you can skip the initial training phase and focus on fine-tuning. This allows you to deploy diagnostic tools more quickly.

- Detection of Rare Diseases: Rare diseases often lack sufficient data for traditional machine learning methods. Transfer learning bridges this gap by using knowledge from related tasks. For instance, a model trained on lung X-rays can adapt to identify rare pulmonary conditions.

Real-World Applications in 2025

Transfer learning has already made a significant impact in medical imaging. Here are some examples of how it is being used:

- Cancer Detection: Pre-trained convolutional neural networks (CNNs) help you identify cancerous cells in histopathology images. These models achieve high accuracy, even with limited labeled data.

- Cardiovascular Imaging: Transfer learning improves the detection of heart conditions in echocardiograms. Fine-tuned models can identify structural and functional abnormalities, such as valve defects or impaired ventricular function.

- Brain Imaging: Models adapted through transfer learning assist in diagnosing neurological disorders. They can detect early signs of Alzheimer's or Parkinson's disease in MRI scans.

Performance Metrics in Medical Imaging

The table below highlights the performance of transfer learning models in various medical imaging tasks:

| Task | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Tumor Detection | 95% | 94% | 96% | 95% |
| Heart Condition Analysis | 92% | 91% | 93% | 92% |
| Neurological Disorder Diagnosis | 90% | 89% | 91% | 90% |

These metrics demonstrate how transfer learning enhances the reliability of medical imaging systems.
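The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). A quick check confirms that the F1 column in the table is consistent with its precision and recall columns after rounding to whole percentages:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) from the table, as fractions
rows = {
    "Tumor Detection": (0.94, 0.96, 0.95),
    "Heart Condition Analysis": (0.91, 0.93, 0.92),
    "Neurological Disorder Diagnosis": (0.89, 0.91, 0.90),
}
for task, (p, r, reported) in rows.items():
    print(f"{task}: computed F1 = {f1_score(p, r):.3f}, reported = {reported:.2f}")
```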

Note: Always validate your models on diverse datasets to ensure they generalize well across different patient populations.

The Future of Medical Imaging with Transfer Learning

In the coming years, you can expect even more advancements in medical imaging. Researchers are exploring zero-shot learning, which allows models to recognize conditions for which they have seen no labeled training examples. This could transform how you diagnose rare diseases. Additionally, domain adaptation techniques aim to let models work across different imaging modalities, such as X-rays and CT scans.

Transfer learning empowers you to create smarter, faster, and more accurate medical imaging systems. By adopting this technology, you can improve patient outcomes and make healthcare more accessible.

Future Trends in Transfer Learning for Machine Vision

Zero-Shot Learning

Zero-shot learning (ZSL) is transforming how machine vision systems handle unseen tasks. With ZSL, a model trained on some classes can recognize objects or patterns from classes it never saw during training. Instead of relying on labeled examples of those new classes, the model uses semantic information, such as textual descriptions or attributes, to make predictions. This approach is especially useful in scenarios where collecting labeled data is impractical.

For example, imagine a wildlife monitoring system. You can train it to identify common animals using labeled images. With ZSL, the system can also recognize rare species by understanding their descriptions, even without prior visual examples. This capability reduces the dependency on large datasets and expands the potential applications of machine vision.

Tip: Zero-shot learning allows you to tackle new challenges without retraining your models, saving time and resources.
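The wildlife example can be sketched as attribute-based zero-shot classification. This assumes a model already predicts attribute scores from an image; the `predicted` vector below is a made-up stand-in for that output. Each class, including the unseen one, is described by a hand-written attribute vector, and the class whose description is most similar (by cosine similarity) to the predicted attributes wins. The species and their attributes are hypothetical illustrations.

```python
import math

ATTRIBUTES = ["striped", "spotted", "long_neck", "hooves"]

# Class descriptions over ATTRIBUTES; "okapi" plays the rare species with no training images.
CLASS_ATTRIBUTES = {
    "zebra": [1.0, 0.0, 0.0, 1.0],
    "giraffe": [0.0, 1.0, 1.0, 1.0],
    "leopard": [0.0, 1.0, 0.0, 0.0],
    "okapi": [1.0, 0.0, 1.0, 1.0],  # unseen class, described only by attributes
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_predict(predicted_attributes, class_attributes):
    """Pick the class whose attribute description best matches the prediction."""
    return max(class_attributes,
               key=lambda c: cosine(predicted_attributes, class_attributes[c]))


predicted = [0.8, 0.1, 0.7, 0.9]  # hypothetical attribute-predictor output
print(zero_shot_predict(predicted, CLASS_ATTRIBUTES))
```

Adding a new species only requires a new attribute description, not new labeled images or retraining.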

Domain Adaptation

Domain adaptation helps you apply a model trained on one dataset to a different but related dataset. This technique is crucial when the source and target datasets differ in features or distributions. For instance, a model trained on urban traffic images can adapt to rural environments using domain adaptation.

A recent analysis of transfer learning on clinical ICU data highlights both the benefits and challenges of this approach:

| Aspect | Findings |
|---|---|
| Benefits | Transfer learning (TL) models outperformed baseline models in primary performance metrics (balanced accuracy, MAE). |
| Challenges | Class imbalance affected model performance; baseline models showed higher precision in some cases. |
| Limitations | Study could not include all features; only two baseline models were investigated. |
| Model Performance | Lasso models often yielded better results than TL models in regression tasks (MSE). |
| Data Constraints | Limited to common features in both datasets; could not investigate all major ICU outcomes. |
| Advanced Models | More advanced ML models were not used, which could have impacted results. |

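This table shows that while transfer learning improved the primary metrics, it did not win everywhere: simpler Lasso models did better on some regression tasks. To achieve optimal results, you must also address challenges like class imbalance and limited shared features.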
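A simplified sketch of one classic idea behind domain adaptation is aligning feature statistics between domains. It assumes features from a frozen backbone are available for both domains, and it rescales each target-domain feature so its mean and standard deviation match the source domain. A classifier trained on source features can then be applied to the aligned target features. This is a per-feature simplification in the spirit of CORAL, which aligns full covariance matrices; it is not the method used in the study above, and the feature values are made up.

```python
import statistics


def feature_stats(rows):
    """Per-column mean and population standard deviation."""
    cols = list(zip(*rows))
    return [statistics.fmean(c) for c in cols], [statistics.pstdev(c) for c in cols]


def align_to_source(target_rows, source_rows, eps=1e-8):
    """Rescale each target feature so its mean and std match the source domain."""
    src_mean, src_std = feature_stats(source_rows)
    tgt_mean, tgt_std = feature_stats(target_rows)
    return [
        [(x - tm) / (ts + eps) * ss + sm
         for x, tm, ts, sm, ss in zip(row, tgt_mean, tgt_std, src_mean, src_std)]
        for row in target_rows
    ]


# Hypothetical two-feature embeddings: urban (source) vs rural (target) scenes
urban = [[0.2, 5.0], [0.4, 7.0], [0.6, 9.0]]
rural = [[0.5, 1.0], [0.7, 2.0], [0.9, 3.0]]
aligned = align_to_source(rural, urban)
print(aligned)
```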

Cross-Modal Transfer Learning

Cross-modal transfer learning enables you to transfer knowledge between different data modalities, such as images and text. This approach is gaining traction in machine vision, where combining visual and textual data enhances model performance. For example, you can train a model to generate captions for images by leveraging both visual features and language models.

Recent experiments demonstrate the effectiveness of cross-modal techniques:

| Model | Average Accuracy (16 shots) | Performance on FGVCAircraft |
|---|---|---|
| XMAdapter | 76.87% | Best among six methods |
| GraphAdapter | 76.22% | N/A |

XMAdapter achieved the higher average accuracy of the two adapters listed and the best FGVCAircraft result among the six methods compared, showcasing the potential of cross-modal transfer learning in complex tasks. By integrating multiple data types, you can create more versatile and intelligent machine vision systems.

Cross-modal transfer learning bridges the gap between different data types, unlocking new possibilities for innovation.

Challenges in Transfer Learning for Machine Vision Systems

Data Bias

Data bias poses a significant challenge in transfer learning. When you train a model on a dataset that lacks diversity, the model inherits those biases. For example, if a facial recognition system is trained on images of one demographic, it may struggle to identify faces from other groups. This can lead to unfair or inaccurate results.

To address this, you should ensure your training data represents all relevant groups and scenarios. Augmenting datasets with synthetic data or using domain adaptation techniques can help reduce bias. However, you must remain vigilant, as even small biases can impact the performance of machine vision systems.

Tip: Always evaluate your model on diverse datasets to identify and mitigate potential biases.
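One way to act on this tip is to break evaluation metrics down by subgroup instead of reporting a single overall number, which can hide a large gap. A minimal sketch, assuming each evaluation record carries a hypothetical `group` label (for example, a demographic attribute or capture condition):

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, pred, truth in records:
        totals[group] += 1
        hits[group] += pred == truth
    return {g: hits[g] / totals[g] for g in totals}


def max_accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


records = [
    ("A", "x", "x"), ("A", "y", "y"), ("A", "x", "x"), ("A", "y", "x"),
    ("B", "x", "y"), ("B", "y", "y"),
]
per_group = accuracy_by_group(records)
print(per_group, max_accuracy_gap(per_group))
```

Here overall accuracy is 4/6, but group B is served much worse than group A, which only the per-group view reveals.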

Overfitting

Overfitting occurs when your model performs well on the training data but fails to generalize to new data. This happens when the model learns noise or irrelevant details instead of focusing on the underlying patterns. In transfer learning, overfitting is more likely when you fine-tune a pre-trained model on a small dataset.

You can prevent overfitting by using techniques like dropout, data augmentation, and early stopping. Regularization methods also help by penalizing overly complex models. By carefully monitoring your model's performance on validation data, you can strike the right balance between training and generalization.
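Early stopping, one of the techniques above, can be sketched as a small helper that watches validation loss and signals a stop after `patience` epochs without improvement. The class name and its `patience` and `min_delta` parameters are illustrative, not a specific library's API, and the loss values are made up.

```python
class EarlyStopping:
    """Stop training when validation loss stops improving."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # in practice, also checkpoint the weights here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch}")
```

Restoring the weights saved at the best epoch, rather than keeping the last ones, is what turns early stopping into a guard against overfitting.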

Generalization Limitations

Generalization is the ability of a model to perform well on unseen data. Transfer learning models sometimes struggle to generalize when the source and target tasks differ significantly. For instance, a model trained on urban traffic images may not perform well in rural settings.

To improve generalization, you should use domain adaptation or fine-tuning strategies. These methods help align the source and target domains, making the model more adaptable. Additionally, testing your model in real-world scenarios ensures it meets the required performance standards.

Note: Generalization is key to building reliable machine vision systems. Always test your models in diverse environments to ensure robustness.

Transfer learning has become a cornerstone of innovation in machine vision systems by 2025. You can use it to reduce costs, improve accuracy, and deploy solutions faster. Its ability to adapt pre-trained models to new tasks makes it indispensable across industries. Whether you work in healthcare, transportation, or manufacturing, transfer learning machine vision systems offer smarter and more efficient ways to solve visual challenges. Stay informed about emerging trends and address challenges like data bias to maximize its potential in your field.

FAQ

What is transfer learning in simple terms?

Transfer learning lets you use knowledge from one task to solve another related task. Instead of training a model from scratch, you adapt a pre-trained model to your specific needs. This saves time and resources.

How does transfer learning improve machine vision systems?

It boosts accuracy and reduces training time. Pre-trained models already understand patterns and features, so you only need to fine-tune them for your task. This makes machine vision systems smarter and faster.

Can transfer learning work with small datasets?

Yes! Transfer learning excels with limited data. Pre-trained models provide a strong foundation, allowing you to achieve high performance even when your dataset is small or incomplete.

What industries benefit most from transfer learning in 2025?

Industries like healthcare, transportation, and manufacturing benefit greatly. For example, medical imaging uses transfer learning to detect diseases, while autonomous vehicles rely on it for obstacle detection.

Is transfer learning expensive to implement?

Usually not; it typically reduces costs. By reusing pre-trained models, you avoid much of the expensive infrastructure and lengthy training that building a model from scratch requires. This makes it accessible for businesses of all sizes.
